[Re-landing 68111] Add JIT graph fuser for oneDNN Graph API (Preview4.1) #74572

💊 CI failures summary and remediations

As of commit c02ece3 (more details on the Dr. CI page):

4 failures not recognized by patterns.

This comment was automatically generated by Dr. CI. Please report bugs/suggestions to the (internal) Dr. CI Users group.

@sanchitintel to make review easier, can you simply cherry-pick the landed commit into the branch and then apply any other changes on top of that?

Sorry @malfet, please clarify which landed commit you're referring to.

This one: cd17683

Summary:

## Description

Preview4 PR of this [RFC](pytorch#49444).

On the basis of pytorch#50256, the below improvements are included:

- The [preview4 release branch](https://github.com/oneapi-src/oneDNN/releases/tag/graph-v0.4.1) of the oneDNN Graph API is used
- The fuser now works with the profiling graph executor. We have inserted type check nodes to guard the profiled tensor properties.

### User API:

The optimization pass is disabled by default. Users could enable it by:

```
torch.jit.enable_onednn_fusion(True)
```

### Performance:

[pytorch/benchmark](https://github.com/pytorch/benchmark) tool is used to compare the performance:

- SkyLake 8180 (1 socket of 28 cores):
- SkyLake 8180 (single thread):

\* By mapping hardswish to oneDNN Graph, it’s 8% faster than PyTorch JIT (NNC + OFI)

\** We expect performance gain after mapping transpose, contiguous & view to oneDNN graph ops

### Directory structure of the integration code

Fuser-related code are placed under:

```
torch/csrc/jit/codegen/onednn/
```

Optimization pass registration is done in:

```
torch/csrc/jit/passes/onednn_graph_fuser.h
```

CMake for the integration code is:

```
caffe2/CMakeLists.txt
```

## Limitations

- In this PR, we have only supported the optimization on Linux platform. The support on Windows and MacOS will be enabled as the next step.
- We have only optimized the inference use case.

Pull Request resolved: pytorch#68111

Reviewed By: eellison

Differential Revision: D34584878

Pulled By: malfet

fbshipit-source-id: ce817aa8cc9052ee9ed930c9cf66be83449e61a4

7b7dbfc to bc4739a

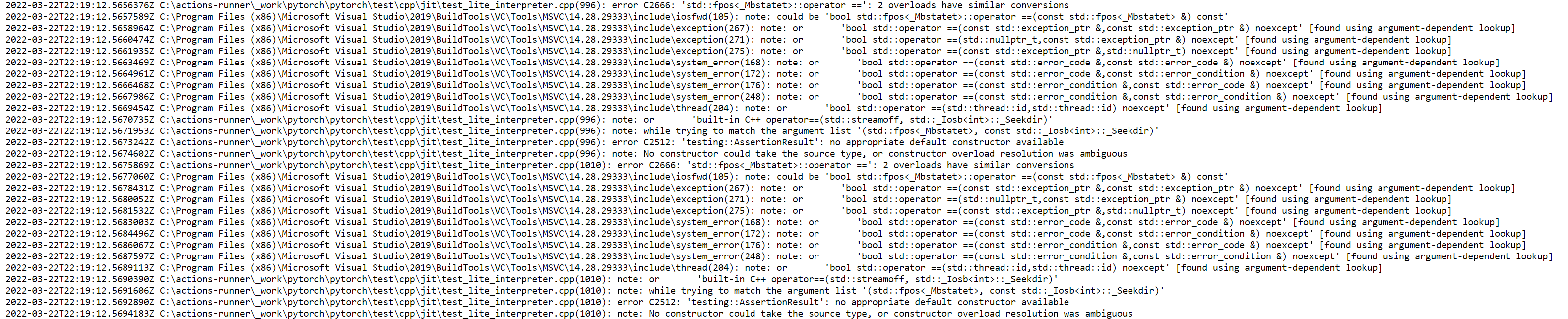

Windows build failed while compiling a lite interpreter file. Will rebase later to check whether the issue has been fixed.

Closing & reopening as #74596. Thanks!

Description

Relanding #68111

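Preview4 PR of this RFC (#49444).

On the basis of #50256, the following improvements are included:

- The preview4 release branch (graph-v0.4.1) of the oneDNN Graph API is used.
- The fuser now works with the profiling graph executor. Type check nodes have been inserted to guard the profiled tensor properties.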

User API:

The optimization pass is disabled by default. Users can enable it by calling `torch.jit.enable_onednn_fusion(True)`.
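As a rough sketch of how that toggle might be used in an inference script, the snippet below enables the fuser, then traces, freezes, and warms up a small model so the profiling graph executor can record tensor properties. The `TinyNet` module, the input shape, and the two warm-up runs are illustrative assumptions rather than part of this PR, and the guards at the top only keep the sketch runnable where PyTorch or an MKLDNN-enabled build is unavailable.

```python
import importlib.util


def run_with_onednn_fusion():
    """Enable oneDNN Graph fusion and run a tiny traced model (illustrative only)."""
    if importlib.util.find_spec("torch") is None:
        return None  # PyTorch is not installed; nothing to demonstrate

    import torch

    if not hasattr(torch.jit, "enable_onednn_fusion"):
        return None  # this PyTorch build does not expose the toggle
    if not torch.backends.mkldnn.is_available():
        return None  # assumption: the fuser needs an MKLDNN-enabled build

    # The pass is disabled by default, so turn it on explicitly.
    torch.jit.enable_onednn_fusion(True)

    class TinyNet(torch.nn.Module):  # hypothetical model, not from the PR
        def __init__(self):
            super().__init__()
            self.conv = torch.nn.Conv2d(3, 8, kernel_size=3)

        def forward(self, x):
            return torch.relu(self.conv(x))

    model = TinyNet().eval()  # only the inference use case is targeted
    example = torch.rand(1, 3, 16, 16)

    try:
        with torch.no_grad():
            traced = torch.jit.trace(model, example)
            traced = torch.jit.freeze(traced)
            # Warm-up runs let the profiling executor record tensor properties.
            traced(example)
            traced(example)
            out = traced(example)
    finally:
        # Restore the default so other code in the process is unaffected.
        torch.jit.enable_onednn_fusion(False)

    return tuple(out.shape)


result = run_with_onednn_fusion()
```

Whether any ops are actually fused depends on the build and platform, so this sketch only checks that the model still runs with the pass enabled.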

Performance:

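The pytorch/benchmark tool is used to compare performance on SkyLake 8180 (1 socket of 28 cores) and SkyLake 8180 (single thread).

\* By mapping hardswish to oneDNN Graph, it's 8% faster than PyTorch JIT (NNC + OFI)

\** We expect performance gain after mapping transpose, contiguous & view to oneDNN graph ops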

Directory structure of the integration code

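Fuser-related code is placed under `torch/csrc/jit/codegen/onednn/`.

Optimization pass registration is done in `torch/csrc/jit/passes/onednn_graph_fuser.h`.

CMake for the integration code is in `caffe2/CMakeLists.txt`.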

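Limitations

- In this PR, the optimization is supported only on Linux. Support for Windows and macOS will be enabled as the next step.
- Only the inference use case has been optimized.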