-

Notifications

You must be signed in to change notification settings - Fork 25.6k

[ONNX] Use decorators for symbolic function registration #84448

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

[ghstack-poisoned]

This comment was marked as outdated.

This comment was marked as outdated.

[ghstack-poisoned]

[ghstack-poisoned]

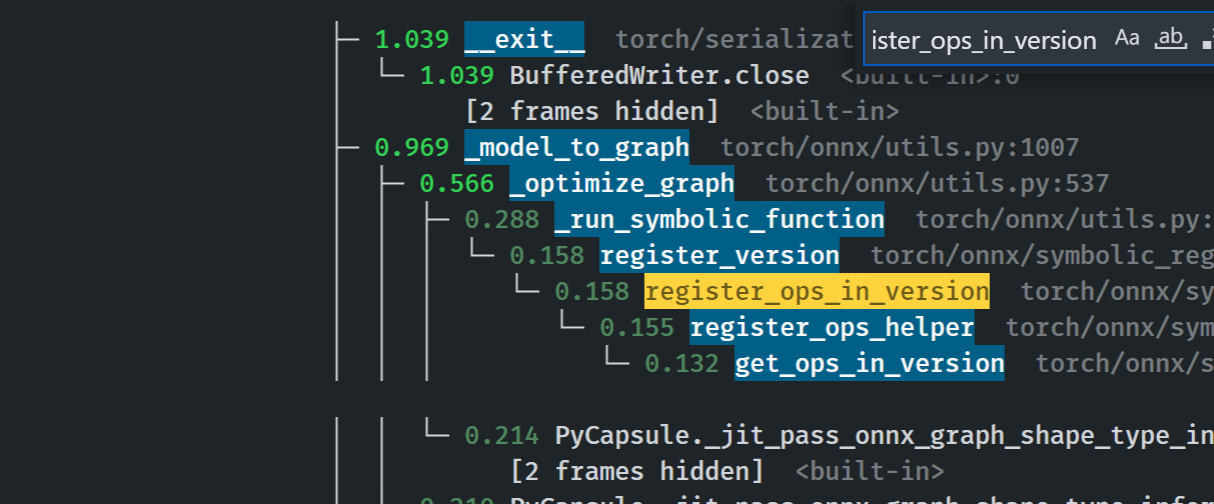

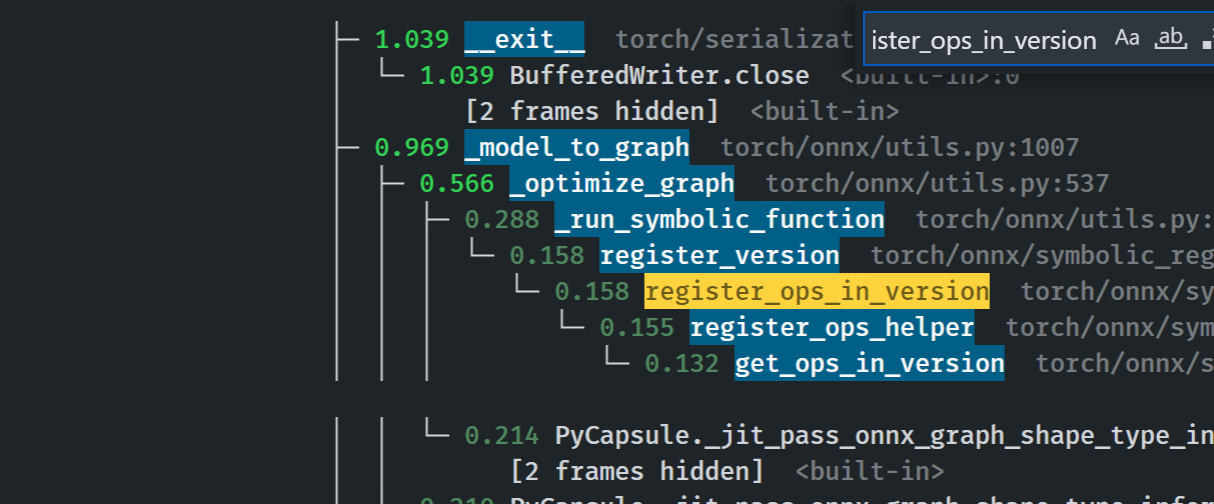

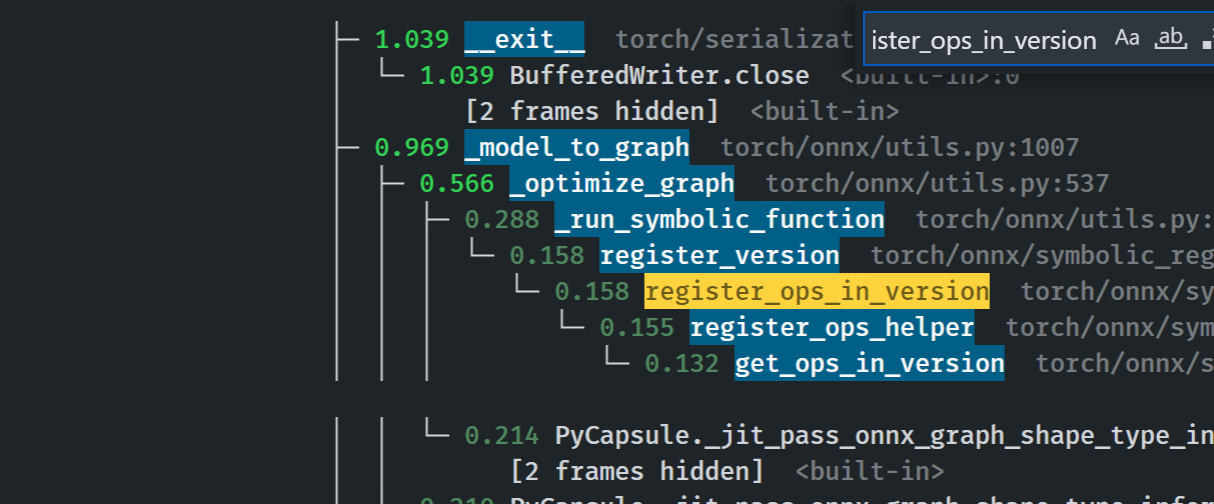

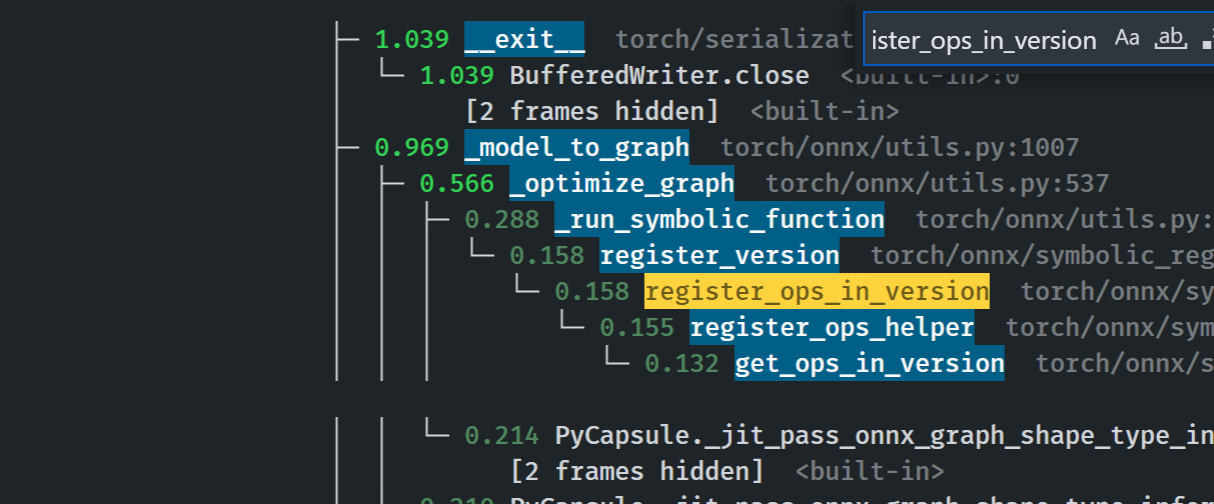

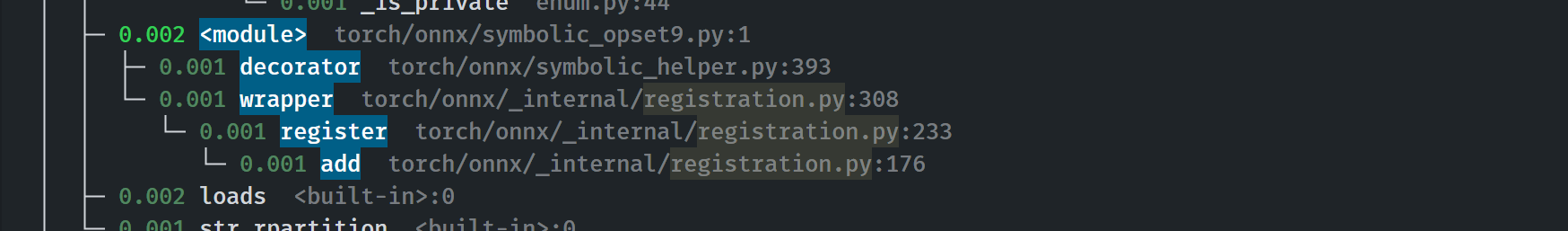

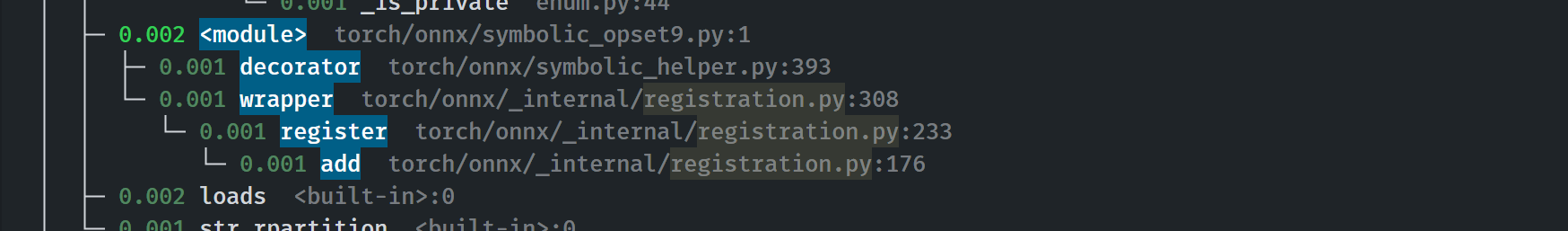

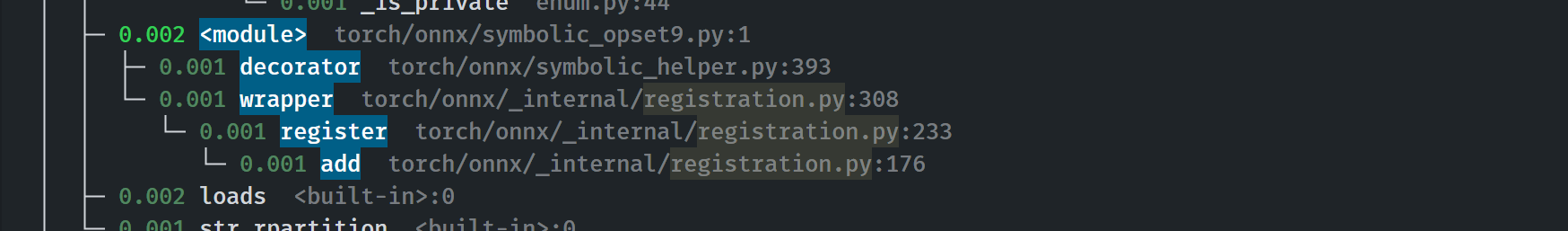

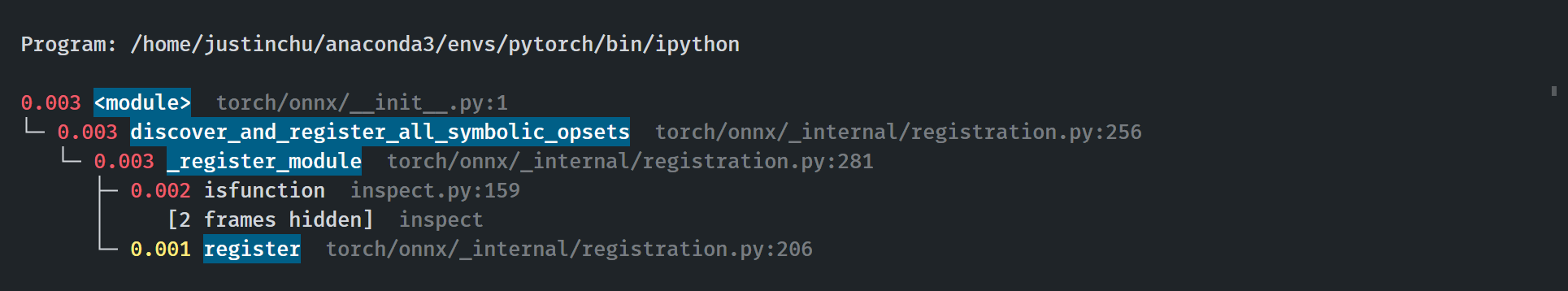

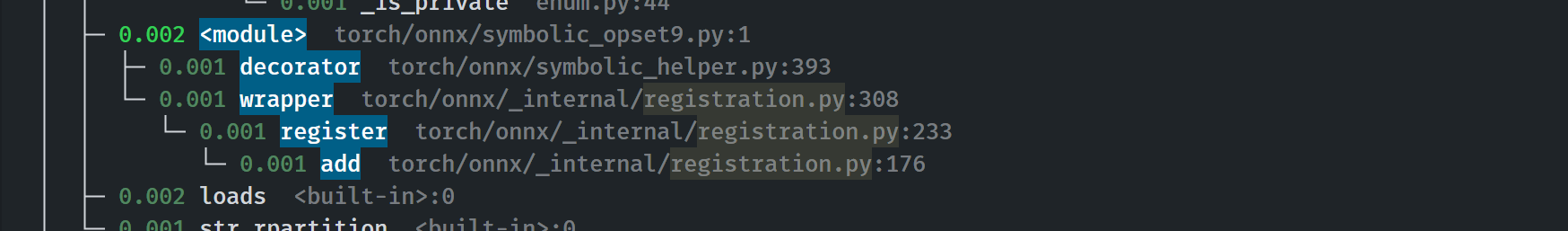

## Summary The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`. The new registry - Has faster lookup by storing only functions in the opset version they are defined in - Is easier to manage and interact with due to its class design - Builds the foundation for the more flexible registration process detailed in #83787 Implementation changes - **Breaking**: Remove `torch.onnx.symbolic_registry` - `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility - Update _onnx_supported_ops.py for doc generation to include quantized ops. - Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp` ## Profiling results -0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc. ### After ``` └─ 1.641 export <beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1 └─ 1.641 export torch/onnx/utils.py:185 └─ 1.640 _export torch/onnx/utils.py:1331 ├─ 0.889 _model_to_graph torch/onnx/utils.py:1005 │ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535 │ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670 │ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782 │ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18 │ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ └─ 0.033 [self] ``` ### Before  ### Start up time The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448  [ghstack-poisoned]

[ghstack-poisoned]

## Summary The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`. The new registry - Has faster lookup by storing only functions in the opset version they are defined in - Is easier to manage and interact with due to its class design - Builds the foundation for the more flexible registration process detailed in #83787 Implementation changes - **Breaking**: Remove `torch.onnx.symbolic_registry` - `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility - Update _onnx_supported_ops.py for doc generation to include quantized ops. - Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp` ## Profiling results -0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc. ### After ``` └─ 1.641 export <beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1 └─ 1.641 export torch/onnx/utils.py:185 └─ 1.640 _export torch/onnx/utils.py:1331 ├─ 0.889 _model_to_graph torch/onnx/utils.py:1005 │ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535 │ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670 │ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782 │ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18 │ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ └─ 0.033 [self] ``` ### Before  ### Start up time The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448  [ghstack-poisoned]

[ghstack-poisoned]

## Summary The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`. The new registry - Has faster lookup by storing only functions in the opset version they are defined in - Is easier to manage and interact with due to its class design - Builds the foundation for the more flexible registration process detailed in #83787 Implementation changes - **Breaking**: Remove `torch.onnx.symbolic_registry` - `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility - Update _onnx_supported_ops.py for doc generation to include quantized ops. - Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp` ## Profiling results -0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc. ### After ``` └─ 1.641 export <beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1 └─ 1.641 export torch/onnx/utils.py:185 └─ 1.640 _export torch/onnx/utils.py:1331 ├─ 0.889 _model_to_graph torch/onnx/utils.py:1005 │ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535 │ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670 │ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782 │ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18 │ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ └─ 0.033 [self] ``` ### Before  ### Start up time The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448  [ghstack-poisoned]

[ghstack-poisoned]

## Summary The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`. The new registry - Has faster lookup by storing only functions in the opset version they are defined in - Is easier to manage and interact with due to its class design - Builds the foundation for the more flexible registration process detailed in #83787 Implementation changes - **Breaking**: Remove `torch.onnx.symbolic_registry` - `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility - Update _onnx_supported_ops.py for doc generation to include quantized ops. - Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp` ## Profiling results -0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc. ### After ``` └─ 1.641 export <beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1 └─ 1.641 export torch/onnx/utils.py:185 └─ 1.640 _export torch/onnx/utils.py:1331 ├─ 0.889 _model_to_graph torch/onnx/utils.py:1005 │ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535 │ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670 │ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782 │ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18 │ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ └─ 0.033 [self] ``` ### Before  ### Start up time The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448  [ghstack-poisoned]

[ghstack-poisoned]

ghstack-source-id: 35816aa Pull Request resolved: pytorch#84448 Snapshot

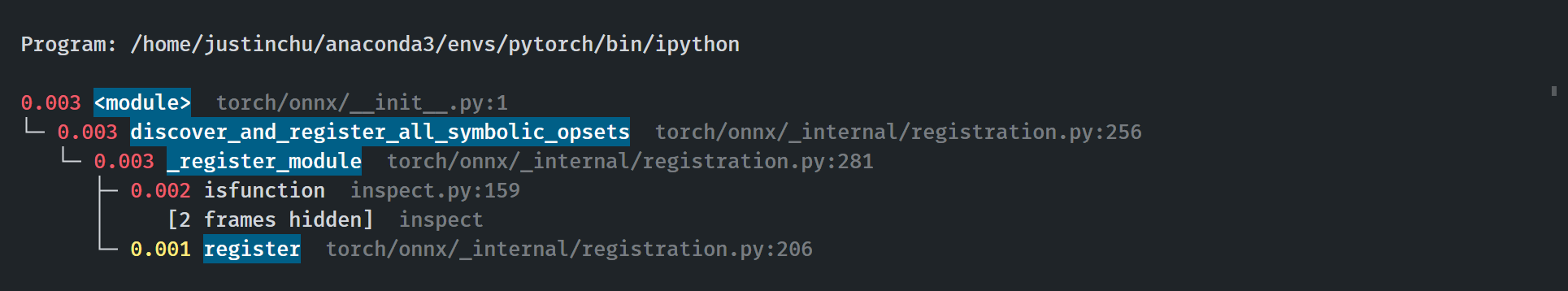

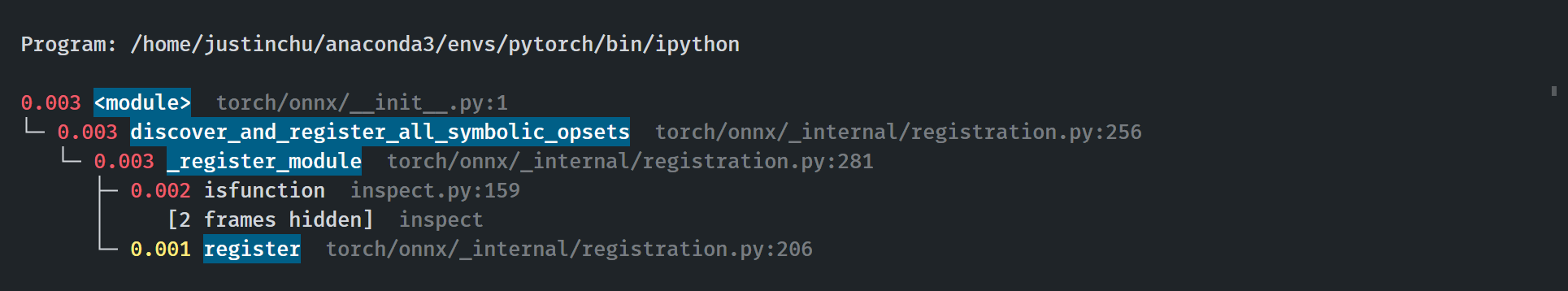

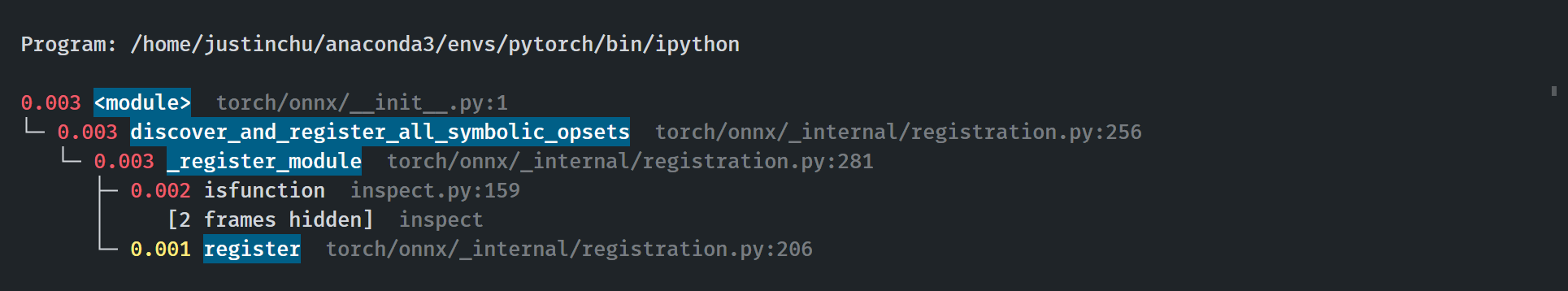

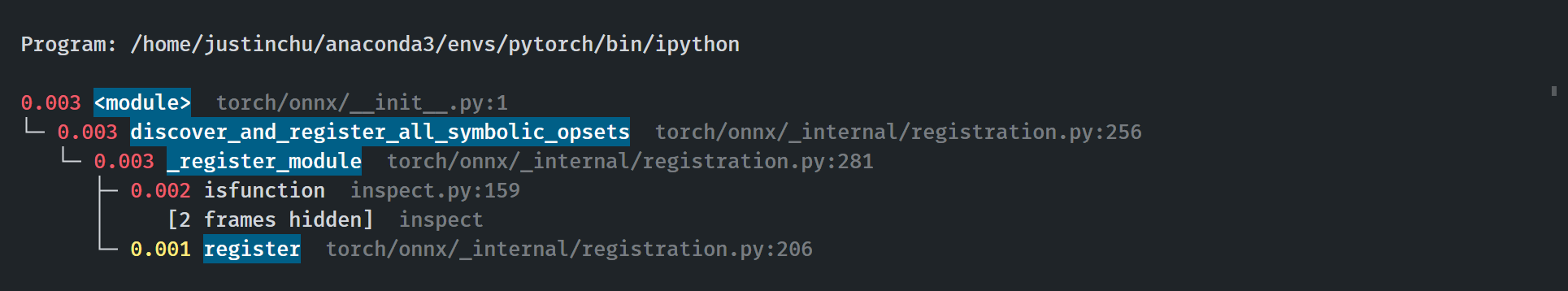

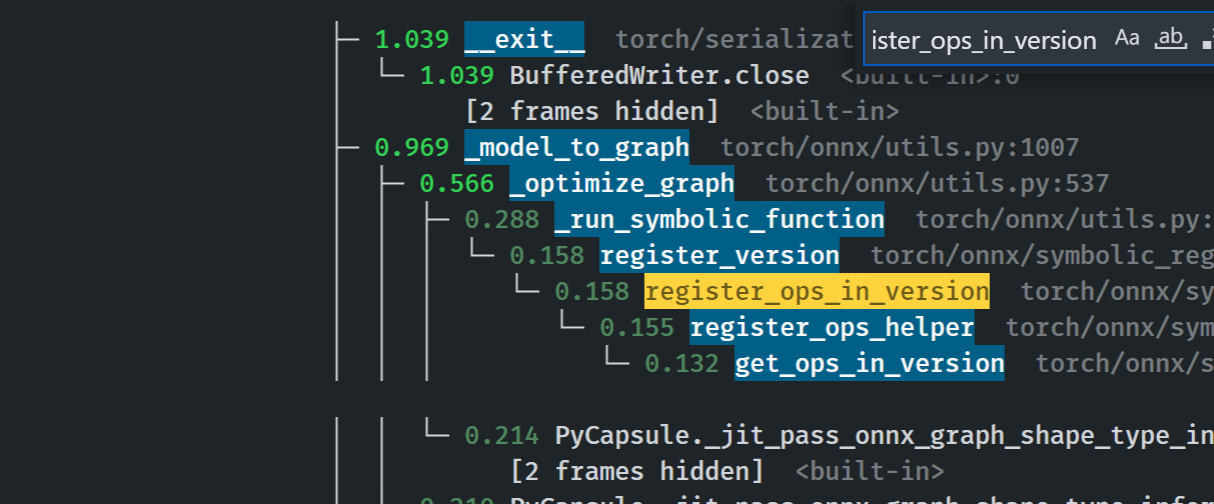

This is the 4th PR in the series of #83787. It enables the use of `onnx_symbolic` across `torch.onnx`. - **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `onnx_symbolic` for registering the same function on multiple aten names. - Decorate all symbolic functions with `onnx_symbolic` - Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%. - Remove the outdated unit test `test_symbolic_opset9.py` - Symbolic function registration moved from the first call to `_run_symbolic_function` to init time. - Registration is fast:  [ghstack-poisoned]

ghstack-source-id: 776c4a8 Pull Request resolved: pytorch#84448 Snapshot

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM, a follow up comment on _export. I'd suggest adding _export to ensure __all__ is unchanged. It is safer to avoid any potential backward breakage, until the inter calling of symbolic function is solved in later work.

Done |

This is the 4th PR in the series of #83787. It enables the use of `onnx_symbolic` across `torch.onnx`. - **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `onnx_symbolic` for registering the same function on multiple aten names. - Decorate all symbolic functions with `onnx_symbolic` - Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%. - Remove the outdated unit test `test_symbolic_opset9.py` - Symbolic function registration moved from the first call to `_run_symbolic_function` to init time. - Registration is fast:  [ghstack-poisoned]

This is the 4th PR in the series of #83787. It enables the use of `onnx_symbolic` across `torch.onnx`. - **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `onnx_symbolic` for registering the same function on multiple aten names. - Decorate all symbolic functions with `onnx_symbolic` - Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%. - Remove the outdated unit test `test_symbolic_opset9.py` - Symbolic function registration moved from the first call to `_run_symbolic_function` to init time. - Registration is fast:  [ghstack-poisoned]

ghstack-source-id: bcc1ca5 Pull Request resolved: pytorch#84448 Snapshot

|

@pytorchbot merge -g |

|

@pytorchbot successfully started a merge job. Check the current status here. |

## Summary The change brings the new registry for symbolic functions in ONNX. The `SymbolicRegistry` class in `torch.onnx._internal.registration` replaces the dictionary and various functions defined in `torch.onnx.symbolic_registry`. The new registry - Has faster lookup by storing only functions in the opset version they are defined in - Is easier to manage and interact with due to its class design - Builds the foundation for the more flexible registration process detailed in #83787 Implementation changes - **Breaking**: Remove `torch.onnx.symbolic_registry` - `register_custom_op_symbolic` and `unregister_custom_op_symbolic` in utils maintain their api for compatibility - Update _onnx_supported_ops.py for doc generation to include quantized ops. - Update code to register python ops in `torch/csrc/jit/passes/onnx.cpp` ## Profiling results -0.1 seconds in execution time. -34% time spent in `_run_symbolic_function`. Tested on the alexnet example in public doc. ### After ``` └─ 1.641 export <@beartype(torch.onnx.utils.export) at 0x7f19be17f790>:1 └─ 1.641 export torch/onnx/utils.py:185 └─ 1.640 _export torch/onnx/utils.py:1331 ├─ 0.889 _model_to_graph torch/onnx/utils.py:1005 │ ├─ 0.478 _optimize_graph torch/onnx/utils.py:535 │ │ ├─ 0.214 PyCapsule._jit_pass_onnx_graph_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ ├─ 0.190 _run_symbolic_function torch/onnx/utils.py:1670 │ │ │ └─ 0.145 Constant torch/onnx/symbolic_opset9.py:5782 │ │ │ └─ 0.139 _graph_op torch/onnx/_patch_torch.py:18 │ │ │ └─ 0.134 PyCapsule._jit_pass_onnx_node_shape_type_inference <built-in>:0 │ │ │ [2 frames hidden] <built-in> │ │ └─ 0.033 [self] ``` ### Before  ### Start up time The startup process takes 0.03 seconds. Calls to `inspect` will be eliminated when we switch to using decorators for registration in #84448  Pull Request resolved: #84382 Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

This is the 4th PR in the series of #83787. It enables the use of `@onnx_symbolic` across `torch.onnx`. - **Backward breaking**: Removed some symbolic functions from `__all__` because of the use of `@onnx_symbolic` for registering the same function on multiple aten names. - Decorate all symbolic functions with `@onnx_symbolic` - Move Quantized and Prim ops out from classes to functions defined in the modules. Eliminate the need for `isfunction` checking, speeding up the registration process by 60%. - Remove the outdated unit test `test_symbolic_opset9.py` - Symbolic function registration moved from the first call to `_run_symbolic_function` to init time. - Registration is fast:  Pull Request resolved: #84448 Approved by: https://github.com/AllenTiTaiWang, https://github.com/BowenBao

Stack from ghstack (oldest at bottom):

symbolic_function_groupto dispatch function calls #85350g.opmethod to the class #84728This is the 4th PR in the series of #83787. It enables the use of

@onnx_symbolicacrosstorch.onnx.__all__because of the use of@onnx_symbolicfor registering the same function on multiple aten names.@onnx_symbolicisfunctionchecking, speeding up the registration process by 60%.test_symbolic_opset9.py_run_symbolic_functionto init time.