
[Not to Merge] A PoC of Graph Break Compiler #489


Summary: This is a PoC to break graphs in order to support gradient hooks for the AOT backend. E2E use cases are composing DDP/FSDP with dynamo. Test Plan: WIP.
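To make the idea concrete, here is a toy sketch in plain Python of the control flow only. It is not the code in this PR and does not use torch or dynamo: the names `split_at_breaks` and `run_with_hooks` are hypothetical, the "graph" is assumed to be a linear list of callables, and the hook stands in for a gradient hook that must run between compiled subgraphs.

```python
from typing import Callable, List, Sequence, Tuple

Op = Callable[[float], float]


def split_at_breaks(ops: Sequence[Op], breaks: Sequence[int]) -> List[List[Op]]:
    """Split a linear op list into segments; each valid index in `breaks` starts a new segment."""
    segments: List[List[Op]] = []
    start = 0
    for b in sorted(set(breaks)):
        if 0 < b < len(ops):  # ignore break points that would create an empty segment
            segments.append(list(ops[start:b]))
            start = b
    segments.append(list(ops[start:]))
    return segments


def run_with_hooks(
    segments: Sequence[Sequence[Op]],
    x: float,
    hook: Callable[[int, float], None],
) -> float:
    """Run each segment as one unit and call `hook` between consecutive segments."""
    for i, segment in enumerate(segments):
        for op in segment:
            x = op(x)
        if i < len(segments) - 1:
            hook(i, x)  # in the real use case this is where a gradient hook would fire
    return x


# Hypothetical three-op "graph" with one break before the last op.
ops: List[Op] = [lambda v: v + 1, lambda v: v * 2, lambda v: v * v]
events: List[Tuple[int, float]] = []
segments = split_at_breaks(ops, breaks=[2])
result = run_with_hooks(segments, 3.0, lambda i, v: events.append((i, v)))
```

Without a break, the whole list would run as a single unit and the hook would have no point at which to observe an intermediate value; splitting the graph is what creates that point.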


Overall this seems reasonable; it proves you can insert graph breaks inside the backend. I'm wondering whether putting communication primitives in the graph would also be possible.

Do you mean tracing through the gradient hooks or manually inserting communication ops?

We could do whichever is easier, but the result would be a graph that contains communication ops.


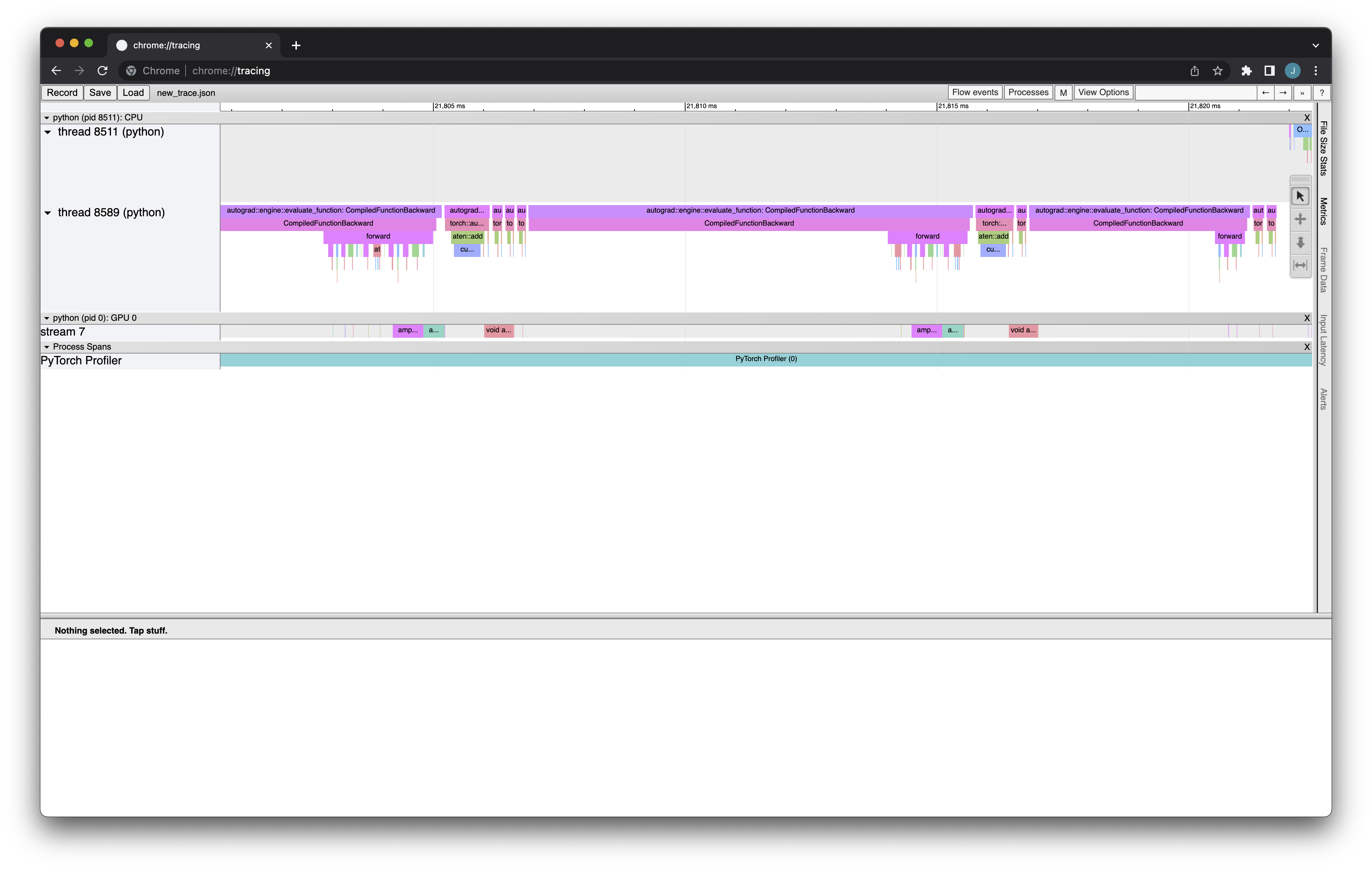

This is the output from dynamo_example2.py, an improved PoC in which gradient hooks can actually fire in between the broken graphs.


Hi @alanwaketan! Thank you for your pull request. We require contributors to sign our Contributor License Agreement, and yours needs attention. You currently have a record in our system, but the CLA is no longer valid and will need to be resubmitted.

Process: In order for us to review and merge your suggested changes, please sign at https://code.facebook.com/cla. If you are contributing on behalf of someone else (e.g. your employer), the individual CLA may not be sufficient and your employer may need to sign the corporate CLA.

Once the CLA is signed, our tooling will perform checks and validations. Afterwards, the pull request will be tagged accordingly.

If you have received this in error or have any questions, please contact us at cla@fb.com. Thanks!


We have migrated. More details and instructions for porting this PR over can be found in #1588.
