
Allow F.normalize function to use float and list of float as mean and… #5569


Hi @YosuaMichael! Thank you for your pull request and welcome to our community.

Action Required: In order to merge any pull request (code, docs, etc.), we require contributors to sign our Contributor License Agreement, and we don't seem to have one on file for you.

Process: In order for us to review and merge your suggested changes, please sign at https://code.facebook.com/cla. If you are contributing on behalf of someone else (e.g. your employer), the individual CLA may not be sufficient and your employer may need to sign the corporate CLA. Once the CLA is signed, our tooling will perform checks and validations. Afterwards, the pull request will be tagged with

If you have received this in error or have any questions, please contact us at cla@fb.com. Thanks!

💊 CI failures summary and remediations: As of commit 602d4a6 (more details on the Dr. CI page): 💚 💚 Looks good so far! There are no failures yet. 💚 💚 This comment was automatically generated by Dr. CI. Please report bugs/suggestions to the (internal) Dr. CI Users group.


Thanks for the PR, @YosuaMichael! Following up on your questions on workchat, you'll need to sign the CLA as an individual. I made a few comments below; LMK what you think.

torchvision/transforms/functional.py (outdated)

    - def normalize(tensor: Tensor, mean: List[float], std: List[float], inplace: bool = False) -> Tensor:
    + def normalize(tensor: Tensor, mean: Union(float, List[float]), std: Union(float, List[float]), inplace: bool = False) -> Tensor:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

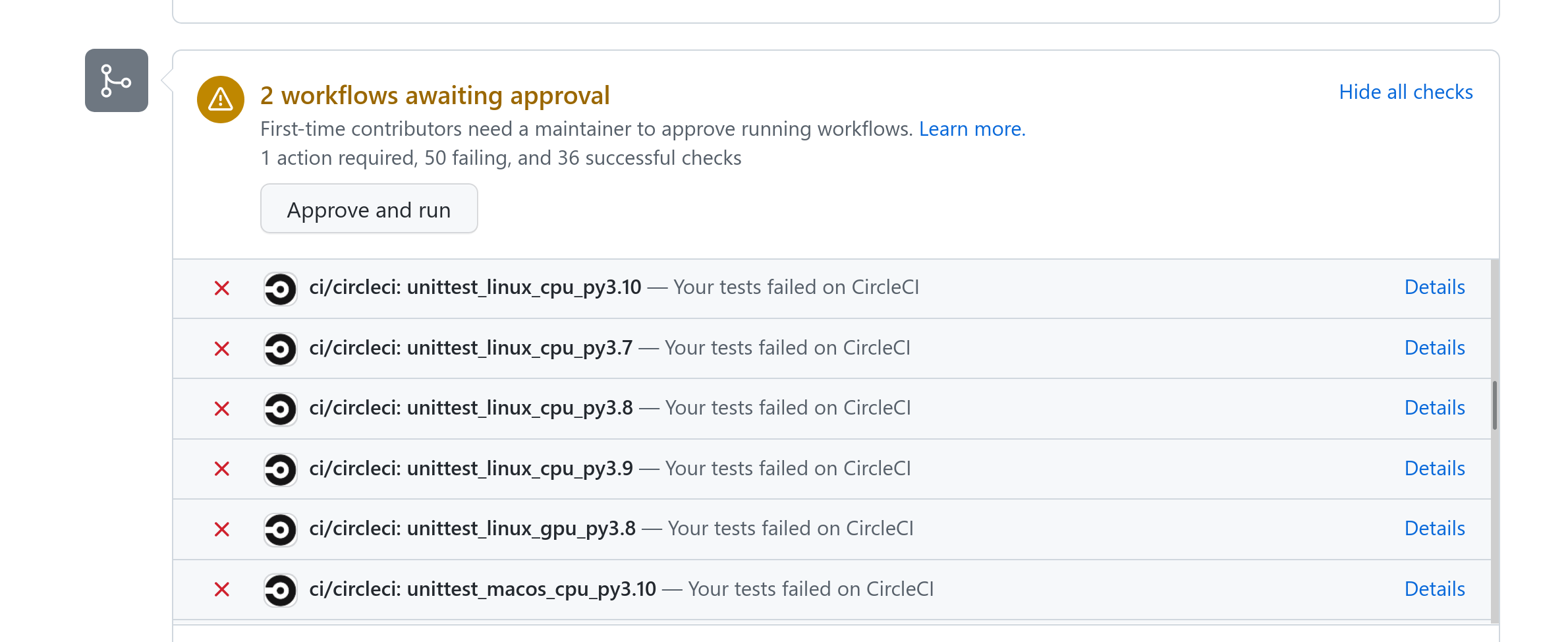

If you look at one of the CI jobs below, you will see that they're failing with the following error:

    torchvision/transforms/transforms.py:17: in <module>
        from . import functional as F
    torchvision/transforms/functional.py:337: in <module>
        def normalize(tensor: Tensor, mean: Union(float, List[float]), std: Union(float, List[float]), inplace: bool = False) -> Tensor:
    E NameError: name 'Union' is not defined

So it looks like this is missing an import (`from typing import Union`).
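For reference, a minimal sketch of the header with the missing import in place, assuming only the standard-library `typing` module. `MeanOrStd` and `normalize_stub` are hypothetical names: the stub drops the tensor argument and only reports which form of `mean` it received, so it runs without torch and is not torchvision's implementation.

```python
from typing import List, Union  # the import missing from functional.py

# Alias for the proposed parameter type: a single value applied to every
# channel, or one value per channel.
MeanOrStd = Union[float, List[float]]


def normalize_stub(mean: MeanOrStd, std: MeanOrStd, inplace: bool = False) -> str:
    """Hypothetical stand-in for F.normalize carrying the proposed annotations.

    The real function also takes the image tensor as its first argument; this
    stub only reports which form of `mean` it was given, so it runs without torch.
    """
    return "per-channel" if isinstance(mean, list) else "scalar"
```

Calling `normalize_stub(0.5, 0.5)` returns `"scalar"`, while passing ImageNet-style per-channel lists returns `"per-channel"`.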

test/test_transforms.py (outdated)

    torch.manual_seed(42)
    n_channels = 3
    img_size = 10
    mean = 0.5
    std = 0.5
    img = torch.rand(n_channels, img_size, img_size)
    # Check that this works
    target = F.normalize(img, mean, std)


Nit: Since we don't reuse these variable names, we don't really need to declare them. We can just shorten it to:

    img = torch.rand(3, 10, 10)
    target = F.normalize(img, mean=0.5, std=0.5)

But in reality, we don't need this test at all: it wouldn't give us any error anyway, because the tests aren't checked by mypy. What we should try to do instead is use mean=some_float or mean=std somewhere in our own code. If there is no such occurrence, maybe we can just verify it manually.

Note that we also have a CI job for type annotations, called type_check_python: https://app.circleci.com/pipelines/github/pytorch/vision/15467/workflows/20203b6e-c9ca-47a3-9d77-22148ff68086/jobs/1249720

@YosuaMichael Thanks for the PR! First of all, the tests are failing because you didn't include

Thank you for signing our Contributor License Agreement. We can now accept your code for this (and any) Meta Open Source project. Thanks!

Hi @datumbox @NicolasHug, thanks for the comments! Yeah, I have just read the CI error, and indeed I forgot to import Union... @NicolasHug, you are right about the test! I think we don't need the unit test. What is usually the best way to detect this kind of import issue offline?


@datumbox @YosuaMichael I'm not sure we need to worry about pytorch/pytorch#69434, at least for now. If mypy and the rest of our tests are happy with

There's a typo: it should be test_transforms.py. If you ran exactly

Also, flake8 won't check types; only mypy does. So to make sure that mypy is happy with the changes, the best way is to write a basic file locally that calls

Hmmmm. Maybe you have old dependencies or something? NumPy has previously thrown a bunch of warnings for me, and it was because I had a very old version. Mypy should also catch this problem. You could run it from the project root with
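A probe file of the kind suggested in this thread might look like the sketch below. It assumes mypy is installed; `mypy probe.py` is mypy's basic invocation, not necessarily the command referred to above (which is cut off). To keep it runnable without torch, `normalize_stub` is a hypothetical stand-in with the proposed annotations; a real probe would import torchvision and call `F.normalize` directly.

```python
"""probe.py: hypothetical type-check probe for the proposed annotations.

Check it with `mypy probe.py` (assumes mypy is installed); it also runs as plain Python.
"""
from typing import List, Union


def normalize_stub(mean: Union[float, List[float]], std: Union[float, List[float]]) -> int:
    # Stand-in with the proposed signature. It returns how many channel values
    # were supplied (1 for a scalar) so the calls below can be checked at runtime too.
    return len(mean) if isinstance(mean, list) else 1


# Calls that mypy should accept under the proposed annotation.
scalar_channels = normalize_stub(0.5, 0.5)
list_channels = normalize_stub([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

# With the old List[float]-only signature, mypy would flag the scalar call above.
```

Because the unit tests are not type-checked, a file like this only helps if it is passed to mypy explicitly.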


You also have a typo in the annotation (square brackets vs. parentheses).

torchvision/transforms/functional.py (outdated)

    - def normalize(tensor: Tensor, mean: List[float], std: List[float], inplace: bool = False) -> Tensor:
    + def normalize(
    +     tensor: Tensor, mean: Union(float, List[float]), std: Union(float, List[float]), inplace: bool = False


Suggested change:

    - tensor: Tensor, mean: Union(float, List[float]), std: Union(float, List[float]), inplace: bool = False
    + tensor: Tensor, mean: Union[float, List[float]], std: Union[float, List[float]], inplace: bool = False
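The bracket/parenthesis difference is more than style: subscripting `Union` builds the type, while calling it like a function raises an error whenever that expression is evaluated. A small standard-library sketch; `MEAN_TYPE` and `parenthesis_form_raises` are hypothetical names used only for illustration.

```python
from typing import List, Union

# Square brackets subscript the special form and build the union type.
MEAN_TYPE = Union[float, List[float]]


def parenthesis_form_raises() -> bool:
    """Return True if calling Union like a function raises TypeError.

    Union(float, List[float]) is the form from the original annotation.
    typing.Union cannot be called or instantiated, so evaluating that
    expression fails even when Union has been imported.
    """
    try:
        Union(float, List[float])  # type: ignore
    except TypeError:
        return True
    return False
```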

@YosuaMichael You need to update the method on

…efore calling torch.as_tensor
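Judging from the commit title above, the scalar presumably has to be turned into a per-channel list before `torch.as_tensor` is called. The sketch below shows that broadcasting and the per-channel formula `(x - mean[c]) / std[c]` in plain Python. `as_channel_list` and `normalize_channels` are hypothetical helpers working on nested lists instead of tensors; they are not the functional_tensor code.

```python
from typing import List, Union


def as_channel_list(value: Union[float, List[float]], num_channels: int) -> List[float]:
    """Hypothetical helper: repeat a scalar once per channel, validate lists."""
    if isinstance(value, (int, float)):
        return [float(value)] * num_channels
    if len(value) != num_channels:
        raise ValueError(f"expected {num_channels} values, got {len(value)}")
    return list(value)


def normalize_channels(
    channels: List[List[float]],
    mean: Union[float, List[float]],
    std: Union[float, List[float]],
) -> List[List[float]]:
    """Pure-Python sketch of per-channel normalization: (x - mean[c]) / std[c]."""
    means = as_channel_list(mean, len(channels))
    stds = as_channel_list(std, len(channels))
    if any(s == 0 for s in stds):
        raise ValueError("std must be non-zero for every channel")
    return [[(x - m) / s for x in ch] for ch, m, s in zip(channels, means, stds)]
```

With a scalar, every channel shares the same mean and std, which is exactly what a float argument to normalize is meant to express.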

Hi @datumbox, thanks for your quick response! After changing functional_tensor, it still seems to give an error when it calls

Noted the failing test on the Tuple type; I will work on fixing it.

Take note that using

As for the tuple, I think it could be resolved by using typing.Sequence instead of typing.List. Unfortunately, however, TorchScript does not support it as of now: https://pytorch.org/docs/stable/jit_language_reference.html#unsupported-typing-constructs.

Note: if we use

For the int, I think it is reasonable not to support it, because the values of mean and std should usually be floating point rather than int.

@YosuaMichael Thanks for the detailed analysis. The reasons you mentioned above are why I'm skeptical about merging this. I feel the same about my own PR at #5568: it feels like we fix something but break something else. What are your thoughts on this? BTW, have you raised a ticket on PyTorch for the specific problem? It might be worth raising one to get it fixed, as that would make merging this PR a no-brainer.

@datumbox, I also feel it should not be merged for this reason, since people very likely pass a Tuple as mean/std. I will raise an issue on the PyTorch GitHub soon and will update here.

@YosuaMichael Thanks for being an awesome contributor. Please stick around and send more PRs. :) For exactly the same reasons you mention, I decided to close the other PR as well; see #5568 (comment). Let's close this PR, raise the issue with PyTorch core, and reassess in the near future once it is patched.

@datumbox Raised this issue on PyTorch: pytorch/pytorch#74030. Yeah, I will close this PR for now.

Resolve issue #5046