-

Notifications

You must be signed in to change notification settings - Fork 45.4k

Description

System information

- What is the top-level directory of the model you are using: tensorflow/models/research

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): no

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): command issued from Linux Ubuntu 16.04 to run job at Google ML Engine.

- TensorFlow installed from (source or binary): Installed by Google ML Engine.

- TensorFlow version (use command below): 1.8

- Bazel version (if compiling from source): N/A

- CUDA/cuDNN version: no CUDA

- GPU model and memory: no GPU

- Exact command to reproduce:

gcloud ml-engine jobs submit training ${JOB_ID} --runtime-version 1.8 --job-dir=gs://${DATASET_BUCKET}/mini-dataset/trained-models/${JOB_ID}/staging --packages dist/object_detection-0.1.tar.gz,slim/dist/slim-0.1.tar.gz,dist/pycocotools-2.0.tar.gz --module-name object_detection.model_main --region us-central1 --config object_detection/samples/cloud/cloud.yml -- --model_dir=gs://${DATASET_BUCKET}/mini-dataset/trained-models/${JOB_ID} --pipeline_config_path=gs://${DATASET_BUCKET}/mini-dataset/plate/v0/ssd_mobilenet_v1_sas.config

Describe the problem

I created an object detection training job to run at Google ML Engine with a quite simple dataset with the command above.

Training configs are defined in the file ssd_mobilenet_v1_sas.config (attached as ssd_mobilenet_v1_sas.txt).

Initially I ran a training job with 20000 steps and the job completed successfully in about an hour, then I ran the same command with different JOB_ID and the same config.

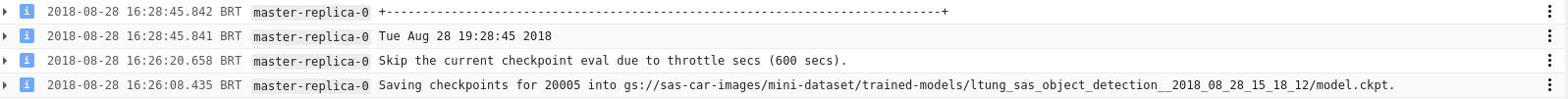

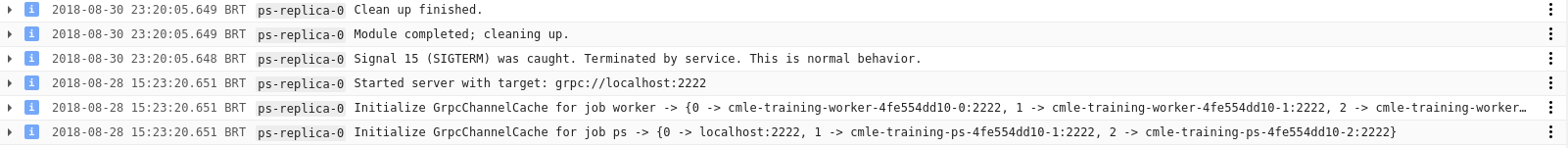

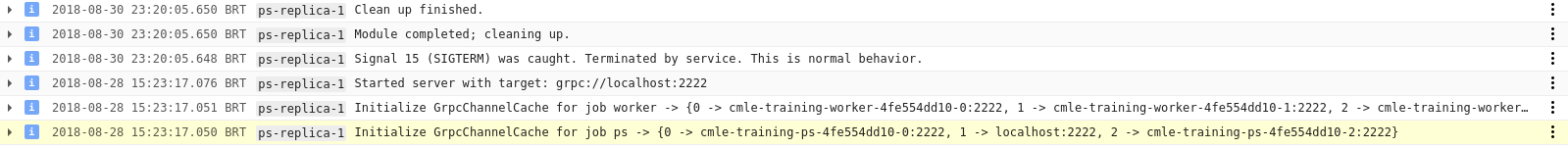

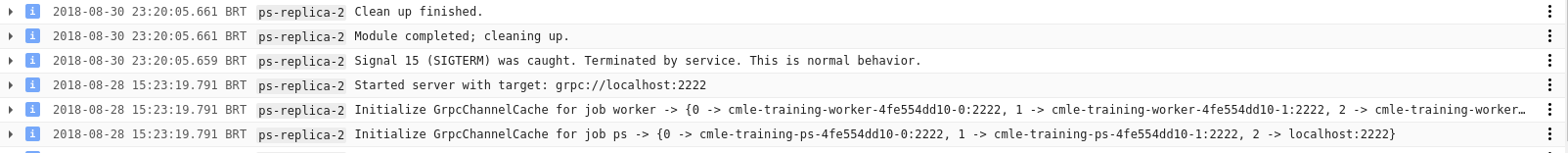

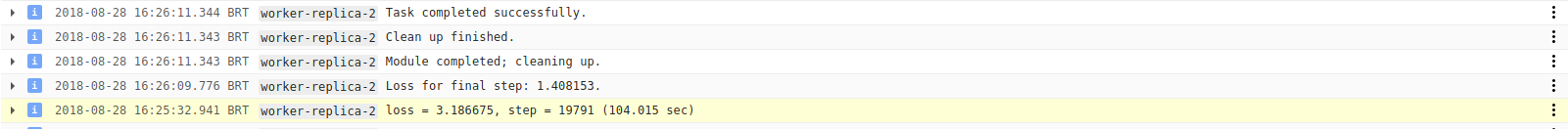

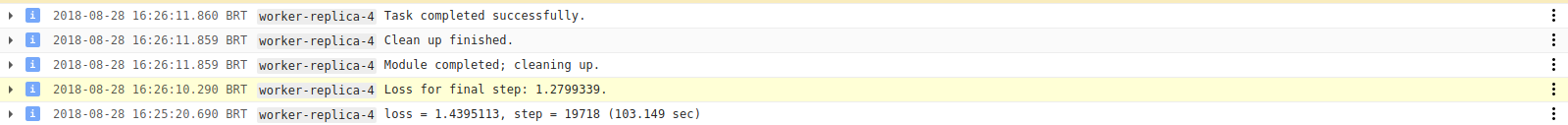

Looking at the ML Engine job log, master replica and workers both show that step 20000 was reached in about an hour and the checkpoint saved to GCS. However, the ML Engine job itself didn't finish and the parameter server replica remained active for more than 2 days, incurring ML unit charges.

After 2 days with the job active doing nothing, the parameter server replica logged the message: Signal 15 (SIGTERM) was caught. Terminated by service. This is normal behavior.

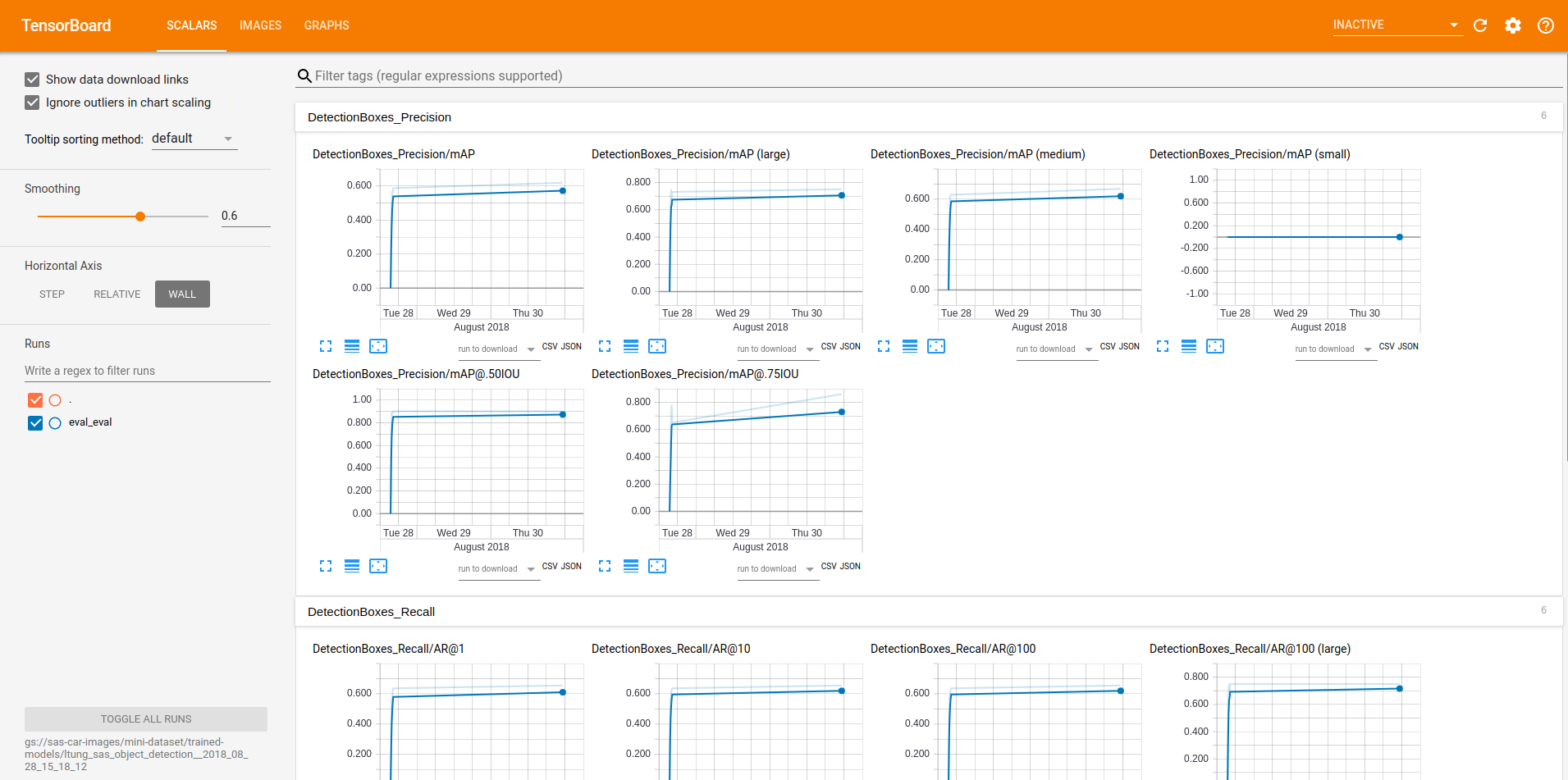

The tensorboard also shows no training progress after reaching 20000 steps.

After contacting with Google Cloud support team, their investigation pointed that there's a problem with the Tensorflow Object Detection code and suggested to file an issue here.

Source code / logs

Attached images show logs from master, parameter server and worker replicas, as well as tensorboad: