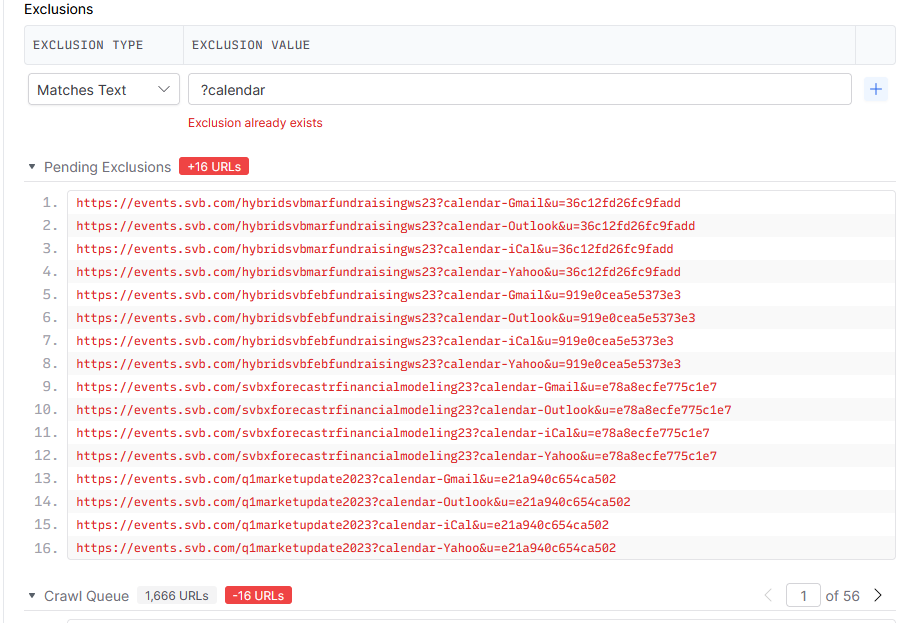

Tried crawling a site without setting up any exclusions beforehand. When I then entered exclusions, the page reported that they already exist. It did not add anything to the exclusions table and did not remove the excluded URLs from the queue.

This should be fixed before we launch 1.4! 🙃