
Description

`

// function one

void ImageProcess::GpuPreProcessConfigure(const uint32_t width_src, const uint32_t height_src, const uint32_t width_dst, const uint32_t height_dst) {

std::cout << "TESTING GPU PREPROCESS CONFIGURE" << std::endl;

std::cout << "CONFIGURE INFO width_src: " << width_src << " height_src: " << height_src << " width_dst: " << width_dst << " height_dst: " << height_dst << std::endl;

// 0. cl schedule && init input tensor

// CLScheduler::get().default_init();

CLBackendType backend_type = CLBackendType::Native;

auto ctx_dev_err = create_opencl_context_and_device(backend_type);

// print device info from tuple

std::cout << "OpenCL device info: " << std::endl;

std::cout << "-------------------" << std::endl;

std::cout << "Device name: " << std::get<1>(ctx_dev_err).getInfo<CL_DEVICE_NAME>() << std::endl;

CLScheduler::get().default_init_with_context(std::get<1>(ctx_dev_err), std::get<0>(ctx_dev_err), nullptr);

input_tensor_.allocator()->init(TensorInfo(TensorShape(3U, width_src, height_src), 1, DataType::U8).set_data_layout(DataLayout::NHWC));

input_tensor_.info()->set_format(Format::U8);

// 1. configure image padding, padding rectangle to square [width_src, width_src]

pad_output_tensor_.allocator()->init(TensorInfo(TensorShape(3U, width_src, width_src), 1, DataType::U8).set_data_layout(DataLayout::NHWC));

pad_output_tensor_.info()->set_format(Format::U8);

PaddingList padding_list = {{0, 0}, {0, 0}, {(width_src - height_src) / 2, (width_src - height_src) / 2}}; // with the NHWC TensorShape above, the dimensions are (channel, width, height); the third entry pads the height

pad_layer_.configure(&input_tensor_, &pad_output_tensor_, padding_list, PixelValue(127), PaddingMode::CONSTANT);

// 2. configure resize

rescale_output_tensor_.allocator()->init(TensorInfo(TensorShape(3U, width_dst, height_dst), 1, DataType::U8).set_data_layout(DataLayout::NHWC));

rescale_output_tensor_.info()->set_format(Format::U8);

rescale_.configure(&pad_output_tensor_, &rescale_output_tensor_, ScaleKernelInfo(InterpolationPolicy::BILINEAR, BorderMode::CONSTANT));

// 3. configure nhwc to nchw

nchw_cl_tensor_.allocator()->init(TensorInfo(TensorShape(width_dst, height_dst, 3U), 1, DataType::U8).set_data_layout(DataLayout::NCHW));

nchw_cl_tensor_.info()->set_format(Format::U8);

const arm_compute::PermutationVector nhwc_to_nchw_permutation_vector = {1U, 2U, 0U};

permute_nhwc_to_nchw_.configure(&rescale_output_tensor_, &nchw_cl_tensor_, nhwc_to_nchw_permutation_vector);

// 4. allocate memory

input_tensor_.allocator()->allocate();

rescale_output_tensor_.allocator()->allocate();

nchw_cl_tensor_.allocator()->allocate();

pad_output_tensor_.allocator()->allocate();

std::cout << "GpuPreProcessConfigure end" << std::endl;

}

void ImageProcess::GpuPreProcessXBGR(const char* src, const uint32_t width_src, const uint32_t height_src, char* dest, const uint32_t width_dst, const uint32_t height_dst) {

// 0. cl schedule

std::cout << "GpuPreProcessXBGR start" << std::endl;

std::cout << "PROCESS INFO: width_src: " << width_src << ", height_src: " << height_src << ", width_dst: " << width_dst << ", height_dst: " << height_dst << std::endl;

std::cout << "GpuPreProcessXBGR default init cl shedule" << std::endl;

// CLScheduler::get().default_init();

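// 1. allocate a temporary array and convert xbgr to rgb

// Copying this data piecemeal into the tensor's device memory is not recommended here; instead, prepare it in a temporary host buffer first and copy it to the tensor in one go. In practice this lowers CPU usage by about 5%.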

std::cout << "GpuPreProcessXBGR convert xbgr to rgb" << std::endl;

int size = width_src * height_src * 3;

std::unique_ptr<uint8_t[]> rgb_image(new uint8_t[size]);

for (uint32_t i = 0; i < height_src; ++i) {

for (uint32_t j = 0; j < width_src; ++j) {

rgb_image[i * width_src * 3 + j * 3 + 0] = src[i * width_src * 4 + j * 4 + 2];

rgb_image[i * width_src * 3 + j * 3 + 1] = src[i * width_src * 4 + j * 4 + 1];

rgb_image[i * width_src * 3 + j * 3 + 2] = src[i * width_src * 4 + j * 4 + 0];

}

}

// 2. copy data to input_tensor_

std::cout << "GpuPreProcessXBGR memcpy to input_tensor_" << std::endl;

input_tensor_.map();

memcpy(input_tensor_.buffer(), rgb_image.get(), size);

input_tensor_.unmap();

pad_layer_.run();

// DrawTensorImage(&pad_output_tensor_, width_src, width_src, "pad_output_tensor_.jpg");

rescale_.run();

// DrawTensorImage(&rescale_output_tensor_, width_dst, height_dst, "rescale_output_tensor_.jpg");

permute_nhwc_to_nchw_.run();

// 5. fetch the result of permute_nhwc_to_nchw_ and return it

std::cout << "GpuPreProcessXBGR get result" << std::endl;

nchw_cl_tensor_.map();

memcpy(dest, nchw_cl_tensor_.buffer(), width_dst * height_dst * 3);

nchw_cl_tensor_.unmap();

return;

}

`
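The only CPU-heavy step in `GpuPreProcessXBGR` is the per-pixel channel swap, so it can help to isolate it and measure it on its own. The following is a minimal standalone sketch of that loop, assuming a tightly packed 4-byte-per-pixel source with no row padding. The function name `ConvertXbgrToRgb` is hypothetical and not part of the code above; the byte mapping is copied from the loop in the issue.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of the original code).
// Converts a tightly packed 4-byte-per-pixel buffer to 3-byte pixels using
// the same mapping as the loop in GpuPreProcessXBGR:
//   out[0] = in[2], out[1] = in[1], out[2] = in[0]; in[3] is dropped.
std::vector<std::uint8_t> ConvertXbgrToRgb(const std::uint8_t* src,
                                           std::uint32_t width,
                                           std::uint32_t height) {
    // Use size_t so the pixel count cannot overflow a 32-bit int.
    const std::size_t pixel_count = static_cast<std::size_t>(width) * height;
    std::vector<std::uint8_t> rgb(pixel_count * 3);

    for (std::size_t p = 0; p < pixel_count; ++p) {
        const std::uint8_t* in = src + p * 4;
        std::uint8_t* out = rgb.data() + p * 3;
        out[0] = in[2];
        out[1] = in[1];
        out[2] = in[0];
    }
    return rgb;
}
```

The returned vector could then be copied into the mapped input tensor in a single `memcpy`, as the original code does with `rgb_image`.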

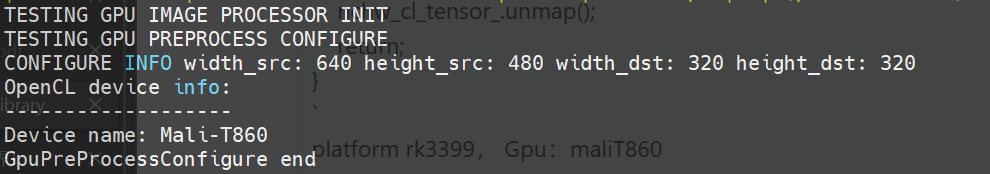

Platform: RK3399, GPU: Mali-T860

Branch: v22.08

On some RK3399 boards this program runs as expected and uses the GPU.

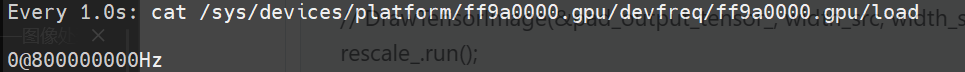

As the screenshot shows, GPU usage on these boards ranges from 20 to 100.

The recognition thread uses about 10% CPU.

However, when the same program runs on some other RK3399 boards, the thread that calls the function shows high CPU usage but low GPU usage.

GPU usage stays between 0 and 1.

Strangely, every board prints the device name Mali-T860 from this line:

std::cout << "Device name: " << std::get<1>(ctx_dev_err).getInfo<CL_DEVICE_NAME>() << std::endl;
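One way to narrow down where the time goes on the affected boards is to compare wall-clock time with CPU time for each stage. The sketch below is only an illustration under stated assumptions: `StageTiming` and `MeasureStage` are hypothetical names, and `std::clock` reports processor time for the whole process, not for a single thread.

```cpp
#include <chrono>
#include <ctime>
#include <functional>

// Hypothetical helper types (not part of the original code).
struct StageTiming {
    double wall_ms;  // elapsed real time
    double cpu_ms;   // processor time used by the whole process (std::clock)
};

// Runs `stage` once and reports how much wall-clock and CPU time it took.
StageTiming MeasureStage(const std::function<void()>& stage) {
    const auto wall_start = std::chrono::steady_clock::now();
    const std::clock_t cpu_start = std::clock();

    stage();

    const std::clock_t cpu_end = std::clock();
    const auto wall_end = std::chrono::steady_clock::now();

    StageTiming timing;
    timing.wall_ms =
        std::chrono::duration<double, std::milli>(wall_end - wall_start).count();
    timing.cpu_ms =
        1000.0 * static_cast<double>(cpu_end - cpu_start) / CLOCKS_PER_SEC;
    return timing;
}
```

Each of the conversion loop, `pad_layer_.run()`, `rescale_.run()` and `permute_nhwc_to_nchw_.run()` could be wrapped in such a call. For the GPU stages the callable would probably need to block until the command queue drains (for example by calling `CLScheduler::get().sync()` after `run()`), otherwise only the enqueue time is measured. A stage whose CPU time is close to its wall time on the affected boards would be the first place to look.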