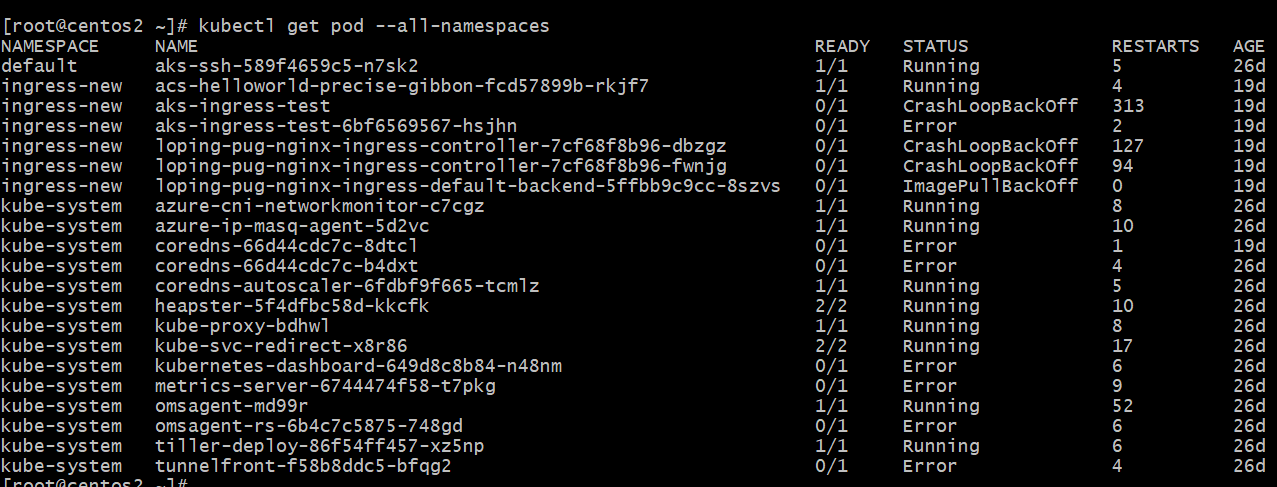

Our cluster uses Azure CNI. When a node starts, pods on it end up in an error state. Stopping and starting the node again usually returns the pod status to normal. Is this an issue with Azure CNI or with Kubernetes?

Version:

Azure CNI: v1.0.17

Kubernetes: 1.12.7

AKS Engine: v0.33.4

The node was started on Jul 09 at 03:49 (UTC). After it started, many pods were in an error state; the Kubernetes log and the CNI log are attached below. After stopping and starting the node, the pod status returned to normal. The issue reproduces whenever the node is started. We found similar issues reported for CNI plugins other than Azure CNI that also show "failed to read pod IP from plugin/docker". Please help investigate, thanks.
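For anyone triaging the attached logs, here is a minimal sketch for pulling out the lines that contain the error text quoted above. The function name `find_pod_ip_errors` is hypothetical and the log format is not assumed beyond plain text lines; it only does a substring match.

```python
# Substring copied from the error quoted in this report; the helper itself is illustrative.
ERROR_MARKER = "failed to read pod IP from plugin/docker"


def find_pod_ip_errors(log_lines):
    """Return (line_number, line) pairs for lines that contain the error marker."""
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(log_lines, start=1)
        if ERROR_MARKER in line
    ]
```

It accepts any iterable of strings, so an open file handle for the downloaded log can be passed directly, and the returned line numbers can then be compared against the node start time.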

kubenetes error log.txt

Azure CNI log.txt