Description

TensorRT runs slower than CUDA in a C# environment.

model: ResNet-34, pretrained, from PyTorch.

input: shape 126,3,96,64, run for 72 loops

The first conv layer (conv1) and the last fc layer are modified.

Python

TensorRT on Ubuntu runs faster than onnxruntime GPU.

C#

ONNX Runtime built from source with --use_tensorrt

TensorRT runs much slower than CUDA: it is 9 times slower.
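One way to make a comparison like this easier to reproduce is to time every loop on its own, so that any one-time startup cost shows up in the first iterations instead of being averaged into the total. Below is a minimal sketch in Python. `run_once` is a hypothetical stand-in for a single inference call (for example, one session run on the 126,3,96,64 input) and is assumed to block until the result is ready; the function names and the `warmup` split are illustrative and are not the code that produced the numbers above.

```python
import time
from statistics import median


def time_loops(run_once, loops=72):
    """Call run_once `loops` times and return each call's wall-clock time in seconds."""
    times = []
    for _ in range(loops):
        start = time.perf_counter()
        run_once()  # hypothetical: one blocking inference call
        times.append(time.perf_counter() - start)
    return times


def summarize(times, warmup=1):
    """Separate the first `warmup` iterations from the remaining ones."""
    steady = times[warmup:]
    return {
        "first": sum(times[:warmup]),      # time spent in the warm-up iterations
        "steady_median": median(steady),   # typical time of a later iteration
        "total": sum(times),               # what a single stopwatch around the loop would see
    }
```

Running `summarize(time_loops(run_once))` once per execution provider and comparing `steady_median` against `total` would show whether the slowdown is spread across all 72 loops or concentrated at the start.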

Environment

TensorRT Version: 7.2.2.3, 7.2.3.4

NVIDIA GPU: RTX 2080 Ti

NVIDIA Driver Version: 461.92

CUDA Version: 11.1, 11.2

cuDNN Version: 8.0, 8.1, 8.2

Operating System: Windows 10 x64

Python Version (if applicable): 3.6

Tensorflow Version (if applicable):

PyTorch Version (if applicable): 1.7.0

Baremetal or Container (if so, version):

The combinations tested were:

TensorRT 7.2.2.3, cuDNN 8.0, CUDA 11.1

TensorRT 7.2.2.3, cuDNN 8.0, CUDA 11.2

TensorRT 7.2.3.4, cuDNN 8.1, CUDA 11.2

TensorRT 7.2.3.4, cuDNN 8.2, CUDA 11.2

All of them had slow inference times when running with TensorRT.

Relevant Files

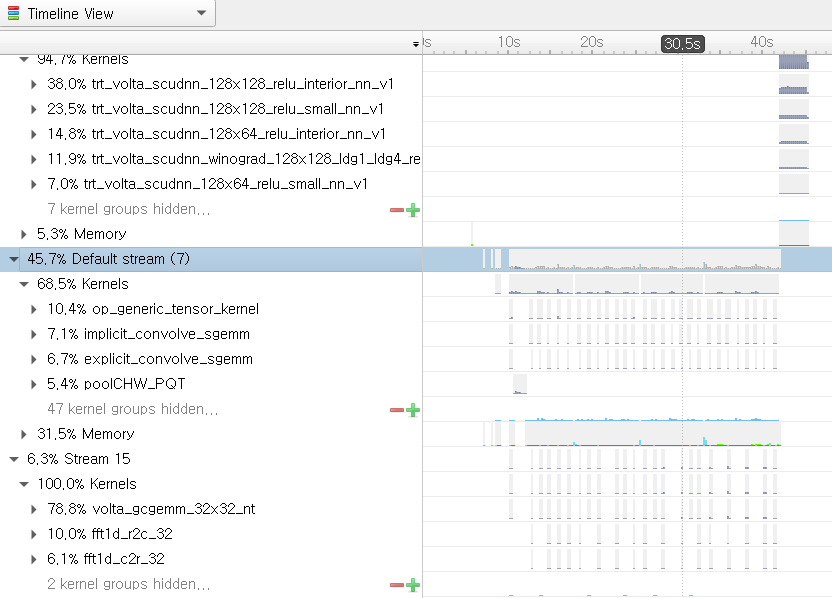

This is the Nsight Systems trace of TensorRT inference. I am not sure what causes the delays before the full operation at the end.

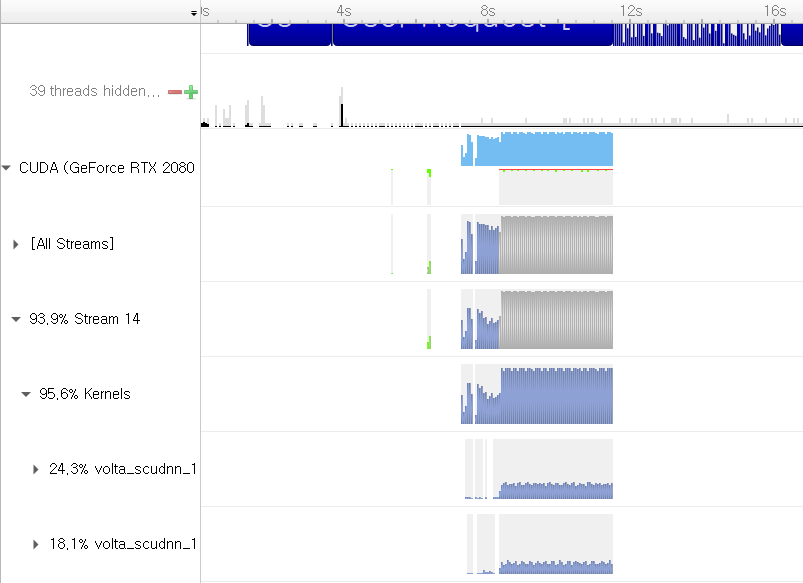

This is the Nsight Systems trace of CUDA inference. Unlike TensorRT, there is very little delay before the full operation at the end.