
Description

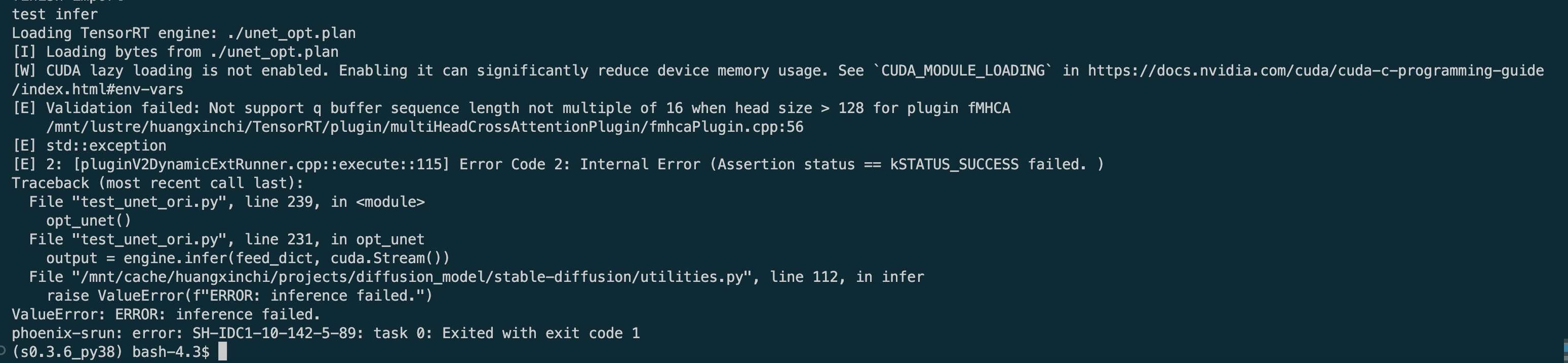

Hi TensorRT team, I appreciate your great work! I was trying to speed up Stable Diffusion inference by adding plugins such as fMHA and fMHCA to the UNet. My model's input images are 512×768, 640×640, and 768×512, so I set dynamic shapes when converting the PyTorch model to ONNX and the ONNX model to a TensorRT engine. However, when the TensorRT engine was run on a 640×640 input image, a ValueError was raised.
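As a rough illustration of the dynamic-shape setup described above, here is a minimal sketch that derives a min/opt/max range for the UNet latent input from the three image sizes and counts the self-attention tokens at the first few UNet levels. It assumes a Stable Diffusion v1.x/v2.x-style model (VAE downsampling factor 8, 4 latent channels, spatial dims halved at each UNet level) and treats each size as height×width. The helpers `latent_hw`, `latent_profile`, and `attention_tokens` are hypothetical and not part of the TensorRT demo.

```python
from typing import Dict, List, Tuple

# Assumptions (not taken from the TensorRT demo): the Stable Diffusion VAE
# downsamples images by 8 and produces 4 latent channels.
VAE_SCALE = 8
LATENT_CHANNELS = 4

Shape = Tuple[int, int, int, int]


def latent_hw(height: int, width: int) -> Tuple[int, int]:
    """Map an image size (height, width) to the latent size seen by the UNet."""
    if height % VAE_SCALE or width % VAE_SCALE:
        raise ValueError(f"{height}x{width} is not a multiple of {VAE_SCALE}")
    return height // VAE_SCALE, width // VAE_SCALE


def latent_profile(
    image_sizes: List[Tuple[int, int]],
    opt_size: Tuple[int, int],
    batch: int = 1,
) -> Dict[str, Shape]:
    """Use the smallest/largest latent H and W over all sizes as min/max shapes."""
    latents = [latent_hw(h, w) for h, w in image_sizes]
    heights = [h for h, _ in latents]
    widths = [w for _, w in latents]
    opt_h, opt_w = latent_hw(*opt_size)
    return {
        "min": (batch, LATENT_CHANNELS, min(heights), min(widths)),
        "opt": (batch, LATENT_CHANNELS, opt_h, opt_w),
        "max": (batch, LATENT_CHANNELS, max(heights), max(widths)),
    }


def attention_tokens(height: int, width: int, levels: int = 3) -> List[int]:
    """Self-attention sequence length (latent H * W) at each UNet level,
    assuming the spatial dims are halved between consecutive levels."""
    lh, lw = latent_hw(height, width)
    return [(lh // 2**i) * (lw // 2**i) for i in range(levels)]


if __name__ == "__main__":
    sizes = [(512, 768), (640, 640), (768, 512)]
    print(latent_profile(sizes, opt_size=(640, 640)))
    for h, w in sizes:
        print(f"{h}x{w}:", attention_tokens(h, w))
```

The `min`/`opt`/`max` tuples correspond to the three shape arguments of TensorRT's `IOptimizationProfile.set_shape(input, min, opt, max)`; the actual input name depends on how the ONNX model was exported. Under these assumptions, 640×640 gives 6400 tokens at the top attention level versus 6144 for both 512×768 and 768×512, so a fused attention kernel that supports only certain sequence lengths could plausibly accept one and reject the other. This is a hypothesis, not a confirmed diagnosis.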

Would you consider supporting sizes such as 640×640 for fMHCA?

Environment

TensorRT Version: 8.5.1.7

NVIDIA GPU: A100

NVIDIA Driver Version: 460.32.03

CUDA Version: cuda-11.6

CUDNN Version: 8.7.0.84

Operating System: CentOS

Python Version (if applicable): 3.8

Tensorflow Version (if applicable):

PyTorch Version (if applicable): 1.12.1+cuda113_cudnn8.3.2

Baremetal or Container (if so, version):

Relevant Files

https://github.com/NVIDIA/TensorRT/tree/release/8.5/demo/Diffusion