
Description

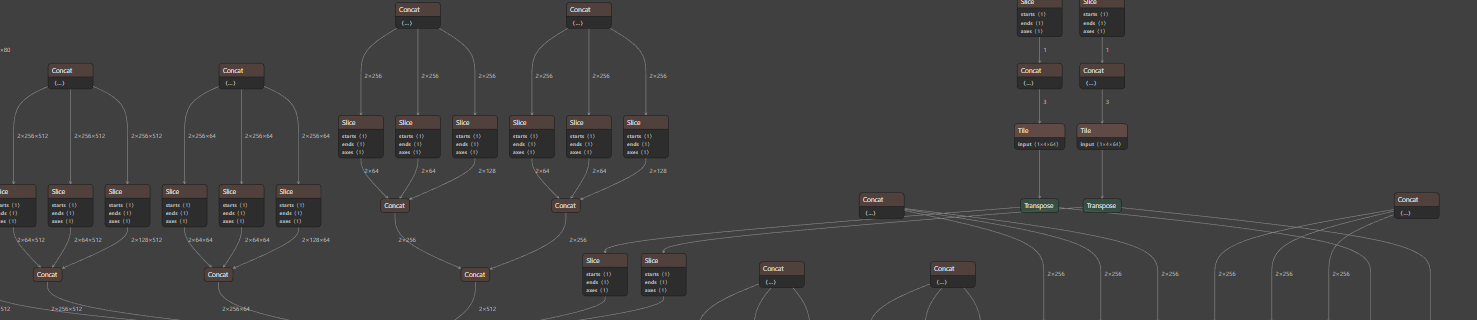

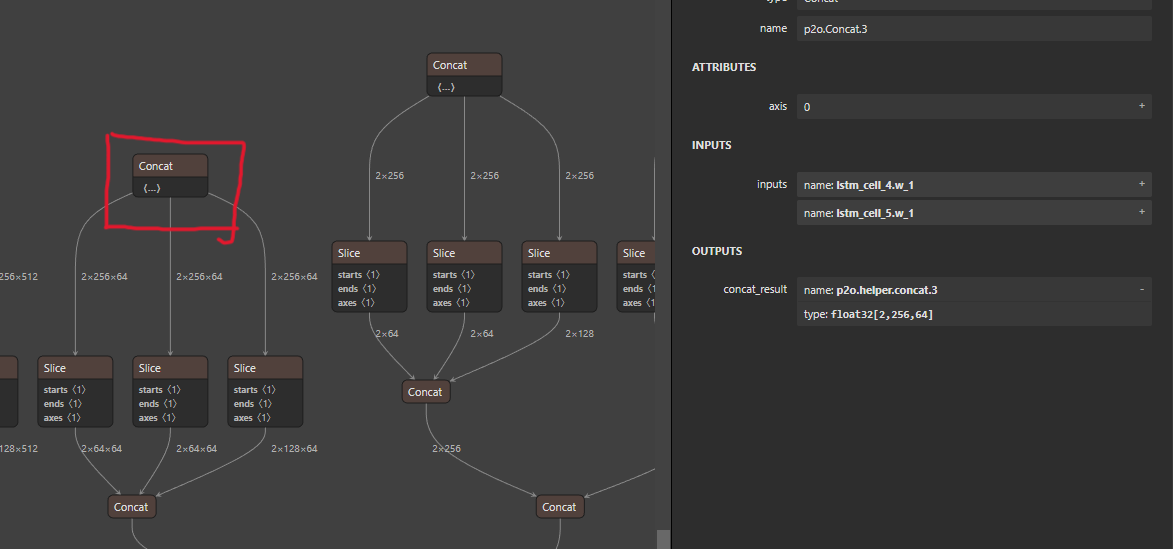

Hi, I recently ran into a problem converting an ONNX model to TensorRT: although the conversion succeeds, the outputs of the two are inconsistent. I used the Polygraphy tool to compare their outputs layer by layer and found that the constant nodes feeding a Concat layer are not initialized correctly in TensorRT, although they are correct in ONNX. I tried TensorRT 8.0, 8.2, and 8.5, and none of these versions fixes the problem. However, when I use onnx-graphsurgeon to constant-fold the ONNX model, TensorRT converts it correctly and the results match. Is there any other way to fix this problem?

(In the images, the ONNX output is x and the TensorRT output is y.)
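To illustrate what the constant-folding workaround does, here is a toy sketch in plain Python. It is not onnx-graphsurgeon's implementation (there, folding is typically done with `graph.fold_constants().cleanup()`); the `Node` class and `fold_constants` function below are hypothetical. The idea: any Concat whose inputs are all constants is evaluated ahead of time and replaced by a single constant, so the builder never has to handle those constant inputs itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical minimal graph node: op type, input names, one output name."""
    op: str
    inputs: List[str]
    output: str
    # Only set for "Constant" nodes: the literal value as a flat list.
    value: Optional[List[float]] = None


def fold_constants(nodes: List[Node]) -> List[Node]:
    """Replace each Concat whose inputs are all constants with one Constant node.

    Assumes `nodes` is topologically sorted and treats Concat as 1-D
    concatenation of flat lists (a simplification of ONNX Concat's `axis`).
    Constants that become unused are left in place; a cleanup pass would drop them.
    """
    known: Dict[str, List[float]] = {}
    folded: List[Node] = []
    for node in nodes:
        if node.op == "Constant" and node.value is not None:
            known[node.output] = node.value
            folded.append(node)
        elif node.op == "Concat" and all(name in known for name in node.inputs):
            # Evaluate the Concat ahead of time and emit the result as a constant.
            value = [x for name in node.inputs for x in known[name]]
            known[node.output] = value
            folded.append(Node("Constant", [], node.output, value))
        else:
            # Anything that depends on runtime inputs is kept unchanged.
            folded.append(node)
    return folded


if __name__ == "__main__":
    graph = [
        Node("Constant", [], "c0", [1.0, 2.0]),
        Node("Constant", [], "c1", [3.0]),
        Node("Concat", ["c0", "c1"], "cat"),
        Node("Relu", ["cat"], "out"),
    ]
    for node in fold_constants(graph):
        print(node)
```

A Concat that mixes a runtime input with constants is left as-is in this sketch, since its value cannot be known before inference.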

Environment

TensorRT Version: 8.0.3/8.2/8.5

NVIDIA GPU: RTX 3090 / NVIDIA Xavier NX

NVIDIA Driver Version:

CUDA Version: 11.3/10.2

CUDNN Version: 8.2

Operating System: Ubuntu

Python Version (if applicable): 3.8

Tensorflow Version (if applicable):

PyTorch Version (if applicable):

Baremetal or Container (if so, version):

Relevant Files

Steps To Reproduce

Env:

- GPU: RTX 3090

- cuDNN: 8.2.0

- TensorRT: 8.0.1.6 / 8.2.3.0 / 8.5.2.2

- CUDA: 11.3

ONNX file:

paddlev2_simp.zip

Polygraphy (version 0.43.1) commands:

    polygraphy run paddlev2_simp.onnx --trt --validate --trt-outputs mark all --save-results=trt_out.json --workspace=256M
    polygraphy run paddlev2_simp.onnx --onnxrt --onnx-outputs mark all --save-results=onnx_out.json
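Polygraphy can compare the two runs itself; purely to illustrate the idea behind the layer-by-layer check, here is a stdlib-only sketch. The `first_mismatch` helper is hypothetical, and it assumes the per-tensor outputs saved in `onnx_out.json` and `trt_out.json` have already been loaded into dicts of flattened floats (loading is not shown), with tensor names listed in the graph's topological order. Walking tensors in that order means the first reported tensor is the earliest point of divergence, which, per the description above, would be the constant inputs of the Concat layer.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def first_mismatch(
    reference: Dict[str, List[float]],
    candidate: Dict[str, List[float]],
    order: Sequence[str],
    rtol: float = 1e-5,
    atol: float = 1e-5,
) -> Optional[Tuple[str, float]]:
    """Return (tensor name, max abs difference) for the first tensor in `order`
    whose values differ beyond tolerance, or None if every shared tensor matches.

    `reference` would hold the ONNX outputs and `candidate` the TensorRT outputs,
    each flattened to a list; tensors missing on either side are skipped.
    """
    for name in order:
        if name not in reference or name not in candidate:
            continue
        ref, cand = reference[name], candidate[name]
        if len(ref) != len(cand):
            # A shape mismatch counts as an infinitely large difference.
            return name, math.inf
        if not all(math.isclose(x, y, rel_tol=rtol, abs_tol=atol) for x, y in zip(ref, cand)):
            return name, max(abs(x - y) for x, y in zip(ref, cand))
    return None


if __name__ == "__main__":
    # Made-up numbers for illustration only, not results from this issue.
    onnx_out = {"c0": [1.0, 2.0], "cat": [1.0, 2.0, 3.0], "out": [6.0]}
    trt_out = {"c0": [0.0, 0.0], "cat": [0.0, 0.0, 3.0], "out": [3.0]}
    print(first_mismatch(onnx_out, trt_out, ["c0", "cat", "out"]))
```

Tolerances are checked with `math.isclose`, so a tensor only counts as mismatched when some element exceeds both the relative and absolute thresholds.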