
meta tensor basic profiling #4223


Branch updated from ebb9db6 to 45a0c9a.


The current results on macOS are: … I think we should improve the `__torch_function__` override.


Thanks for this. It might be worth noting that 177 µs is still pretty quick, and presumably the difference between the classes will shrink for less trivial tasks (…).


Locally (also macOS), I got this for … and this for … I agree, though, that we should try to optimise where possible!

P.S. I think displaying the mean might be more useful than the minimum:

`bench_time = float(torch.sum(torch.Tensor(bench_times))) / (NUM_REPEATS * NUM_REPEAT_OF_REPEATS)`

instead of:

`bench_time = float(torch.min(torch.Tensor(bench_times))) / NUM_REPEATS`
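As a rough illustration of how the two `bench_time` formulas differ, here is a minimal stdlib-only sketch. It assumes `bench_times` holds one total time per outer repeat, each covering `NUM_REPEATS` calls, as in the snippets above; the helper name `per_call_summary` and the `timeit` workload are hypothetical.

```python
import timeit


def per_call_summary(bench_times, num_repeats):
    """Return (min, mean) time per call from per-repeat totals.

    bench_times: one total time per outer repeat (hypothetical layout matching
    the snippets above), each total covering num_repeats calls.
    """
    # Minimum: the fastest repeat divided by the number of calls it contains.
    best = min(bench_times) / num_repeats
    # Mean: all measured time divided by all calls
    # (num_repeats * number of outer repeats).
    mean = sum(bench_times) / (num_repeats * len(bench_times))
    return best, mean


if __name__ == "__main__":
    NUM_REPEATS = 1000
    NUM_REPEAT_OF_REPEATS = 5
    # timeit.repeat returns one total time per repeat.
    times = timeit.repeat("sum(range(100))", repeat=NUM_REPEAT_OF_REPEATS, number=NUM_REPEATS)
    best, mean = per_call_summary(times, NUM_REPEATS)
    print(f"min: {best * 1e6:.2f} us, mean: {mean * 1e6:.2f} us")
```

The minimum is always less than or equal to the mean. The Python `timeit` documentation suggests the minimum as the most useful single number, because slower repeats are usually caused by interference from other processes. The mean keeps that noise, which is why it can blur small differences between classes.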


Using the mean makes things a little less clear-cut:

| Type | Mean time (µs) | Standard deviation (µs) |
|---|---|---|
| Tensor | 5256.9 | 383.2 |
| SubTensor | 5128.5 | 132.8 |
| SubWithTorchFunc | 5159.4 | 241.4 |
| MetaTensor | 5491.2 | 325.0 |
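To put these numbers in perspective, each type's mean can be compared with the plain `Tensor` mean and with the spread of the `Tensor` measurements. A short sketch using the reported means and standard deviations, rounded to 0.1 µs; the variable names and the "within one standard deviation" rule of thumb are illustrative assumptions, not a formal significance test.

```python
# Mean and standard deviation per type in microseconds, copied from the
# results above and rounded to 0.1 us.
results = {
    "Tensor": (5256.9, 383.2),
    "SubTensor": (5128.5, 132.8),
    "SubWithTorchFunc": (5159.4, 241.4),
    "MetaTensor": (5491.2, 325.0),
}

baseline_mean, baseline_std = results["Tensor"]

for name, (mean, std) in results.items():
    diff = mean - baseline_mean
    rel = diff / baseline_mean
    # Rule of thumb: a difference smaller than the baseline's standard
    # deviation is hard to separate from run-to-run noise.
    within_noise = abs(diff) < baseline_std
    print(f"{name:>16}: {rel:+.1%} vs Tensor, within one std of baseline: {within_noise}")
```

With these numbers, MetaTensor is roughly 4.5% slower than Tensor on average, but every difference, including that one, is smaller than one standard deviation of the Tensor timings, which matches the observation that the mean is less clear-cut.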


Sure, this is mainly to show the overhead of creating/copying the meta info. Good point on the metric; I'll try median values as well.
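A minimal sketch of why the median can be a useful middle ground between the minimum and the mean: per-call statistics are computed with the standard library `statistics` module, and a single slow repeat (for example, one disturbed by another process) pulls the mean up while leaving the median unchanged. The helper name `per_call_stats` and the sample timings are hypothetical.

```python
import statistics


def per_call_stats(bench_times, num_repeats):
    """Summarize per-repeat totals as per-call min, median, mean and stdev."""
    per_call = [t / num_repeats for t in bench_times]
    return {
        "min": min(per_call),
        "median": statistics.median(per_call),
        "mean": statistics.mean(per_call),
        # Sample standard deviation; requires at least two repeats.
        "stdev": statistics.stdev(per_call),
    }


# Hypothetical totals (seconds) for 5 repeats of 1000 calls each;
# the last repeat is an outlier.
stats = per_call_stats([5.0, 5.1, 5.2, 5.1, 9.0], num_repeats=1000)
print(stats)
```

Without the outlier the median of the remaining four repeats is also 5.1 ms per 1000 calls, whereas the mean rises from 5.1 ms to about 5.9 ms once the slow repeat is included.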


Yeah, maybe we can use something like …


Here are some preliminary results using …


Thanks, it looks interesting. Perhaps we can come back to the optimisation topic once `MetaTensor` is in good shape, what do you think? (I'm still looking into this deepcopy issue: https://github.com/Project-MONAI/MONAI/runs/6287828695?check_suite_focus=true)


@rijobro, could you share the cProfile commands? I can include them in the PR if they're simple to set up. I think this PR is useful for monitoring performance during development.


Sorry for slow reply @wyli . Here's the profile code, requires from monai.data.meta_tensor import MetaTensor

import torch

import cProfile

if __name__ == "__main__":

n_chan = 3

for hwd in (10, 200):

shape = (n_chan, hwd, hwd, hwd)

a = MetaTensor(torch.rand(shape), meta={"affine": torch.eye(4) * 2, "fname": "something1"})

b = MetaTensor(torch.rand(shape), meta={"affine": torch.eye(4) * 3, "fname": "something2"})

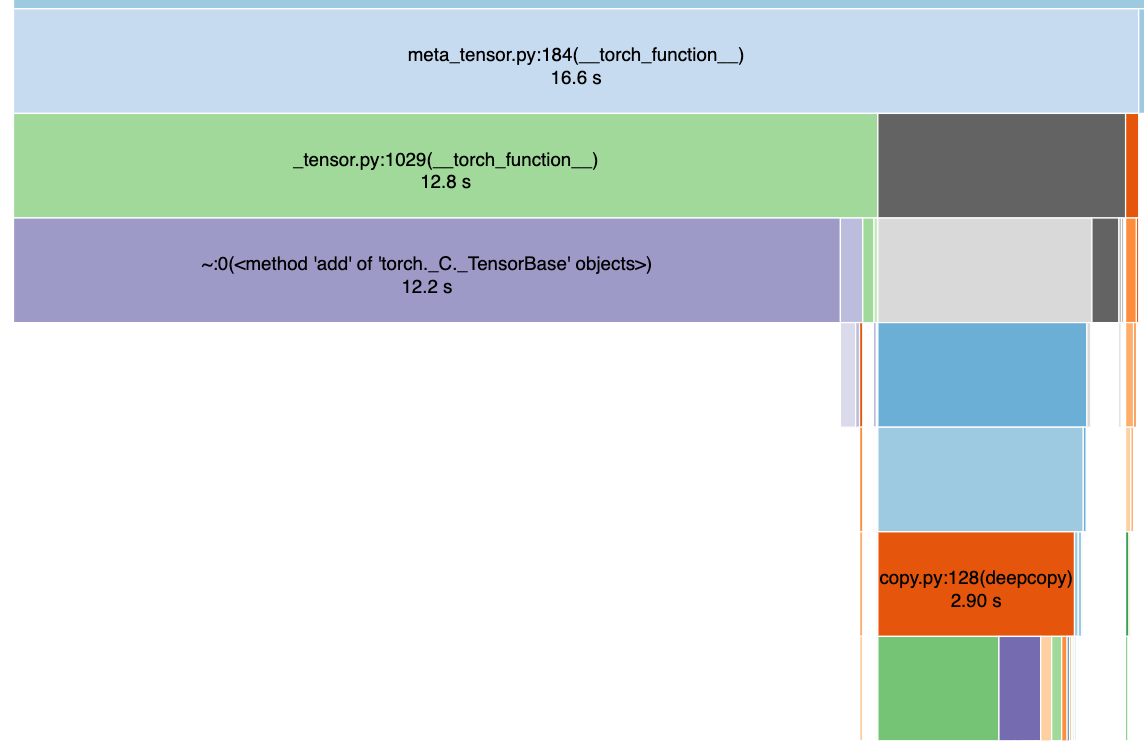

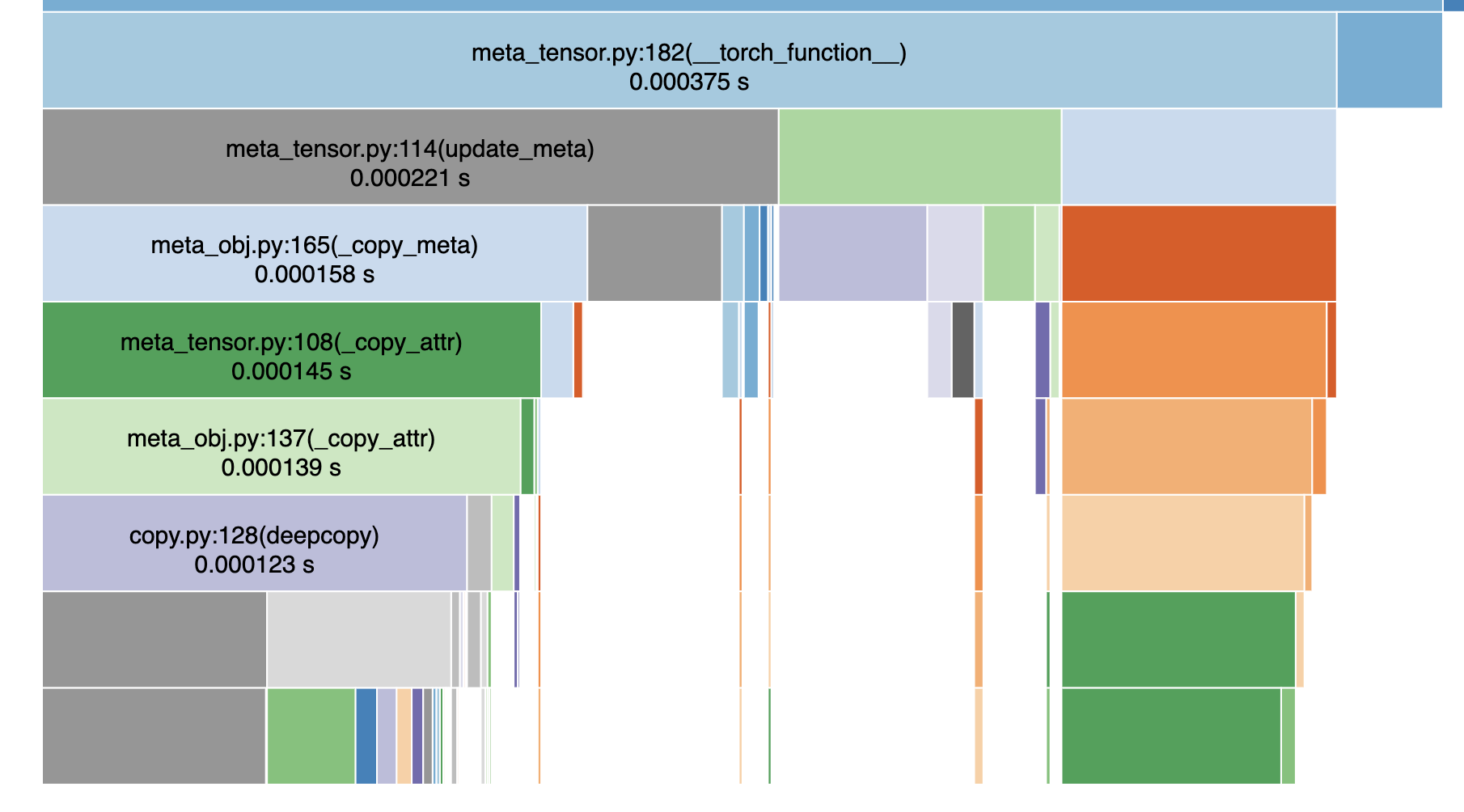

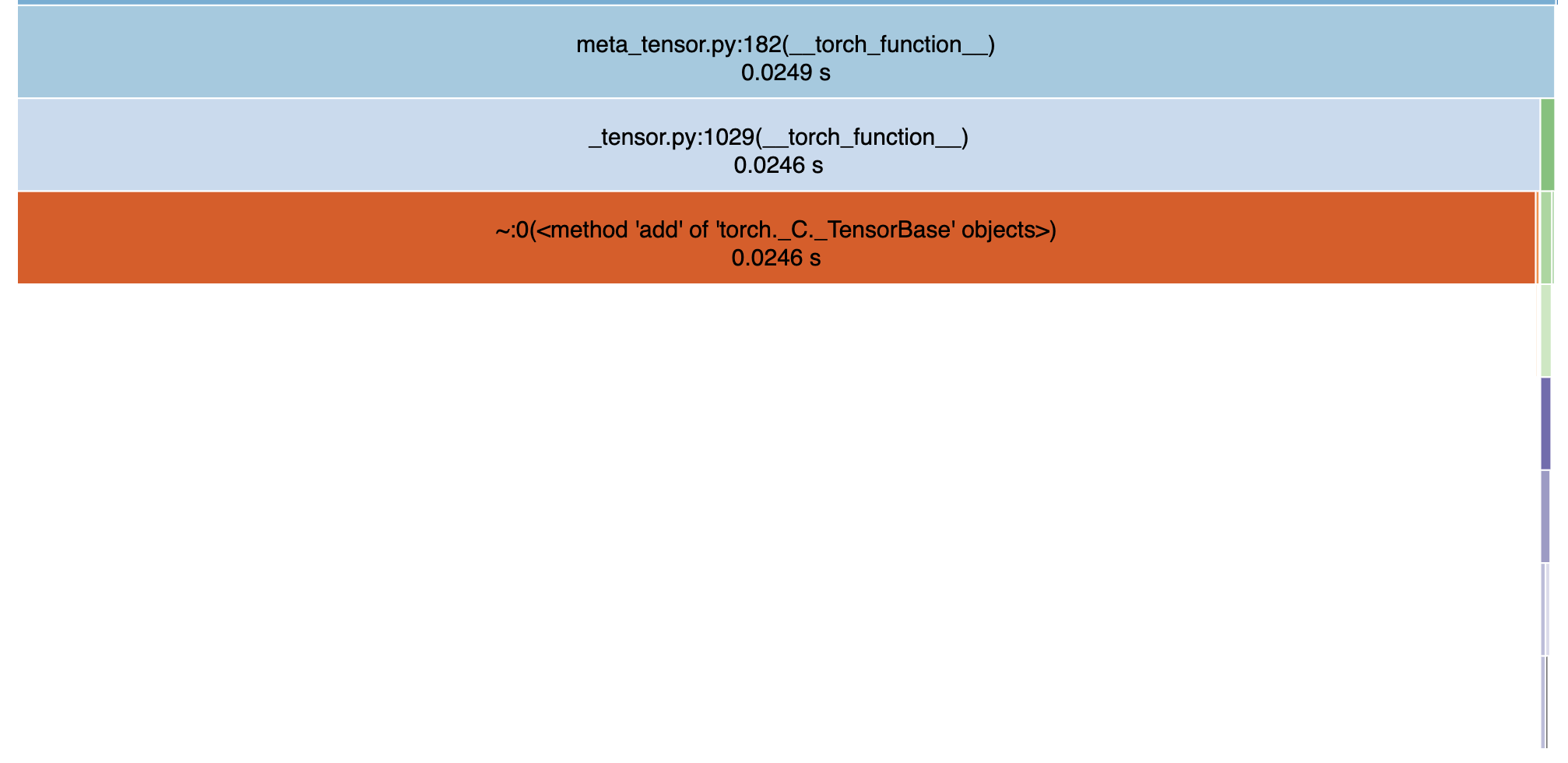

cProfile.run("c = a + b", filename=f"out_{hwd}.prof")(3, 10, 10, 10)(3, 200, 200, 200) |
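The `.prof` files written by the profiling script can also be inspected as text with the standard library `pstats` module. A minimal sketch, assuming the profiles were saved with `cProfile` as in that script; the helper names `profile_call` and `top_functions` and the toy `work` function are hypothetical, with `work` standing in for `c = a + b`.

```python
import cProfile
import io
import pstats


def profile_call(func, filename):
    """Profile a single call of func and save the stats to filename."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func)
    profiler.dump_stats(filename)
    return result


def top_functions(filename, limit=10):
    """Return the pstats report for the `limit` entries with highest cumulative time."""
    stream = io.StringIO()
    stats = pstats.Stats(filename, stream=stream)
    stats.strip_dirs().sort_stats("cumulative").print_stats(limit)
    return stream.getvalue()


def work():
    # Toy workload standing in for the tensor addition being profiled.
    return sum(i * i for i in range(100_000))


if __name__ == "__main__":
    profile_call(work, "out_example.prof")
    print(top_functions("out_example.prof"))
```

For the files produced by the profiling script, `top_functions("out_10.prof")` and `top_functions("out_200.prof")` would print the corresponding reports sorted by cumulative time.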

Signed-off-by: Wenqi Li <wenqil@nvidia.com>

Branch updated from 66a088e to 3a81148.


Hi @rijobro, I've added the cProfile script here. Do you have any other concerns about this PR?


@wyli, no, looks good to me. Thanks!

Signed-off-by: Wenqi Li <wenqil@nvidia.com>

Description

Basic profiling of `__torch_function__` in `MetaTensor`; it needs to be run manually. The code is based on https://github.com/pytorch/pytorch/tree/v1.11.0/benchmarks/overrides_benchmark

Status

Ready

Types of changes

`./runtests.sh -f -u --net --coverage`.

`./runtests.sh --quick --unittests --disttests`.

`make html` command in the `docs/` folder.