DE Bootcamp Project Two

What would you like people to do with the data you have produced? Are you supporting BI or ML use-cases?

The goal is to provide exchange rate data for BI purposes.

What users would find your data useful?

Investment bankers.

What questions are you trying to solve with your data?

- 7-day moving average for different currencies, for example AUD, USD, GBP and INR.

- Daily percentage change in exchange rates.

- General price trends, for example around economic events.
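For reference, the two numeric metrics above can be written out as follows, where $r_t$ is a currency's exchange rate against the base currency on day $t$. This assumes a trailing 7-day window and a change measured against the previous available day; the project's DBT models may align the window differently.

```latex
\mathrm{MA}_7(t) = \frac{1}{7}\sum_{i=0}^{6} r_{t-i}
\qquad
\Delta\%(t) = \frac{r_t - r_{t-1}}{r_{t-1}} \times 100
```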

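What datasets are you sourcing from?

Exchange rate data from the Exchange Rates API, extracted with Airbyte. The project repository is structured as follows: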

├── AirflowDAGs
├── Data Integration
├── docker
├── ExchangeRates
│   ├── analyses
│   ├── dbt_packages
│   │   └── dbt_utils
│   │       ├── docs
│   │       │   └── decisions
│   │       ├── etc
│   │       ├── integration_tests
│   │       │   ├── ci
│   │       │   ├── data
│   │       │   │   ├── cross_db
│   │       │   │   ├── datetime
│   │       │   │   ├── etc
│   │       │   │   ├── geo
│   │       │   │   ├── materializations
│   │       │   │   ├── schema_tests
│   │       │   │   ├── sql
│   │       │   │   └── web
│   │       │   ├── macros
│   │       │   ├── models
│   │       │   │   ├── cross_db_utils
│   │       │   │   ├── datetime
│   │       │   │   ├── generic_tests
│   │       │   │   ├── geo
│   │       │   │   ├── materializations
│   │       │   │   ├── sql
│   │       │   │   └── web
│   │       │   └── tests
│   │       │       ├── generic
│   │       │       ├── jinja_helpers
│   │       │       └── sql
│   │       ├── macros
│   │       │   ├── cross_db_utils
│   │       │   │   └── deprecated
│   │       │   ├── generic_tests
│   │       │   ├── jinja_helpers
│   │       │   ├── materializations
│   │       │   ├── sql
│   │       │   └── web
│   │       └── tests
│   │           └── functional
│   │               ├── cross_db_utils
│   │               └── data_type
│   ├── logs
│   ├── macros
│   ├── models
│   │   ├── Gold
│   │   └── Silver
│   ├── seeds
│   ├── snapshots
│   └── tests
└── logs

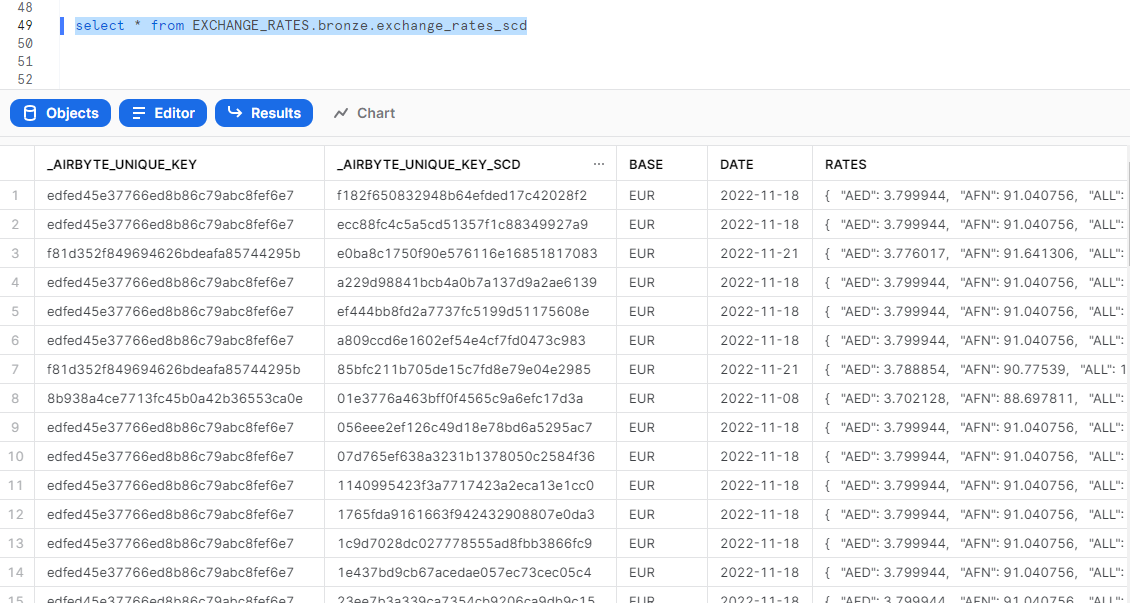

An Exchange Rates API connector is available in Airbyte. The data is extracted using API credentials and loaded into the Bronze layer in Snowflake, producing three raw tables that contain similar data:

- Exchange_Rates

- Exchange_Rates_Rates

- Exchange_Rates_SCD

The Exchange_Rates_SCD table contains data such as the Base currency, Date and Rates at a daily level.

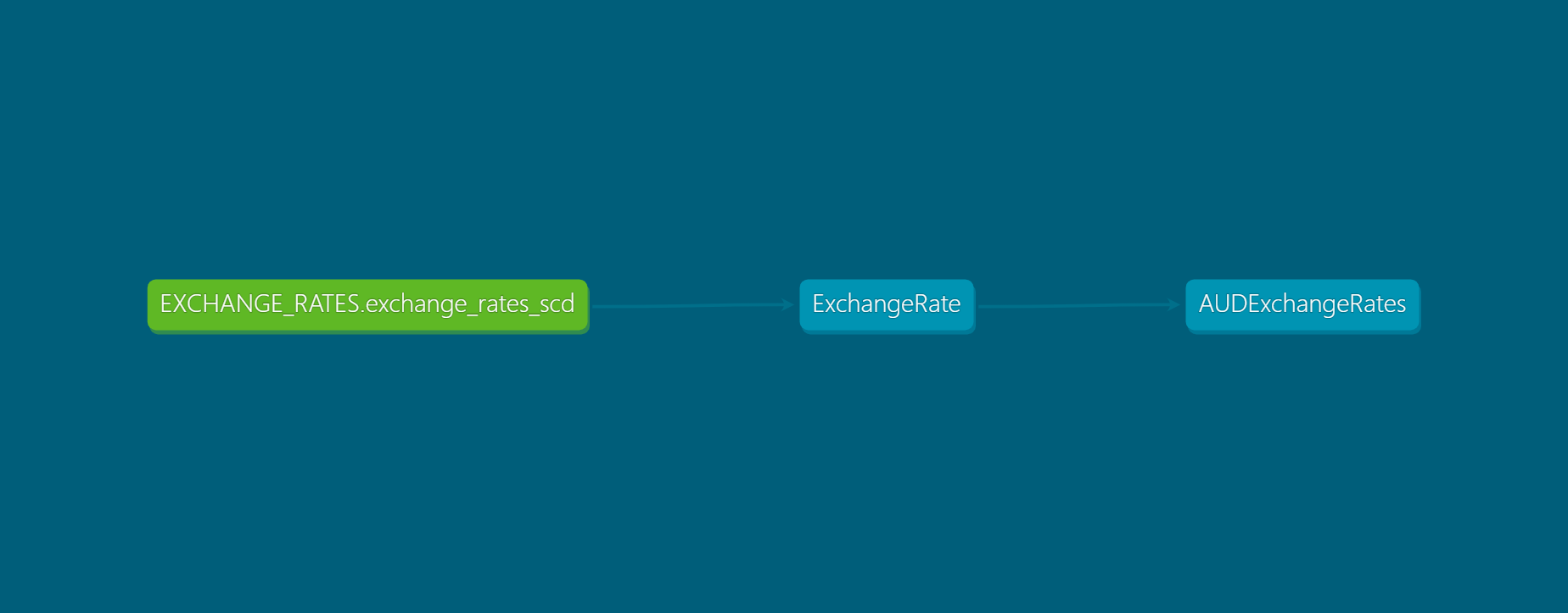

Data from the Exchange_Rates_SCD table is incrementally loaded into the Silver layer at a daily interval. At this stage, the prices are unpacked from JSON and stored in individual columns for the required currencies. The resulting table contains columns such as Base, Date, AUD_Daily_Price, BTC_Daily_price, etc.
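The Silver step (unpacking plus incremental loading) can be sketched in plain Python as below. In the project this happens in DBT on Snowflake; the code only illustrates the logic. It assumes each raw record is JSON with `base`, `date` and a `rates` object keyed by currency code; the currency list and the function names `unpack_rates` and `incremental_rows` are hypothetical.

```python
import json
from datetime import date
from typing import Iterable, Iterator, List, Optional

# Hypothetical selection of currencies kept as individual Silver columns.
REQUIRED_CURRENCIES: List[str] = ["AUD", "BTC", "GBP", "INR", "USD"]


def unpack_rates(raw_json: str, currencies: List[str] = REQUIRED_CURRENCIES) -> dict:
    """Flatten one raw record's JSON "rates" object into per-currency columns."""
    record = json.loads(raw_json)
    row = {"Base": record["base"], "Date": date.fromisoformat(record["date"])}
    for code in currencies:
        # A currency missing from the payload becomes None (NULL in the warehouse).
        row[f"{code}_Daily_Price"] = record["rates"].get(code)
    return row


def incremental_rows(raw_records: Iterable[str], last_loaded: Optional[date]) -> Iterator[dict]:
    """Yield only rows dated after the latest date already loaded into Silver."""
    for raw in raw_records:
        row = unpack_rates(raw)
        if last_loaded is None or row["Date"] > last_loaded:
            yield row
```

Passing `last_loaded=None` behaves like a first (full) load; on later daily runs, passing the maximum Date already in Silver keeps each run from reprocessing old days.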

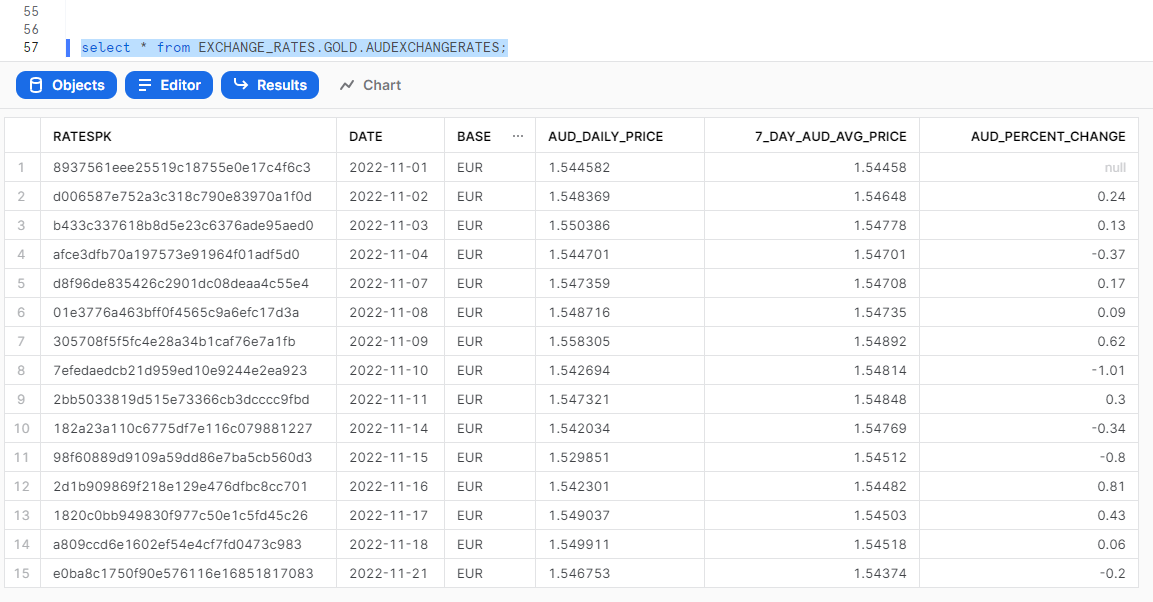

The Gold layer table is a full-refresh table, rebuilt on each run. The transformations applied at this stage are:

- All currencies except AUD are dropped.

- A new column is created for the 7-day rolling average.

- Another new column is created for the daily price change.

- Only active records (where row_active_ind = 1) are used for the calculations.
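The Gold transformations can be illustrated with the Python sketch below. It is not the project's DBT model (which would typically use SQL window functions on Snowflake); the dataclasses, field names and `build_gold` are hypothetical. It assumes the rolling window counts rows present in the data rather than calendar days, and that the daily change is a percentage against the previous active row.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class SilverRow:
    rate_date: date
    aud_daily_price: float
    row_active_ind: int  # 1 = current version of the record


@dataclass
class GoldRow:
    rate_date: date
    aud_daily_price: float
    aud_7day_avg: float                     # trailing mean over up to 7 rows
    aud_daily_pct_change: Optional[float]   # None for the first row


def build_gold(rows: List[SilverRow], window: int = 7) -> List[GoldRow]:
    """Rebuild the whole Gold table from Silver (full refresh)."""
    # Keep only active records, ordered by date.
    active = sorted((r for r in rows if r.row_active_ind == 1), key=lambda r: r.rate_date)
    gold: List[GoldRow] = []
    for i, row in enumerate(active):
        # Current row plus up to window-1 preceding rows,
        # like SQL "ROWS BETWEEN 6 PRECEDING AND CURRENT ROW".
        win = active[max(0, i - window + 1): i + 1]
        avg = sum(w.aud_daily_price for w in win) / len(win)
        if i == 0:
            pct = None
        else:
            prev = active[i - 1].aud_daily_price
            pct = (row.aud_daily_price - prev) / prev * 100
        gold.append(GoldRow(row.rate_date, row.aud_daily_price, avg, pct))
    return gold
```

Because the table is fully refreshed, the function recomputes every row from Silver rather than appending, which keeps late corrections (new active versions) reflected in the averages.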

Tech stack: Exchange Rates API, Airbyte, Snowflake, DBT, Airflow.

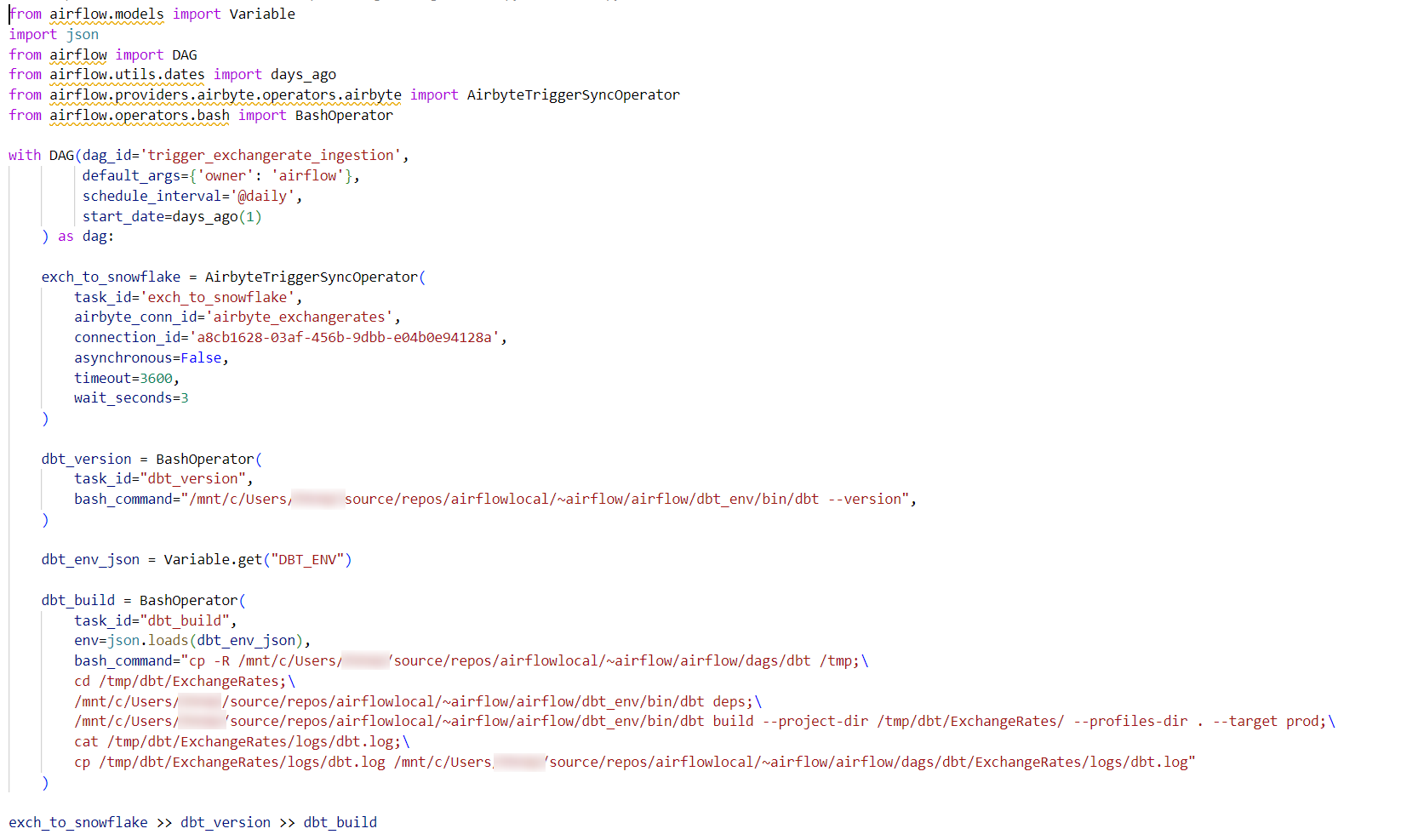

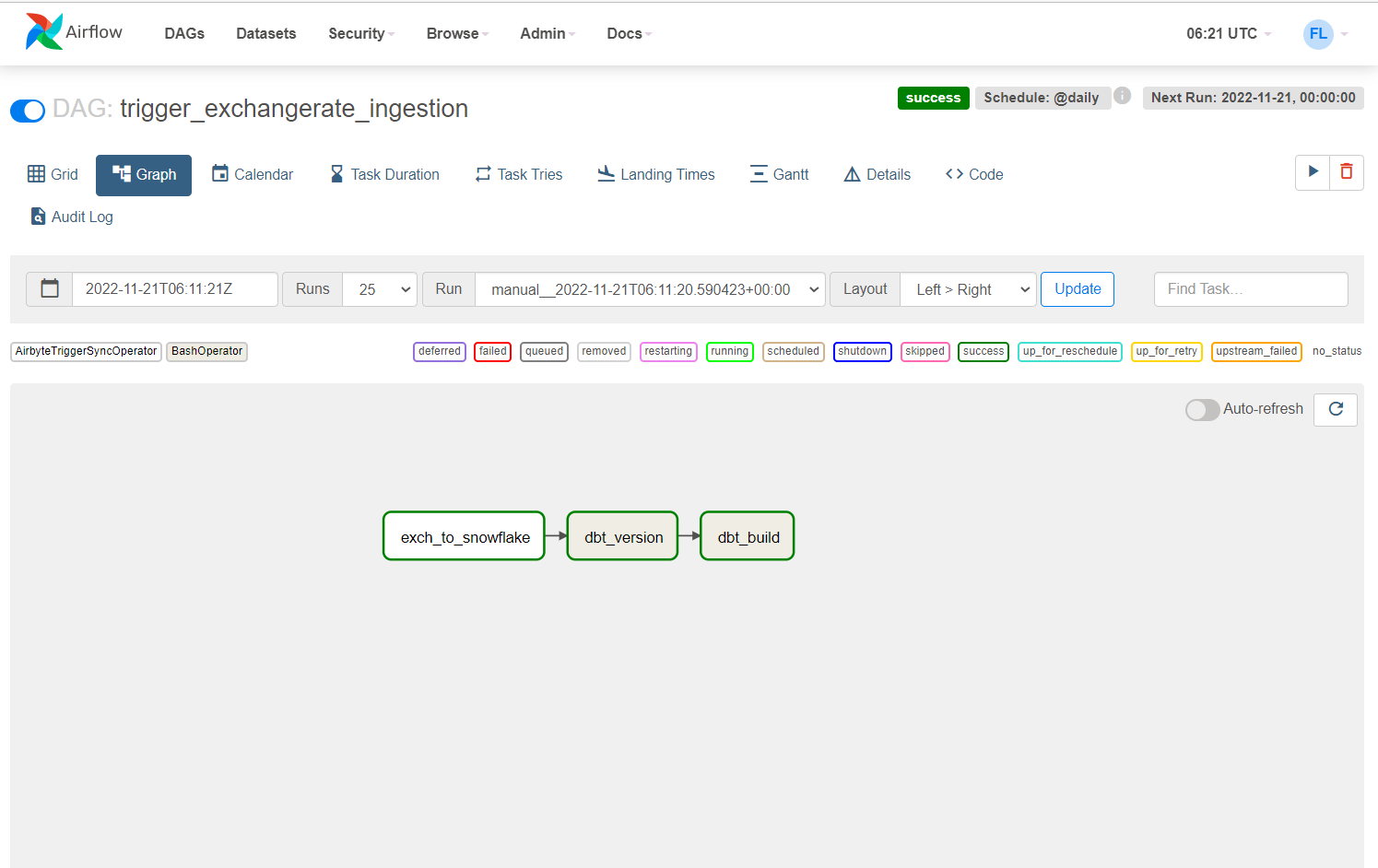

The Airflow DAG orchestrates the pipeline: it triggers ingestion (querying the API and loading the data into Snowflake via the Airbyte connection), then executes the ExchangeRates DBT project on the Snowflake data to promote it to the Silver and Gold layers.
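The DBT part of the DAG can be sketched as an ordered pair of `dbt run` commands, one per layer, using the `models/Silver` and `models/Gold` folders from the repository tree. This is an illustration, not the contents of dag_exchangerates.py; the project path and function names are hypothetical, and it assumes the `dbt` CLI is on the PATH.

```python
import subprocess
from typing import List

# Hypothetical location of the ExchangeRates DBT project in the Airflow environment.
DBT_PROJECT_DIR = "/opt/airflow/ExchangeRates"


def dbt_commands(project_dir: str = DBT_PROJECT_DIR) -> List[List[str]]:
    """Commands that promote data to Silver first, then Gold."""
    return [
        ["dbt", "run", "--project-dir", project_dir, "--select", "path:models/Silver"],
        ["dbt", "run", "--project-dir", project_dir, "--select", "path:models/Gold"],
    ]


def run_dbt(project_dir: str = DBT_PROJECT_DIR) -> None:
    for cmd in dbt_commands(project_dir):
        # check=True raises on failure, so Gold is never built on a failed Silver run.
        subprocess.run(cmd, check=True)
```

A single `dbt run` would also build Silver before Gold from the `ref()` dependencies; splitting by layer is mainly useful when each layer should appear as its own Airflow task.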

Fill in the blanks in the code as indicated in the file (see the example below for guidance), then add dag_exchangerates.py to your Airflow DAG folder.

- A working Airflow environment with DBT installed and working as a sub-environment.

- Airbyte running locally, either natively or dockerized, on port 8000. If Airbyte is dockerized locally, set server=host.docker.internal; if it is hosted locally, use server=localhost; if it is cloud-hosted, use the appropriate IP address for your configuration.

- A Snowflake account.

- Make sure your Docker images build and run correctly.
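The server rule above can be captured in a small helper that builds the Airbyte address for the Airflow connection. The mode names, function names and URL scheme are hypothetical; only the host values and port 8000 come from the requirements.

```python
from typing import Optional

AIRBYTE_PORT = 8000


def airbyte_server(mode: str, cloud_ip: Optional[str] = None) -> str:
    """Pick the Airbyte host based on how Airbyte is deployed."""
    if mode == "docker":
        # Airbyte dockerized on the same machine.
        return "host.docker.internal"
    if mode == "local":
        # Airbyte hosted directly on the local machine.
        return "localhost"
    if mode == "cloud":
        if not cloud_ip:
            raise ValueError("cloud mode needs the Airbyte server's IP address")
        return cloud_ip
    raise ValueError(f"unknown mode: {mode!r}")


def airbyte_base_url(mode: str, cloud_ip: Optional[str] = None, port: int = AIRBYTE_PORT) -> str:
    """Full base URL, e.g. for checking that Airbyte is reachable."""
    return f"http://{airbyte_server(mode, cloud_ip)}:{port}"
```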