
Allow AutoMLSearch to handle Unknown type #2477




| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -262,7 +262,7 @@ def test_per_column_imputer_woodwork_custom_overrides_returned_by_components( | |

| override_types = [Integer, Double, Categorical, NaturalLanguage, Boolean] | ||

| for logical_type in override_types: | ||

| # Column with Nans to boolean used to fail. Now it doesn't | ||

| - if has_nan and logical_type == Boolean: | ||

| + if has_nan and logical_type in [Boolean, NaturalLanguage]: | ||

|

Contributor

Author

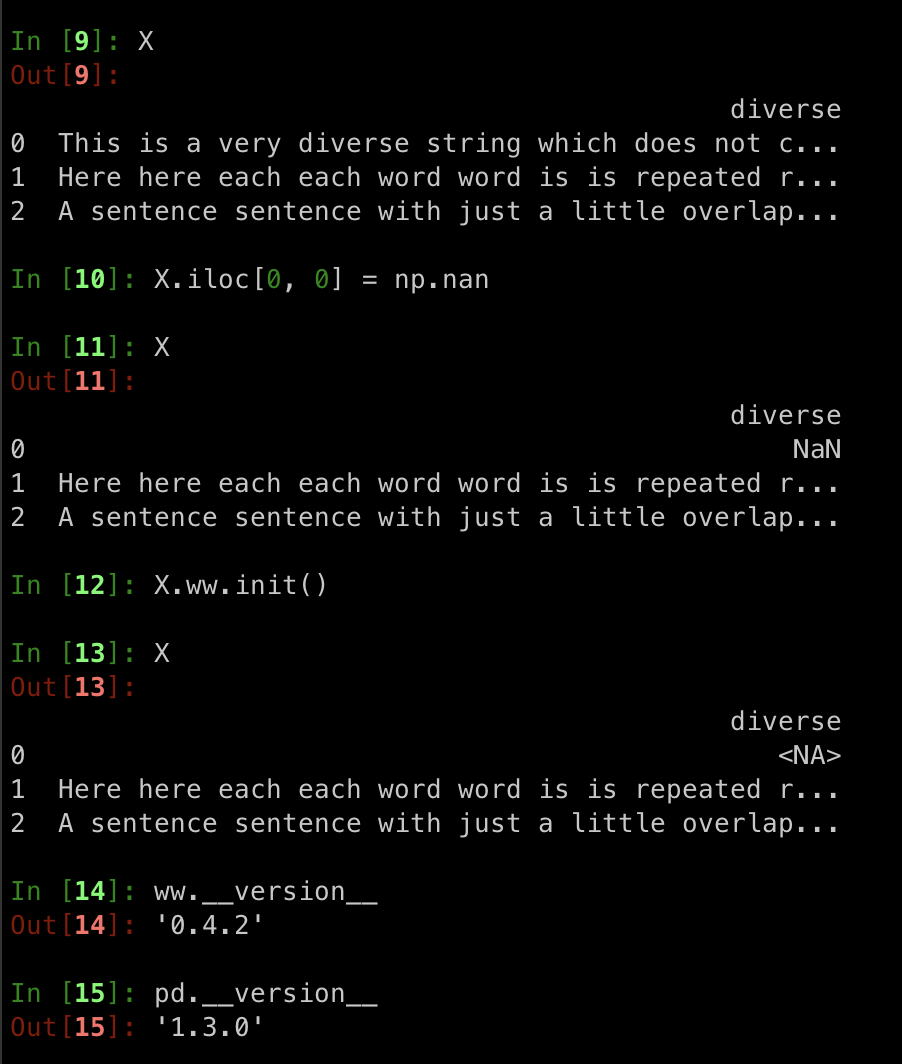

Leave out NaturalLanguage since casting this will result in

Contributor

But how come this wouldn't happen before? Woodwork doesn't convert

Contributor

Author

@freddyaboulton I believe it's due to the pandas upgrade!

Contributor

Thanks for looking into this, @bchen1116. Let's list this as a breaking change for now. I imagine we might want to file an issue to discuss whether any changes are needed in the SimpleImputer. If users run it on natural language after this PR, they'll get a stack trace they didn't get before.

Contributor

Author

@freddyaboulton updated the release notes with the breaking change and filed the issue here! |

||

| continue | ||

| try: | ||

| X = X_df.copy() | ||

|

|

||
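For readers skimming the hunk above, here is a minimal sketch of what the updated skip condition does. The five classes are hypothetical stand-ins defined locally so the snippet runs on its own (the real test presumably imports the logical types from Woodwork), and `types_exercised` is an illustrative helper, not a function from the PR.

```python
# Hypothetical stand-ins for the logical types named in the diff;
# they carry no behavior and exist only so the sketch is self-contained.
class Integer: ...
class Double: ...
class Categorical: ...
class NaturalLanguage: ...
class Boolean: ...

override_types = [Integer, Double, Categorical, NaturalLanguage, Boolean]


def types_exercised(has_nan):
    """Return the names of the logical types the loop would not skip."""
    kept = []
    for logical_type in override_types:
        # Same condition as the added line in the diff: with NaNs present,
        # both Boolean and NaturalLanguage overrides are skipped.
        if has_nan and logical_type in [Boolean, NaturalLanguage]:
            continue
        kept.append(logical_type.__name__)
    return kept


print(types_exercised(has_nan=True))   # ['Integer', 'Double', 'Categorical']
print(types_exercised(has_nan=False))  # all five type names
```

Under these assumptions, the only behavioral difference from the old `== Boolean` check is that NaturalLanguage is also skipped when the column contains NaNs, which matches the breaking change discussed in the review thread.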
