-

Notifications

You must be signed in to change notification settings - Fork 13.8k

[FLINK-25256][streaming] Externally induced sources replay barriers received over RPC instead of inventing them out of thin air. #19138

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

8ded29b to

99ed2d6

Compare

...ng-java/src/main/java/org/apache/flink/streaming/runtime/tasks/SourceOperatorStreamTask.java

Show resolved

Hide resolved

| // note that at this point, we should probably not emit more data such that data is | ||

| // properly aligned | ||

| // however, unless we receive a reliable checkpoint abort RPC, this may deadlock |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We should probably discuss if this is the best choice.

| // cleanup any old checkpoint that was cancelled before trigger | ||

| triggeredCheckpoints.headSet(checkpointMetaData.getCheckpointId()).clear(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The cleanup (and the one in #trigger) don't work well with concurrent checkpoints. Do we have a way to determine max concurrent checkpoints or can we actually rely on abortCheckpoint?

| new UntriggeredCheckpoint(checkpointMetaData, checkpointOptions)); | ||

| triggerFuture.complete(isRunning()); | ||

| } else { | ||

| // not externally induced or trigger already received (rare case) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I guess, the comment is wrong now? It is only trigger already received (rare case), right?

...ng-java/src/main/java/org/apache/flink/streaming/runtime/tasks/SourceOperatorStreamTask.java

Outdated

Show resolved

Hide resolved

...ng-java/src/main/java/org/apache/flink/streaming/runtime/tasks/SourceOperatorStreamTask.java

Show resolved

Hide resolved

dawidwys

left a comment

dawidwys

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How hard would it be to add a test for the blocking/unblocking of the externally induced source?

...ng-java/src/main/java/org/apache/flink/streaming/runtime/tasks/SourceOperatorStreamTask.java

Outdated

Show resolved

Hide resolved

| super.triggerCheckpointAsync(checkpointMetaData, checkpointOptions); | ||

| /** Remove temporary data about a canceled checkpoint. */ | ||

| private void cleanupCheckpoint(long checkpointId) { | ||

| assert (mailboxProcessor.isMailboxThread()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Shouldn't we potentially unblock the input here? If the only pending checkpoint was aborted/declined/cancelled?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are absolutely right.

d9081db to

f993e8f

Compare

I have added assertions into the main test method that cover that. Please check if you think I should have additional test cases. |

dawidwys

left a comment

dawidwys

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks fine to me now.

7a399ea to

5e263a6

Compare

|

This PR has been verified by the flink-pravega maintainers to work on their tests for checkpoints (savepoint test pending). |

crazyzhou

left a comment

crazyzhou

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

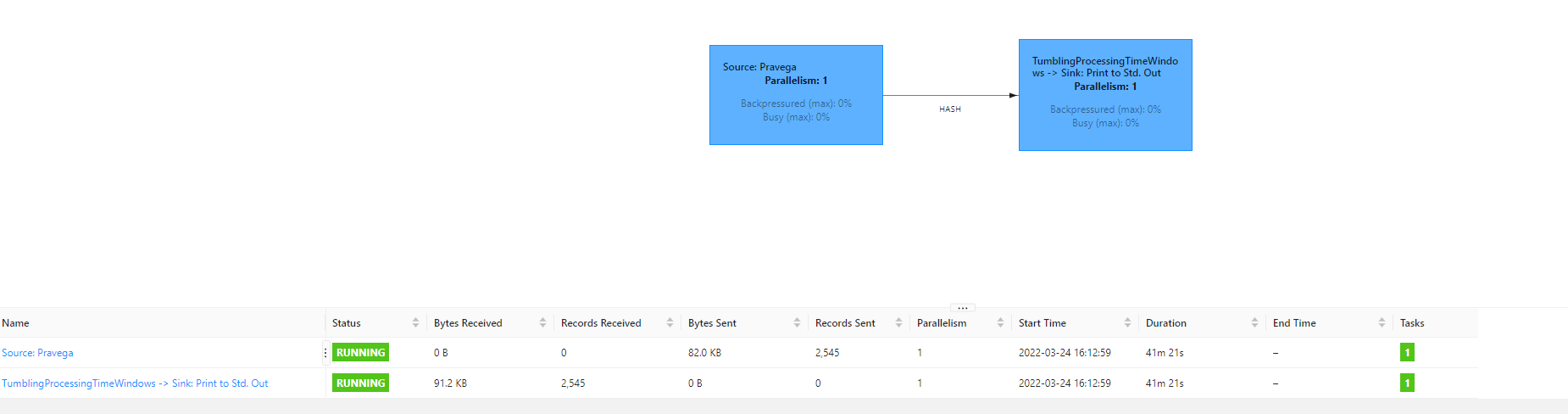

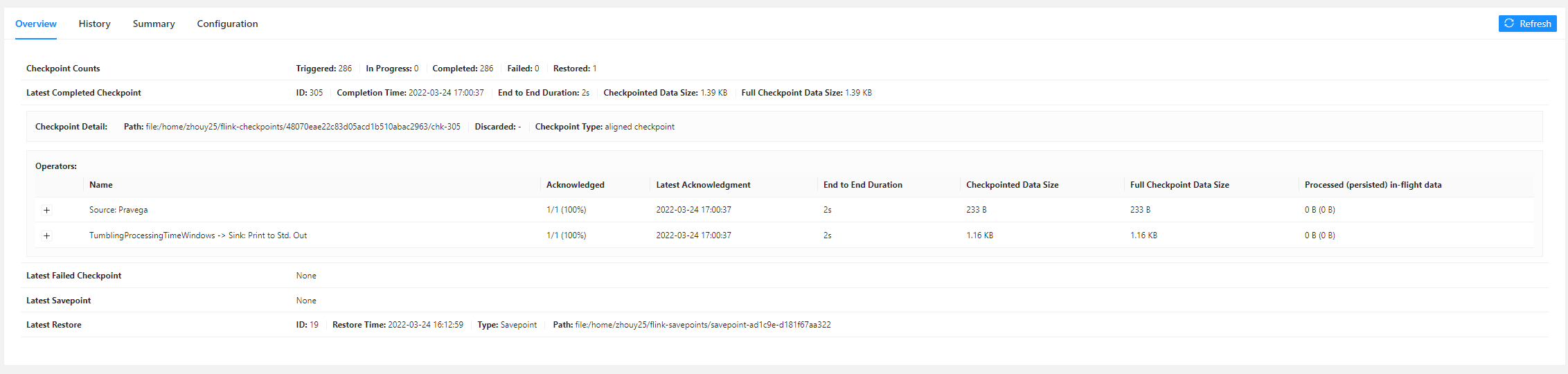

I have tested with basic Pravega reader application savepointing locally with a rocksdb state backend, with a simple app

job graph:

After this fix, this app can successfully do the stop-with-savepoint while it failed before.

It can recover nicely with the savepoint:

The application is now only having _metadata file in each checkpoint and savepoint, so still trying some more complicated cases to see I can reproduce the issue.

|

On the matter of writing into separate files instead of keeping data inside of the metadata, you might want to have a look at: |

crazyzhou

left a comment

crazyzhou

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have tested with an app with a larger state, here is the savepoint structure:

:~/flink-savepoints/savepoint-9a7e45-0215c1d6f545$ ls

9c355659-2475-4402-a5e7-3450c70394b4 _metadata

The application with Pravega source can both cancel and stop with savepoint nicely, and can successfully recover from that.

…eceived over RPC instead of inventing them out of thin air. This change preserves the CheckpointOptions and properly integrates user-triggered snapshots and workflows with more than one source. The externally induced source now merely delays the barrier instead of being able to insert one at a whim which would never work in aforementioned setups.

What is the purpose of the change

Externally induced sources are currently ill-defined and have a lot of unnecessary limitations. This PR addresses it by explicitly only holding back checkpoint barriers until the external source induces it.

While this approach seemingly limits the way externally induce sources work (they can't trigger a checkpoint on their own anymore), it actually explicitly supports the only plausible way. Sources simply can't trigger a checkpoint on their own - the checkpoint coordinator needs to track it and multiple sources need the coordinator to work at all (or else they deadlock).

Brief change log

Verifying this change

Expanded the original unit test to check that the barrier is correctly relayed.

Does this pull request potentially affect one of the following parts:

@Public(Evolving): (yes / no)Documentation