Description

Tips before filing an issue

- Have you gone through our FAQs?
- Join the mailing list to engage in conversations and get faster support at dev-subscribe@hudi.apache.org.
- If you have triaged this as a bug, then file an issue directly.

Describe the problem you faced

A clear and concise description of the problem.

To Reproduce

Steps to reproduce the behavior:

Expected behavior

A clear and concise description of what you expected to happen.

Environment Description

- Hudi version : 0.11.1
- Spark version :
- Hive version : 2.11
- Hadoop version : 3.0
- Storage (HDFS/S3/GCS..) : hdfs
- Running on Docker? (yes/no) : no

Additional context


"  'connector' = 'hudi',\n" +
"  'path' = %s,\n" +
"  'table.type' = 'COPY_ON_WRITE',\n" +
"  'hoodie.datasource.write.recordkey.field' = %s,\n" +
"  'write.tasks' = %s,\n" +
"  'write.task.max.size' = %s,\n" +
"  'write.merge.max_memory' = %s,\n" +
"  'hoodie.cleaner.commits.retained' = %s,\n" +
"  'hive_sync.enable' = 'true',\n" +
"  'hive_sync.mode' = 'hms',\n" +
"  'hive_sync.metastore.uris' = %s,\n" +
"  'hive_sync.jdbc_url' = %s,\n" +
"  'hive_sync.table' = %s,\n" +
"  'hive_sync.db' = %s,\n" +
"  'hive_sync.username' = %s,\n" +
"  'hive_sync.password' = %s,\n" +
"  'write.rate.limit' = %s,\n" +
"  'hive_sync.support_timestamp' = 'false'\n" +
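For anyone trying to reproduce this, hand-concatenated option strings like the ones above are easy to get subtly wrong (a missing `+` or quote). Below is a minimal sketch of one way the `WITH (...)` clause could be rendered from a map instead. The class name `HudiSinkOptions`, the method `renderWithClause`, and the example path are hypothetical and not taken from the reporter's job; only the option keys come from this report.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Hudi or Flink: renders table options
// as the WITH (...) clause of a Flink SQL CREATE TABLE statement.
final class HudiSinkOptions {

    // Each entry becomes "  'key' = 'value'"; single quotes inside values are
    // doubled, which is the standard SQL way to escape them.
    public static String renderWithClause(Map<String, String> options) {
        return options.entrySet().stream()
                .map(e -> "  '" + e.getKey() + "' = '"
                        + e.getValue().replace("'", "''") + "'")
                .collect(Collectors.joining(",\n", "WITH (\n", "\n)"));
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, so the rendered clause is stable.
        Map<String, String> options = new LinkedHashMap<>();
        options.put("connector", "hudi");
        options.put("path", "hdfs:///tmp/hudi/demo_table"); // assumed example path
        options.put("table.type", "COPY_ON_WRITE");
        options.put("write.rate.limit", "1000"); // the setting that differs between the two test runs
        System.out.println(renderWithClause(options));
    }
}
```

Keeping the options in a map also makes it straightforward to rerun the same job with and without `write.rate.limit` and compare the resulting record counts.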

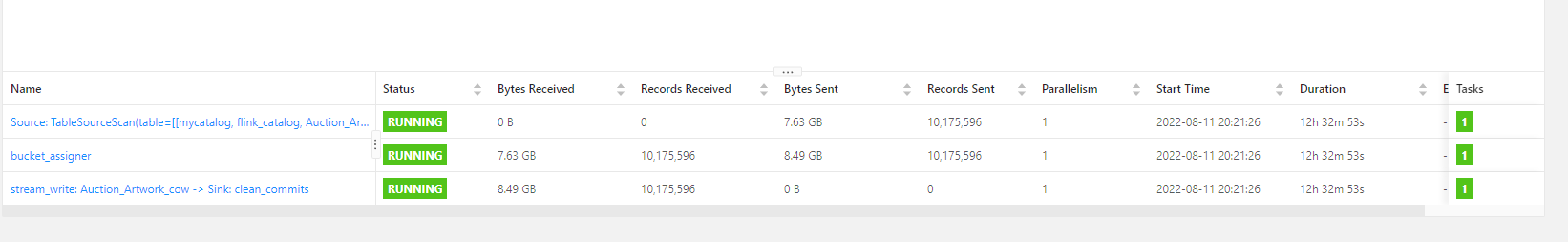

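I tried importing 1 million and 10 million records. In the most recent runs, part of the data is missing once the import finishes. I repeated the test several times: the query result is different after every import, and the number of lost records varies. Hive and Flink SQL return the same result, and the Flink job reports no exceptions. However, when I tested a few days ago there was no data loss. The difference between the two tests is that this time write.rate.limit=1000 was set.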

Stacktrace

Add the stacktrace of the error.