-

Notifications

You must be signed in to change notification settings - Fork 28.9k

[SPARK-27988][SQL][TEST] Port AGGREGATES.sql [Part 3] #24829

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

sql/core/src/test/resources/sql-tests/inputs/pgSQL/aggregates_part3.sql

Outdated

Show resolved

Hide resolved

|

Test build #106340 has finished for PR 24829 at commit

|

…aggfstr, and aggfns

|

Test build #106402 has finished for PR 24829 at commit

|

|

retest this please |

|

Test build #106407 has finished for PR 24829 at commit

|

|

Test build #106409 has finished for PR 24829 at commit

|

|

Test build #108203 has finished for PR 24829 at commit

|

|

Test build #108520 has finished for PR 24829 at commit

|

|

retest this please |

|

Test build #108855 has finished for PR 24829 at commit

|

|

Test build #108862 has finished for PR 24829 at commit

|

|

retest this please |

|

Test build #108870 has finished for PR 24829 at commit

|

sql/core/src/test/resources/sql-tests/inputs/pgSQL/aggregates_part3.sql

Outdated

Show resolved

Hide resolved

sql/core/src/test/resources/sql-tests/results/pgSQL/aggregates_part3.sql.out

Outdated

Show resolved

Hide resolved

sql/core/src/test/resources/sql-tests/inputs/pgSQL/aggregates_part3.sql

Outdated

Show resolved

Hide resolved

sql/core/src/test/resources/sql-tests/inputs/pgSQL/aggregates_part3.sql

Outdated

Show resolved

Hide resolved

sql/core/src/test/resources/sql-tests/results/pgSQL/aggregates_part3.sql.out

Outdated

Show resolved

Hide resolved

|

Test build #108909 has finished for PR 24829 at commit

|

|

retest this please |

|

Test build #108911 has finished for PR 24829 at commit

|

| -- | ||

| -- | ||

| -- AGGREGATES [Part 3] | ||

| -- https://github.com/postgres/postgres/blob/REL_12_BETA1/src/test/regress/sql/aggregates.sql#L352-L605 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

REL_12_BETA1 -> REL_12_BETA2?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Please check the line numbers too if the file is changed. I hope it's unchanged.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

AGGREGATES [Part 3] unchanged, AGGREGATES [Part 4] changed:

https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/sql/aggregates.sql#L992

We has added it:

spark/sql/core/src/test/resources/sql-tests/inputs/pgSQL/aggregates_part4.sql

Lines 414 to 419 in 2a2b202

| -- Make sure that generation of HashAggregate for uniqification purposes | |

| -- does not lead to array overflow due to unexpected duplicate hash keys | |

| -- see CAFeeJoKKu0u+A_A9R9316djW-YW3-+Gtgvy3ju655qRHR3jtdA@mail.gmail.com | |

| -- explain (costs off) | |

| -- select 1 from tenk1 | |

| -- where (hundred, thousand) in (select twothousand, twothousand from onek); |

| -- drop table minmaxtest cascade; | ||

|

|

||

| -- [SPARK-9830] It is not allowed to use an aggregate function in the argument of another aggregate function | ||

| -- check for correct detection of nested-aggregate errors |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Instead of adding SPARK-9830, can we check the actual error? The original PostgreSQL test is also for ensuring the error.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's a correct behaviour, aggregate function calls cannot be nested

https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/expected/aggregates.out#L1078-L1085

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ya. I know. My suggestion is to check the error message like PostgreSQL.

If it doesn't throw exceptions later, we can detect a regression at that time.

| -- select array_agg(distinct a order by a desc nulls last) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

|

|

||

| -- Skip the test below because it requires 4 UDFs: aggf_trans, aggfns_trans, aggfstr, and aggfns |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry, but this looks insufficient to ignore the followings.

We can register these functions like we did for tables tenk1 , cann't we?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

UDF is easy, UDAF seems need to implement UserDefinedFunction.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Got it. Never mind.

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

|

|

||

| -- select aggfstr(distinct a,b,c order by b) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

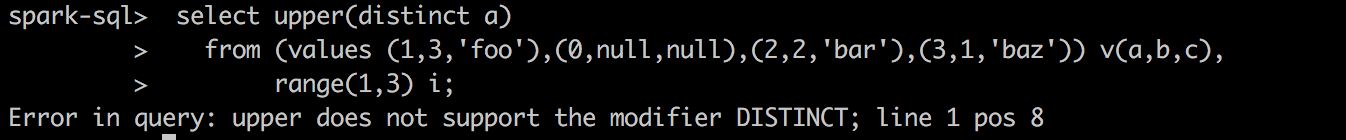

Did you mean that Spark doesn't support UDF invocation syntax like this udfName(distinct a,b,c order by b)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do we have a JIRA for that? Then, please add the ID as a comment here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

postgres=# select max(distinct a) from (values('a'), ('b')) v(a);

max

-----

b

(1 row)

spark-sql> select max(distinct a) from (values('a'), ('b')) v(a);

b

spark-sql>postgres=# select upper(distinct a) from (values('a'), ('b')) v(a);

ERROR: DISTINCT specified, but upper is not an aggregate function

LINE 1: select upper(distinct a) from (values('a'), ('b')) v(a);

spark-sql> select upper(distinct a) from (values('a'), ('b')) v(a);

Error in query: upper does not support the modifier DISTINCT; line 1 pos 7

spark-sql>Do we need to add an ID? It seems that only the error message is different.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

|

||

| -- test specific code paths | ||

|

|

||

| -- select aggfns(distinct a,a,c order by c using ~<~,a) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It seems that we need to mention the existing JIRA issue for distinct a,a,c order by c using ~<~,a?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

SPARK-28010 or a new one for USING syntax.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@maropu These are operators?

postgres=# \do ~*~

List of operators

Schema | Name | Left arg type | Right arg type | Result type | Description

------------+------+---------------+----------------+-------------+-------------------------

pg_catalog | ~<=~ | character | character | boolean | less than or equal

pg_catalog | ~<=~ | text | text | boolean | less than or equal

pg_catalog | ~<~ | character | character | boolean | less than

pg_catalog | ~<~ | text | text | boolean | less than

pg_catalog | ~>=~ | character | character | boolean | greater than or equal

pg_catalog | ~>=~ | text | text | boolean | greater than or equal

pg_catalog | ~>~ | character | character | boolean | greater than

pg_catalog | ~>~ | text | text | boolean | greater than

pg_catalog | ~~ | bytea | bytea | boolean | matches LIKE expression

pg_catalog | ~~ | character | text | boolean | matches LIKE expression

pg_catalog | ~~ | name | text | boolean | matches LIKE expression

pg_catalog | ~~ | text | text | boolean | matches LIKE expression

(12 rows)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

|

||

| -- Skip these tests because we do not have a bytea type | ||

| -- string_agg bytea tests | ||

| -- create table bytea_test_table(v bytea); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Shall we use BINARY as we did at strings.sql?

create table bytea_test_table(v BINARY);

After that, we are able to remove line 212 and focus on our next steps.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ur, right. I missed it. bytea is binary.

|

|

||

| -- select string_agg(v, '') from bytea_test_table; | ||

|

|

||

| -- insert into bytea_test_table values(decode('ff','hex')); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe, something like the following? And, do we have a JIRA for decode?

insert into bytea_test_table values(decode('aa', 'utf-8'));

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| select (select count(*) | ||

| from (values (1)) t0(inner_c)) | ||

| from (values (2),(3)) t1(outer_c); | ||

| -- Rewriting to CASE WHEN will hit: Expressions referencing the outer query are not supported outside of WHERE/HAVING clauses |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

File a JIRA issue?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we should revert it to original query. We can not support Aggregate Expressions with filter.

| -- select (select count(*) filter (where outer_c <> 0) | ||

| -- from (values (1)) t0(inner_c)) | ||

| -- from (values (2),(3)) t1(outer_c); | ||

| -- Rewriting to CASE WHEN will hit: Found an aggregate expression in a correlated predicate that has both outer and local references |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

File a JIRA issue?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we should revert it to original query. We can not support Aggregate Expressions with filter.

| -- subquery in FILTER clause (PostgreSQL extension) | ||

| -- Rewriting to CASE WHEN will hit: IN/EXISTS predicate sub-queries can only be used in a Filter | ||

| -- select sum(unique1) FILTER (WHERE | ||

| -- unique1 IN (SELECT unique1 FROM onek where unique1 < 100)) FROM tenk1; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

From line 252 ~ 268, I understand the reason why you add comments like Rewriting to CASE WHEN will hit: Expressions referencing the outer query are not supported outside of WHERE/HAVING clause. But, let's have an explicit JIRA ID. Otherwise, let's not add the comment here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes. We should revert it to original query.

|

Test build #109258 has finished for PR 24829 at commit

|

|

Test build #109293 has finished for PR 24829 at commit

|

|

retest this please |

| -- AGGREGATES [Part 3] | ||

| -- https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/sql/aggregates.sql#L352-L605 | ||

|

|

||

| -- We do not support inheritance tree, skip related tests. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Out of curiosity, why didn't we file a JIRA here? Even if we explicitly don't support, it's better to file a JIRA and resolve it as Won't Fix.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done

| -- select array_agg(distinct a order by a desc nulls last) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

|

|

||

| -- Skip the test below because it requires 4 UDFs: aggf_trans, aggfns_trans, aggfstr, and aggfns |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Then should it be "Skip the test below because it requires 4 UDAFs:"?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done

|

Test build #109684 has finished for PR 24829 at commit

|

|

Test build #109696 has finished for PR 24829 at commit

|

|

Merged to master. Thanks all. |

What changes were proposed in this pull request?

This PR is to port AGGREGATES.sql from PostgreSQL regression tests. https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/sql/aggregates.sql#L352-L605

The expected results can be found in the link: https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/expected/aggregates.out#L986-L1613

When porting the test cases, found seven PostgreSQL specific features that do not exist in Spark SQL:

SPARK-27974: Add built-in Aggregate Function: array_agg

SPARK-27978: Add built-in Aggregate Functions: string_agg

SPARK-27986: Support Aggregate Expressions with filter

SPARK-27987: Support POSIX Regular Expressions

SPARK-28682: ANSI SQL: Collation Support

SPARK-28768: Implement more text pattern operators

SPARK-28865: Table inheritance

How was this patch tested?

N/A