[SPARK-27988][SQL][TEST] Port AGGREGATES.sql [Part 3] #24829

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,270 @@ | ||

| -- | ||

| -- Portions Copyright (c) 1996-2019, PostgreSQL Global Development Group | ||

| -- | ||

| -- | ||

| -- AGGREGATES [Part 3] | ||

| -- https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/sql/aggregates.sql#L352-L605 | ||

|

|

||

| -- [SPARK-28865] Table inheritance | ||

| -- try it on an inheritance tree | ||

| -- create table minmaxtest(f1 int); | ||

| -- create table minmaxtest1() inherits (minmaxtest); | ||

| -- create table minmaxtest2() inherits (minmaxtest); | ||

| -- create table minmaxtest3() inherits (minmaxtest); | ||

| -- create index minmaxtesti on minmaxtest(f1); | ||

| -- create index minmaxtest1i on minmaxtest1(f1); | ||

| -- create index minmaxtest2i on minmaxtest2(f1 desc); | ||

| -- create index minmaxtest3i on minmaxtest3(f1) where f1 is not null; | ||

|

|

||

| -- insert into minmaxtest values(11), (12); | ||

| -- insert into minmaxtest1 values(13), (14); | ||

| -- insert into minmaxtest2 values(15), (16); | ||

| -- insert into minmaxtest3 values(17), (18); | ||

|

|

||

| -- explain (costs off) | ||

| -- select min(f1), max(f1) from minmaxtest; | ||

| -- select min(f1), max(f1) from minmaxtest; | ||

|

|

||

| -- DISTINCT doesn't do anything useful here, but it shouldn't fail | ||

| -- explain (costs off) | ||

| -- select distinct min(f1), max(f1) from minmaxtest; | ||

| -- select distinct min(f1), max(f1) from minmaxtest; | ||

|

|

||

| -- drop table minmaxtest cascade; | ||

|

|

||

| -- check for correct detection of nested-aggregate errors | ||

| select max(min(unique1)) from tenk1; | ||

| -- select (select max(min(unique1)) from int8_tbl) from tenk1; | ||

|

|

||

| -- These tests only test the explain. Skip these tests. | ||

| -- | ||

| -- Test removal of redundant GROUP BY columns | ||

| -- | ||

|

|

||

| -- create temp table t1 (a int, b int, c int, d int, primary key (a, b)); | ||

| -- create temp table t2 (x int, y int, z int, primary key (x, y)); | ||

| -- create temp table t3 (a int, b int, c int, primary key(a, b) deferrable); | ||

|

|

||

| -- Non-primary-key columns can be removed from GROUP BY | ||

| -- explain (costs off) select * from t1 group by a,b,c,d; | ||

|

|

||

| -- No removal can happen if the complete PK is not present in GROUP BY | ||

| -- explain (costs off) select a,c from t1 group by a,c,d; | ||

|

|

||

| -- Test removal across multiple relations | ||

| -- explain (costs off) select * | ||

| -- from t1 inner join t2 on t1.a = t2.x and t1.b = t2.y | ||

| -- group by t1.a,t1.b,t1.c,t1.d,t2.x,t2.y,t2.z; | ||

|

|

||

| -- Test case where t1 can be optimized but not t2 | ||

| -- explain (costs off) select t1.*,t2.x,t2.z | ||

| -- from t1 inner join t2 on t1.a = t2.x and t1.b = t2.y | ||

| -- group by t1.a,t1.b,t1.c,t1.d,t2.x,t2.z; | ||

|

|

||

| -- Cannot optimize when PK is deferrable | ||

| -- explain (costs off) select * from t3 group by a,b,c; | ||

|

|

||

| -- drop table t1; | ||

| -- drop table t2; | ||

| -- drop table t3; | ||

|

|

||

| -- [SPARK-27974] Add built-in Aggregate Function: array_agg | ||

| -- | ||

| -- Test combinations of DISTINCT and/or ORDER BY | ||

| -- | ||

|

|

||

| -- select array_agg(a order by b) | ||

| -- from (values (1,4),(2,3),(3,1),(4,2)) v(a,b); | ||

| -- select array_agg(a order by a) | ||

| -- from (values (1,4),(2,3),(3,1),(4,2)) v(a,b); | ||

| -- select array_agg(a order by a desc) | ||

| -- from (values (1,4),(2,3),(3,1),(4,2)) v(a,b); | ||

| -- select array_agg(b order by a desc) | ||

| -- from (values (1,4),(2,3),(3,1),(4,2)) v(a,b); | ||

|

|

||

| -- select array_agg(distinct a) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

| -- select array_agg(distinct a order by a) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

| -- select array_agg(distinct a order by a desc) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

| -- select array_agg(distinct a order by a desc nulls last) | ||

| -- from (values (1),(2),(1),(3),(null),(2)) v(a); | ||

|

|

||

| -- Skip the test below because it requires 4 UDAFs: aggf_trans, aggfns_trans, aggfstr, and aggfns | ||

| -- multi-arg aggs, strict/nonstrict, distinct/order by | ||

|

|

||

| -- select aggfstr(a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

| -- select aggfns(a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

|

|

||

| -- select aggfstr(distinct a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

| -- select aggfns(distinct a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

|

|

||

| -- select aggfstr(distinct a,b,c order by b) | ||

|

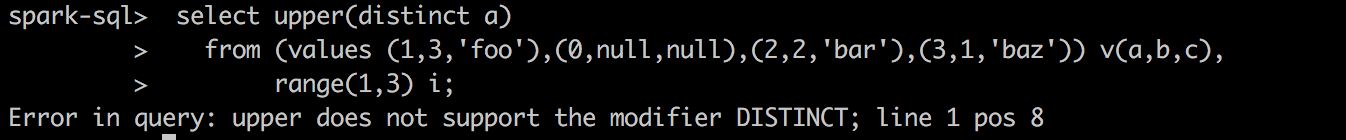

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Did you mean that Spark doesn't support UDF invocation syntax like this There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. Do we have a JIRA for that? Then, please add the ID as a comment here. There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. postgres=# select max(distinct a) from (values('a'), ('b')) v(a);

max

-----

b

(1 row)

spark-sql> select max(distinct a) from (values('a'), ('b')) v(a);

b

spark-sql>postgres=# select upper(distinct a) from (values('a'), ('b')) v(a);

ERROR: DISTINCT specified, but upper is not an aggregate function

LINE 1: select upper(distinct a) from (values('a'), ('b')) v(a);

spark-sql> select upper(distinct a) from (values('a'), ('b')) v(a);

Error in query: upper does not support the modifier DISTINCT; line 1 pos 7

spark-sql>Do we need to add an ID? It seems that only the error message is different. There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. |

||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

| -- select aggfns(distinct a,b,c order by b) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

|

|

||

| -- test specific code paths | ||

|

|

||

| -- [SPARK-28768] Implement more text pattern operators | ||

| -- select aggfns(distinct a,a,c order by c using ~<~,a) | ||

|

It seems that we need to mention the existing JIRA issue for

SPARK-28010 or a new one for

@maropu These are operators? |

||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

| -- select aggfns(distinct a,a,c order by c using ~<~) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

| -- select aggfns(distinct a,a,c order by a) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

| -- select aggfns(distinct a,b,c order by a,c using ~<~,b) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

|

|

||

| -- check node I/O via view creation and usage, also deparsing logic | ||

|

|

||

| -- create view agg_view1 as | ||

| -- select aggfns(a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(distinct a,b,c) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(distinct a,b,c order by b) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,3) i; | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(a,b,c order by b+1) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(a,a,c order by b) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(a,b,c order by c using ~<~) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c); | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- create or replace view agg_view1 as | ||

| -- select aggfns(distinct a,b,c order by a,c using ~<~,b) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

|

|

||

| -- select * from agg_view1; | ||

| -- select pg_get_viewdef('agg_view1'::regclass); | ||

|

|

||

| -- drop view agg_view1; | ||

|

|

||

| -- incorrect DISTINCT usage errors | ||

|

|

||

| -- select aggfns(distinct a,b,c order by i) | ||

| -- from (values (1,1,'foo')) v(a,b,c), generate_series(1,2) i; | ||

| -- select aggfns(distinct a,b,c order by a,b+1) | ||

| -- from (values (1,1,'foo')) v(a,b,c), generate_series(1,2) i; | ||

| -- select aggfns(distinct a,b,c order by a,b,i,c) | ||

| -- from (values (1,1,'foo')) v(a,b,c), generate_series(1,2) i; | ||

| -- select aggfns(distinct a,a,c order by a,b) | ||

| -- from (values (1,1,'foo')) v(a,b,c), generate_series(1,2) i; | ||

|

|

||

| -- [SPARK-27978] Add built-in Aggregate Functions: string_agg | ||

| -- string_agg tests | ||

| -- select string_agg(a,',') from (values('aaaa'),('bbbb'),('cccc')) g(a); | ||

| -- select string_agg(a,',') from (values('aaaa'),(null),('bbbb'),('cccc')) g(a); | ||

| -- select string_agg(a,'AB') from (values(null),(null),('bbbb'),('cccc')) g(a); | ||

| -- select string_agg(a,',') from (values(null),(null)) g(a); | ||

|

|

||

| -- check some implicit casting cases, as per bug #5564 | ||

| -- select string_agg(distinct f1, ',' order by f1) from varchar_tbl; -- ok | ||

| -- select string_agg(distinct f1::text, ',' order by f1) from varchar_tbl; -- not ok | ||

| -- select string_agg(distinct f1, ',' order by f1::text) from varchar_tbl; -- not ok | ||

| -- select string_agg(distinct f1::text, ',' order by f1::text) from varchar_tbl; -- ok | ||

|

|

||

| -- [SPARK-28121] decode can not accept 'hex' as charset | ||

| -- string_agg bytea tests | ||

| -- CREATE TABLE bytea_test_table(v BINARY) USING parquet; | ||

|

|

||

| -- select string_agg(v, '') from bytea_test_table; | ||

|

|

||

| -- insert into bytea_test_table values(decode('ff','hex')); | ||

|

Maybe something like the following? And do we have a JIRA for |

||

|

|

||

| -- select string_agg(v, '') from bytea_test_table; | ||

|

|

||

| -- insert into bytea_test_table values(decode('aa','hex')); | ||

|

|

||

| -- select string_agg(v, '') from bytea_test_table; | ||

| -- select string_agg(v, NULL) from bytea_test_table; | ||

| -- select string_agg(v, decode('ee', 'hex')) from bytea_test_table; | ||

|

|

||

| -- drop table bytea_test_table; | ||

|

|

||

| -- [SPARK-27986] Support Aggregate Expressions with filter | ||

| -- FILTER tests | ||

|

|

||

| -- select min(unique1) filter (where unique1 > 100) from tenk1; | ||

|

|

||

| -- select sum(1/ten) filter (where ten > 0) from tenk1; | ||

|

|

||

| -- select ten, sum(distinct four) filter (where four::text ~ '123') from onek a | ||

| -- group by ten; | ||

|

|

||

| -- select ten, sum(distinct four) filter (where four > 10) from onek a | ||

| -- group by ten | ||

| -- having exists (select 1 from onek b where sum(distinct a.four) = b.four); | ||

|

|

||

| -- [SPARK-28682] ANSI SQL: Collation Support | ||

| -- select max(foo COLLATE "C") filter (where (bar collate "POSIX") > '0') | ||

| -- from (values ('a', 'b')) AS v(foo,bar); | ||

|

|

||

| -- outer reference in FILTER (PostgreSQL extension) | ||

| select (select count(*) | ||

| from (values (1)) t0(inner_c)) | ||

| from (values (2),(3)) t1(outer_c); -- inner query is aggregation query | ||

| -- select (select count(*) filter (where outer_c <> 0) | ||

| -- from (values (1)) t0(inner_c)) | ||

| -- from (values (2),(3)) t1(outer_c); -- outer query is aggregation query | ||

| -- select (select count(inner_c) filter (where outer_c <> 0) | ||

| -- from (values (1)) t0(inner_c)) | ||

| -- from (values (2),(3)) t1(outer_c); -- inner query is aggregation query | ||

| -- select | ||

| -- (select max((select i.unique2 from tenk1 i where i.unique1 = o.unique1)) | ||

| -- filter (where o.unique1 < 10)) | ||

| -- from tenk1 o; -- outer query is aggregation query | ||

|

|

||

| -- subquery in FILTER clause (PostgreSQL extension) | ||

| -- select sum(unique1) FILTER (WHERE | ||

| -- unique1 IN (SELECT unique1 FROM onek where unique1 < 100)) FROM tenk1; | ||

|

For lines 252 ~ 268, I understand the reason why you added comments like

Yes. We should revert it to the original query. |

||

|

|

||

| -- exercise lots of aggregate parts with FILTER | ||

| -- select aggfns(distinct a,b,c order by a,c using ~<~,b) filter (where a > 1) | ||

| -- from (values (1,3,'foo'),(0,null,null),(2,2,'bar'),(3,1,'baz')) v(a,b,c), | ||

| -- generate_series(1,2) i; | ||

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,22 @@ | ||

| -- Automatically generated by SQLQueryTestSuite | ||

| -- Number of queries: 2 | ||

|

|

||

|

|

||

| -- !query 0 | ||

| select max(min(unique1)) from tenk1 | ||

| -- !query 0 schema | ||

| struct<> | ||

| -- !query 0 output | ||

| org.apache.spark.sql.AnalysisException | ||

| It is not allowed to use an aggregate function in the argument of another aggregate function. Please use the inner aggregate function in a sub-query.; | ||

|

|

||

|

|

||

| -- !query 1 | ||

| select (select count(*) | ||

| from (values (1)) t0(inner_c)) | ||

| from (values (2),(3)) t1(outer_c) | ||

| -- !query 1 schema | ||

| struct<scalarsubquery():bigint> | ||

| -- !query 1 output | ||

| 1 | ||

| 1 |

Instead of adding SPARK-9830, can we check the actual error? The original PostgreSQL test is also there to ensure that the error is raised.

It's the correct behaviour: aggregate function calls cannot be nested. https://github.com/postgres/postgres/blob/REL_12_BETA2/src/test/regress/expected/aggregates.out#L1078-L1085

Ya. I know. My suggestion is to check the error message like PostgreSQL does.

If the query stops throwing an exception later, we can detect the regression at that time.
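
The suggestion above (keep the failing query active and record its error, rather than commenting it out) can be sketched roughly as follows. This is only an illustration under loud assumptions: SQLite, via Python's standard `sqlite3` module, stands in for Spark; the tiny `tenk1` table and the `run_query` helper are hypothetical and are not part of SQLQueryTestSuite. The second query is the sub-query rewrite that the Spark error message itself recommends.

```python
import sqlite3

# Assumption: SQLite is a stand-in engine here, not Spark.
# Assumption: this 10-row tenk1 is invented purely for the sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tenk1 (unique1 INTEGER)")
conn.executemany("INSERT INTO tenk1 VALUES (?)", [(i,) for i in range(10)])


def run_query(sql):
    """Hypothetical helper: return ('ok', rows) or ('error', message)."""
    try:
        return ("ok", conn.execute(sql).fetchall())
    except sqlite3.Error as exc:
        return ("error", str(exc))


# Nested aggregate: expected to fail. Recording the failure keeps the
# check alive, so a later change that silently accepts it is noticed.
nested = run_query("SELECT max(min(unique1)) FROM tenk1")

# Sub-query form: the inner aggregate runs first, the outer one wraps it.
rewritten = run_query(
    "SELECT max(m) FROM (SELECT min(unique1) AS m FROM tenk1) AS t"
)

print(nested[0])
print(rewritten[0])
```

A golden-file test plays the same role as comparing `nested[0]` against a recorded `"error"` status: if the engine ever stops rejecting the nested aggregate, the recorded output no longer matches and the regression shows up.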