[SPARK-28333][SQL] NULLS FIRST for DESC and NULLS LAST for ASC #25147

[SPARK-28333][SQL] NULLS FIRST for DESC and NULLS LAST for ASC #25147shivusondur wants to merge 1 commit intoapache:masterfrom

Conversation

changed the default null ordering for ACS and DESC, and updated the corresponding tests

|

Can one of the admins verify this patch? |

| case object Descending extends SortDirection { | ||

| override def sql: String = "DESC" | ||

| override def defaultNullOrdering: NullOrdering = NullsLast | ||

| override def defaultNullOrdering: NullOrdering = NullsFirst |

There was a problem hiding this comment.

I think this is a breaking change and workaround looks pretty easy. Can you check what other DBMSs do? If it's consistent with some other DBMSs, I think we shouldn't fix it and resolve the ticket as Won't Do

There was a problem hiding this comment.

@HyukjinKwon

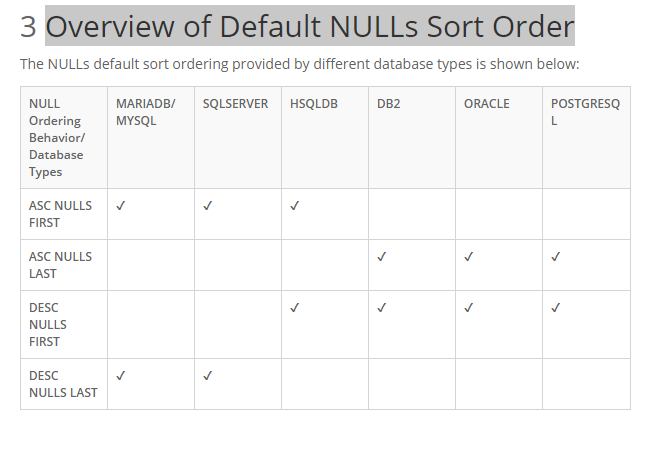

From the below link, spark is consistent with mysql, sqlserver

But inconsitent with DB2, Oracle, Postgresql

https://docs.mendix.com/refguide/null-ordering-behavior#3-overview-of-default-nulls-sort-order

There was a problem hiding this comment.

I think the current behaviour already makes sense. I wouldn't fix it. cc @dongjoon-hyun, @wangyum, @gatorsmile WDYT?

|

This is introduced at 2.1.0 via #14842 . And, we are followinging Hive's behavior. We cannot change this default behavior due to the reason @HyukjinKwon 's pointed out . @shivusondur . We can make a configuration for this instead if needed. Could you make a decision, @gatorsmile , whether closing this issue as |

@gatorsmile |

|

Hi, @shivusondur . This is a tough call. Although Spark 3.0.0 is a good chance for this, let's close this PR and the issue as |

|

Yup, let's just close - I don't particularly think this is worth, and no positive comments from others as pointed out. |

|

@shivusondur Can you submit a PR to document our current behavior? |

|

Create a subtask in https://issues.apache.org/jira/browse/SPARK-28588? |

What changes were proposed in this pull request?

changed the default null ordering for ACS and DESC, and updated the corresponding tests

How was this patch tested?

Ran sql/catalyst module UT and updated the test case