-

Notifications

You must be signed in to change notification settings - Fork 29.1k

[SPARK-29910][SQL] Optimize speculation performance by adding minimum runtime limit #26541

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

What you really want is to make |

Hi @jiangxb1987 , thanks for your comment. This PR aims to improve the behavior when Besides, increasing |

|

Gentle ping, @cloud-fan |

|

In general it's OK to make a hardcoded value configurable, but I doubt if it's really useful in this case. If you have different kinds of tasks that need different speculation min time, then a global config doesn't help. You need a per job(or even per stage) config. |

Thanks for your review @cloud-fan . As for jobs and stages, |

|

Can one of the admins verify this patch? |

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

What changes were proposed in this pull request?

The minimum runtime to speculation used to be a fixed value 100ms. It means tasks finished in seconds will also be speculated and more executors will be required.

To improve this situation, we add

spark.speculation.minRuntimeto control the minimum runtime limit of speculation.We can reduce normal tasks to be speculated by adjusting

spark.speculation.minRuntime.Example:

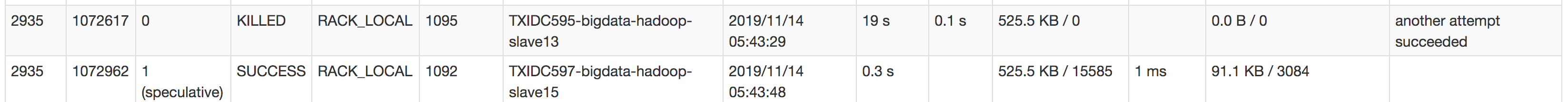

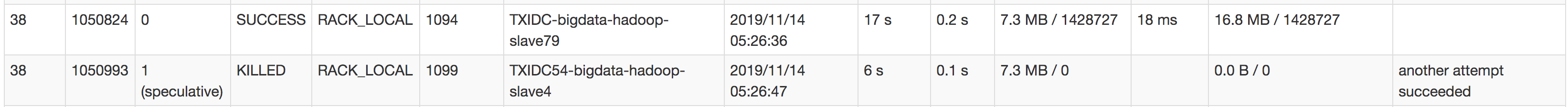

Tasks that don't need to be speculated:

and

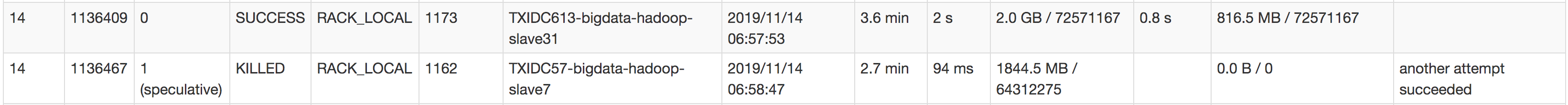

Tasks are more likely to go wrong and need to be speculated:

(especially those shuffle tasks with large amount of data and will cost minutes even hours)

Why are the changes needed?

To improve speculation performance by reducing speculated tasks which don't need to be speculated actually.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

Unit tests.