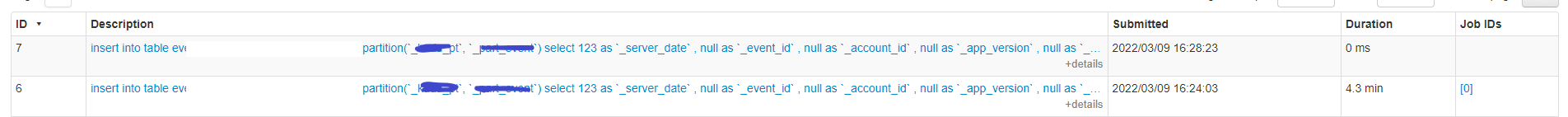

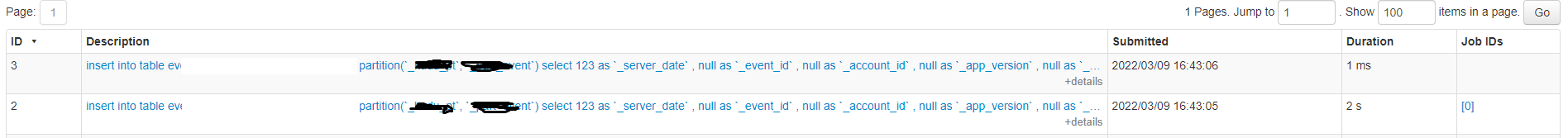

[SPARK-38230][SQL] InsertIntoHadoopFsRelationCommand unnecessarily fetches details of partitions in most cases #35549

coalchan wants to merge 1 commit into apache:master

… not have custom partition locations

Can one of the admins verify this patch?

    // When partitions are tracked by the catalog, compute all custom partition locations that
    // may be relevant to the insertion job.
    if (partitionsTrackedByCatalog) {

Based on the code in HiveClientImpl.getTableOption and CatalogTable, it seems that we won't reach this code path when using the Hive metastore.

Thanks for your response. tracksPartitionsInCatalog is set to true in HiveExternalCatalog.restoreHiveSerdeTable.

Could you fix the GA?

Hm, I see your point. After looking at the relevant logic,

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable.

What changes were proposed in this pull request?

Add a Spark conf so that only partition names are fetched instead of full partition details. This can reduce requests to the Hive metastore.
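The idea can be illustrated with a small toy model. This is only a sketch under stated assumptions: `ToyMetastore`, `PartitionDetails`, and `PartitionLookup` are hypothetical stand-ins invented for illustration and are not Spark's catalog classes, and the flag semantics are assumed from the PR description rather than taken from the patch itself.

```scala
// Toy model only: hypothetical stand-ins, not Spark's catalog classes.
final case class PartitionDetails(spec: Map[String, String], location: String)

// A fake metastore that counts how many full partition records it materializes.
final class ToyMetastore(tableRoot: String, partitions: Seq[PartitionDetails]) {
  var detailFetches: Int = 0

  private def nameOf(spec: Map[String, String]): String =
    spec.map { case (k, v) => s"$k=$v" }.mkString("/")

  // Expensive path: returns every partition together with its location.
  def listPartitions(): Seq[PartitionDetails] = {
    detailFetches += partitions.size
    partitions
  }

  // Cheap path: returns only names such as "dt=2022-01-01".
  def listPartitionNames(): Seq[String] = partitions.map(p => nameOf(p.spec))

  // Location a partition would have if it had not been customized.
  def defaultLocation(spec: Map[String, String]): String =
    tableRoot + "/" + nameOf(spec)
}

object PartitionLookup {
  // hasCustomPartitionLocations mirrors the proposed conf (assumed semantics).
  def customLocations(
      store: ToyMetastore,
      hasCustomPartitionLocations: Boolean): Map[Map[String, String], String] =
    if (hasCustomPartitionLocations) {
      // Full details are needed to compare each location with its default.
      store.listPartitions()
        .filter(p => p.location != store.defaultLocation(p.spec))
        .map(p => p.spec -> p.location)
        .toMap
    } else {
      // The user asserts there are no custom locations, so no details are fetched.
      Map.empty
    }
}
```

With the flag set to false, the toy store's `detailFetches` counter stays at zero, and a caller that only needs to know which partitions exist can still use `listPartitionNames()`.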

Why are the changes needed?

`listPartitions` is called in order to get the locations of partitions and compute custom partition locations (variable `customPartitionLocations`), but in most cases we do not have custom partition locations. `listPartitionNames` fetches only the partitions' names, which can reduce requests to the Hive metastore DB.

Does this PR introduce any user-facing change?

Yes, users should configure "spark.sql.hasCustomPartitionLocations = false".
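As a rough sketch of how such a setting might be applied, assuming a Spark build that includes this patch (the PR was closed without being merged, so the key is not expected to have any effect in released Spark versions), the conf could be set when building the session or at runtime. The application name and local master below are arbitrary placeholders.

```scala
import org.apache.spark.sql.SparkSession

object HasCustomPartitionLocationsExample {
  def main(args: Array[String]): Unit = {
    // The conf key comes from this PR and is an assumption outside a patched build.
    val spark = SparkSession.builder()
      .appName("insert-without-custom-partition-locations") // placeholder name
      .master("local[*]")                                   // placeholder master
      .config("spark.sql.hasCustomPartitionLocations", "false")
      .getOrCreate()

    // The same key can also be set on an existing session.
    spark.conf.set("spark.sql.hasCustomPartitionLocations", "false")

    spark.stop()
  }
}
```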

How was this patch tested?