
Ondrej Michal - Mesh & Optimization settings #91


Excuse the changes in:

…Mesh&OptimizationSettings unit test: 110 passed, 15 skipped in 94.60s (0:01:34)

dogukankaratas

left a comment


One more thing to discuss about the Optimization Settings:

They work fine even when the optimization add-on is not activated. That could cause licensing problems in the future.

Technically, it works well.

'windsimulation_mesh_config_value_keep_results_if_mesh_deleted': None,

'windsimulation_mesh_config_value_consider_surface_thickness': None,

'windsimulation_mesh_config_value_run_rwind_silent': None}


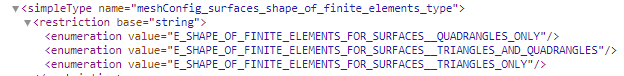

It would be better to explain to users which data type (integer, string, boolean, or enumeration item) controls each configuration.

There are two possible ways:

- Add default RFEM values instead of 'None'.

- Add DocStrings to explain configurations.


Well, I cannot add default values: I have to distinguish which parameters are set and which are not. This class is just a prototype to show the user which parameters can be set. Frankly, I'm not able to describe them more eloquently than the name of the parameter itself :-)
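To illustrate why `None` is useful here, a minimal sketch follows. It reuses the three config keys quoted in the diff; the helper `explicitly_set` and the variable names are hypothetical and not part of the PR. The point is that a real default such as `False` would be indistinguishable from a value the user chose on purpose, while `None` is not.

```python
# Prototype dictionary: None means "the user has not set this parameter".
# The keys come from the diff above; everything else is illustrative.
mesh_config_prototype = {
    'windsimulation_mesh_config_value_keep_results_if_mesh_deleted': None,
    'windsimulation_mesh_config_value_consider_surface_thickness': None,
    'windsimulation_mesh_config_value_run_rwind_silent': None,
}


def explicitly_set(config):
    """Return only the entries the user assigned (hypothetical helper)."""
    # Compare against None by identity so that False and 0 are kept.
    return {key: value for key, value in config.items() if value is not None}


# The user copies the prototype and changes a single parameter.
user_config = dict(mesh_config_prototype)
user_config['windsimulation_mesh_config_value_run_rwind_silent'] = False

changed = explicitly_set(user_config)
print(changed)
```

Only the entry that was assigned survives the filter, even though its value is falsy, so a setter could forward just those parameters.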


Maybe adding the parameter types to the DocStrings can help us document this. That looks like my duty 👍🏻
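One way such type documentation might look is sketched below. The types in `ASSUMED_PARAMETER_TYPES` are guesses made for illustration only and are not confirmed by the PR or by RFEM; both helper functions are hypothetical as well.

```python
# Assumed types, for illustration only; the real RFEM types may differ.
ASSUMED_PARAMETER_TYPES = {
    'windsimulation_mesh_config_value_keep_results_if_mesh_deleted': bool,
    'windsimulation_mesh_config_value_consider_surface_thickness': bool,
    'windsimulation_mesh_config_value_run_rwind_silent': bool,
}


def describe_parameters(types):
    """Build docstring lines of the form '<name> (<type>)'."""
    return '\n'.join(f'{name} ({tp.__name__})' for name, tp in types.items())


def wrong_typed(config, types):
    """Return names whose value is set (not None) but has an unexpected type."""
    return [
        name for name, value in config.items()
        # Unknown names fall back to `object`, which accepts any value.
        if value is not None and not isinstance(value, types.get(name, object))
    ]


print(describe_parameters(ASSUMED_PARAMETER_TYPES))
```

The same mapping can feed both the generated DocString text and a simple check in the unit tests, so the documentation and the validation stay in sync.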

Model Check Process Object Groups Option Type

'''

CROSS_LINES, CROSS_MEMBERS, DELETE_UNUSED_NODES, UNITE_NODES_AND_DELETE_UNUSED_NODES = range(4)


Fixed by adding this enum to enums.py and the unit test.
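For reference, a minimal sketch of how the enum from the diff could be declared with the standard library's `enum` module. The class name `ModelCheckProcessOptionType` is an assumption; the diff shows only the docstring and the member line.

```python
from enum import Enum


class ModelCheckProcessOptionType(Enum):
    '''
    Model Check Process Object Groups Option Type
    '''
    # Tuple unpacking assigns the values 0..3 to the four members in order.
    CROSS_LINES, CROSS_MEMBERS, DELETE_UNUSED_NODES, UNITE_NODES_AND_DELETE_UNUSED_NODES = range(4)


# Members expose both a symbolic name and the underlying integer value.
option = ModelCheckProcessOptionType.DELETE_UNUSED_NODES
print(option.name, option.value)
```

A unit test can then refer to `option.name` when a string is expected, rather than hard-coding the integer.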


US-8268:

This part implements set and get functions for Mesh and Optimization settings, including unit tests:

- get_optimization_settings
- set_optimization_settings
- get_mesh_settings
- set_mesh_settings
- get_model_info