
Add retries in case jenkins job has a build already enqueued #1203


f2264c1 to 576582f

/test


log.Printf("Function failed, retrying in %v", delay)

select {
case <-time.After(delay):


Should we have some option similar to exponential backoff? Maybe each time we call the retry we pass the attempt number (let's assume attempt int) and use After(attempt * delay)?
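A minimal sketch of this suggestion, assuming a hypothetical helper retryLinear and a demo function (neither is taken from the PR). Note that After(attempt * delay) grows linearly rather than exponentially:

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"log"
	"time"
)

// retryLinear is a hypothetical helper, not the PR's code. It calls fn up to
// `retries` times and waits attempt*delay after each failed attempt.
func retryLinear(ctx context.Context, retries int, delay time.Duration, fn func() error) error {
	var err error
	for attempt := 1; attempt <= retries; attempt++ {
		if err = fn(); err == nil {
			return nil
		}
		if attempt == retries {
			break // no need to wait after the last attempt
		}
		wait := time.Duration(attempt) * delay
		log.Printf("Function failed, retrying in %v", wait)
		select {
		case <-time.After(wait):
		case <-ctx.Done():
			// Stop waiting as soon as the caller cancels.
			return ctx.Err()
		}
	}
	return fmt.Errorf("all %d attempts failed: %w", retries, err)
}

// demo simulates a job trigger that fails twice and then succeeds.
func demo() (int, error) {
	calls := 0
	err := retryLinear(context.Background(), 5, 10*time.Millisecond, func() error {
		calls++
		if calls < 3 {
			return errors.New("build already enqueued")
		}
		return nil
	})
	return calls, err
}

func main() {
	calls, err := demo()
	fmt.Println(calls, err) // prints "3 <nil>"
}
```

Selecting on ctx.Done() alongside time.After lets a caller abort a long wait instead of sleeping through it.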


Sure!

I'll add two new parameters for this:

- --growth-factor: factor to use in the exponential backoff (probably with a default value around 1.5)
- --max-waiting-time: maximum time to wait between attempts, so that the delay between iterations does not grow too large

I would go for something like this:

- growth factor: f
- current attempt/retry: a
- waiting time: w
- maximum waiting time: W
- delay to use per retry: d

Using a growth factor of 1.5, a waiting time of 2 seconds and a maximum waiting time of 1 hour:

2023/04/04 10:26:26 Function failed, retrying in 2s

2023/04/04 10:26:27 Function failed, retrying in 2s

2023/04/04 10:26:28 Function failed, retrying in 4s

2023/04/04 10:26:29 Function failed, retrying in 6s

2023/04/04 10:26:30 Function failed, retrying in 10s

2023/04/04 10:26:31 Function failed, retrying in 14s

2023/04/04 10:26:32 Function failed, retrying in 22s

2023/04/04 10:26:33 Function failed, retrying in 34s

2023/04/04 10:26:34 Function failed, retrying in 50s

2023/04/04 10:26:35 Function failed, retrying in 1m16s

2023/04/04 10:26:36 Function failed, retrying in 1m54s

2023/04/04 10:26:37 Function failed, retrying in 2m52s

2023/04/04 10:26:38 Function failed, retrying in 4m18s

2023/04/04 10:26:39 Function failed, retrying in 6m28s

2023/04/04 10:26:40 Function failed, retrying in 9m42s

2023/04/04 10:26:41 Function failed, retrying in 14m34s

2023/04/04 10:26:42 Function failed, retrying in 21m52s

2023/04/04 10:26:43 Function failed, retrying in 32m50s

2023/04/04 10:26:44 Function failed, retrying in 49m14s

2023/04/04 10:26:45 Function failed, retrying in 1h0m0s
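The delays logged above are consistent with d = min(w * floor(f^a), W), with a starting at 0. A minimal Go sketch under that assumption (the names schedule and example are hypothetical, not taken from the PR):

```go
package main

import (
	"fmt"
	"math"
	"time"
)

// schedule returns the delay used before each retry, assuming
// d = min(w * floor(f^a), maxWait) for a = 0..retries-1.
// This is an inferred formula, not the PR's actual implementation.
func schedule(w, maxWait time.Duration, f float64, retries int) []time.Duration {
	delays := make([]time.Duration, 0, retries)
	for a := 0; a < retries; a++ {
		// Converting the float to time.Duration truncates toward zero,
		// which is what turns 1.5^1 = 1.5 into a factor of 1.
		factor := time.Duration(math.Pow(f, float64(a)))
		d := factor * w
		if d > maxWait {
			d = maxWait
		}
		delays = append(delays, d)
	}
	return delays
}

// example uses the parameters quoted above: f = 1.5, w = 2s, W = 1h.
func example() []time.Duration {
	return schedule(2*time.Second, time.Hour, 1.5, 20)
}

func main() {
	for a, d := range example() {
		fmt.Printf("attempt %d: retrying in %v\n", a, d)
	}
}
```

With these inputs the sketch yields 2s, 2s, 4s, 6s, 10s, 14s and so on, and the last delay is capped at 1h0m0s. For much larger values of a the multiplication could overflow, so a real implementation would likely cap the factor first.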


Nice!

💚 Build Succeeded

cc @mrodm

waitingTime := flag.Duration("waiting-time", 5*time.Second, fmt.Sprintf("Waiting period between each retry"))

growthFactor := flag.Float64("growth-factor", 1.25, fmt.Sprintf("Growth-Factor used for exponential backoff delays"))

retries := flag.Int("retries", 20, fmt.Sprintf("Number of retries to trigger the job"))

maxWaitingTime := flag.Duration("max-waiting-time", 60*time.Minute, fmt.Sprintf("Maximum waiting time per each retry"))


With these default values, the waiting times would be:

2023/04/04 11:31:02 Function failed, retrying in 5s -> 5.00s

2023/04/04 11:31:03 Function failed, retrying in 6s -> 6.25s

2023/04/04 11:31:04 Function failed, retrying in 7s -> 7.81s

2023/04/04 11:31:05 Function failed, retrying in 9s -> 9.77s

2023/04/04 11:31:06 Function failed, retrying in 12s -> 12.21s

2023/04/04 11:31:07 Function failed, retrying in 15s -> 15.26s

2023/04/04 11:31:08 Function failed, retrying in 19s -> 19.07s

2023/04/04 11:31:09 Function failed, retrying in 23s -> 23.84s

2023/04/04 11:31:10 Function failed, retrying in 29s -> 29.80s

2023/04/04 11:31:11 Function failed, retrying in 37s -> 37.25s

2023/04/04 11:31:12 Function failed, retrying in 46s -> 46.57s

2023/04/04 11:31:13 Function failed, retrying in 58s -> 58.21s

2023/04/04 11:31:14 Function failed, retrying in 1m12s -> 72.76s

2023/04/04 11:31:15 Function failed, retrying in 1m30s -> 90.95s

2023/04/04 11:31:16 Function failed, retrying in 1m53s -> 113.69s

2023/04/04 11:31:17 Function failed, retrying in 2m22s -> 142.11s

2023/04/04 11:31:18 Function failed, retrying in 2m57s -> 177.64s

2023/04/04 11:31:19 Function failed, retrying in 3m42s -> 222.04s

2023/04/04 11:31:20 Function failed, retrying in 4m37s -> 277.56s

2023/04/04 11:31:21 Function failed, retrying in 5m46s -> 346.94s

  depends_on:
    - build-package
- timeout_in_minutes: 30
+ timeout_in_minutes: 90


Increased to 90 minutes so that the retries can complete.
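As a rough check of the waiting budget, the sketch below sums the delays for the default flag values (5s waiting time, growth factor 1.25, 20 retries, 60m cap). It assumes each delay is w * f^a truncated to whole seconds, which matches the logged values; totalWait and defaultsTotal are hypothetical names, not the PR's code:

```go
package main

import (
	"fmt"
	"math"
	"time"
)

// totalWait adds up the delays slept across all retries, assuming each delay
// is w*f^a truncated to whole seconds and capped at maxWait.
func totalWait(w, maxWait time.Duration, f float64, retries int) time.Duration {
	var total time.Duration
	for a := 0; a < retries; a++ {
		seconds := w.Seconds() * math.Pow(f, float64(a))
		d := time.Duration(seconds) * time.Second // drops the fractional part
		if d > maxWait {
			d = maxWait
		}
		total += d
	}
	return total
}

// defaultsTotal uses the default flag values from the diff above.
func defaultsTotal() time.Duration {
	return totalWait(5*time.Second, 60*time.Minute, 1.25, 20)
}

func main() {
	fmt.Println(defaultsTotal()) // prints "28m25s"
}
```

The 20 delays alone add up to 28m25s, which leaves almost no room in a 30-minute step timeout once the time spent on each trigger attempt is counted.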


Thanks, Mario. LGTM

Closes #1200

Probably related: when it tries to trigger the job, there is already an enqueued build pending to be started.

Example of the error:

This happens if the value

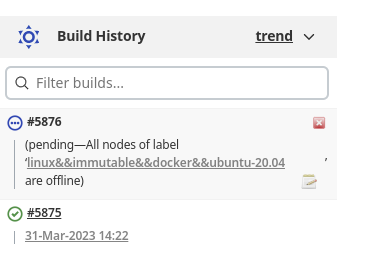

inQueueis true for a specific job. In the case of internal-ci instance, it looks like this happens if there is a job in the queue waiting for a node:Status of the job queue

inQueuevalue in Jenkins API responseExample of output when retries are used (Buildkite link):