GenQuery is an AI-powered platform designed to streamline academic research by enabling efficient article retrieval and query handling. It combines LangChain, OpenAI's GPT-3.5 Turbo, and FAISS. FAISS provides fast, cost-effective similarity search over document embeddings, and GPT-3.5 Turbo generates the answers.

- Efficient Query Handling: Process large documents by breaking them into manageable segments and minimizing token consumption.

- Fast Retrieval: Use FAISS similarity search to find the segments most relevant to each query.

- Seamless User Interaction: Query results are transparent, with links to the source URLs.
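The segmentation step can be roughly sketched as a function that splits text into fixed-size, overlapping chunks. This version uses only the standard library and counts whitespace-separated words as a stand-in for tokens. LangChain provides its own text splitters, and GenQuery's actual chunk size and overlap are not stated here, so the defaults are illustrative assumptions. `split_text` is a hypothetical name.

```python
def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words, so text near a chunk
    boundary keeps some surrounding context in the neighboring chunk.
    Words are a rough proxy for tokens (assumption).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in split_text(sample, chunk_size=4, overlap=1):
        print(chunk)
```

With `chunk_size=4, overlap=1`, the ten-word sample yields three chunks: `w0 w1 w2 w3`, `w3 w4 w5 w6`, and `w6 w7 w8 w9`.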

- Build an AI-driven platform using LangChain, OpenAI embeddings, and FAISS for efficient information retrieval.

- Implement Map-Reduce document chaining to minimize API costs while preserving data integrity.
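Map-Reduce document chaining can be sketched in a few lines. This minimal version assumes a hypothetical `llm` callable that wraps the chat model: each chunk is queried separately (map), and one final call merges the partial answers (reduce). LangChain offers this pattern as its `map_reduce` chain type; the function below only illustrates the shape and is not GenQuery's code.

```python
from typing import Callable


def map_reduce_answer(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    """Answer `question` over many chunks with a map step and a reduce step.

    `llm` is any function that sends a prompt to a model and returns its
    reply (hypothetical here; in GenQuery it would wrap GPT-3.5 Turbo).
    """
    # Map: query each chunk independently, so every prompt stays small.
    partials = [
        llm(f"Using only the text below, answer: {question}\n\n{chunk}")
        for chunk in chunks
    ]
    # Reduce: merge the per-chunk answers into a single final answer.
    combined = "\n".join(f"- {p}" for p in partials)
    return llm(f"Combine these partial answers to '{question}' into one answer:\n{combined}")


if __name__ == "__main__":
    def echo_llm(prompt: str) -> str:
        # Fake model for offline testing: returns the prompt's first line.
        return prompt.splitlines()[0]

    print(map_reduce_answer("What is FAISS?", ["chunk one", "chunk two"], echo_llm))
```

The sketch makes `len(chunks) + 1` model calls; in exchange, no single prompt has to hold the whole document.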

- LLMs (e.g., GPT-3.5 Turbo): Used for NLP tasks like query handling, summarization, and generating responses.

- Transformer Models: Their self-attention mechanism lets each token weigh context from the whole input, and it parallelizes well, which helps these models scale.

- FAISS: Performs fast similarity search over high-dimensional embedding vectors.

- LangChain: Manages data processing and integrates models.
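To make the self-attention entry concrete, here is scaled dot-product attention, `softmax(Q·Kᵀ / sqrt(d))·V`, in plain Python. It is a teaching sketch: real Transformers compute Q, K, and V with learned projection matrices and run several attention heads in parallel, all of which is omitted here. The function names are illustrative.

```python
import math

Matrix = list[list[float]]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention over row vectors (one row per token)."""
    d = len(k[0])
    out = []
    for qi in q:
        # Similarity of this token's query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Output row: attention-weighted average of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(self_attention(q, k, v))
```

Each output row mixes the value vectors according to how well that token's query matches every key, which is how the model draws on context from the entire input.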

Illustration of the system architecture: document retrieval, text segmentation, embedding construction, and FAISS-based query handling.

Illustration of Map-Reduce chaining, which processes each document chunk separately and then combines the partial results.

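The Stuff method sends all retrieved chunks to the LLM in a single prompt, minimizing the number of API calls as long as the combined text fits in the model's context window.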
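A rough illustration of the Stuff approach: every retrieved chunk is concatenated into one prompt, so a single model call answers the question. This sketch assumes word counts approximate tokens and uses an illustrative `max_words` budget; `stuff_prompt` is a hypothetical helper, not a LangChain API.

```python
def stuff_prompt(question: str, chunks: list[str], max_words: int = 3000) -> str:
    """Build one prompt containing every chunk, for a single model call."""
    context = "\n\n".join(chunks)
    # Word count is a rough proxy for tokens (assumption; real code would use a tokenizer).
    size = len(context.split()) + len(question.split())
    if size > max_words:
        raise ValueError(f"context of ~{size} words exceeds budget of {max_words}")
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(stuff_prompt("What does FAISS do?", ["FAISS searches vectors.", "LangChain chains calls."]))
```

When the stuffed context would not fit, a chain that processes chunks separately, such as Map-Reduce, is the usual fallback.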

Overview of the retrieval-augmented generation (RAG) system, which adds retrieved context to each query before the LLM answers it.
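The RAG prompt-building step might look like the following minimal sketch, which assumes each retrieved chunk carries the URL it was loaded from. `Chunk` and `build_rag_prompt` are hypothetical names. Numbering the chunks lets the model cite them, and returning the de-duplicated URLs supports showing source links next to each answer.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # URL the chunk was loaded from


def build_rag_prompt(question: str, retrieved: list[Chunk]) -> tuple[str, list[str]]:
    """Return (prompt, source URLs) for the retrieved chunks."""
    # Number each chunk so the model can cite it, e.g. "[2]".
    context = "\n".join(
        f"[{i}] {c.text} (source: {c.source})" for i, c in enumerate(retrieved, start=1)
    )
    prompt = (
        "Answer using only the numbered context and cite it by number.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
    # De-duplicate URLs while keeping retrieval order.
    sources = list(dict.fromkeys(c.source for c in retrieved))
    return prompt, sources
```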

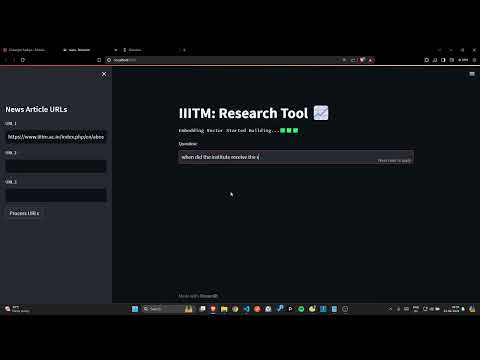

You can explore the tool in action by following this demo:

- Input: Load URLs or text files for processing.

- Process: The tool fetches, segments, and generates embeddings using OpenAI models.

- Retrieve: FAISS runs a similarity search and returns the segments most relevant to the query.
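The Process and Retrieve steps can be approximated without external services. The sketch below replaces OpenAI embeddings with a toy hashed bag-of-words vector (an assumption made purely so the code runs offline) and replaces FAISS with a brute-force index that ranks vectors by squared L2 distance, the same exact search that FAISS's `IndexFlatL2` performs. The real FAISS API works on NumPy arrays and returns distance and index arrays; `embed` and `FlatL2Index` here are hypothetical.

```python
import math
import re
import zlib

DIM = 256  # toy dimensionality; real embedding models use far more dimensions


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for word in re.findall(r"\w+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero
    return [x / norm for x in vec]


class FlatL2Index:
    """Exact nearest-neighbor search by squared L2 distance (brute force)."""

    def __init__(self) -> None:
        self.vectors: list[list[float]] = []

    def add(self, vectors: list[list[float]]) -> None:
        self.vectors.extend(vectors)

    def search(self, query: list[float], k: int) -> list[tuple[float, int]]:
        """Return up to k (distance, index) pairs, closest first."""
        scored = [
            (sum((a - b) ** 2 for a, b in zip(query, v)), i)
            for i, v in enumerate(self.vectors)
        ]
        scored.sort()
        return scored[:k]


if __name__ == "__main__":
    chunks = [
        "FAISS performs fast similarity search over dense vectors.",
        "LangChain connects document loaders, splitters and models.",
        "GPT-3.5 Turbo generates the final answer from retrieved text.",
    ]
    index = FlatL2Index()
    index.add([embed(c) for c in chunks])
    for dist, i in index.search(embed("similarity search with FAISS"), k=2):
        print(f"{dist:.3f}  {chunks[i]}")
```

Because the vectors are normalized, ranking by squared L2 distance gives the same order as ranking by cosine similarity.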

Clone the repository:

```bash
git clone https://github.com/yourusername/GenQuery.git
```