Fix tensor normalization in EmbeddingsPipeline #106

Merged

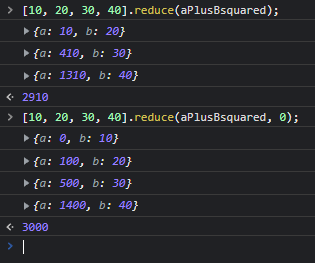

I was directly using the quantized all-MiniLM-L6-v2 onnx model to precompute some embeddings from Python, and I noticed some slight differences between those embeddings and the ones transformers.js generated. I found that the vector norm calculation wasn't squaring the first value of the vector:

`let norm = Math.sqrt(batch.data.reduce((a, b) => a + b * b))`

Adding an initial value of 0 to the `reduce` fixes this. I've verified that this fixed normalization calculation results in embeddings equal to the ones generated directly from the onnx model!
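For reviewers, here is a minimal sketch of why the missing initial value matters. The sample vector and variable names are hypothetical stand-ins for `batch.data` (assumed here to be a `Float32Array`), not code from the pipeline itself. When `reduce` is called without an initial value, it uses the first element as the starting accumulator, so that element is added unsquared.

```javascript
// Hypothetical sample vector standing in for batch.data (assumption).
const data = new Float32Array([3, 4]);

// Without an initial value, reduce starts with a = data[0],
// so the first element is never squared: 3 + 4*4 = 19.
const buggyNorm = Math.sqrt(data.reduce((a, b) => a + b * b));

// With an initial value of 0, every element is squared: 3*3 + 4*4 = 25.
const fixedNorm = Math.sqrt(data.reduce((a, b) => a + b * b, 0));

// Dividing by the correct norm gives a unit-length vector.
const normalized = Array.from(data, (x) => x / fixedNorm);

console.log(buggyNorm, fixedNorm, normalized);
```

The error is small when the first component is small relative to the rest of the vector, which would be consistent with the slight differences described above.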