CoSTAR Plan is a codebase for deep learning with robots. It is divided into two main parts: the CoSTAR Task Planner (CTP) library and CoSTAR Hyper.

Code for the paper Visual Robot Task Planning.

Code for the paper The CoSTAR Block Stacking Dataset: Learning with Workspace Constraints.

@article{hundt2019costar,
  title = {The CoSTAR Block Stacking Dataset: Learning with Workspace Constraints},
  author = {Andrew Hundt and Varun Jain and Chia-Hung Lin and Chris Paxton and Gregory D. Hager},
  journal = {Intelligent Robots and Systems (IROS), 2019 IEEE International Conference on},
  year = 2019,
  url = {https://arxiv.org/abs/1810.11714}
}

Code instructions are in the CoSTAR Hyper README.md.

The CoSTAR Planner is part of the larger CoSTAR project. It integrates learning-from-demonstration and task planning capabilities into the larger CoSTAR framework in several ways.

Specifically, it is a project for creating task and motion planning algorithms that use machine learning to solve challenging problems in a variety of domains. This code provides a testbed for complex task and motion planning search algorithms.
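As a toy illustration of the kind of task-level search such a testbed is meant to host, the sketch below runs breadth-first search over symbolic block-stacking states. The state encoding (stacks listed bottom to top), the move set, and the names `normalize`, `successors`, and `bfs_plan` are hypothetical and are not part of the CTP API. A real task and motion planner would also have to check that each symbolic move is physically feasible.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical symbolic state: a sorted tuple of stacks, each listed bottom-to-top.
State = Tuple[Tuple[str, ...], ...]
Action = Tuple[str, str]  # (block moved, destination block or "table")


def normalize(stacks) -> State:
    """Drop empty stacks and sort so equivalent arrangements compare equal."""
    return tuple(sorted(tuple(s) for s in stacks if s))


def successors(state: State):
    """Yield (action, next_state) for every legal move of a top block."""
    for i, src in enumerate(state):
        block = src[-1]
        rest = [list(s) for s in state]
        rest[i].pop()  # lift the top block off stack i
        if len(src) > 1:  # putting a block on the table only helps if it was stacked
            yield (block, "table"), normalize(rest + [[block]])
        for j, dst in enumerate(state):
            if j != i:
                moved = [list(s) for s in rest]
                moved[j].append(block)  # place it on top of stack j
                yield (block, dst[-1]), normalize(moved)


def bfs_plan(start, goal) -> Optional[List[Action]]:
    """Breadth-first search; returns a plan with the fewest moves, or None."""
    start, goal = normalize(start), normalize(goal)
    parents: Dict[State, Optional[Tuple[State, Action]]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            plan: List[Action] = []
            while parents[state] is not None:
                prev, action = parents[state]
                plan.append(action)
                state = prev
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in parents:
                parents[nxt] = (state, action)
                frontier.append(nxt)
    return None


# Toy example: B sits on A and C is alone; the goal is the tower C-B-A.
plan = bfs_plan([["A", "B"], ["C"]], [["C", "B", "A"]])
# plan == [("B", "C"), ("A", "B")]: put B on C, then A on B.
```

Because BFS expands states in order of depth, the first plan it finds uses the fewest symbolic moves; learned models in a planner like CTP would typically be used to guide or prune this kind of search rather than enumerate everything.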

The goal is to describe example problems where the actor must move around in the world and plan complex interactions with other actors or the environment that correspond to high-level symbolic states. Among these is our Visual Task Planning project, in which robots learn representations of their world, use them to imagine possible futures, and then plan over those imagined futures.
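The "imagine, then plan" loop can be sketched as a search over short action sequences rolled out entirely in a latent space. In this toy sketch, `encode`, `predict_next`, and `score` are hypothetical stand-ins for learned networks (here, hand-written functions on a 2-D position), and exhaustive enumeration replaces whatever search the actual Visual Task Planning code uses.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

Latent = Tuple[float, ...]


def plan_in_imagination(
    observation: Sequence[float],
    actions: Sequence[str],
    encode: Callable[[Sequence[float]], Latent],
    predict_next: Callable[[Latent, str], Latent],
    score: Callable[[Latent], float],
    horizon: int = 2,
) -> Tuple[Tuple[str, ...], float]:
    """Return the action sequence whose imagined final state scores highest.

    Each candidate sequence is rolled out purely in latent space; the real
    world is never touched until a plan has been chosen.
    """
    start = encode(observation)
    best_plan: Tuple[str, ...] = ()
    best_score = float("-inf")
    for plan in product(actions, repeat=horizon):
        latent = start
        for action in plan:
            latent = predict_next(latent, action)  # imagine one step ahead
        value = score(latent)
        if value > best_score:  # ties keep the first plan found
            best_plan, best_score = plan, value
    return best_plan, best_score


# Hypothetical toy stand-ins: the latent state is an (x, y) position, each
# action moves it one unit, and the score rewards ending near a goal.
GOAL = (2.0, 1.0)
MOVES = {"left": (-1.0, 0.0), "right": (1.0, 0.0), "up": (0.0, 1.0), "down": (0.0, -1.0)}


def encode(obs: Sequence[float]) -> Latent:
    return (float(obs[0]), float(obs[1]))


def predict_next(latent: Latent, action: str) -> Latent:
    dx, dy = MOVES[action]
    return (latent[0] + dx, latent[1] + dy)


def score(latent: Latent) -> float:
    return -abs(latent[0] - GOAL[0]) - abs(latent[1] - GOAL[1])


plan, value = plan_in_imagination((0.0, 0.0), list(MOVES), encode, predict_next, score, horizon=3)
```

Enumerating all `len(actions) ** horizon` sequences is only viable for tiny problems and is used here for clarity; the point is that candidate futures are compared before any action is executed.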

To run the deep learning examples, you will need TensorFlow and Keras, plus a number of Python packages. To run robot experiments, you'll need a simulator (Gazebo or PyBullet) and ROS Indigo or Kinetic. Other versions of ROS may work but have not been tested. If you want to stick to the toy examples, you do not need to use this as a ROS package.
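Before choosing which examples to run, it can help to check which of these dependencies Python can find. This is a minimal sketch that assumes the usual import names (`tensorflow`, `keras`, `pybullet`, and `rospy` for ROS 1); it only checks that each module can be located, not which version is installed.

```python
import importlib.util
from typing import Dict, Iterable

# Import names assumed for the dependencies listed above; adjust as needed.
DEFAULT_MODULES = ("tensorflow", "keras", "pybullet", "rospy")


def available_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it (without importing it)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in available_modules().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

If only `rospy` is missing, the ROS-based robot experiments are unavailable, but the toy examples that do not need ROS can still be run.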

About this repository: CTP is a single-repository project, so all the custom code you need should be in one place: here. There are exceptions, such as the CoSTAR Stack for real robot execution, but these are generally not necessary. The minimal installation of CTP is simply to install the costar_models package as a normal Python package, ignoring everything else.

- PyBullet Block Stacking download tar.gz

- Sample Husky Data download tar.gz

- Classic CoSTAR Real Robot Data download tar.gz
  - Early version, deprecated in favor of the full CoSTAR Block Stacking Dataset.

- 0. Introduction

- 1. Installation Guide

- 2. Approach: about CTP

- 2.1 Software Design: high-level notes

- 3. Machine Learning Models: using the command line tool

- 3.1 Data collection: data collection with a real or simulated robot

- 3.2 MARCC instructions: learning models using JHU's MARCC cluster

- 3.3 Generative Adversarial Models

- 3.4 SLURM Utilities: tools for using slurm on MARCC

- 4. Creating and training a custom task: overview of task representations

- 4.1 Generating a task from data: generate task from demonstrations

- 4.2 Task Learning: specific details

- 5. CoSTAR Simulation: simulation intro

- 5.1 Simulation Experiments: information on experiment setup

- 5.2 PyBullet Sim: an alternative to Gazebo that may be preferable in some situations

- 5.3 costar_bullet quick start: How to run tasks, generate datasets, train models, and extend costar_bullet with your own components.

- 5.4 Adding a robot to the ROS code: NOT using Bullet sim

- 6. Husky robot: start the APL Husky sim

- 7. TOM robot: introducing the TOM robot from TUM

- 7.1 TOM Data: data necessary for TOM

- 7.2 The Real TOM: details about parts of the system for running on the real TOM

- 8. CoSTAR Robot: execution with a standard UR5

- CoSTAR Simulation: Gazebo simulation and ROS execution

- CoSTAR Task Plan: the high-level python planning library

- CoSTAR Gazebo Plugins: assorted plugins for integration

- CoSTAR Models: tools for learning deep neural networks

- CTP Tom: specific bringup and scenarios for the TOM robot from TU Munich

- CTP Visual: visual robot task planner

- setup: contains setup scripts

- slurm: contains SLURM scripts for running on MARCC

- command: contains scripts with example CTP command-line calls

- docs: markdown files for information that applies to all of CTP rather than to a particular ROS package

- photos: example images

- learning_planning_msgs: ROS messages for data collection when doing learning from demonstration in ROS

- Others are temporary packages for various projects

Many of these sections are a work in progress; if you have any questions, shoot me an email (cpaxton@jhu.edu).

This code is maintained by:

- Chris Paxton (cpaxton@jhu.edu)

- Andrew Hundt (ATHundt@gmail.com)

@article{paxton2018visual,
  author = {Chris Paxton and Yotam Barnoy and Kapil D. Katyal and Raman Arora and Gregory D. Hager},
  title = {Visual Robot Task Planning},
  journal = {ArXiv},
  year = {2018},
  url = {http://arxiv.org/abs/1804.00062},
  archivePrefix = {arXiv},
  eprint = {1804.00062},
  biburl = {https://dblp.org/rec/bib/journals/corr/abs-1804-00062},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}

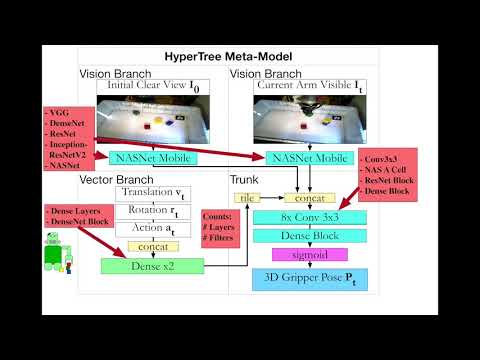

Training Frankenstein's Creature To Stack: HyperTree Architecture Search

@article{hundt2018hypertree,
  author = {Andrew Hundt and Varun Jain and Chris Paxton and Gregory D. Hager},
  title = "{Training Frankenstein's Creature to Stack: HyperTree Architecture Search}",
  journal = {ArXiv},
  archivePrefix = {arXiv},
  eprint = {1810.11714},
  year = 2018,
  month = Oct,
  url = {https://arxiv.org/abs/1810.11714}
}