A Unified Open-Source Framework for Realtime Multimodal Physiological Sensing, Edge AI, and Intervention in Closed-Loop Smart Healthcare Applications

Quickstart • Docs • GUI • Showcase • Cite • Contact

HERMES is named after the Greek god of communication and speed, protector of information, and herald of the gods. He embodies smooth and reliable communication, which matches the vision of this framework: to facilitate reliable and fast exchange of continuously generated multimodal physiological and external data across distributed wireless and wired multi-sensor hosts, for synchronized realtime data collection, in-the-loop AI stream processing, and analysis in intelligent med- and health-tech (wearable) applications.

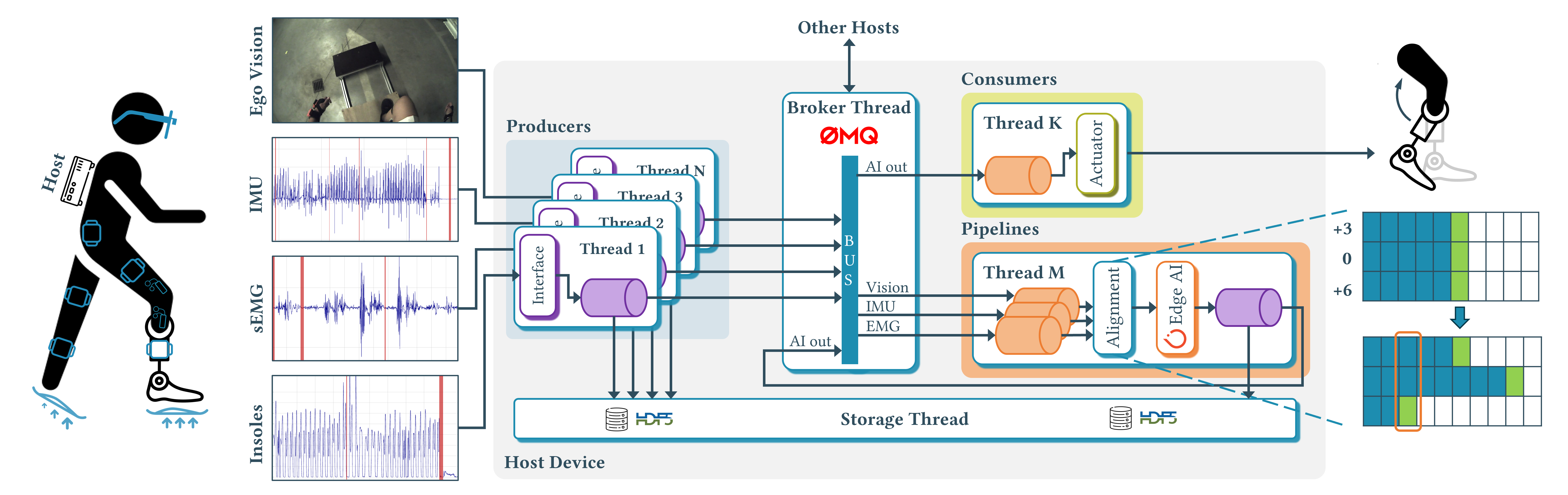

HERMES offers out-of-the-box streaming integrations for a number of commercial sensor devices and systems and high-resolution cameras, templates for extension with custom user devices, and a ready-made wrapper for easy PyTorch AI model insertion. It reliably and synchronously captures heterogeneous data across distributed interconnected devices on a local network in a continuous manner, and enables realtime AI processing at the edge toward personalized, intelligent closed-loop interventions for the user. All continuously acquired data is periodically flushed to disk for as long as the system has disk space: video as MKV/MP4 files and sensor data as HDF5 files.
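To make the "periodically flushed to disk" idea concrete, here is a minimal, standard-library-only sketch of a buffer that accumulates samples and hands them to a writer at a fixed period, skipping the write when free disk space runs low. It is an illustration of the general pattern only, not HERMES's actual implementation: the class name `PeriodicFlushBuffer`, its parameters, and the `sink` callback are all hypothetical.

```python
import shutil
import time


class PeriodicFlushBuffer:
    """Hypothetical helper: buffers samples and flushes them at a fixed period."""

    def __init__(self, sink, period_s=5.0, min_free_bytes=0, path=".",
                 clock=time.monotonic):
        self._sink = sink                      # callable that persists a list of samples
        self._period_s = period_s              # minimum time between automatic flushes
        self._min_free_bytes = min_free_bytes  # stop writing below this free space
        self._path = path                      # filesystem location to check for space
        self._clock = clock                    # injectable clock, handy for testing
        self._samples = []
        self._last_flush = clock()

    def has_disk_space(self):
        """True while the target filesystem has more free space than the threshold."""
        return shutil.disk_usage(self._path).free > self._min_free_bytes

    def append(self, sample):
        """Store one sample and flush if the period has elapsed."""
        self._samples.append(sample)
        if self._clock() - self._last_flush >= self._period_s:
            self.flush()

    def flush(self):
        """Hand buffered samples to the sink; keep them in memory if disk is full."""
        if self._samples and self.has_disk_space():
            self._sink(self._samples)
            self._samples = []
        self._last_flush = self._clock()


if __name__ == "__main__":
    written = []  # stands in for a real file writer
    buffer = PeriodicFlushBuffer(sink=written.append, period_s=0.0)
    for value in range(3):
        buffer.append(value)
    print(written)
```

In a real deployment the `sink` would append to a video or HDF5 file; here it is a plain list so the sketch runs without extra dependencies.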

Create a Python 3 virtual environment with `python -m venv .venv` (Python >= 3.7).

Activate it with `source .venv/bin/activate` on Linux or `.venv\Scripts\activate` on Windows.

Install HERMES into your project alongside your other dependencies with a single command:

`pip install pysio-hermes`

All the integrated, validated, and supported sensor devices are separately installable as `pysio-hermes-<subpackage_name>`. For example,

`pip install pysio-hermes-torch`

installs the AI processing subpackage that wraps user-specified PyTorch models.
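As a quick sanity check of the installation steps above, the following sketch verifies the interpreter version, reports whether a virtual environment is active, and lists which HERMES distributions are installed. It uses only the standard library. The helper names (`hermes_distributions`, `in_virtualenv`, `installed_version`) are hypothetical and not part of HERMES; the distribution names simply follow the `pysio-hermes-<subpackage_name>` pattern.

```python
import sys

try:
    from importlib import metadata  # in the standard library from Python 3.8
except ImportError:  # Python 3.7 would need the importlib_metadata backport
    metadata = None

BASE_DISTRIBUTION = "pysio-hermes"


def hermes_distributions(subpackages):
    """Return pip distribution names for the core package plus given subpackages."""
    return [BASE_DISTRIBUTION] + [f"{BASE_DISTRIBUTION}-{name}" for name in subpackages]


def in_virtualenv():
    """True when the interpreter runs inside an environment created by venv."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def installed_version(distribution):
    """Return the installed version string, or None if not installed or unknown."""
    if metadata is None:
        return None
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    assert sys.version_info >= (3, 7), "HERMES requires Python >= 3.7"
    print("virtual environment active:", in_virtualenv())
    for dist in hermes_distributions(["torch"]):
        print(dist, "->", installed_version(dist) or "not installed")
```

Pass any of the subpackage names from the list of supported devices to `hermes_distributions` to check the ones your setup needs.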

List of supported devices (continuously updated)

Some subpackages require OEM software installation; check each below for detailed prerequisites.

| Subpackage | Description |
| --- | --- |
| torch | Wrapper for PyTorch AI models |
| pupillabs | Pupil Labs Core smartglasses |
| basler | Basler cameras |
| dots | Movella DOTs IMUs |
| mvn | Xsens MVN Analyze MoCap suit |
| awinda | Xsens Awinda IMUs |
| cometa | Cometa WavePlus sEMG |
| moticon | Moticon OpenGo pressure insoles |
| tmsi | TMSi SAGA physiological signals |
| vicon | Vicon Nexus capture system |
| moxy | Moxy muscle oxygenation monitor |

The following subpackages are in development.

Check out the full documentation site for more usage examples, architecture overview, detailed extension guide, and FAQs.

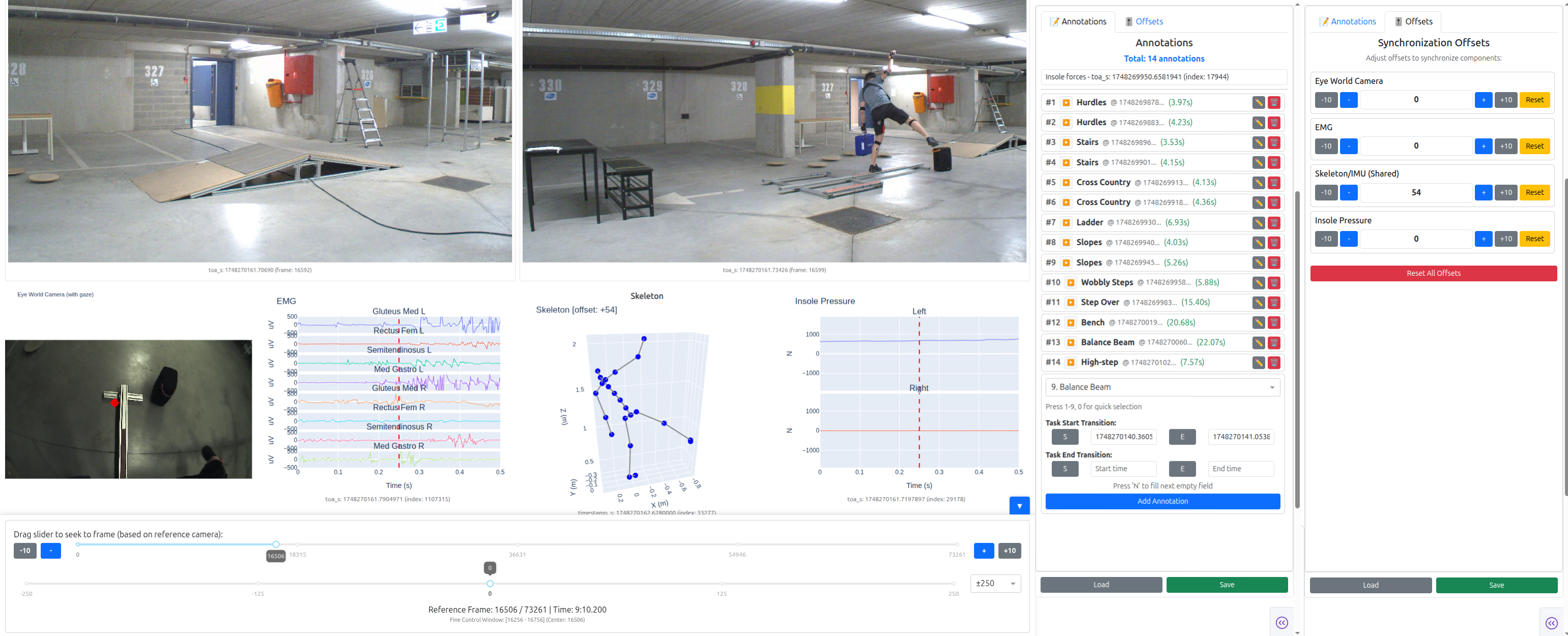

We developed PysioViz, a complementary dashboard based on Dash Plotly, for analysis and annotation of the collected multimodal data. We use it ourselves to generate ground truth labels for the AI training workflows. Check it out and leave feedback!

These are some of our own projects enabled by HERMES, shared to encourage you to adopt it in your smart closed-loop health-tech use cases.

AI-enabled intent prediction for high-level locomotion mode selection in a smart leg prosthesis

Realtime automated cueing for freezing-of-gait Parkinson's patients in free-living conditions

Personalized level of assistance in prolonged-use rehabilitation and support exoskeletons

This source code is licensed under the MIT license - see the LICENSE file for details.

The project's logo is distributed under the CC BY-NC-ND 4.0 license - see the LOGO-LICENSE.

When using HERMES in your project, research, or product, please cite the following and notify us so we can update the index of success stories enabled by HERMES.

This project was primarily written by Maxim Yudayev while at the Department of Electrical Engineering, KU Leuven.

This study was funded, in part, by the AidWear project funded by the Federal Public Service for Policy and Support, the AID-FOG project by the Michael J. Fox Foundation for Parkinson’s Research under Grant No.: MJFF-024628, and the Flemish Government under the Flanders AI Research Program (FAIR).

HERMES is a "Ship of Theseus"[1] of ActionSense that started as a fork and became a complete architectural rewrite of the system from the ground up to bridge the fundamental gaps in the state-of-the-art, and to match our research group's needs in realtime deployments and reliable data acquisition. Although there is no part of ActionSense in HERMES, we believe that its authors deserve recognition as inspiration for our system.

Special thanks for contributions, usage, bug reports, good times, and feature requests to Juha Carlon (KU Leuven), Stefano Nuzzo (VUB), Diwas Lamsal (KU Leuven), Vayalet Stefanova (KU Leuven), Léonore Foguenne (ULiège).