Description

Describe the bug

This bug concerns the QDQ flow of static quantization. For asymmetric quantization, the quantization tool correctly optimizes the graph by removing the Relu activation and relying on the quantization of the previous operator's output to perform the clipping. For symmetric quantization, however, the same strategy of eliminating the Relu operator produces incorrect results, because symmetric quantization does not clip at zero.
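The effect can be reproduced with plain arithmetic. The following is a minimal sketch, not code from the quantization tool: `fake_quantize` is a hypothetical helper, and the calibrated range `[0, 6]` and the int8 bounds `-127..127` are assumptions chosen for illustration. It quantizes and dequantizes a few pre-Relu values with uint8 parameters (zero_point 0) and with symmetric int8 parameters.

```python
def fake_quantize(x, scale, zero_point, qmin, qmax):
    """Quantize x to an integer grid, saturate to [qmin, qmax], then dequantize."""
    q = round(x / scale) + zero_point
    q = max(qmin, min(qmax, q))  # saturation is the only clipping that happens
    return (q - zero_point) * scale


# Pre-Relu values; the output range seen after Relu is assumed to be [0, 6].
values = [-3.0, -0.5, 0.0, 2.0, 6.0]
rmax = 6.0

# Asymmetric uint8 with zero_point 0: negatives saturate at qmin, which maps to 0.0.
asym = [fake_quantize(v, rmax / 255, 0, 0, 255) for v in values]

# Symmetric int8 with zero_point 0: negatives are representable and pass through.
sym = [fake_quantize(v, rmax / 127, 0, -127, 127) for v in values]

print("asymmetric uint8:", asym)
print("symmetric int8:  ", sym)
```

With the uint8 parameters the saturation at the lower bound acts as the Relu, so removing the operator is harmless. With the symmetric parameters negative inputs survive the round trip, so the Relu must stay in the graph.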

The registry.py file registers Relu as a QDQRemovableActivation regardless of whether symmetric or asymmetric quantization is requested.

This is incorrect. A programmatic way to specify Relu as either QDQRemovableActivation or QDQDirect8BitOp is needed.
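One possible shape for such a selection is sketched below. This is an illustration under stated assumptions, not the tool's API: `relu_is_removable` and `select_relu_handler` are hypothetical names, and the handlers are represented by plain strings standing in for the two classes named above. The assumed rule is that Relu may be removed only when the output quantization cannot represent negative values, that is, when the zero point sits at the lower bound of the integer range.

```python
def relu_is_removable(zero_point: int, qmin: int) -> bool:
    """Hypothetical check: the smallest representable real value is
    (qmin - zero_point) * scale, which is zero only if zero_point == qmin."""
    return zero_point == qmin


def select_relu_handler(zero_point: int, qmin: int) -> str:
    """Return the name of the handler to use for Relu (strings are stand-ins)."""
    if relu_is_removable(zero_point, qmin):
        return "QDQRemovableActivation"  # clipping is done by the quantizer itself
    return "QDQDirect8BitOp"  # keep Relu in the graph


# uint8 with zero_point 0: Relu can be folded away.
print(select_relu_handler(zero_point=0, qmin=0))
# Symmetric int8 with zero_point 0: Relu must be kept.
print(select_relu_handler(zero_point=0, qmin=-128))
```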

Urgency

Since a workaround has been identified (hardcoding the registry.py entry to the required setting), this is not urgent. However, because Relu activation functions are very common, it should be addressed in a timely manner.

System information

- Linux Ubuntu 18.04

- pip3 install

- ONNX Runtime version:

- onnx 1.11.0

- onnx-simplifier 0.3.10

- onnxoptimizer 0.2.7

- onnxruntime 1.11.1

- Python 3.7.5

To Reproduce

Attached is an ONNX file of an MLP with two Gemm layers, each followed by a Relu activation function. A script that performs static symmetric quantization (int8 activations and int8 weights) is also attached, along with the ONNX file generated by the current tool (postfix _incorrect) and a correct ONNX file (postfix _correct).

Expected behavior

The expected behavior is that the QDQ ONNX file still contains the Relu operator and that, when the network is run, the output contains only positive values and zeros.

Screenshots

the original onnx graph

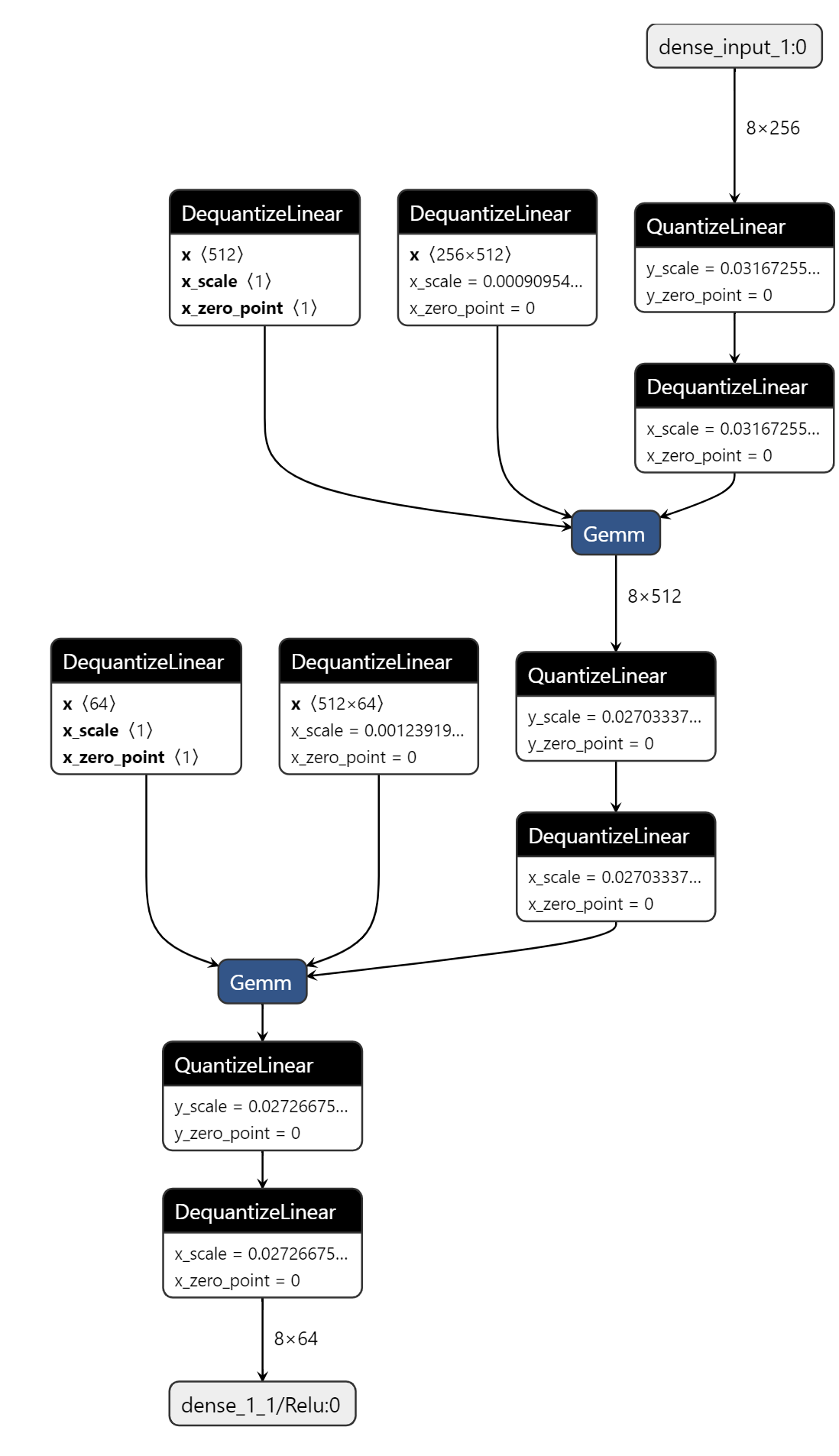

incorrectly generated qdq graph (static symmetric quantization)

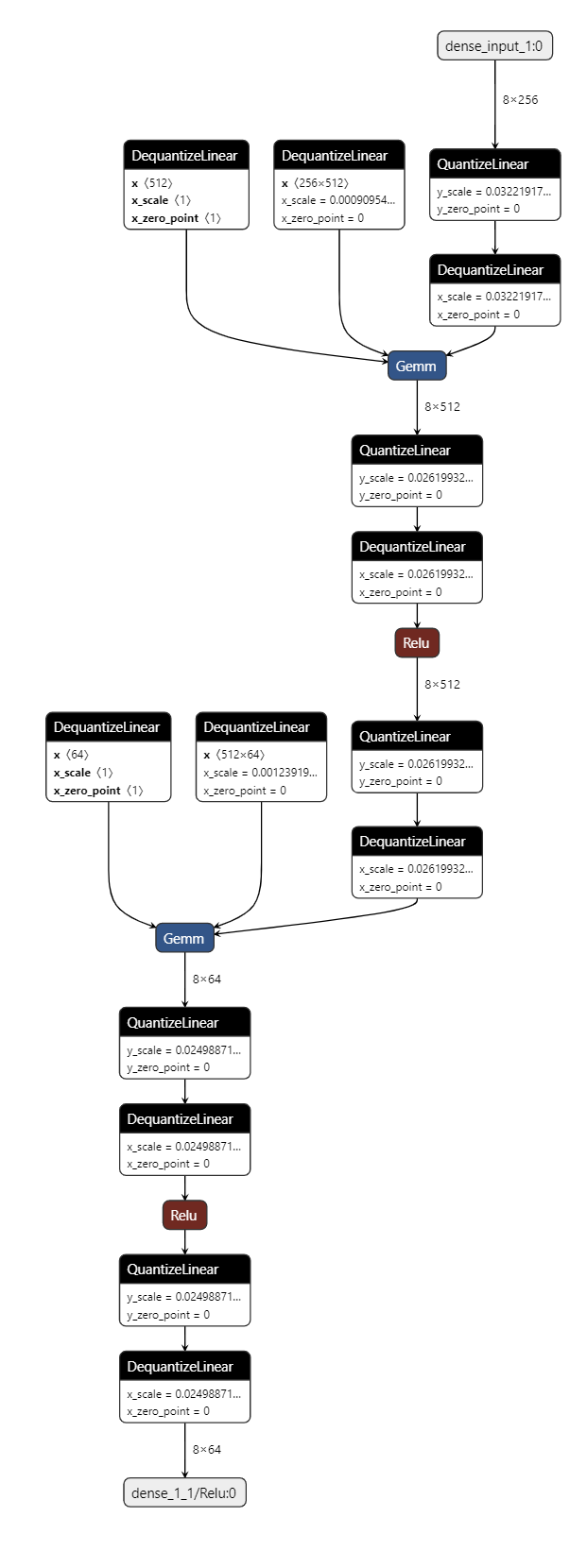

the expected graph

Additional context

Admittedly, using symmetric quantization on activations that have passed through Relu does not utilize the full 8-bit range. Ideally there would be a third option (not currently supported by the quantization tool) that allows each activation within a single ONNX model to be either int8 or uint8 (which is equivalent to uint8 with a zero_point of either 128 or 0). Currently, the asymmetric quantization option generates an optimal zero_point that can take any value. As a future feature request, a "restricted asymmetric" option would be highly desirable.
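To make the request concrete, here is a rough sketch of what a "restricted asymmetric" parameter choice could look like. The function `restricted_asymmetric_params` is hypothetical and does not exist in the quantization tool. It assumes uint8 storage, a positive `rmax`, and a zero_point limited to 0 (for non-negative data such as Relu outputs) or 128 (the symmetric case).

```python
def restricted_asymmetric_params(rmin: float, rmax: float, num_bits: int = 8):
    """Hypothetical: pick (scale, zero_point) with zero_point restricted to {0, 128}."""
    levels = 2 ** num_bits  # 256 integer levels for 8 bits
    if rmin >= 0:
        # Non-negative data: use all levels for [0, rmax].
        zero_point = 0
        scale = rmax / (levels - 1)
    else:
        # Signed data: symmetric around zero, half the levels per sign.
        zero_point = levels // 2
        scale = max(abs(rmin), abs(rmax)) / (levels // 2 - 1)
    return scale, zero_point


relu_scale, relu_zp = restricted_asymmetric_params(0.0, 6.0)
sym_scale, sym_zp = restricted_asymmetric_params(-6.0, 6.0)
print(relu_scale, relu_zp)
print(sym_scale, sym_zp)
```

For the same maximum value, the symmetric step size is about twice the unsigned one (255/127), which is the loss in resolution described above for post-Relu activations.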

onnxruntime_bug.zip