
Description

Describe the bug

Hi, I built and installed ONNX Runtime with TensorRT from source by following this guide: https://onnxruntime.ai/docs/build/eps.html#tensorrt

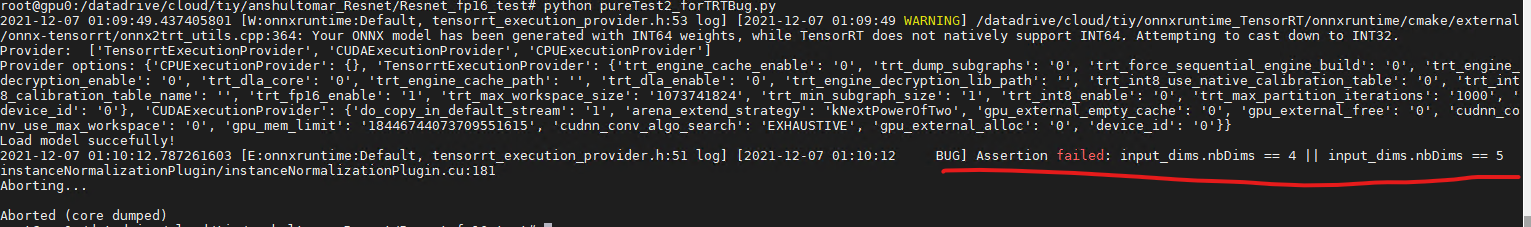

However, when I used it to run an FP16 model with "TensorrtExecutionProvider", it failed with the following error:

```
2021-12-07 00:43:42.752827823 [E:onnxruntime:Default, tensorrt_execution_provider.h:51 log] [2021-12-07 00:43:42 BUG] Assertion failed: input_dims.nbDims == 4 || input_dims.nbDims == 5
instanceNormalizationPlugin/instanceNormalizationPlugin.cu:181
Aborting...

Aborted (core dumped)
```

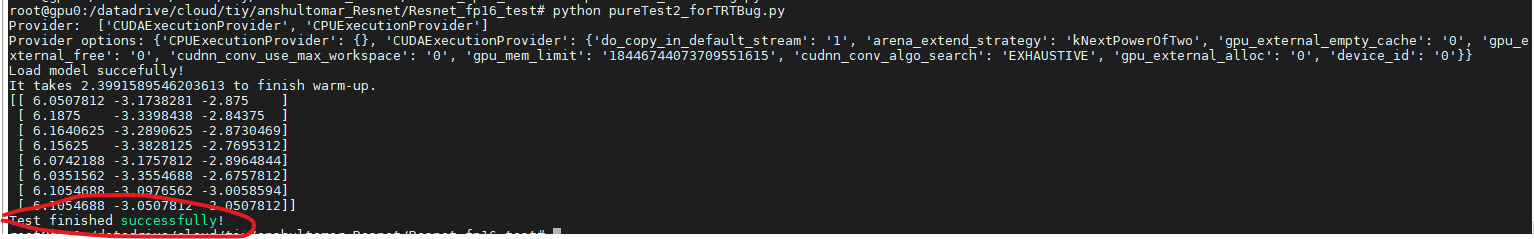

However, if I run the same model with "CUDAExecutionProvider", it works fine.

So, is this a bug in ONNX Runtime's TensorRT execution provider? How can I solve it?
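For reference, the assertion in the log says the TensorRT InstanceNormalization plugin only accepts 4-D or 5-D inputs, whereas the ONNX InstanceNormalization operator is defined for any input of rank 3 or higher, (N, C, D1, ...). The NumPy sketch below uses hypothetical helpers that are not part of ONNX Runtime or TensorRT: `instance_norm` follows the ONNX formula, `trt_plugin_accepts` simply mirrors the logged assertion, and `instance_norm_via_4d` illustrates one possible workaround. It assumes, without having verified it for this model, that the failing node receives a 3-D tensor: adding a trailing size-1 axis (as an Unsqueeze/Squeeze pair around the node would) leaves the result unchanged.

```python
import numpy as np


def instance_norm(x, scale, bias, epsilon=1e-5):
    """Reference InstanceNormalization per the ONNX operator definition:
    y = scale * (x - mean) / sqrt(var + epsilon) + B, where mean and var are
    computed per sample and per channel over all spatial axes (2 .. rank-1)."""
    if x.ndim < 3:
        raise ValueError("InstanceNormalization expects rank >= 3: (N, C, D1, ...)")
    axes = tuple(range(2, x.ndim))
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    # Reshape per-channel scale/bias so they broadcast over the spatial axes.
    shape = (1, -1) + (1,) * (x.ndim - 2)
    return scale.reshape(shape) * (x - mean) / np.sqrt(var + epsilon) + bias.reshape(shape)


def trt_plugin_accepts(shape):
    """Hypothetical helper mirroring the logged assertion:
    input_dims.nbDims == 4 || input_dims.nbDims == 5."""
    return len(shape) in (4, 5)


def instance_norm_via_4d(x, scale, bias, epsilon=1e-5):
    """Hypothetical workaround for a 3-D input (N, C, L): add a trailing
    size-1 axis (like an ONNX Unsqueeze), normalize in 4-D, then drop the
    axis again (like a Squeeze). The size-1 axis does not change the
    per-channel mean or variance, so the result matches the 3-D version."""
    y4 = instance_norm(x[..., np.newaxis], scale, bias, epsilon)
    return y4[..., 0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scale = rng.standard_normal(3)
    bias = rng.standard_normal(3)
    x3 = rng.standard_normal((2, 3, 16))  # a 3-D input the plugin would reject
    print("plugin accepts (2, 3, 16):", trt_plugin_accepts(x3.shape))
    print("plugin accepts (2, 3, 16, 1):", trt_plugin_accepts(x3[..., np.newaxis].shape))
    print("4-D route matches 3-D result:",
          np.allclose(instance_norm(x3, scale, bias), instance_norm_via_4d(x3, scale, bias)))
```

Whether the real graph can be rewritten this way depends on which InstanceNormalization node triggers the assertion, which the log above does not show.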

Urgency

This bug prevents me from using ORT+TRT.

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Ubuntu 18.04

- ONNX Runtime installed from (source or binary): latest source code

- ONNX Runtime version: 1.10.0

- Python version: 3.6

- Visual Studio version (if applicable): N/A

- GCC/Compiler version (if compiling from source): 7.5.0

- CUDA/cuDNN version: CUDA 11.5, CuDNN 8.3.1, TensorRT 8.2.1

- GPU model and memory: V100, 16GB

To Reproduce

To reproduce, use the model and code below:

- Model: https://drive.google.com/file/d/1lvoLHigqiWaB3eFPoStQ22L5Avh316h8/view?usp=sharing
- Code:

```python
import time

import numpy as np
import onnxruntime as ort

np.random.seed(123)
input_data = np.random.rand(8, 3, 480, 480).astype(np.float32)


def onnx_evaluate():
    sess_options = ort.SessionOptions()
    sess_options.graph_optimization_level = ort.GraphOptimizationLevel.ORT_ENABLE_ALL

    # TensorRT EP is tried first (with FP16 enabled); nodes it does not take
    # are assigned to the CUDA EP.
    sess = ort.InferenceSession(
        "graph_opset12_optimized_fp16_keep_io_True_unblockInstanceNormalization.onnx",
        sess_options,
        providers=[
            ("TensorrtExecutionProvider", {
                'trt_fp16_enable': True,
            }),
            "CUDAExecutionProvider",
        ],
    )

    input_name = sess.get_inputs()[0].name
    label_name = sess.get_outputs()[0].name
    options = sess.get_provider_options()
    print("Provider: ", sess.get_providers())
    print("Provider options:", options)
    print("Model loaded successfully!")

    # The first run includes one-time setup cost, so it is timed as warm-up.
    warm_up_start_stamp = time.time()
    pred = sess.run([label_name], {input_name: input_data})[0]
    print(f"It takes {time.time() - warm_up_start_stamp} to finish warm-up.")
    print(pred)
    print("Test finished successfully!")


onnx_evaluate()
```

But if we change the providers list to CUDAExecutionProvider only, as below, the model runs fine:

```python
sess = ort.InferenceSession(
    "graph_opset12_optimized_fp16_keep_io_True_unblockInstanceNormalization.onnx",
    sess_options,
    providers=[
        # ("TensorrtExecutionProvider", {
        #     'trt_fp16_enable': True,
        # }),
        "CUDAExecutionProvider",
    ],
)
```

Expected behavior

I expect TensorrtExecutionProvider to at least run this model instead of aborting.