Description

Describe the bug

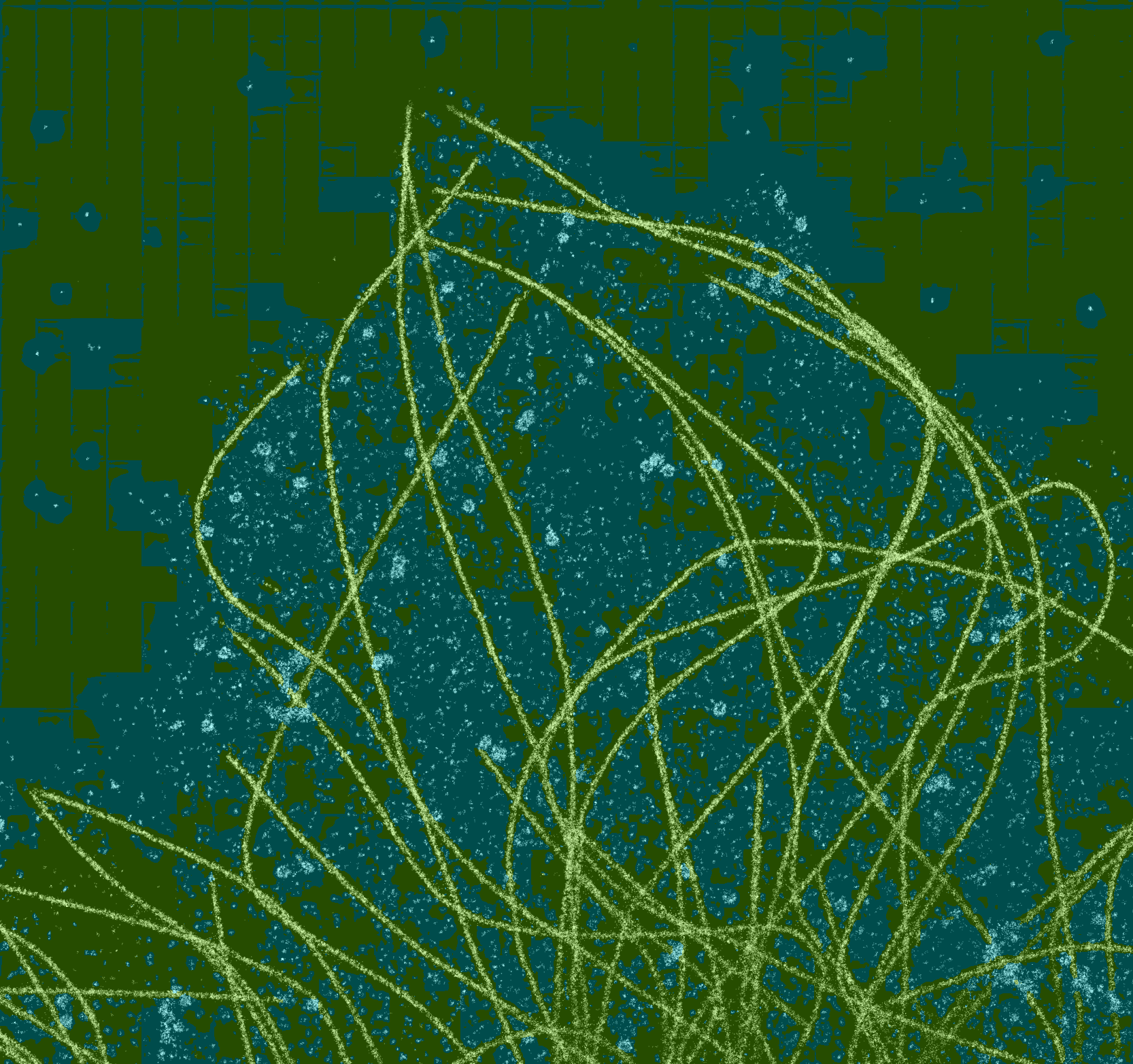

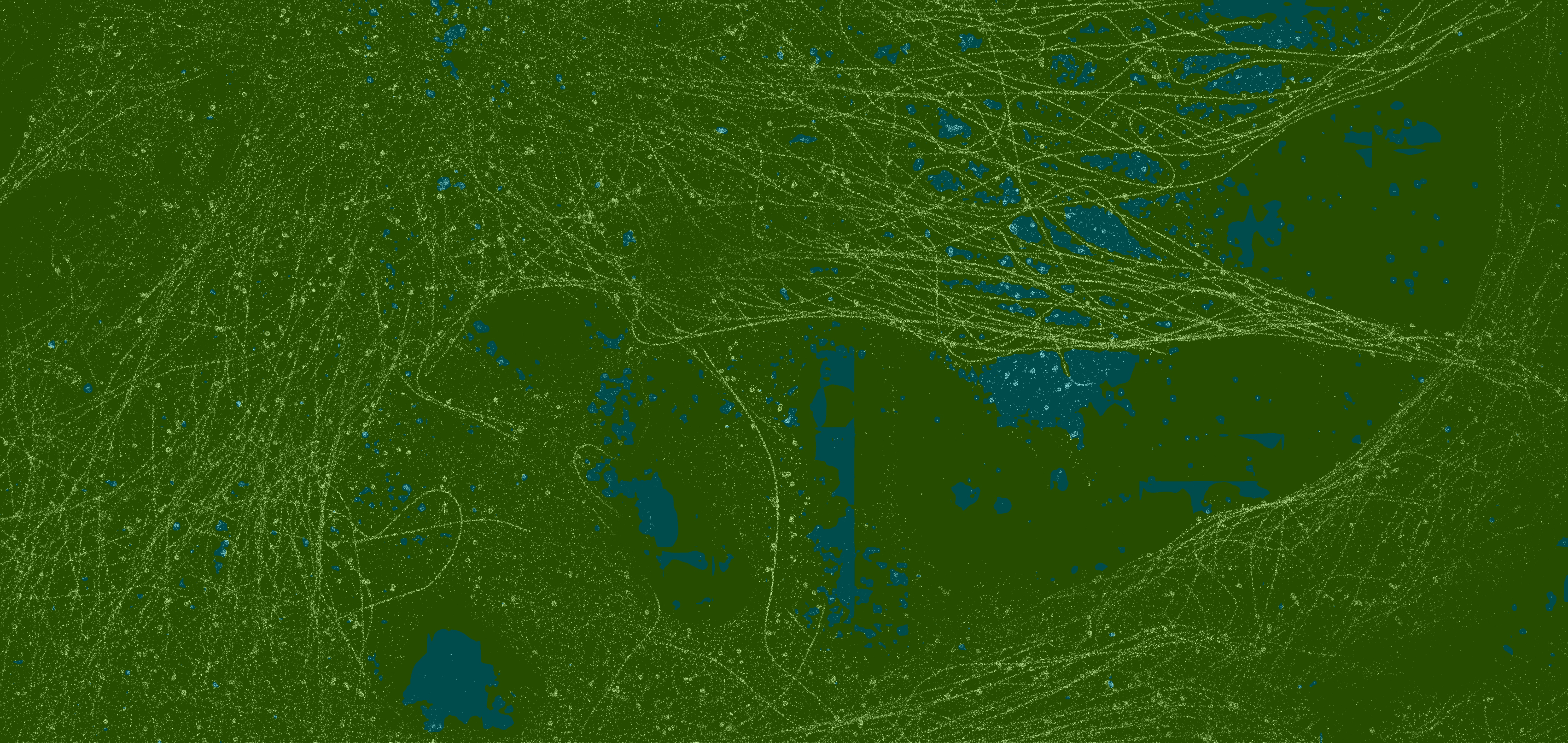

I trained a model on images with extremely small features (around 5-20 px in size), while the images themselves are about 6000 px in size. Overall the situation is similar to satellite image segmentation. The bug is that test_cfg mode='slide' performs inadequately during inference: it is worse than mode='whole' almost all the time. I tested different crop_size and stride combinations, but that does not change the situation much.

I used 256 px crops in training, taking random crops from the 6000 px images. It would be logical for test_cfg slide with a crop_size of 256 and a stride of around 150-200 to perform best on similar 6000 px test images. But it completely loses the details and performs worse than the 'whole' method.
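For concreteness, here is a minimal sketch of the two test_cfg variants being compared. The stride of 170 is only one illustrative value from the 150-200 range, and `windows_per_side` is a hypothetical helper, not part of the library; its formula is an assumption about how the sliding grid is laid out (the last window is shifted back so that it ends at the image border).

```python
# The two inference settings being compared (stride value is illustrative).
test_cfg_slide = dict(mode='slide', crop_size=(256, 256), stride=(170, 170))
test_cfg_whole = dict(mode='whole')


def windows_per_side(img_size, crop, stride):
    """Hypothetical helper: number of windows along one image side.

    Assumes the last window is shifted back to end exactly at the border.
    """
    return max(img_size - crop + stride - 1, 0) // stride + 1


n = windows_per_side(
    6000, test_cfg_slide['crop_size'][0], test_cfg_slide['stride'][0]
)
print(n, n * n)  # 35 windows per side, 1225 windows for a 6000 x 6000 image
```

Under these assumptions each 6000 px test image is covered by about 1225 overlapping 256 px windows, each seen at the same scale as the training crops.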

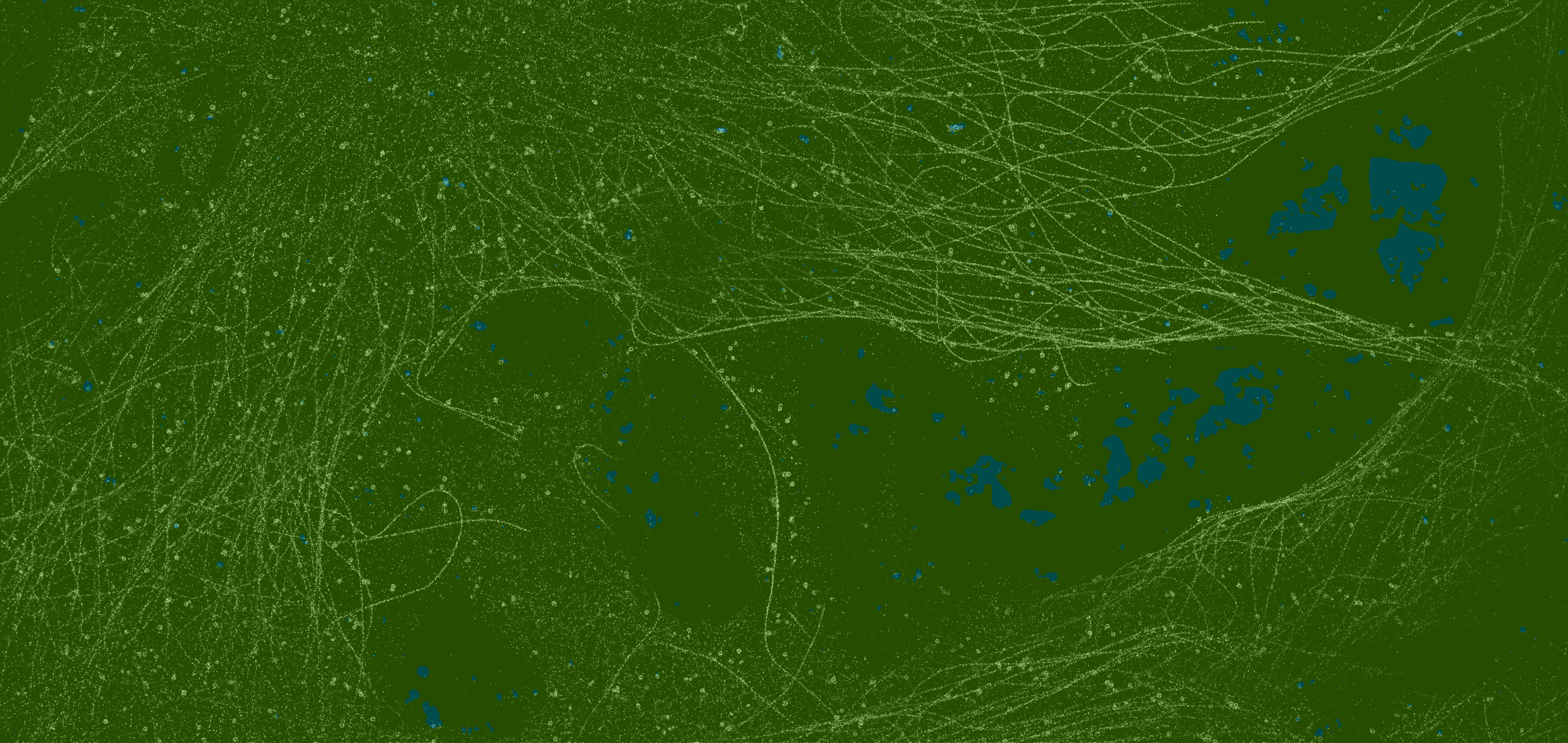

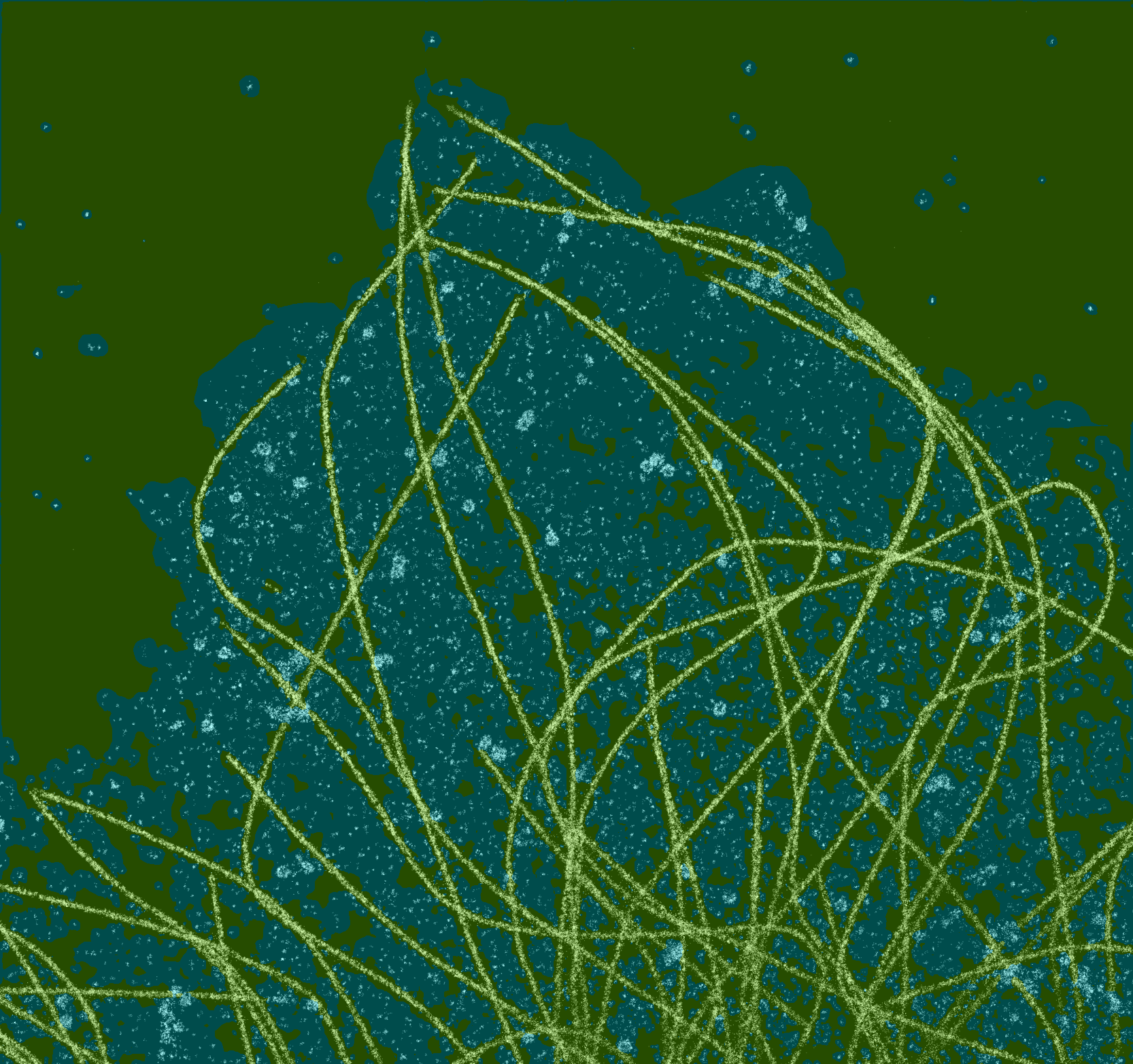

If I crop the test image to around 1000-1500 px and use the 'whole' method, the segmentation is near perfect, with almost 100% accuracy (so the model is indeed trained correctly). On the other hand, with the same crop, the 'slide' method still performs worse than the 'whole' method.
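To make the comparison on the same crop reproducible outside the framework, here is a minimal NumPy sketch of sliding-window inference that averages scores where windows overlap. It is an assumption about what 'slide' mode does conceptually, not the library's implementation; `slide_inference` and `predict` are hypothetical names, and `predict` stands in for the trained model.

```python
import numpy as np


def slide_inference(image, predict, crop, stride):
    """Average per-pixel class scores over overlapping crop x crop windows.

    image:   (H, W, C) array.
    predict: callable mapping an (h, w, C) patch to (K, h, w) scores.
    Assumes stride <= crop so that every pixel is covered at least once.
    """
    assert stride <= crop, "stride larger than crop would leave gaps"
    h, w = image.shape[:2]
    h_grids = max(h - crop + stride - 1, 0) // stride + 1
    w_grids = max(w - crop + stride - 1, 0) // stride + 1
    scores = None
    counts = np.zeros((h, w), dtype=np.float64)
    for i in range(h_grids):
        for j in range(w_grids):
            # Shift the last window back so it ends at the image border.
            y2 = min(i * stride + crop, h)
            x2 = min(j * stride + crop, w)
            y1 = max(y2 - crop, 0)
            x1 = max(x2 - crop, 0)
            out = predict(image[y1:y2, x1:x2])
            if scores is None:
                scores = np.zeros((out.shape[0], h, w), dtype=np.float64)
            scores[:, y1:y2, x1:x2] += out
            counts[y1:y2, x1:x2] += 1
    return scores / counts


# Sanity check with a purely per-pixel "model": slide must equal whole.
rng = np.random.default_rng(0)
img = rng.random((50, 60, 3))
per_pixel = lambda patch: np.stack([patch.sum(-1), -patch.sum(-1)])
slide_scores = slide_inference(img, per_pixel, crop=16, stride=10)
whole_scores = per_pixel(img)
print(np.allclose(slide_scores, whole_scores))
```

With a model whose output at each pixel depends only on that pixel, the two modes agree exactly, so any gap between 'slide' and 'whole' has to come from context: what the network sees around each pixel differs between a 256 px window and the full crop.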

Do you have any idea what is wrong? Have you experienced this when training on the iSAID dataset or other satellite datasets? The main question is: why doesn't the 'slide' method perform better on big images?

I've added examples of what I described above:

6000px_slide

6000px_whole

1000px_whole

1000px_slide. Note how inference with this method failed to mark the 'blobs' in blue in the bottom-left corner, while the 'whole' method marked them correctly.