
ODSC-39737: Allow using GenericModel.predict() locally

#127

…science into ODSC-39737/allow_to_use_predict_locally

As a follow-up, I have a proposal:

If the user calls `predict` and the URL is `None`, the error we raise could say: "Error invoking the remote endpoint as the model is not deployed yet or the endpoint information is not available. If you intend to invoke inference using the locally available model artifact, set parameter local=True."
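A minimal sketch of the proposed guard, assuming `predict` checks the endpoint URL before sending any request. The helper name `ensure_endpoint` and the use of `ValueError` are hypothetical choices for illustration; ADS may structure this check and its exception type differently.

```python
from typing import Optional

# Hypothetical message text, based on the proposal above; the wording ADS
# actually ships may differ.
REMOTE_ENDPOINT_ERROR = (
    "Error invoking the remote endpoint as the model is not deployed yet or "
    "the endpoint information is not available. If you intend to invoke "
    "inference using the locally available model artifact, set parameter "
    "local=True."
)


def ensure_endpoint(url: Optional[str], local: bool) -> None:
    """Hypothetical helper: fail early when a remote call has no endpoint URL."""
    # Local inference never needs the endpoint, so only remote calls are checked.
    if not local and url is None:
        # Assumption: ValueError is used here; ADS may raise a different type.
        raise ValueError(REMOTE_ENDPOINT_ERROR)


try:
    ensure_endpoint(url=None, local=False)
except ValueError as err:
    print(err)
```

Pointing the user at `local=True` in the message turns a dead-end failure into a hint about the alternative code path.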

d61245a

That's a good idea! I've improved the error message as suggested. Thanks, Mayoor.

…hub.com/oracle/accelerated-data-science into ODSC-39737/allow_to_use_predict_locally

Description

Related JIRA: https://jira.oci.oraclecorp.com/browse/ODSC-39737

Currently, `GenericModel` supports two methods that can be used to test the model:

- `verify()`
- `predict()`

The difference between these two methods is that `verify` runs inference locally, whereas `predict` sends the request to the remote ML endpoint. Having two separate methods confuses users: it is not clear to them why two different methods are needed to run inference on the model.

Changed

- Added a `local=True/False` parameter to the `predict` method, so that the prediction can be invoked locally.

User Experience
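To illustrate the intended user experience, here is a minimal sketch of the local/remote dispatch. `SketchModel` is a hypothetical stand-in for `GenericModel`: the estimator is any callable, the remote HTTP call is left as a stub, and both the `{"prediction": ...}` response shape and the `local=False` default are assumptions, not the ADS implementation.

```python
from typing import Any, Callable, Optional


class SketchModel:
    """Hypothetical stand-in for GenericModel, showing only the local/remote dispatch."""

    def __init__(self, estimator: Callable[[Any], Any], endpoint_url: Optional[str] = None):
        self.estimator = estimator        # locally available model (any callable)
        self.endpoint_url = endpoint_url  # assumed to be set once the model is deployed

    def verify(self, data: Any) -> dict:
        # Local inference path (the role verify() plays before this change).
        return {"prediction": self.estimator(data)}

    def _send_request(self, data: Any) -> dict:
        # Stub for the HTTP call to the deployed endpoint; not implemented here.
        raise NotImplementedError(f"would send the request to {self.endpoint_url}")

    def predict(self, data: Any, local: bool = False) -> dict:
        if local:
            # local=True: reuse the local inference path instead of the endpoint.
            return self.verify(data)
        if self.endpoint_url is None:
            raise ValueError("Model is not deployed yet; set local=True to run inference locally.")
        return self._send_request(data)


model = SketchModel(estimator=lambda xs: [2 * x for x in xs])
print(model.predict([1, 2, 3], local=True))  # {'prediction': [2, 4, 6]}
```

Routing `local=True` through the existing local inference path is one plausible design: users get a single entry point, and the code that `verify` already exercises is reused.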

Test

Integration tests run in TC (TeamCity).

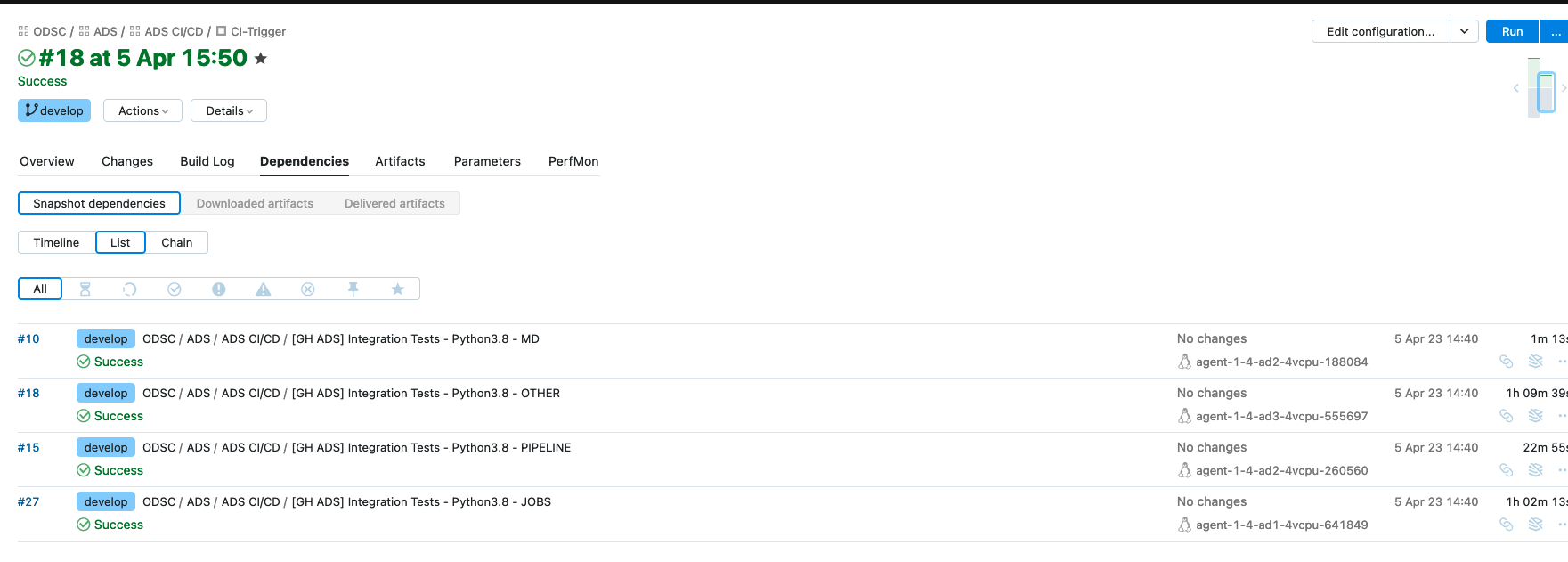

TC: https://teamcity.oci.oraclecorp.com/buildConfiguration/Odsc_Ads_AdsCiCd_CiTrigger/38127161?buildTab=buildParameters