Performance Tuning & Optimization

The goal of this project is to redesign and implement the REST API services of the Question & Answer module for an apparel eCommerce platform so that it can support production-level traffic. The newly implemented service handles up to 3K randomized requests per second with an error rate of only 0.1%, and response time was reduced by 96% (to 67 ms).

This project was developed with Node.js, Express, and MongoDB, deployed on AWS EC2 behind an NGINX load balancer (with caching enabled), and load tested with tools such as Artillery, K6, and loader.io.

The Q&A API service includes the following endpoints:

Retrieves a list of questions for a particular product. This list does not include any reported questions.

GET /qa/questions

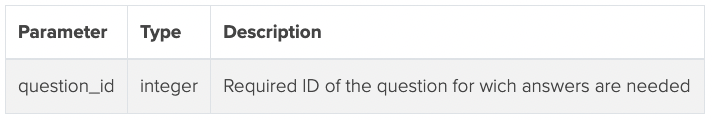
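As a rough illustration of the behavior described above, the sketch below filters out reported questions and applies a result limit. The `product_id` and `count` names mirror the query parameters used elsewhere in this README; the `reported` field, the default of 5, and the sample records are assumptions for illustration only. The actual service reads from MongoDB rather than an in-memory array.

```javascript
// Hypothetical in-memory data; the real service queries MongoDB.
const questions = [
  { question_id: 1, product_id: 7, body: 'Does it run small?', reported: false },
  { question_id: 2, product_id: 7, body: 'Spam', reported: true },
  { question_id: 3, product_id: 8, body: 'Is it machine washable?', reported: false },
  { question_id: 4, product_id: 7, body: 'What fabric is this?', reported: false },
];

// Return up to `count` unreported questions for one product.
function listQuestions(allQuestions, productId, count = 5) {
  return allQuestions
    .filter((q) => q.product_id === productId && !q.reported)
    .slice(0, count);
}

console.log(listQuestions(questions, 7).map((q) => q.question_id)); // [ 1, 4 ]
```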

GET /qa/questions/:question_id/answers

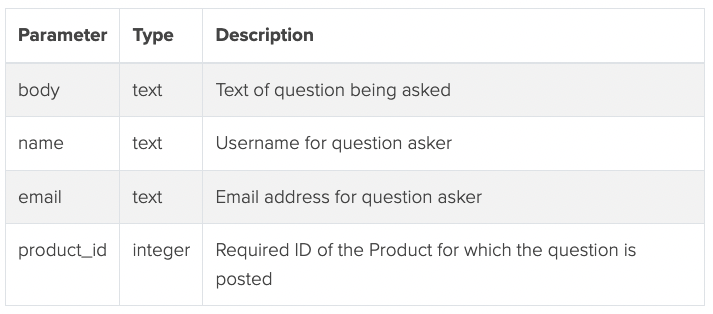

POST /qa/questions

POST /qa/questions/:question_id/answers

PUT /qa/questions/:question_id/helpful

Updates a question to show it was reported. Note, this action does not delete the question, but the question will not be returned in the above GET request.

PUT /qa/questions/:question_id/report

PUT /qa/answers/:answer_id/helpful

Updates an answer to show it has been reported. Note, this action does not delete the answer, but the answer will not be returned in the above GET request.

PUT /qa/answers/:answer_id/report
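The "report" and "helpful" updates can be sketched as small state changes on a stored record. This is a minimal sketch, not the project's implementation: the `helpfulness` and `reported` field names, the function names, and the in-memory `Map` are all hypothetical, and it assumes that the "helpful" endpoints increment a counter, which this README does not spell out.

```javascript
// Hypothetical in-memory store keyed by question_id (stand-in for MongoDB).
const questionsById = new Map([
  [1, { question_id: 1, body: 'Does it run small?', helpfulness: 0, reported: false }],
]);

// "helpful": increment the counter; returns false when the id is unknown.
function markHelpful(store, id) {
  const record = store.get(id);
  if (!record) return false;
  record.helpfulness += 1;
  return true;
}

// "report": flag the record instead of deleting it.
function markReported(store, id) {
  const record = store.get(id);
  if (!record) return false;
  record.reported = true;
  return true;
}

// What a GET would return: everything that has not been reported.
function listVisible(store) {
  return [...store.values()].filter((record) => !record.reported);
}

markHelpful(questionsById, 1);
markReported(questionsById, 1);
// The record is still stored, but it is no longer visible.
console.log(questionsById.size, listVisible(questionsById).length); // 1 0
```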

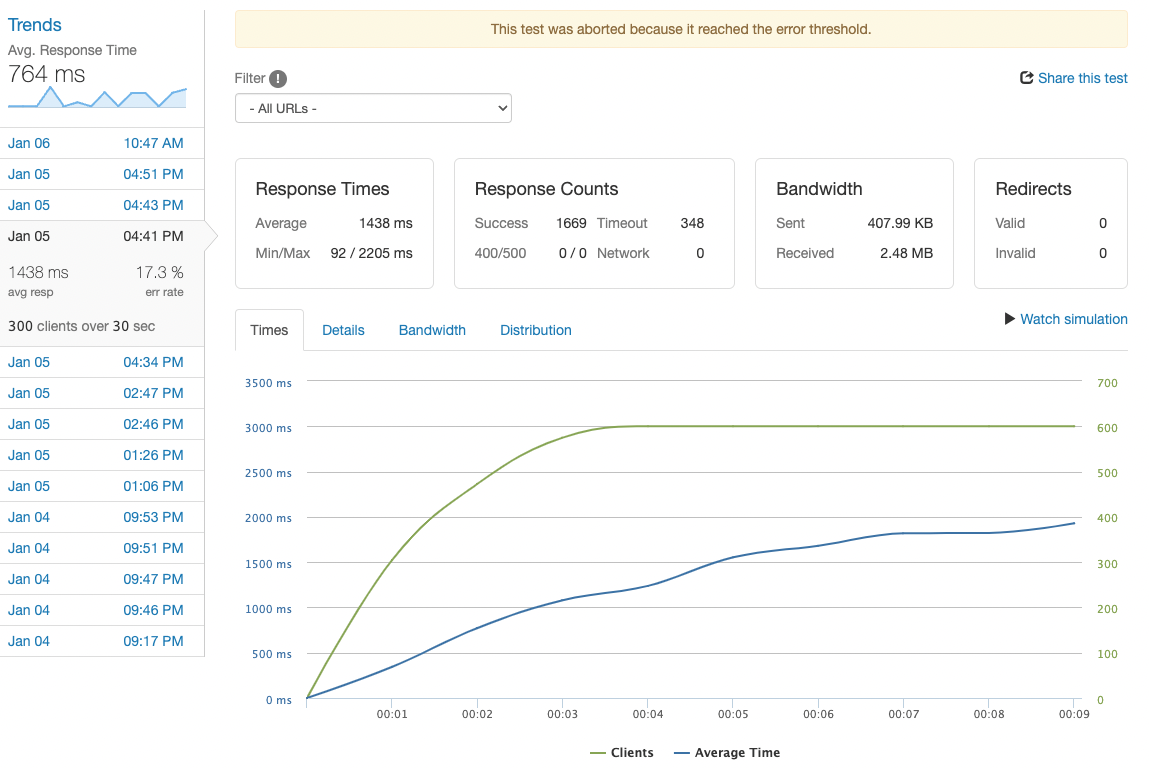

The goal of load testing is to determine the new API server's capacity, scalability, and stability under different levels of load, and to record the system's response time, throughput, and resource utilization in order to identify performance bottlenecks or other issues that may affect the system's reliability or user experience.

Load testing consists of two phases: local testing and cloud-based testing. Local testing establishes a baseline for the server's capacity in a local setting, while cloud-based testing measures the deployed server's capacity and pushes it to its maximum to guide future optimization.

During local testing, the load is increased incrementally with Autocannon and K6 until the system reaches its maximum capacity. The maximum load the server can handle locally is around 1K.

autocannon -c 1000 "http://localhost:3000/qa/questions?product_id=7&count=5"

After deployment on AWS EC2, I used loader.io to test the server incrementally and found that its maximum load capacity was around 300 RPS, which serves as the baseline for future improvement.
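The incremental approach described above can be sketched as a loop that raises the load step by step and stops once the error rate crosses a threshold. Everything here is illustrative: `findMaxLoad`, the `runStep` hook, the 1% threshold, and the simulated server are assumptions, and in practice `runStep` would invoke a real tool such as Autocannon, K6, or loader.io.

```javascript
// Walk through increasing load levels and return the highest one whose
// error rate stays within the threshold. `runStep` is a hypothetical hook
// that would drive a real load-testing tool at the given request rate.
async function findMaxLoad(levels, runStep, maxErrorRate = 0.01) {
  let best = null;
  for (const rps of levels) {
    const { requests, errors } = await runStep(rps);
    const errorRate = requests === 0 ? 1 : errors / requests;
    if (errorRate > maxErrorRate) break; // capacity exceeded, stop ramping
    best = rps;
  }
  return best;
}

// Simulated server that starts failing above 300 RPS (illustrative only).
const fakeRun = async (rps) => ({
  requests: rps,
  errors: rps > 300 ? Math.round(rps * 0.2) : 0,
});

findMaxLoad([100, 200, 300, 400, 500], fakeRun).then((max) => {
  console.log(`max sustainable load: ${max} RPS`); // max sustainable load: 300 RPS
});
```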

Performance tuning is the most important part of this project. It raised the server's capacity from 300 RPS to 3,000 RPS through measures such as adding EC2 servers, putting an NGINX load balancer in front of them, enabling caching on the reverse proxy, and increasing the number of NGINX worker connections.

- Add additional servers

- Set up NGINX

- Set up caching

- Increase the number of worker connections
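To make the first three steps more concrete, here is a conceptual sketch in JavaScript of what a load balancer and a reverse-proxy cache do. It is not NGINX configuration and not how this project was set up (that work was done in NGINX itself); the backend addresses, the TTL, and all function names are hypothetical.

```javascript
// Round-robin selection over a list of backends (hypothetical addresses).
function createRoundRobin(backends) {
  let next = 0;
  return () => {
    const backend = backends[next];
    next = (next + 1) % backends.length;
    return backend;
  };
}

// Cache responses by URL for `ttlMs` milliseconds. `fetchFromBackend` is a
// stand-in for forwarding the request upstream; `now` is injectable so the
// expiry logic can be tested without waiting.
function createCachingProxy(fetchFromBackend, ttlMs, now = Date.now) {
  const cache = new Map();
  return (url) => {
    const hit = cache.get(url);
    if (hit && now() - hit.storedAt < ttlMs) {
      return { body: hit.body, cache: 'HIT' };
    }
    const body = fetchFromBackend(url);
    cache.set(url, { body, storedAt: now() });
    return { body, cache: 'MISS' };
  };
}

const pick = createRoundRobin(['10.0.0.1:3000', '10.0.0.2:3000']);
const proxy = createCachingProxy((url) => `${pick()} served ${url}`, 1000);
console.log(proxy('/qa/questions?product_id=7').cache); // MISS
console.log(proxy('/qa/questions?product_id=7').cache); // HIT
```

A cache hit never reaches a backend, which is why repeated GET requests for the same URL become much cheaper once caching is enabled.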

To build and install all the dependencies

npm install

To start the backend

npm run server-dev

To test

npm run test

To check test coverage

npm run test-coverage

Development

- Express

- Node.js

- MongoDB

- Docker

Load & Pressure Testing

- Autocannon

- K6

- Loader.io

Deployment

- AWS EC2

Performance Tuning & Optimization

- NGINX

- Caching

- NewRelic

Trilingual software engineer based in Mountain View, CA.

Eight years of international work experience in high tech and luxury retail. Proficient in full-stack development, with creativity and cross-cultural communication skills.

Start-up experience with multitasking abilities, working with clients across the gaming, media, eCommerce, and telecom industries.