
Extend sync_all_reduce API

#1823

I have already tested these updates on #1813, and they work fine.

Thank you @KickItLikeShika, it looks good. However, unit tests for this improvement are needed.

I will work on the tests once we agree on the implementation.

That's already done in

Thank you!

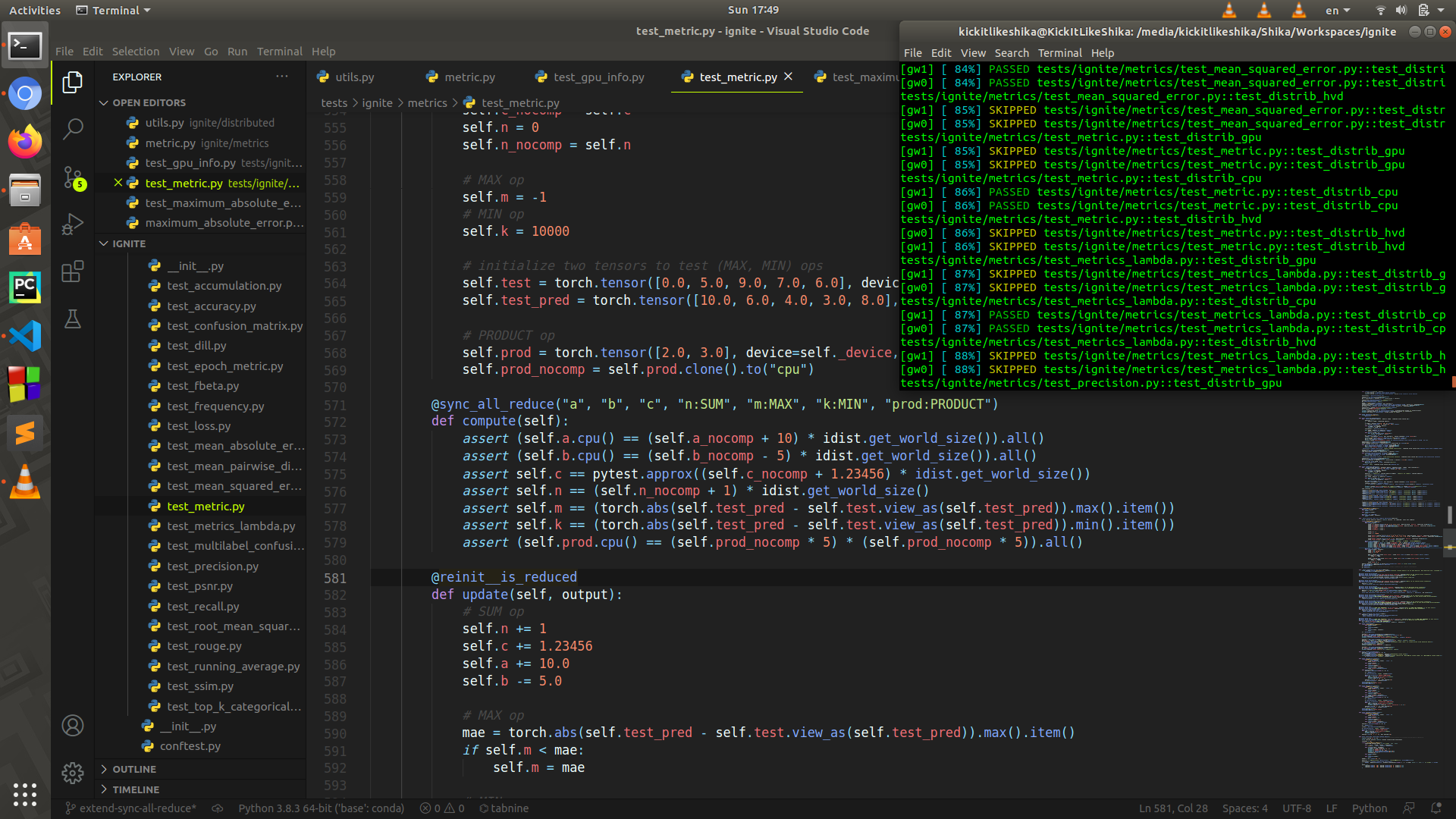

The tests to update are here: ignite/tests/ignite/metrics/test_metric.py, line 541 (at commit eca41b9).

We must not forget to update the documentation too.
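For the requested unit tests, the core property to check is that each new reduction operation (SUM, PRODUCT, MAX, MIN) combines the per-process values as expected. The following is a minimal, framework-free sketch under that assumption: `simulated_all_reduce` and `_REDUCE_OPS` are hypothetical names that only mimic an all-reduce by folding a list of per-rank values; they are not part of ignite's API, and real tests would run under an actual distributed backend.

```python
import operator
from functools import reduce

# Hypothetical op table (not ignite's API): maps an op name to a binary
# function used to combine two ranks' values.
_REDUCE_OPS = {
    "SUM": operator.add,
    "PRODUCT": operator.mul,
    "MAX": max,
    "MIN": min,
}


def simulated_all_reduce(local_values, op="SUM"):
    """Return the value every rank would hold after an all-reduce with `op`.

    Each element of `local_values` stands in for one process's local value.
    """
    if op not in _REDUCE_OPS:
        raise ValueError(f"Unsupported reduction op: {op!r}")
    return reduce(_REDUCE_OPS[op], local_values)


# Values that four ranks might hold for the same metric attribute.
per_rank = [3, 1, 4, 2]
for op in ("SUM", "PRODUCT", "MAX", "MIN"):
    print(op, simulated_all_reduce(per_rank, op))
```

With these inputs the loop prints SUM 10, PRODUCT 24, MAX 4 and MIN 1; a test would assert the same values, plus an error for an unsupported op name.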

Thanks for the PR, @KickItLikeShika! A question about the implementation.

LGTM!

@sdesrozis, your thoughts? Your turn to review :)

@KickItLikeShika Thank you! LGTM

@vfdev-5 @sdesrozis, please check the CI failures; we get an assertion error here.

@KickItLikeShika, do you mean the XLA tests? Have you tested it locally with XLA on CPU?

@vfdev-5, the CPU tests fail as well: https://github.com/pytorch/ignite/pull/1823/checks?check_run_id=2160328500#step:10:963

@vfdev-5, I found the error, in the

e3a607a to 242ecea

@vfdev-5, can you please check this? https://github.com/pytorch/ignite/pull/1823/checks?check_run_id=2160568207#step:8:1226

@sdesrozis, can you please check this? https://github.com/pytorch/ignite/pull/1823/checks?check_run_id=2160568207#step:8:1226

a9516dc to 4fb6a68

Fixes #1813

Extending the `sync_all_reduce` API: added an `op` argument to allow more reduction operations (`SUM`, `PRODUCT`, `MAX`, `MIN`).

Checklist: