The overall objective of this work is to develop a model that predicts sign-language signs from user input.

Communication with people who have hearing disabilities is crucial; a computer's ability to recognize and interpret sign-language signs will make life easier. This is an image-to-text machine learning model built with state-of-the-art models.

- Clone this repo

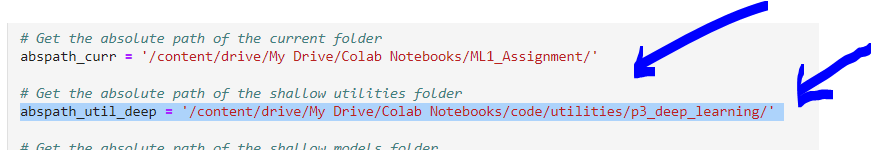

- You need to use Google Colab, set up your Google Colab with the folder names like image below

- Put the p3_deep_learning.ipynb file from this repository into the p3_deep_learning folder in your Google Colab: https://github.com/yuxiaohuang/teaching/blob/master/gwu/machine_learning_I/spring_2022/code/utilities/p3_deep_learning/pmlm_utilities_deep.ipynb

- Get your Kaggle API key and have it at hand.

- Run ML1_Final_Project.ipynb

- Ignore the Predictions tab :)

- Convolutional neural network (CNN) model.
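For the Kaggle API key step above, here is a minimal sketch of one way to make the key available inside a Colab session. This README does not say how the notebook reads the key, so this assumes the standard Kaggle client convention of a `kaggle.json` file in `~/.kaggle`. The helper name `write_kaggle_credentials` and its parameters are hypothetical and not part of this repository.

```python
import json
import os
import stat
from pathlib import Path


def write_kaggle_credentials(username, key, config_dir=None):
    """Write a kaggle.json credentials file and return its path.

    Hypothetical helper for illustration; assumes the Kaggle client
    looks for credentials in ~/.kaggle/kaggle.json.
    """
    # Default to ~/.kaggle unless another directory is given.
    config_dir = Path(config_dir) if config_dir else Path.home() / ".kaggle"
    config_dir.mkdir(parents=True, exist_ok=True)

    cred_path = config_dir / "kaggle.json"
    cred_path.write_text(json.dumps({"username": username, "key": key}))

    # Restrict the file to owner read/write so the key is not world-readable.
    os.chmod(cred_path, stat.S_IRUSR | stat.S_IWUSR)
    return cred_path


# Example call (placeholders, replace with your own values):
# write_kaggle_credentials("your_username", "your_api_key")
```

If the notebook prompts for the key directly, this step may be unnecessary; in that case just paste the key when asked.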

ML_Final_Project.ipynb: this file contains the data preprocessing, the modeling, and the user-input step for testing our CNN model.

ML1_ProjectPresentation_Group2.pptx: a PowerPoint presentation of our insights, recommendations, and model results.