Fix wrong consumer bandwidth estimation under producer packet loss #962

There is an ongoing BWE refactor in a separate branch. Please comment or target that PR instead.

Is that going to be merged soon, and is it stable enough? This looks like a huge issue, to be honest, so maybe you want to have this fix independently of the other one. We will certainly use a fork with this patch for now; we are only providing it so that other people can also benefit from a quick fix that dramatically improves video quality in common user networks. Thank you!

And looking at the other PR, it seems completely orthogonal to this change. That other PR doesn't change this setting or the TransportCongestionControlClient at all: https://github.com/versatica/mediasoup/pull/922/files#diff-503258e0a413224c755f234372fcaac2bdced8b81c5547936f4783758855da94L42

That's exactly why I asked you to comment about this in that PR, so that it is taken into consideration.

@sarumjanuch this PR is being merged in current v3. Please take it into account in your branch.

Released in 3.11.5. Thanks.

Ignore please, I see it's already done in your branch: https://github.com/versatica/mediasoup/pull/922/files#diff-7970ea9cf2c8d0acf7c47c4f86ea6cb8cb753f91bc2b661c467f3fed32a418c7

…ersatica#962) Authored-by: Gustavo Garcia <gustavo.garcia@Gustavos-MBP.localdomain>

When there is packet loss in the client -> SFU path (the producer) and that SAME packet loss is also reported in the SFU -> client path (the consumer), the bandwidth estimation shouldn't be affected, because that packet loss is not related to congestion in the downlink path.

With the current TCCClient configuration used in mediasoup, the packet loss reported in the RRs is used to detect congestion, which creates big bandwidth estimation drops even when the network of the rest of the users is perfectly fine.

This is configurable in webrtc gcc implementation with the

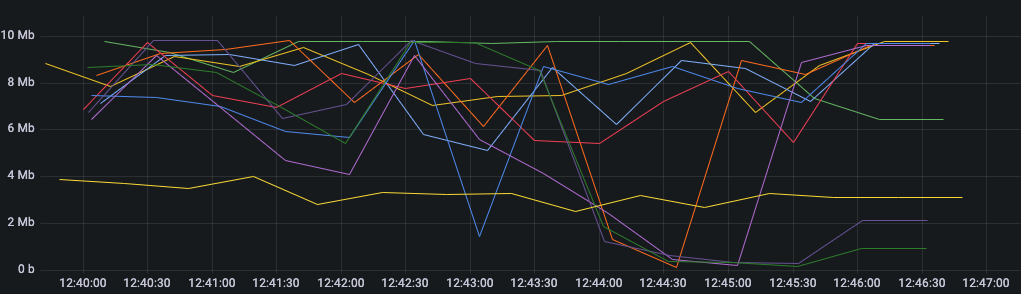

feedback_onlyflag. I think it makes sense to have "feedback_only = false" in a browser but I don't think it is reasonable for a SFU. For a SFU it would be better to use the packet loss detected only with the FeedbackControl RTCP messages and use RR messages just to estimate the RTT.I'm attaching the graphs of a real users session where you can see how the bandwidth estimation for almost EVERYBODY went down quickly just because ONE sender was having some packet loss in its uplink. Hope it helps to understand the issue.
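To make the effect of the flag concrete, here is a toy sketch in Python. It is not mediasoup's or libwebrtc's code: the class `ToyLossBasedEstimator` and its method names are hypothetical, and the 10% threshold and the `1 - 0.5 * loss` decrease are simplified assumptions loosely modeled on the loss-based rule described in the GCC draft. It only shows which loss reports are allowed to lower the estimate in each mode.

```python
from dataclasses import dataclass


@dataclass
class ToyLossBasedEstimator:
    """Toy model of a loss-driven estimate (hypothetical, not libwebrtc's API)."""

    feedback_only: bool
    estimate_bps: float
    rtt_ms: float = 0.0

    def on_receiver_report(self, fraction_lost: float, rtt_ms: float) -> None:
        # The RR is always used for RTT.
        self.rtt_ms = rtt_ms
        # Its loss figure only counts when feedback_only is disabled.
        if not self.feedback_only:
            self._apply_loss(fraction_lost)

    def on_transport_feedback(self, sent: int, lost: int) -> None:
        # Loss measured on the SFU -> client hop itself.
        if sent > 0:
            self._apply_loss(lost / sent)

    def _apply_loss(self, fraction_lost: float) -> None:
        # Assumed rule: back off only above 10% loss, otherwise hold.
        if fraction_lost > 0.10:
            self.estimate_bps *= 1.0 - 0.5 * fraction_lost


# One producer loses 20% of its packets on the uplink. The consumer's RR
# reports that same 20% (the gaps are forwarded), while the feedback for
# the downlink hop reports no loss at all.
legacy = ToyLossBasedEstimator(feedback_only=False, estimate_bps=2_000_000)
fixed = ToyLossBasedEstimator(feedback_only=True, estimate_bps=2_000_000)
for estimator in (legacy, fixed):
    estimator.on_receiver_report(fraction_lost=0.20, rtt_ms=40.0)
    estimator.on_transport_feedback(sent=100, lost=0)

print("feedback_only = false:", legacy.estimate_bps)
print("feedback_only = true: ", fixed.estimate_bps)
```

In this sketch the estimator with feedback_only disabled backs off on uplink loss it cannot do anything about, while the one with feedback_only enabled keeps its estimate and still gets the RTT from the RR.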