Make scene detection faster and more accurate #3

Change mean absolute error to MSE, downscale frame images, use CIE LAB

e9d222e to a04d0a9

Looks great! I will use the

Evaluation with the withheld @xbankov, if you remove the line that says

The recording was captured in 2019, but the last annotations for screen positions are from 2016. I would expect that the cameras have moved since then and the annotations are therefore imperfect.

Switching the pages may cause overexposure and it takes a few seconds for the camera to readjust. The training dataset does not contain these moments. Since the scene detector only uses the screen detector during these moments, it may be that the screen detector is unable to detect the screens correctly.

If fastai with the annotated page detector achieved less than 100% accuracy, it could be because fastai does not detect any screens or detects too many screens. However, I am baffled as to why fastai with the annotated page detector scored lower than fastai with vgg16. If you could delete the file

diff --git a/docs/notebooks/__main__/annotated.py b/docs/notebooks/__main__/annotated.py
index 0dd2652..5c21d08 100644
--- a/docs/notebooks/__main__/annotated.py
+++ b/docs/notebooks/__main__/annotated.py
@@ -141,10 +141,14 @@ def evaluate_event_detector(event_detector):
     if page_number is None:
         if not detected_page_dict:
             num_successes += 1
+        else:
+            print(f'Frame {frame_number}: Expected no pages, but detected {detected_page_dict}')
     else:
         detected_page_numbers = set(page.number for page in detected_page_dict.values())
         if len(detected_page_dict) <= 2 and detected_page_numbers == set([page_number]):
             num_successes += 1
+        else:
+            print(f'Frame {frame_number}: Expected at most 2 screens with page {page_number}, but detected {len(detected_page_dict)} screens with pages {detected_page_numbers}')
     if isinstance(event, (ScreenAppearedEvent, ScreenChangedContentEvent)):
         detected_page_dict[event.screen_id] = event.page

The notebook is written so that only the table cell of fastai with the annotated page detector will be rerun.

The print statement looks like the following:

@xbankov It is unexpected that the frame numbers should repeat. Is this the raw output you are getting? If so, this indicates errors in the evaluation code. I will need to troubleshoot this further; thank you for investigating. What we can say for sure is that the screen detector seems to be detecting three screens instead of two in the first two cases. At the moment, this is not too troubling, and we can relax the success condition to require only that all detected screens show the expected page, regardless of how many screens are detected.
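The relaxed success condition described above could be sketched roughly as follows. This is only an illustration, not the notebook's code: the function name `is_success`, the `max_screens` parameter, and the plain `screen_id -> page number` mapping are hypothetical stand-ins for the `detected_page_dict` bookkeeping in `evaluate_event_detector`.

```python
def is_success(expected_page, detected_pages, max_screens=None):
    """Decide whether one frame counts as a success.

    expected_page  -- annotated page number, or None if no page is expected
    detected_pages -- hypothetical mapping of screen id to detected page number
    max_screens    -- optional cap on the number of screens; None relaxes the
                      condition so that the number of screens does not matter
    """
    if expected_page is None:
        # No page is expected, so any detected screen is a failure.
        return not detected_pages
    if max_screens is not None and len(detected_pages) > max_screens:
        # Strict variant: too many detected screens is a failure.
        return False
    # All detected screens must show exactly the expected page.
    # An empty mapping fails, because set() != {expected_page}.
    return set(detected_pages.values()) == {expected_page}


# Three screens all showing page 5: fails under the strict rule, passes when relaxed.
three_screens = {"a": 5, "b": 5, "c": 5}
print(is_success(5, three_screens, max_screens=2))
print(is_success(5, three_screens))
```

Passing `max_screens=2` corresponds to the original check with `len(detected_page_dict) <= 2`; omitting it gives the relaxed variant.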

I received a very different output and an accuracy of 75% (instead of 25%) after removing file It seems that the only issue is an extra detected screen: Do you have any idea why such output would be produced, @xbankov?

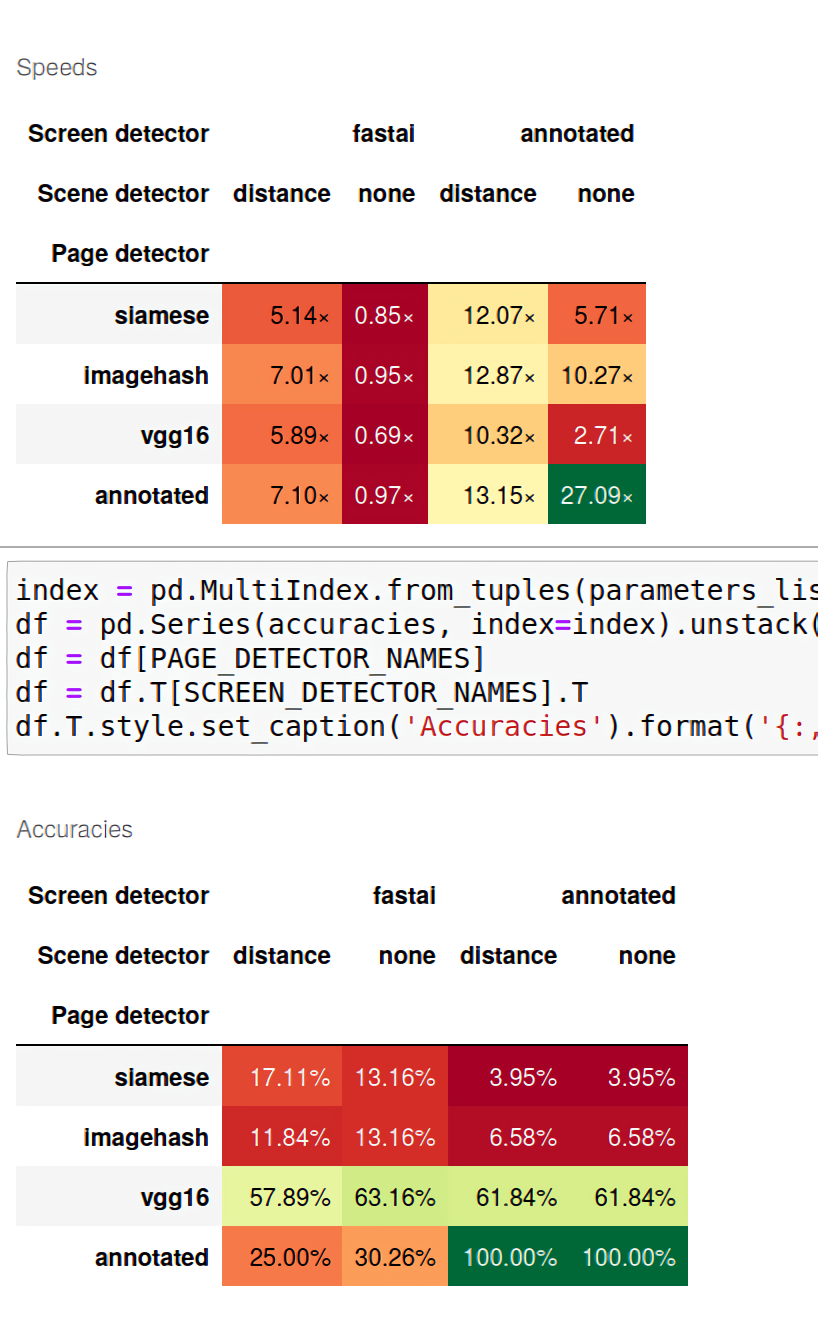

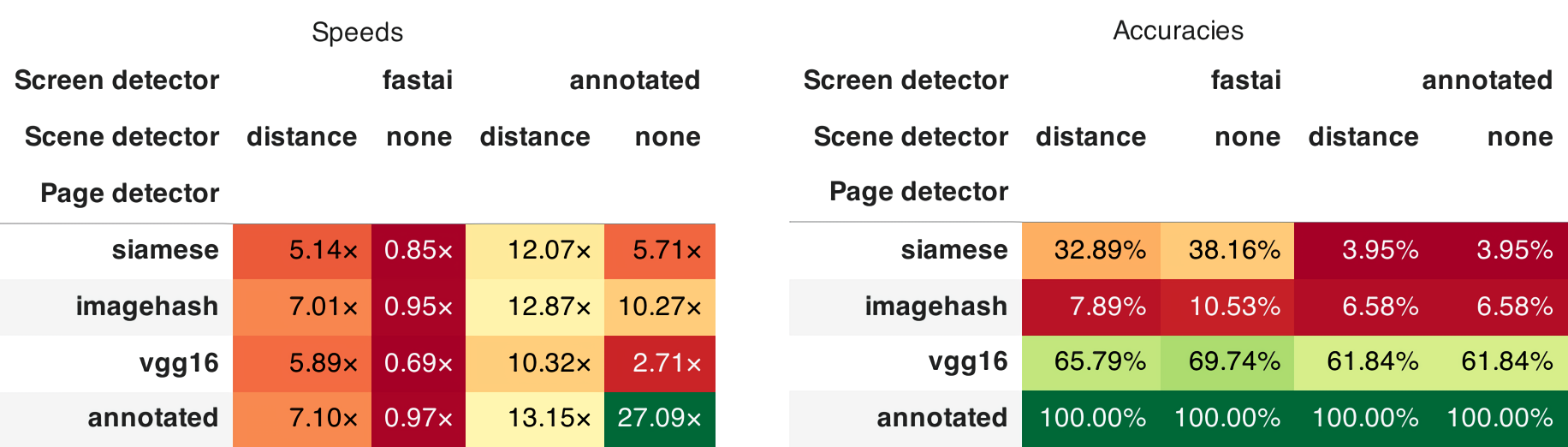

@xbankov Below are the updated accuracies: It seems that using the scene detector leads to some loss of accuracy after all, although it is not clear whether it is the page detector or the screen detector that is responsible for this. We should be able to tell after I have fixed the faulty screen annotations and recomputed the accuracies.

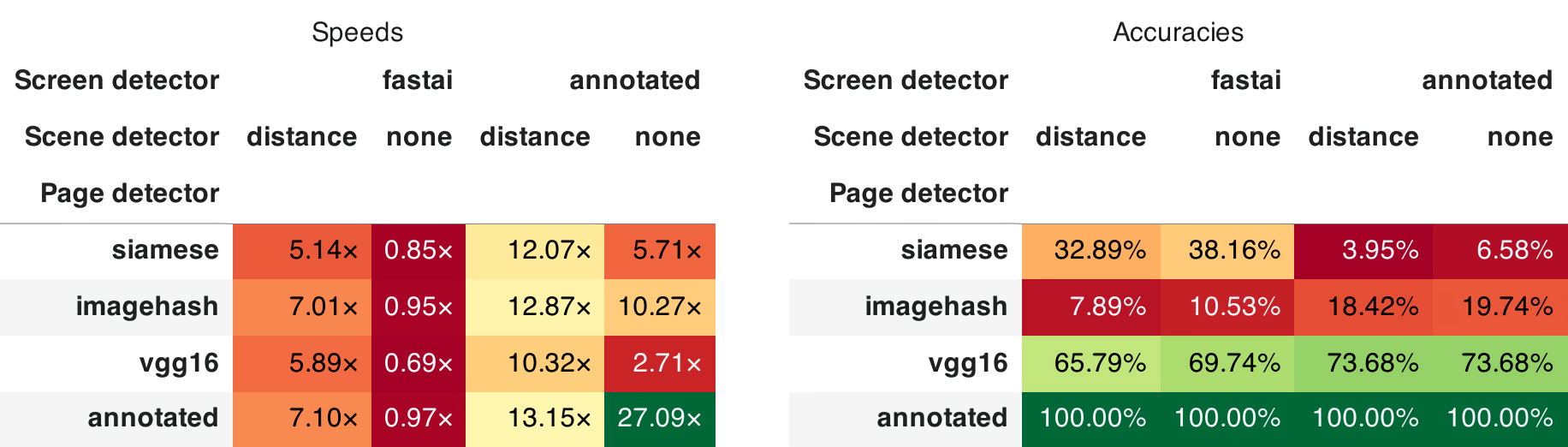

Here are the accuracies after I have fixed the screen annotations: With The

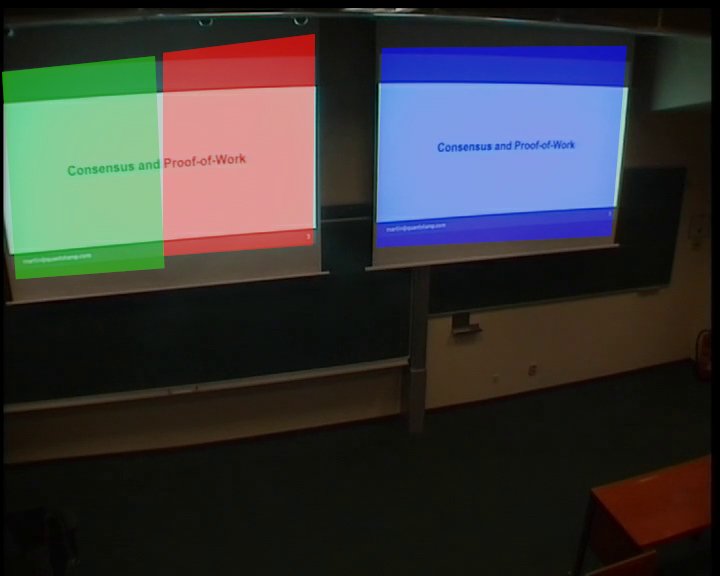

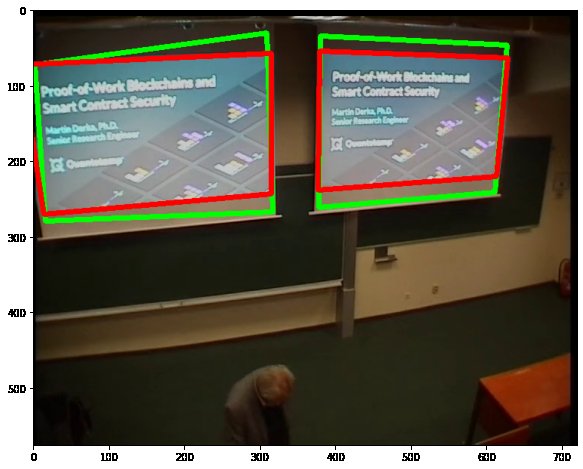

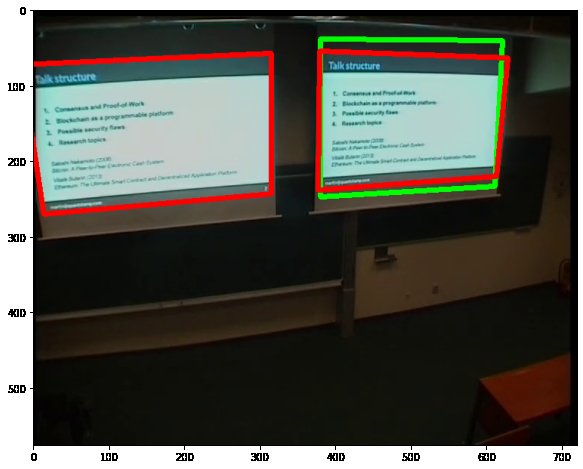

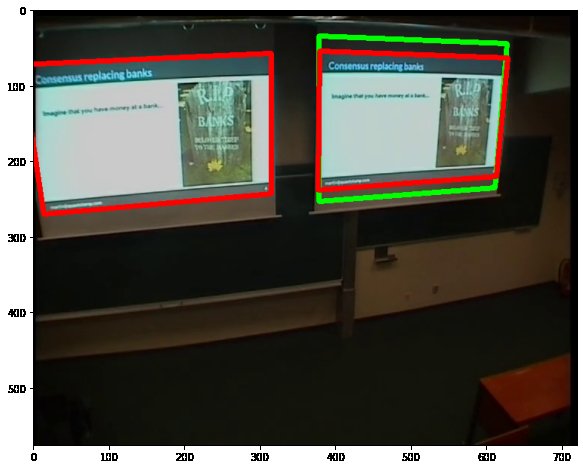

Below is the output of the Below is the output of the As you can see in the output of the Below, you can see the detected screens from frames 346, 1077, and 1623, where As you can see, the screens detected by

This pull request aims to make video processing faster by increasing the accuracy and the speed of scene detection:

As a result of these changes, we were able to increase the threshold from 0.12 MAE to 0.22 MSE while retaining 100% accuracy on the training set. Replacing MAE with MSE alone decreases the threshold from 0.12 MAE to 0.1 MSE, so the increased threshold is due to the denoising and the perceptual color distance, which increase separability. For further speed improvement, the threshold could be increased to 0.25 MSE for ≥ 95% accuracy on the training set.
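To make the combination of downscaling, CIE LAB, and MSE concrete, here is a minimal pure-Python sketch of a frame distance. It is not the code from this branch: the function names, the block-averaging downscale, the factor of 2, and the division by 100² to normalize the distance are all assumptions made for illustration, so the 0.22 threshold may not be on the same scale as in the actual implementation. Frames are assumed to be lists of rows of 8-bit sRGB tuples.

```python
from statistics import fmean


def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (0-255 per channel) to CIE LAB with a D65 white point."""
    def linearize(c):
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Linear sRGB -> CIE XYZ, divided by the D65 reference white.
    x = (0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl) / 0.95047
    y = (0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl) / 1.00000
    z = (0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl) / 1.08883

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x), f(y), f(z)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def downscale(frame, factor):
    """Average non-overlapping factor x factor blocks, which also suppresses noise."""
    height, width = len(frame), len(frame[0])
    small = []
    for top in range(0, height - factor + 1, factor):
        row = []
        for left in range(0, width - factor + 1, factor):
            block = [frame[yy][xx]
                     for yy in range(top, top + factor)
                     for xx in range(left, left + factor)]
            row.append(tuple(fmean(p[c] for p in block) for c in range(3)))
        small.append(row)
    return small


def frame_distance(frame_a, frame_b, factor=2):
    """MSE between two equally sized frames, computed in CIE LAB after downscaling."""
    lab_a = [srgb_to_lab(*p) for row in downscale(frame_a, factor) for p in row]
    lab_b = [srgb_to_lab(*p) for row in downscale(frame_b, factor) for p in row]
    squared = [(ca - cb) ** 2
               for pa, pb in zip(lab_a, lab_b)
               for ca, cb in zip(pa, pb)]
    # Assumption: divide by 100^2 so that a full lightness swing contributes 1.
    return fmean(squared) / 100.0 ** 2


def is_scene_change(frame_a, frame_b, threshold=0.22):
    """Report a new scene when the distance exceeds the threshold."""
    return frame_distance(frame_a, frame_b) > threshold


white = [[(255, 255, 255)] * 4 for _ in range(4)]
black = [[(0, 0, 0)] * 4 for _ in range(4)]
print(frame_distance(white, white), frame_distance(white, black))
```

A higher threshold means fewer frames are treated as new scenes, so fewer frames need to go through the slower screen and page detectors, which is where the speed-up would come from.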

@xbankov, when you are testing new models, can you please write new code on top of the speed-up-scene-detection branch to see if this helps the conversion speed? If so, I will merge this and close #2.