
Description

Apache Airflow version: 2.0.1

Environment:

- OS (e.g. from /etc/os-release): CentOS Linux 7 (Core)
- Kernel (e.g. `uname -a`): Linux 3.10.0-957.27.2.el7.x86_64
- Install tools: `conda install airflow airflow-with-ldap psycopg2 sqlalchemy=1.3`

What happened:

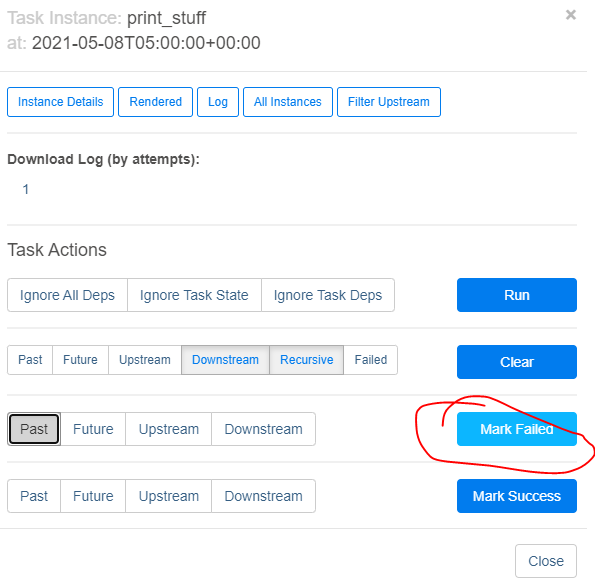

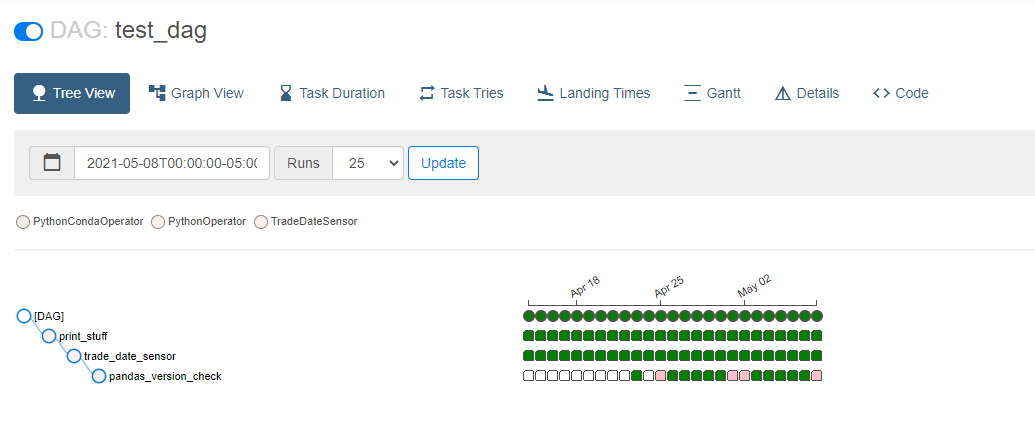

When I want to backfill tasks using only the UI, I usually pick the execution date I want to backfill from, mark that task as failed with the "future" option selected, and then clear it with the "future" option selected (with various dependency options as well). After upgrading our production server to 2.x, the confirmation prompt shown after marking with "past" / "future" selected lists only the selected task instance, even though there are multiple execution dates following it.

Interestingly, clearing tasks with the same options works as expected.

What you expected to happen:

I expected every task instance on or after the selected execution date to be affected. Instead, I have to mark each task instance as failed manually or find another workaround, which is a problem because this UI workflow is how our non-power-users have been backfilling processes.
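To pin down the expectation, here is a minimal plain-Python sketch of which execution dates I would expect a mark action to cover when "past" / "future" are selected. The helpers `daily_run_dates` and `dates_to_mark` are hypothetical: they only model the selection I expect, assume one run per day, and are not Airflow's implementation.

```python
from datetime import date, timedelta
from typing import List


def daily_run_dates(start: date, end: date) -> List[date]:
    """Every daily execution date from start to end, inclusive."""
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


def dates_to_mark(run_dates: List[date], selected: date,
                  past: bool = False, future: bool = False) -> List[date]:
    """Hypothetical helper: the execution dates a mark action should touch."""
    picked = []
    for d in run_dates:
        if d == selected:
            picked.append(d)  # the selected run is always included
        elif future and d > selected:
            picked.append(d)  # every later run when "future" is selected
        elif past and d < selected:
            picked.append(d)  # every earlier run when "past" is selected
    return picked


runs = daily_run_dates(date(2021, 3, 1), date(2021, 3, 7))
print(dates_to_mark(runs, date(2021, 3, 4), future=True))
```

With "future" selected on 2021-03-04, the sketch returns the four dates from 2021-03-04 through 2021-03-07, whereas the 2.x prompt I am seeing lists only the selected one.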

How to reproduce it:

We created a fresh conda environment with Python 3.8, ran `conda install airflow airflow-with-ldap psycopg2 sqlalchemy=1.3`, and then set up the scheduler and webserver. The Python package environment is Airflow-centric, with not much else in it.

We use the default timezone "America/Chicago" and the cron expression "0 0 * * *" as schedule_interval so that our DAGs run every night at midnight local time, rather than at 11 pm, 12 am, or 1 am depending on daylight saving time and start_date. Here are the non-sensitive airflow.cfg changes:

```ini
sql_alchemy_conn = postgresql+psycopg2://localhost/airflow
executor = LocalExecutor
default_timezone = America/Chicago
default_ui_timezone = America/Chicago
```
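For context on the "0 0 * * *" schedule in America/Chicago, a small stdlib sketch showing that local midnight lands on a different UTC hour before and after the 2021-03-14 daylight saving change, which is why we anchor the schedule to local time. It assumes Python 3.9+ for `zoneinfo` (our environment is 3.8, and Airflow itself uses pendulum timezones), so treat it as an illustration rather than Airflow's scheduling logic.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo  # standard library in Python 3.9+

CHICAGO = ZoneInfo("America/Chicago")

# Local midnight before (CST, UTC-6) and after (CDT, UTC-5) the DST switch.
before_dst = datetime(2021, 3, 1, tzinfo=CHICAGO)
after_dst = datetime(2021, 3, 15, tzinfo=CHICAGO)

for local_midnight in (before_dst, after_dst):
    utc = local_midnight.astimezone(timezone.utc)
    print(local_midnight.isoformat(), "->", utc.isoformat())
```

Midnight local time maps to 06:00 UTC on 2021-03-01 and to 05:00 UTC on 2021-03-15, so a fixed UTC schedule would drift by an hour in local time.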

For the DAG/task start_date, I have tried passing each of the following:

- `datetime.datetime`
- `datetime.datetime` with `tzinfo=pendulum.timezone("America/Chicago")`
- `pendulum.datetime` with `tz="America/Chicago"`
- `airflow.utils.timezone.datetime`
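As far as I can tell, `airflow.utils.timezone.datetime` only fills in the configured default timezone when no `tzinfo` is passed, so the explicit-tzinfo variants and the wrapper should describe the same instant. A stdlib approximation of that behavior, using a hypothetical `tz_datetime` helper and `zoneinfo` (Python 3.9+) in place of pendulum:

```python
from datetime import datetime
from zoneinfo import ZoneInfo  # Python 3.9+; Airflow itself uses pendulum timezones

# Stand-in for the configured default_timezone (assumed America/Chicago, as above).
DEFAULT_TZ = ZoneInfo("America/Chicago")


def tz_datetime(*args, **kwargs) -> datetime:
    """Hypothetical sketch of the wrapper: use the default timezone
    unless the caller passes tzinfo explicitly."""
    kwargs.setdefault("tzinfo", DEFAULT_TZ)
    return datetime(*args, **kwargs)


implicit = tz_datetime(2021, 3, 1)
explicit = datetime(2021, 3, 1, tzinfo=ZoneInfo("America/Chicago"))
print(implicit == explicit, implicit.utcoffset())
```

Both values compare equal and carry a UTC-6 offset, which is consistent with every start_date variant behaving the same way in this report.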

All of these variants exhibit the same issue. Here is an example DAG that reproduces it:

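```python
from airflow import DAG
# 1.10-style import path; still importable in 2.0.x (the 2.x location is airflow.operators.python)
from airflow.operators.python_operator import PythonOperator
# Wraps datetime.datetime and attaches the configured default_timezone when no tzinfo is given
from airflow.utils.timezone import datetime

default_args = {
    'owner': 'wahsmail',
    'depends_on_past': False,
    'start_date': datetime(2021, 3, 1),  # midnight 2021-03-01 in America/Chicago here
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 0,
}

# Run daily at local midnight and create runs for every missed interval since start_date.
dag = DAG('test_dag', default_args=default_args, schedule_interval='0 0 * * *', catchup=True)


def print_stuff_func(**context):
    # Dump the task context to the log, breaking lines at commas for readability.
    print('---- airflow macros ----')
    print(str(context).replace(',', ',\n'))


print_stuff = PythonOperator(
    task_id='print_stuff',
    python_callable=print_stuff_func,
    dag=dag,
)
```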

Step-by-step screenshots:

In this example, not even the selected date itself was marked as failed, and I'm not sure what is going on. My apologies if this has been covered before; I searched for about an hour but didn't find any 2.x issue matching mine.