-

Notifications

You must be signed in to change notification settings - Fork 16.4k

Description

** Apache Airflow version **:

AIRFLOW_IMAGE_NAME: -apache / airflow: 2.1.2-python3.7

Environment:

- ** running in docker on ubuntu 18.04**

here is the config

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.1.2/docker-compose.yaml'

What happened:

I run on one server a docker compose without workers, and on another server a worker using docker.

When the task has been counted, I cannot get the logs from another server.

** What did you expect **:

something in the docker worker run settings on the second server.

** How to reproduce this **:

<! ---

run in server1:

mkdir tmp-airflow

cd tmp-airflow

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.1.2/docker-compose.yaml'

mkdir ./dags ./logs ./plugins

echo -e "AIRFLOW_UID=$(id -u)\nAIRFLOW_GID=0" > .env

chamge imade name to apache/airflow:2.1.2-python3.7

add to docker-compose.yaml file open ports for postgres:

postgres:

image: postgres: 13

ports:

- 5432:5432

and change output ports for redis to 6380:

redis:

image: redis:latest

ports:

- 6380:6379

add to webserver ports 8793 for logs (not sure what is needed)

airflow-webserver:

<<: *airflow-common

command: webserver

ports:

- 8080:8080

- 8793:8793

Comment out or delete the worker description. like:

# airflow-worker:

# <<: *airflow-common

# command: celery worker

# healthcheck:

# test:

# - "CMD-SHELL"

# - 'celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'

# interval: 10s

# timeout: 10s

# retries: 5

# restart: always

next, run in terminal

docker-compose up airflow-init

docker-compose up

then run in server2 worker:

mkdir tmp-airflow

cd tmp-airflow

curl -LfO 'https://airflow.apache.org/docs/apache-airflow/2.1.2/docker-compose.yaml'

mkdir ./dags ./logs ./plugins

echo -e "AIRFLOW_UID=$(id -u)\nAIRFLOW_GID=0" > .env

cat ./.env

docker run --rm -it -e AIRFLOW__CORE__EXECUTOR="CeleryExecutor" -e AIRFLOW__CORE__SQL_ALCHEMY_CONN="postgresql+psycopg2://airflow:airflow@10.0.0.197:5432/airflow" e AIRFLOW__CELERY__RESULT_BACKEND="db+postgresql://airflow:airflow@10.0.0.197:5432/airflow" -e AIRFLOW__CELERY__BROKER_URL="redis://:@10.0.0.197:6380/0" -e AIRFLOW__CORE__FERNET_KEY="" -e AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION="true" -e AIRFLOW__CORE__LOAD_EXAMPLES="true" -e AIRFLOW__API__AUTH_BACKEND="airflow.api.auth.backend.basic_auth" -e _PIP_ADDITIONAL_REQUIREMENTS="" -v /home/apatshin/tmp/airflow-worker/dags:/opt/airflow/dags -v /home/apatshin/tmp/airflow-worker/logs:/opt/airflow/logs -v /home/apatshin/tmp/airflow-worker/plugins:/opt/airflow/plugins -p "6380:6379" -p "5432:5432" -p "8793:8793" -e DB_HOST="10.0.0.197" --user 1012:0 --hostname="host197" "apache/airflow:2.1.2-python3.7" celery worker

10.0.0.197 -- ip server1

host197 -- naming in local network for server2

--user 1012:0 -- 1012 it is in file ./.env in "AIRFLOW_UID"

see in you files and replace:

cat ./.env

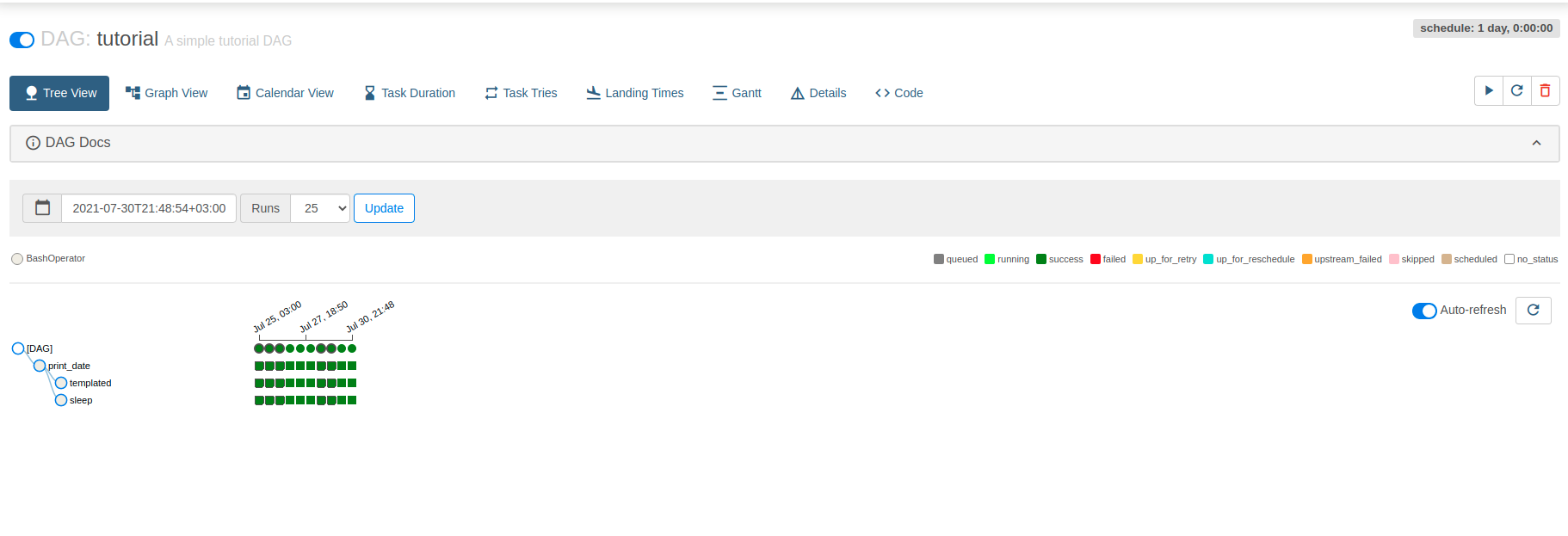

next, run DAG in webserver on server1:

then go to in browser to 10.0.0.197:8080 or 0.0.0.0:8080

and run DAG "tutorial"

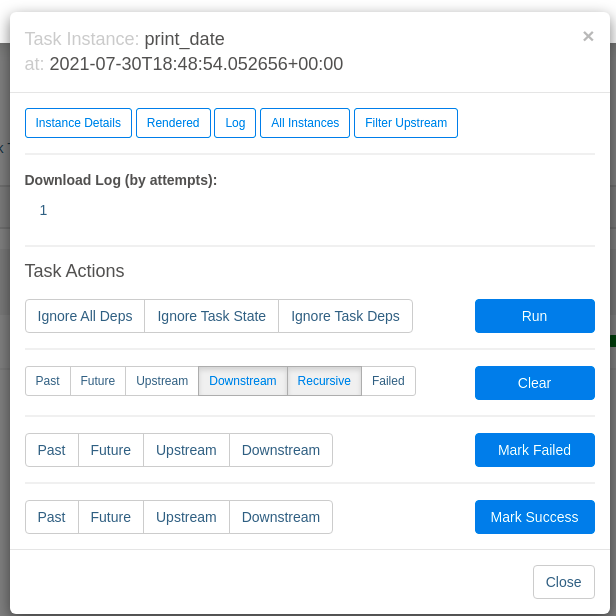

and then see log on ferst tasks

errors

and see next:

*** Log file does not exist: /opt/airflow/logs/tutorial/print_date/2021-07-30T18:48:54.052656+00:00/1.log

*** Fetching from: http://host197:8793/log/tutorial/print_date/2021-07-30T18:48:54.052656+00:00/1.log

*** Failed to fetch log file from worker. 403 Client Error: FORBIDDEN for url: http://host197:8793/log/tutorial/print_date/2021-07-30T18:48:54.052656+00:00/1.log

For more information check: https://httpstatuses.com/403

in terminal in server2:

[2021-07-30 18:49:12,966] {_internal.py:113} INFO - 10.0.0.197 - - [30/Jul/2021 18:49:12] "GET /log/tutorial/print_date/2021-07-30T18:48:54.052656+00:00/1.log HTTP/1.1" 403 -