Closed

Description

Apache Airflow version: 2.1.0

OS: macOS 11.3.1

Apache Airflow Provider versions: No relevant provider.

Deployment: Docker-compose version 1.29.1

What happened:

The string 'airflow' in bash_command was rendered as '***'.

In Task Instance Details

Attribute: bash_command

pip3 install -r /opt/airflow/dags/tw-financial-report-analysis/dag/af_requirements.txt

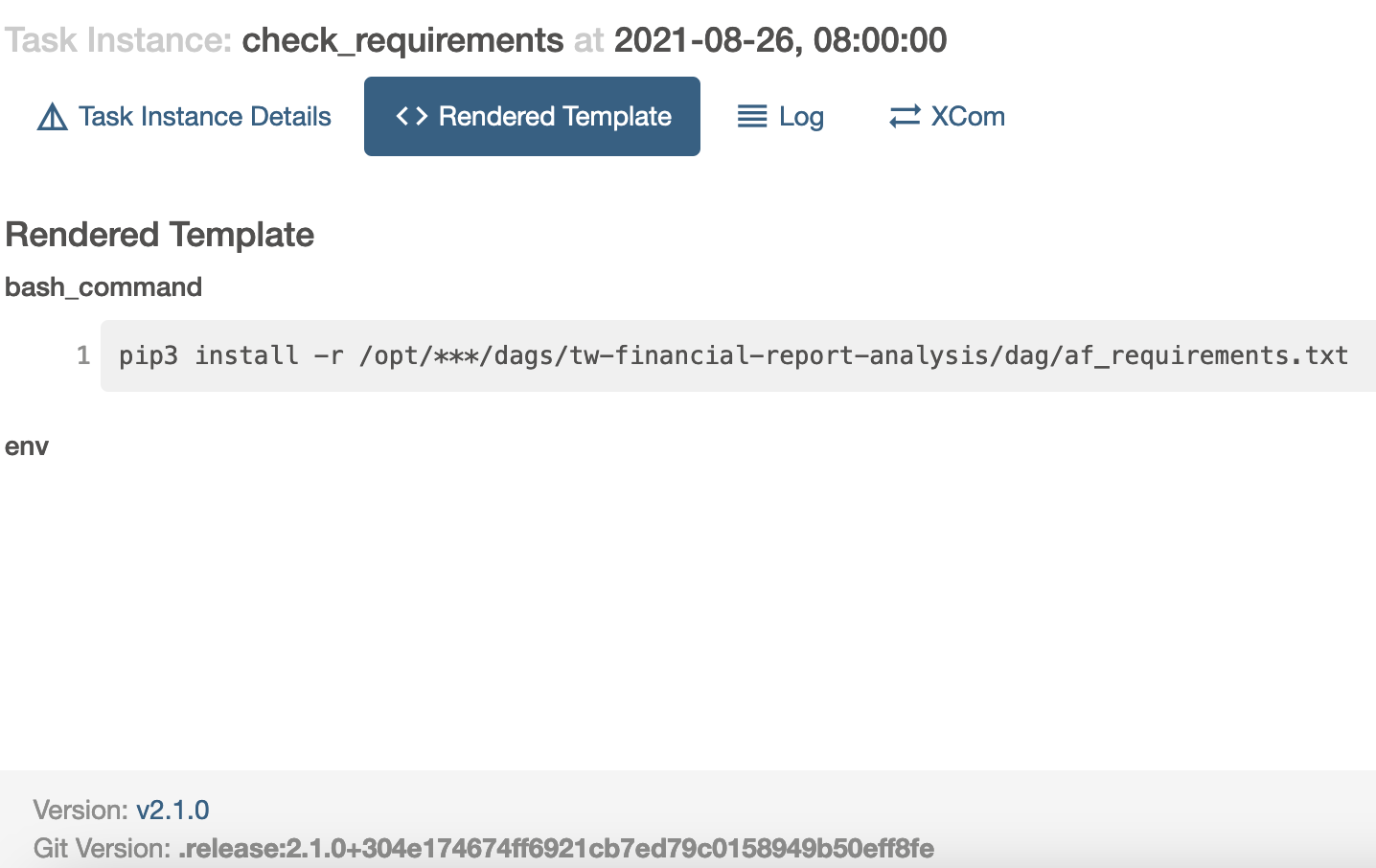

In Rendered Template

bash_command

pip3 install -r /opt/***/dags/tw-financial-report-analysis/dag/af_requirements.txt
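A possible explanation, stated here as an assumption that this report does not confirm: Airflow 2.1 added masking of sensitive values in logs and rendered fields, and the compose file in this report uses `airflow` as a database password. The sketch below only illustrates the general idea of such masking. `mask_secrets` is a hypothetical helper written for this example, not Airflow's implementation.

```python
import re
from typing import Iterable

MASK = "***"


def mask_secrets(text: str, secrets: Iterable[str]) -> str:
    """Replace every occurrence of any registered secret with MASK (hypothetical helper)."""
    # Skip empty strings, otherwise the pattern would match at every position.
    candidates = sorted((s for s in secrets if s), key=len, reverse=True)
    if not candidates:
        return text
    pattern = re.compile("|".join(re.escape(s) for s in candidates))
    return pattern.sub(MASK, text)


command = "pip3 install -r /opt/airflow/dags/tw-financial-report-analysis/dag/af_requirements.txt"
# Assumption: the password 'airflow' has been registered as a secret.
masked = mask_secrets(command, {"airflow"})
print(masked)
```

If this is what happens, the substitution affects only what is displayed. The command passed to bash would still contain the original path.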

What you expected to happen:

The rendered string shouldn't be changed.

How to reproduce it:

Run a BashOperator whose bash_command attribute includes the string 'airflow'.

Anything else we need to know:

The log of the failed task:

--------------------------------------------------------------------------------

[2021-08-27 14:09:16,193] {taskinstance.py:1068} INFO - Starting attempt 4 of 4

[2021-08-27 14:09:16,196] {taskinstance.py:1069} INFO -

--------------------------------------------------------------------------------

[2021-08-27 14:09:16,206] {taskinstance.py:1087} INFO - Executing <Task(BashOperator): check_requirements> on 2021-08-26T00:00:00+00:00

[2021-08-27 14:09:16,212] {standard_task_runner.py:52} INFO - Started process 41 to run task

[2021-08-27 14:09:16,217] {standard_task_runner.py:76} INFO - Running: ['***', 'tasks', 'run', 'setup_parse_financial_report', 'check_requirements', '2021-08-26T00:00:00+00:00', '--job-id', '7', '--pool', 'default_pool', '--raw', '--subdir', 'DAGS_FOLDER/tw-financial-report-analysis/dags/parse_financial_report/setup_project.py', '--cfg-path', '/tmp/tmp8e3r9kye', '--error-file', '/tmp/tmpvcm3zeju']

[2021-08-27 14:09:16,218] {standard_task_runner.py:77} INFO - Job 7: Subtask check_requirements

[2021-08-27 14:09:16,280] {logging_mixin.py:104} INFO - Running <TaskInstance: setup_parse_financial_report.check_requirements 2021-08-26T00:00:00+00:00 [running]> on host f5719825fcd2

[2021-08-27 14:09:16,341] {taskinstance.py:1280} INFO - Exporting the following env vars:

AIRFLOW_CTX_DAG_OWNER=sean

AIRFLOW_CTX_DAG_ID=setup_parse_financial_report

AIRFLOW_CTX_TASK_ID=check_requirements

AIRFLOW_CTX_EXECUTION_DATE=2021-08-26T00:00:00+00:00

AIRFLOW_CTX_DAG_RUN_ID=scheduled__2021-08-26T00:00:00+00:00

[2021-08-27 14:09:16,343] {subprocess.py:52} INFO - Tmp dir root location:

/tmp

[2021-08-27 14:09:16,345] {subprocess.py:63} INFO - Running command: ['bash', '-c', 'pip3 install -r /opt/***/dags/tw-financial-report-analysis/dag/af_requirements.txt']

[2021-08-27 14:09:16,356] {subprocess.py:75} INFO - Output:

[2021-08-27 14:09:16,916] {subprocess.py:79} INFO - WARNING: The directory '/home/***/.cache/pip' or its parent directory is not owned or is not writable by the current user. The cache has been disabled. Check the permissions and owner of that directory. If executing pip with sudo, you should use sudo's -H flag.

[2021-08-27 14:09:16,919] {subprocess.py:79} INFO - Defaulting to user installation because normal site-packages is not writeable

[2021-08-27 14:09:16,980] {subprocess.py:79} INFO - ERROR: Could not open requirements file: [Errno 2] No such file or directory: '/opt/***/dags/tw-financial-report-analysis/dag/af_requirements.txt'

[2021-08-27 14:09:17,297] {subprocess.py:79} INFO - WARNING: You are using pip version 21.1.1; however, version 21.2.4 is available.

[2021-08-27 14:09:17,299] {subprocess.py:79} INFO - You should consider upgrading via the '/usr/local/bin/python -m pip install --upgrade pip' command.

[2021-08-27 14:09:17,357] {subprocess.py:83} INFO - Command exited with return code 1

[2021-08-27 14:09:17,370] {taskinstance.py:1481} ERROR - Task failed with exception

Traceback (most recent call last):
  File "/home/airflow/.local/lib/python3.8/site-packages/airflow/models/taskinstance.py", line 1137, in _run_raw_task
    self._prepare_and_execute_task_with_callbacks(context, task)
  File "/home/airflow/.local/lib/python3.8/site-packages/airflow/models/taskinstance.py", line 1311, in _prepare_and_execute_task_with_callbacks
    result = self._execute_task(context, task_copy)
  File "/home/airflow/.local/lib/python3.8/site-packages/airflow/models/taskinstance.py", line 1341, in _execute_task
    result = task_copy.execute(context=context)
  File "/home/airflow/.local/lib/python3.8/site-packages/airflow/operators/bash.py", line 180, in execute
    raise AirflowException('Bash command failed. The command returned a non-zero exit code.')
airflow.exceptions.AirflowException: Bash command failed. The command returned a non-zero exit code.

[2021-08-27 14:09:17,373] {taskinstance.py:1524} INFO - Marking task as FAILED. dag_id=setup_parse_financial_report, task_id=check_requirements, execution_date=20210826T000000, start_date=20210827T140916, end_date=20210827T140917

[2021-08-27 14:09:17,401] {local_task_job.py:151} INFO - Task exited with return code 1

The docker-compose YAML:

version: '3'
x-airflow-common:
  &airflow-common
  image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.1.0-python3.8}
  environment:
    &airflow-common-env
    AIRFLOW__CORE__EXECUTOR: CeleryExecutor
    AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__RESULT_BACKEND: db+postgresql://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__BROKER_URL: redis://:@redis:6379/0
    AIRFLOW__CORE__FERNET_KEY: ''
    AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
    AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
  volumes:
    - ./dags:/opt/airflow/dags
    - ./logs:/opt/airflow/logs
    - ./plugins:/opt/airflow/plugins
  user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
  depends_on:
    redis:
      condition: service_healthy
    postgres:
      condition: service_healthy

services:
  mongo:
    image: mongo
    hostname: stock_mongo
    restart: always
    ports:
      - 27017:27017
    environment:
      MONGO_INITDB_ROOT_USERNAME: root
      MONGO_INITDB_ROOT_PASSWORD: example

  postgres:
    image: postgres:13
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      POSTGRES_DB: airflow
    volumes:
      - postgres-db-volume:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "airflow"]
      interval: 5s
      retries: 5
    restart: always

  redis:
    image: redis:latest
    ports:
      - 6379:6379
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 5s
      timeout: 30s
      retries: 50
    restart: always

  airflow-webserver:
    <<: *airflow-common
    command: webserver
    ports:
      - 8080:8080
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always

  airflow-scheduler:
    <<: *airflow-common
    command: scheduler
    restart: always

  airflow-worker:
    <<: *airflow-common
    command: celery worker
    restart: always

  airflow-init:
    <<: *airflow-common
    command: version
    environment:
      <<: *airflow-common-env
      _AIRFLOW_DB_UPGRADE: 'true'
      _AIRFLOW_WWW_USER_CREATE: 'true'
      _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
      _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}

  flower:
    <<: *airflow-common
    command: celery flower
    ports:
      - 5555:5555
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always

volumes:
  postgres-db-volume:
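To see which credentials the configuration above embeds, the connection URL from `AIRFLOW__CORE__SQL_ALCHEMY_CONN` can be split with the Python standard library. This is only a diagnostic sketch. That the password is the value being masked in the rendered command is an assumption, not something this report establishes.

```python
from urllib.parse import urlsplit

# Value copied from AIRFLOW__CORE__SQL_ALCHEMY_CONN in the compose file above.
conn_url = "postgresql+psycopg2://airflow:airflow@postgres/airflow"
parts = urlsplit(conn_url)

command = "pip3 install -r /opt/airflow/dags/tw-financial-report-analysis/dag/af_requirements.txt"

# Check whether the database password also appears verbatim in the bash command.
password_in_command = parts.password is not None and parts.password in command

print("user:", parts.username)
print("password:", parts.password)
print("password appears in bash_command:", password_in_command)
```

Here the password and the directory name in the command are the same string, so any masking keyed on that password would also rewrite the displayed path.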

Are you willing to submit a PR?

I'm not ready for this.