
Description

Apache Airflow Provider(s)

elasticsearch

Versions of Apache Airflow Providers

apache-airflow-providers-amazon==3.0.0

apache-airflow-providers-celery==2.1.0

apache-airflow-providers-cncf-kubernetes==3.0.2

apache-airflow-providers-docker==2.4.1

apache-airflow-providers-elasticsearch==2.2.0

apache-airflow-providers-ftp==2.0.1

apache-airflow-providers-google==6.4.0

apache-airflow-providers-grpc==2.0.1

apache-airflow-providers-hashicorp==2.1.1

apache-airflow-providers-http==2.0.3

apache-airflow-providers-imap==2.2.0

apache-airflow-providers-microsoft-azure==3.6.0

apache-airflow-providers-mysql==2.2.0

apache-airflow-providers-odbc==2.0.1

apache-airflow-providers-postgres==4.1.0

apache-airflow-providers-redis==2.0.1

apache-airflow-providers-sendgrid==2.0.1

apache-airflow-providers-sftp==2.4.1

apache-airflow-providers-slack==4.2.0

apache-airflow-providers-sqlite==2.1.0

apache-airflow-providers-ssh==2.4.0

Apache Airflow version

2.2.4

Operating System

"Debian GNU/Linux 10 (buster)"

Deployment

Official Apache Airflow Helm Chart

Deployment details

Using the official Helm chart with a custom image. The custom image is based on the official image, with some plugins and Python packages added.

What happened

Airflow is deployed in Kubernetes.

The DAGs are configured to log to stdout.

The logs are picked up by Fluent Bit and forwarded to OpenSearch.

When I view the logs for a completed DAG in the Airflow web interface, the logs display exactly as expected, but the page also appears to keep refreshing and checking for more logs.

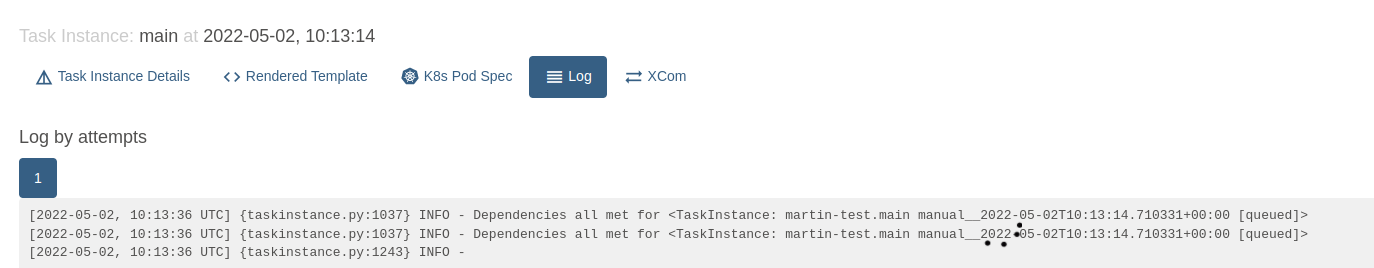

In the screenshot, the loading spinner (the dots partially covering the first two log lines, about halfway across) never stops moving.

In the Airflow logs, I can also see it repeatedly querying Elasticsearch for this DAG's logs:

[2022-05-02 14:18:55,496] {base.py:270} INFO - POST https://URL-for-our-opensearch-instance.es.amazonaws.com:443/_count [status:200 request:0.049s]

[02/May/2022:14:18:55 +0000] "GET /get_logs_with_metadata?dag_id=martin-test&task_id=main&execution_date=2022-05-02T10%3A13%3A14.710331%2B00%3A00&try_number=1&metada....
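The repeated `_count` queries and `get_logs_with_metadata` requests look like a client that keeps polling until the server says the log is finished. The sketch below simulates that pattern under stated assumptions: `make_fake_endpoint` is a hypothetical stand-in for the log endpoint, and the `offset` and `end_of_log` metadata keys are assumed for illustration rather than copied from Airflow's code. It shows that if the backend never reports end of log, polling only stops at an artificial cap.

```python
from typing import Callable, Dict, List, Tuple

Metadata = Dict[str, object]
Fetch = Callable[[Metadata], Tuple[str, Metadata]]


def poll_logs(fetch: Fetch, max_polls: int = 10) -> Tuple[List[str], int]:
    """Request log chunks until metadata reports end of log (or max_polls is hit)."""
    metadata: Metadata = {}
    chunks: List[str] = []
    polls = 0
    while polls < max_polls:  # cap so the demo always terminates
        chunk, metadata = fetch(metadata)
        polls += 1
        if chunk:
            chunks.append(chunk)
        if metadata.get("end_of_log"):
            break
    return chunks, polls


def make_fake_endpoint(lines: List[str], marks_end: bool) -> Fetch:
    """Hypothetical stand-in for the log endpoint: serves one line per call."""
    def fetch(metadata: Metadata) -> Tuple[str, Metadata]:
        offset = int(metadata.get("offset", 0))
        chunk = lines[offset] if offset < len(lines) else ""
        next_offset = min(offset + 1, len(lines))
        # Without an end-of-log signal from the backend, this stays False forever.
        done = marks_end and next_offset == len(lines)
        return chunk, {"offset": next_offset, "end_of_log": done}
    return fetch


lines = ["start", "working", "done"]
print(poll_logs(make_fake_endpoint(lines, marks_end=True)))   # (['start', 'working', 'done'], 3)
print(poll_logs(make_fake_endpoint(lines, marks_end=False)))  # (['start', 'working', 'done'], 10)
```

Both runs return the same three lines; only the run whose backend signals the end stops early.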

What you think should happen instead

This DAG run has completed and is shown as such in the web interface. No more logs will be produced, so there is no need to keep searching for more logs or tailing them.

If a DAG run is only partway complete, I would like the UI to keep tailing and refreshing the logs.
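A minimal sketch of the requested behavior, assuming the log view knows the task instance's state and has an end-of-log flag. `should_keep_tailing` and the set of state names treated as finished are hypothetical, chosen for illustration; this is not how Airflow currently decides.

```python
# Hypothetical: task states treated as "finished" for this sketch.
FINISHED_STATES = frozenset({"success", "failed", "skipped", "upstream_failed"})


def should_keep_tailing(task_state: str, end_of_log: bool) -> bool:
    """Return True if the log view should keep polling for new lines."""
    if end_of_log:
        return False  # the backend already reported that the log is complete
    # Keep tailing only while the task can still produce output.
    return task_state not in FINISHED_STATES


print(should_keep_tailing("running", end_of_log=False))  # True
print(should_keep_tailing("success", end_of_log=False))  # False
```

Stopping the instant a task finishes could cut off the last lines still in transit through Fluent Bit and OpenSearch, so a real fix would probably allow a short grace period before giving up.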

How to reproduce

Deploy with the following config:

AIRFLOW__LOGGING__REMOTE_LOGGING: True

AIRFLOW__ELASTICSEARCH__HOST: "URL-for-our-opensearch-instance.es.amazonaws.com:443"

AIRFLOW__ELASTICSEARCH__JSON_FIELDS: "asctime, filename, lineno, levelname, message"

AIRFLOW__ELASTICSEARCH__JSON_FORMAT: True

AIRFLOW__ELASTICSEARCH__LOG_ID_TEMPLATE: "{dag_id}_{task_id}_{execution_date}_{try_number}"

AIRFLOW__ELASTICSEARCH__WRITE_STDOUT: True

AIRFLOW__ELASTICSEARCH_CONFIGS__USE_SSL: True
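`LOG_ID_TEMPLATE` uses Python `str.format`-style placeholders, and the Elasticsearch handler looks up log documents by a `log_id` built from it, so the documents Fluent Bit ships must carry a matching value. As a rough sketch, the snippet below fills the template from the query parameters visible in the request above (the truncated `metada...` parameter is left out). `render_log_id` is a hypothetical helper; Airflow may reformat `execution_date` before substituting it (for example with JSON output enabled), so the real string can differ.

```python
from urllib.parse import parse_qs

# Same template as AIRFLOW__ELASTICSEARCH__LOG_ID_TEMPLATE above.
LOG_ID_TEMPLATE = "{dag_id}_{task_id}_{execution_date}_{try_number}"


def render_log_id(query: str, template: str = LOG_ID_TEMPLATE) -> str:
    """Hypothetical helper: decode a query string and fill the log_id template."""
    # parse_qs percent-decodes values (%3A -> ':', %2B -> '+') and returns lists.
    params = {key: values[0] for key, values in parse_qs(query).items()}
    return template.format(**params)


query = (
    "dag_id=martin-test&task_id=main"
    "&execution_date=2022-05-02T10%3A13%3A14.710331%2B00%3A00&try_number=1"
)
print(render_log_id(query))
# martin-test_main_2022-05-02T10:13:14.710331+00:00_1
```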

Run a simple DAG that just writes some logs to stdout. These logs need to be shipped to OpenSearch by Fluent Bit, Fluentd, or a similar forwarder.
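For the reproduction step above, a task body as small as the following should be enough, assuming (as is usual) that a task's stdout is captured into its task log. `emit_test_logs` is a hypothetical callable; wire it into a DAG with `PythonOperator` (omitted here so the sketch runs without Airflow installed).

```python
import time
from typing import List


def emit_test_logs(count: int = 5, delay_seconds: float = 0.0) -> List[str]:
    """Hypothetical task body: print a few numbered lines to stdout and return them."""
    lines: List[str] = []
    for i in range(count):
        line = f"test log line {i}"
        # flush=True pushes the line out of Python's buffer immediately
        print(line, flush=True)
        lines.append(line)
        if delay_seconds > 0:
            time.sleep(delay_seconds)  # optional pause to spread lines over time
    return lines


if __name__ == "__main__":
    emit_test_logs(count=3)
```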

Once the DAG run has completed successfully, open it and view the task logs.

Anything else

No response

Are you willing to submit PR?

- Yes I am willing to submit a PR!

Code of Conduct

- I agree to follow this project's Code of Conduct