Add Amazon Redshift-data to S3<>RS Transfer Operators #27947

eladkal merged 17 commits into apache:main



To avoid any further regression errors, I would suggest moving the tests for these methods from the operator to the hook.

Thanks. Yes, I copied the tests from the operator to the hook, and left the operator tests in place while we still have the deprecated public methods there.

Although this is fine, as mentioned by @Taragolis, I would update the operator tests to only check that the hook is called correctly. Operator tests should not check the implementation of the hook.
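A minimal sketch of what such an operator test could look like. The `FakeDataHook` and `FakeDataOperator` classes below are hypothetical stand-ins, not the Airflow classes from this PR; they only illustrate asserting on the call to the hook rather than on the hook's behaviour.

```python
from unittest import mock


class FakeDataHook:
    """Hypothetical stand-in for the real hook (not Airflow's class)."""

    def execute_query(self, sql, database):
        # In a real hook this would call AWS; operator tests should never get here.
        raise RuntimeError("real AWS call; should be mocked in operator tests")


class FakeDataOperator:
    """Hypothetical operator that delegates all work to its hook."""

    def __init__(self, sql, database):
        self.sql = sql
        self.database = database
        self.hook = FakeDataHook()

    def execute(self, context):
        return self.hook.execute_query(sql=self.sql, database=self.database)


def test_operator_delegates_to_hook():
    op = FakeDataOperator(sql="SELECT 1", database="dev")
    # Replace the hook method so only the delegation is verified.
    with mock.patch.object(op.hook, "execute_query", return_value="stmt-id") as m:
        result = op.execute(context={})
    m.assert_called_once_with(sql="SELECT 1", database="dev")
    assert result == "stmt-id"
```

The hook's own behaviour (polling, error handling) would then be covered once, in the hook's test module.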

vincbeck left a comment


Good changes overall! Just some minor comments

    secret_arn: str | None = None,
    statement_name: str | None = None,
    with_event: bool = False,
    await_result: bool = True,


By convention, wait_for_completion is the name usually used for this kind of flag.


This was the flag name in the existing code, should we really change it?


Oh yeah, good point. We can either rename it, in which case it has to go through the deprecation pattern first (since it is a breaking change), or leave it as is. I am fine keeping it as is.
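For reference, a rough sketch of the kind of deprecation pattern meant here, assuming the old keyword is kept for a while and mapped onto the new one with a warning. The function below is hypothetical and only shows the argument handling; it is not code from this PR.

```python
import warnings


def execute_query(sql, wait_for_completion=True, await_result=None):
    """Hypothetical function; only the argument handling matters here."""
    if await_result is not None:
        # Old name still works, but callers are told to migrate.
        warnings.warn(
            "await_result is deprecated; use wait_for_completion instead.",
            DeprecationWarning,
            stacklevel=2,
        )
        wait_for_completion = await_result
    return {"sql": sql, "wait_for_completion": wait_for_completion}
```

Existing callers that pass `await_result` keep working and see a `DeprecationWarning`; new callers use `wait_for_completion` only.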


This is net-new code (as far as the hook is concerned), so we can rename it here to wait_for_completion without any back-compat issues, and leave the name in the operator as await_result for back compat (since that was publicly available before). The names will be inconsistent between the two classes, but this could be seen as a step in the right direction.


Yes, please, let's rename. Better to stay consistent.


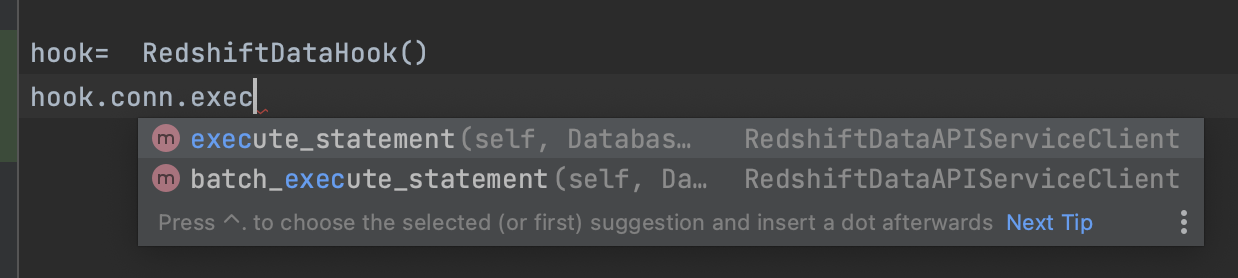

One thing to keep in mind: we more or less have a convention, especially with AWS since we have the helpful boto3 stubs library, that we should not add "alias methods" to hooks. That is, when the underlying client method works perfectly well by itself, we should not add a method to the hook that simply forwards the kwargs to boto3. That just adds maintenance and a layer that users may need to decipher.

These AWS hooks are designed in such a way that autocomplete works for boto3 client methods (e.g. see here), which means that in many cases there is no need to add the hook method: just use the client. That's what this operator does, and that's a good thing. More code sharing isn't always better, because it makes changes tougher.

If we add meaningful functionality to the hook then sure, add a method. In this case I can see wait_for_result potentially being useful. But, if you would not mind, I would recommend going straight to your transfer operator and doing any necessary refactors as part of that, rather than saying "this is needed for this other PR", because verifying that is easier when we have the actual PR.
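To make the "alias method" point concrete, here is a small sketch. `FakeRedshiftDataClient` is a hypothetical stand-in for the boto3 client so the example runs offline, and both hook classes are illustrative, not Airflow's.

```python
class FakeRedshiftDataClient:
    """Hypothetical stand-in for the boto3 client, so the sketch runs offline."""

    def list_databases(self, **kwargs):
        return {"Databases": ["dev"], "Received": kwargs}


class HookWithAlias:
    """Discouraged: the method only forwards kwargs to the client."""

    def __init__(self, client):
        self.conn = client

    def list_databases(self, **kwargs):
        return self.conn.list_databases(**kwargs)


class ThinHook:
    """Preferred: expose the client and let callers use it directly."""

    def __init__(self, client):
        self.conn = client


client = FakeRedshiftDataClient()
via_alias = HookWithAlias(client).list_databases(Database="dev")
via_client = ThinHook(client).conn.list_databases(Database="dev")
```

Both calls return the same thing; the alias adds a method to maintain and document without adding behaviour.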


This pull request has been automatically marked as stale because it has not had recent activity. It will be closed in 5 days if no further activity occurs. Thank you for your contributions.


Hey @yehoshuadimarsky, any updates on this one, specifically regarding the feedback from Daniel?


Will take a look.


@dstandish I think there are two approaches we can do here.

Not sure what the optimal approach is going forward. As you suggested, I started work on the actual transfer operators with S3, and quickly ran into the question of how to model the "extra" connection params that the Data API needs. What do you (and the greater Airflow community) think or suggest?

Not a problem at all; that is how it is intended to be used: Single Operator -> Single Hook -> Single Credentials.

And actually users could use |


So does that mean you think that it actually makes sense to add this new thin client wrapper on top of the hook?


Thin. IMHO, and this is a personal thought: "The last thing we want is to add additional arguments to the hook and make it a thick wrapper". There are a couple of hooks that accept additional arguments, and they have turned into hell to support, test, and use. You can check Redshift.Client and find that not every method requires ClusterIdentifier, or the other fields you described.


OK, per the comments above, I added the Redshift Data API to the S3 -> RS transfer operator.


Also updated the reverse transfer, RS -> S3, with an option to use the Redshift Data API.
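A rough sketch of how such an option could be wired into a transfer, shown for the S3 -> RS direction. Everything here is illustrative: `build_copy_sql`, `run_transfer`, and the `data_api_kwargs` argument are hypothetical names, and the two runner callables stand in for the SQL hook and the Data API hook.

```python
def build_copy_sql(schema, table, s3_bucket, s3_key, iam_role_arn):
    """Build a Redshift COPY statement for one S3 location."""
    return (
        f"COPY {schema}.{table} "
        f"FROM 's3://{s3_bucket}/{s3_key}' "
        f"IAM_ROLE '{iam_role_arn}';"
    )


def run_transfer(sql, sql_runner, data_api_runner, data_api_kwargs=None):
    """Send the statement through the Data API only when kwargs are given."""
    if data_api_kwargs:
        return data_api_runner(sql=sql, **data_api_kwargs)
    return sql_runner(sql)


# Usage with recording callables in place of real hooks.
calls = []
sql = build_copy_sql("public", "events", "my-bucket", "data/events.csv",
                     "arn:aws:iam::123456789012:role/example")
run_transfer(sql, lambda s: calls.append(("sql", s)),
             lambda **kw: calls.append(("data_api", kw)))
run_transfer(sql, lambda s: calls.append(("sql", s)),
             lambda **kw: calls.append(("data_api", kw)),
             data_api_kwargs={"database": "dev", "cluster_identifier": "my-cluster"})
```

Keeping the default path on the SQL connection means existing DAGs are unaffected unless they opt in.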


@Taragolis @o-nikolas @dstandish @eladkal @vincbeck is anyone able to review the new changes? This is now the full PR adding the Redshift Data API to all the transfer operators. Thanks.



@eladkal @Taragolis @dstandish anyone able to merge this?



Updated with requested changes.


@eladkal are you satisfied with the changes in response to your request?

Refactored the Amazon Redshift Data API hook and operator to move the core logic into the hook instead of the operator. This will allow me in the future to implement a Redshift Data API version of the S3 to Redshift transfer operator without having to duplicate the core logic.
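As an illustration of the kind of core logic that can live in the hook, here is a minimal sketch of "execute, then optionally wait". It assumes a boto3 `redshift-data` style client exposing `execute_statement` and `describe_statement`; the function names `poll_statement` and `execute_query` and their parameters are hypothetical, not the PR's actual code.

```python
import time


def poll_statement(client, statement_id, poll_interval=1.0):
    """Poll describe_statement until the statement finishes or fails."""
    while True:
        resp = client.describe_statement(Id=statement_id)
        status = resp["Status"]
        if status == "FINISHED":
            return status
        if status in ("FAILED", "ABORTED"):
            raise RuntimeError(
                f"Statement {statement_id} ended with {status}: {resp.get('Error')}"
            )
        time.sleep(poll_interval)


def execute_query(client, sql, database, cluster_identifier,
                  wait_for_completion=True, poll_interval=1.0):
    """Submit a statement and return its id, optionally waiting for it."""
    resp = client.execute_statement(
        ClusterIdentifier=cluster_identifier, Database=database, Sql=sql
    )
    statement_id = resp["Id"]
    if wait_for_completion:
        poll_statement(client, statement_id, poll_interval)
    return statement_id
```

With this in the hook, both the existing operator and a transfer operator can call the same function instead of each carrying its own polling loop.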
