…tions ### What changes were proposed in this pull request? This PR adds a doc builder for Spark SQL's configuration options. Here's what the new Spark SQL config docs look like ([configuration.html.zip](https://github.com/apache/spark/files/4172109/configuration.html.zip)):  Compare this to the [current docs](http://spark.apache.org/docs/3.0.0-preview2/configuration.html#spark-sql):  ### Why are the changes needed? There is no visibility into the various Spark SQL configs on [the config docs page](http://spark.apache.org/docs/3.0.0-preview2/configuration.html#spark-sql). ### Does this PR introduce any user-facing change? No, apart from new documentation. ### How was this patch tested? I tested this manually by building the docs and reviewing them in my browser. Closes apache#27459 from nchammas/SPARK-30510-spark-sql-options. Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 339c0f9) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request? Simplify the changes for adding metrics description for WholeStageCodegen in apache#27405 ### Why are the changes needed? In apache#27405, the UI changes can be made without using the function `adjustPositionOfOperationName` to adjust the position of operation name and mark as an operation-name class. I suggest we make simpler changes so that it would be easier for future development. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Manual test with the queries provided in apache#27405 ``` sc.parallelize(1 to 10).toDF.sort("value").filter("value > 1").selectExpr("value * 2").show sc.parallelize(1 to 10).toDF.sort("value").filter("value > 1").selectExpr("value * 2").write.format("json").mode("overwrite").save("/tmp/test_output") sc.parallelize(1 to 10).toDF.write.format("json").mode("append").save("/tmp/test_output") ```  Closes apache#27490 from gengliangwang/wholeCodegenUI. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com> (cherry picked from commit b877aac) Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

…roperty at a time ### What changes were proposed in this pull request? The current ALTER COLUMN syntax allows to change multiple properties at a time: ``` ALTER TABLE table=multipartIdentifier (ALTER | CHANGE) COLUMN? column=multipartIdentifier (TYPE dataType)? (COMMENT comment=STRING)? colPosition? ``` The SQL standard (section 11.12) only allows changing one property at a time. This is also true on other recent SQL systems like [snowflake](https://docs.snowflake.net/manuals/sql-reference/sql/alter-table-column.html) and [redshift](https://docs.aws.amazon.com/redshift/latest/dg/r_ALTER_TABLE.html). (credit to cloud-fan) This PR proposes to change ALTER COLUMN to follow SQL standard, thus allows altering only one column property at a time. Note that ALTER COLUMN syntax being changed here is newly added in Spark 3.0, so it doesn't affect Spark 2.4 behavior. ### Why are the changes needed? To follow SQL standard (and other recent SQL systems) behavior. ### Does this PR introduce any user-facing change? Yes, now the user can update the column properties only one at a time. For example, ``` ALTER TABLE table1 ALTER COLUMN a.b.c TYPE bigint COMMENT 'new comment' ``` should be broken into ``` ALTER TABLE table1 ALTER COLUMN a.b.c TYPE bigint ALTER TABLE table1 ALTER COLUMN a.b.c COMMENT 'new comment' ``` ### How was this patch tested? Updated existing tests. Closes apache#27444 from imback82/alter_column_one_at_a_time. Authored-by: Terry Kim <yuminkim@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>
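
The one-property-per-statement rule can be illustrated with a small helper that turns a combined request into separate statements. This is only a sketch: `AlterColumnSketch` and `statements` are hypothetical names, not part of Spark, and the comment text is assumed to contain no single quotes.

```scala
object AlterColumnSketch {
  // Hypothetical helper: emits one ALTER COLUMN statement per requested property.
  // No escaping is done, so the comment is assumed to contain no single quotes.
  def statements(
      table: String,
      column: String,
      newType: Option[String],
      newComment: Option[String]): Seq[String] = {
    val prefix = s"ALTER TABLE $table ALTER COLUMN $column"
    newType.map(t => s"$prefix TYPE $t").toSeq ++
      newComment.map(c => s"$prefix COMMENT '$c'").toSeq
  }
}
```

Calling `AlterColumnSketch.statements("table1", "a.b.c", Some("bigint"), Some("new comment"))` yields the two statements shown in the example above, type change first.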

### What changes were proposed in this pull request? Add round-trip tests for CSV and JSON functions as apache#27317 (comment) asked. ### Why are the changes needed? improve test coverage ### Does this PR introduce any user-facing change? no ### How was this patch tested? add uts Closes apache#27510 from yaooqinn/SPARK-30592-F. Authored-by: Kent Yao <yaooqinn@hotmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 58b9ca1) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

…ehavior ### What changes were proposed in this pull request? This PR updates the documentation on `TableCatalog.alterTable`s behavior on the order by which the requested changes are applied. It now explicitly mentions that the changes are applied in the order given. ### Why are the changes needed? The current documentation on `TableCatalog.alterTable` doesn't mention which order the requested changes are applied. It will be useful to explicitly document this behavior so that the user can expect the behavior. For example, `REPLACE COLUMNS` needs to delete columns before adding new columns, and if the order is guaranteed by `alterTable`, it's much easier to work with the catalog API. ### Does this PR introduce any user-facing change? Yes, document change. ### How was this patch tested? Not added (doc changes). Closes apache#27496 from imback82/catalog_table_alter_table. Authored-by: Terry Kim <yuminkim@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 70e545a) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
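
Why the ordering guarantee matters for `REPLACE COLUMNS` can be seen in a toy model. The sketch below is not the `TableCatalog` API: `Change`, `DeleteColumn`, `AddColumn` and `applyInOrder` are hypothetical names, and a table is reduced to a vector of column names.

```scala
object OrderedChangesSketch {
  // Hypothetical, simplified change types; Spark's real TableChange API is richer.
  sealed trait Change
  final case class DeleteColumn(name: String) extends Change
  final case class AddColumn(name: String) extends Change

  // Applies the changes strictly in the order given.
  def applyInOrder(columns: Vector[String], changes: Seq[Change]): Vector[String] =
    changes.foldLeft(columns) {
      case (cols, DeleteColumn(n)) =>
        cols.filterNot(_ == n)
      case (cols, AddColumn(n)) =>
        require(!cols.contains(n), s"column $n already exists")
        cols :+ n
    }
}
```

Replacing columns `a, b` with a new column `a` works only if the deletes come before the add; with the add first, the sketch rejects the request because `a` still exists.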

… intentionally skip AQE ### What changes were proposed in this pull request? This is a follow-up to [apache#27452](apache#27452). Add a unit test to verify that the warning is logged when AQE is intentionally skipped. ### Why are the changes needed? To add a unit test. ### Does this PR introduce any user-facing change? No ### How was this patch tested? By adding a unit test. Closes apache#27515 from JkSelf/aqeLoggingWarningTest. Authored-by: jiake <ke.a.jia@intel.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 5a24060) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request? Enhance the RuleExecutor strategy to take different actions when max iterations are exceeded, and raise an exception if the analyzer exceeds max iterations. ### Why are the changes needed? Currently, both the analyzer and the optimizer only log a warning message if rule execution exceeds max iterations. They should behave differently: the analyzer should raise an exception to indicate that the plan has not reached a fixed point after max iterations, while the optimizer should just log a warning and keep the current plan. This is more feasible after SPARK-30138 was introduced. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Add test in AnalysisSuite Closes apache#26977 from Eric5553/EnhanceMaxIterations. Authored-by: Eric Wu <492960551@qq.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit b2011a2) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
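
The difference between the two behaviors can be sketched with a toy fixed-point executor. This is not Spark's `RuleExecutor`: `FixedPointSketch`, `FixedPoint`, `errorOnExceed` and `execute` are names assumed for illustration, and a "plan" is any value with structural equality.

```scala
object FixedPointSketch {
  // Hypothetical strategy: how many passes to allow, and whether exceeding them is an error.
  final case class FixedPoint(maxIterations: Int, errorOnExceed: Boolean)

  // Applies all rules repeatedly until the plan stops changing or the limit is hit.
  def execute[A](plan: A, rules: Seq[A => A], strategy: FixedPoint): A = {
    var current = plan
    var iteration = 1
    var keepGoing = true
    while (keepGoing) {
      val next = rules.foldLeft(current)((p, rule) => rule(p))
      if (next == current) {
        keepGoing = false // fixed point reached
      } else if (iteration >= strategy.maxIterations) {
        val message = s"Max iterations (${strategy.maxIterations}) reached"
        // Analyzer-like behavior: fail. Optimizer-like behavior: warn and keep the plan.
        if (strategy.errorOnExceed) throw new IllegalStateException(message)
        Console.err.println(s"WARN: $message")
        keepGoing = false
      }
      current = next
      iteration += 1
    }
    current
  }
}
```

With `errorOnExceed = true` a plan that has not converged aborts with an exception, while `errorOnExceed = false` returns the last plan produced.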

…ate without Project This reverts commit a0e63b6. ### What changes were proposed in this pull request? This reverts the patch at apache#26978 based on gatorsmile's suggestion. ### Why are the changes needed? Original patch apache#26978 has not considered a corner case. We may need to put more time on ensuring we can cover all cases. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Unit test. Closes apache#27504 from viirya/revert-SPARK-29721. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Xiao Li <gatorsmile@gmail.com>

…REATE TABLE ### What changes were proposed in this pull request? This is a follow-up for apache#24938 to tweak error message and migration doc. ### Why are the changes needed? Making user know workaround if SHOW CREATE TABLE doesn't work for some Hive tables. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Existing unit tests. Closes apache#27505 from viirya/SPARK-27946-followup. Authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Liang-Chi Hsieh <liangchi@uber.com> (cherry picked from commit acfdb46) Signed-off-by: Liang-Chi Hsieh <liangchi@uber.com>

….withThreadLocalCaptured ### What changes were proposed in this pull request? Follow up for apache#27267, reset the status changed in SQLExecution.withThreadLocalCaptured. ### Why are the changes needed? For code safety. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing UT. Closes apache#27516 from xuanyuanking/SPARK-30556-follow. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: herman <herman@databricks.com> (cherry picked from commit a6b91d2) Signed-off-by: herman <herman@databricks.com>

… Streaming API docs ### What changes were proposed in this pull request? - Fix the scope of `Logging.initializeForcefully` so that it doesn't appear in subclasses' public methods. Right now, `sc.initializeForcefully(false, false)` can be called. - Don't show classes under the `org.apache.spark.internal` package in API docs. - Add missing `since` annotation. - Fix the scope of `ArrowUtils` to remove it from the API docs. ### Why are the changes needed? Avoid leaking APIs unintentionally in Spark 3.0.0. ### Does this PR introduce any user-facing change? No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0. ### How was this patch tested? Manually generated the API docs and verified the above issues have been fixed. Closes apache#27528 from zsxwing/audit-ss-apis. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request? Fix PySpark test failures for using Pandas >= 1.0.0. ### Why are the changes needed? Pandas 1.0.0 has recently been released and has API changes that result in PySpark test failures, this PR fixes the broken tests. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Manually tested with Pandas 1.0.1 and PyArrow 0.16.0 Closes apache#27529 from BryanCutler/pandas-fix-tests-1.0-SPARK-30777. Authored-by: Bryan Cutler <cutlerb@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 07a9885) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

…ranch-3.0-test-sbt-hadoop-2.7-hive-2.3 ### What changes were proposed in this pull request? This PR tries apache#26710 (comment) way to fix the test. ### Why are the changes needed? To make the tests pass. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Jenkins will test first, and then `on spark-branch-3.0-test-sbt-hadoop-2.7-hive-2.3` will test it out. Closes apache#27513 from HyukjinKwon/test-SPARK-30756. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request? This brings apache#26324 back. It was reverted basically because, firstly Hive compatibility, and the lack of investigations in other DBMSes and ANSI. - In case of PostgreSQL seems coercing NULL literal to TEXT type. - Presto seems coercing `array() + array(1)` -> array of int. - Hive seems `array() + array(1)` -> array of strings Given that, the design choices have been differently made for some reasons. If we pick one of both, seems coercing to array of int makes much more sense. Another investigation was made offline internally. Seems ANSI SQL 2011, section 6.5 "<contextually typed value specification>" states: > If ES is specified, then let ET be the element type determined by the context in which ES appears. The declared type DT of ES is Case: > > a) If ES simply contains ARRAY, then ET ARRAY[0]. > > b) If ES simply contains MULTISET, then ET MULTISET. > > ES is effectively replaced by CAST ( ES AS DT ) From reading other related context, doing it to `NullType`. Given the investigation made, choosing to `null` seems correct, and we have a reference Presto now. Therefore, this PR proposes to bring it back. ### Why are the changes needed? When empty array is created, it should be declared as array<null>. ### Does this PR introduce any user-facing change? Yes, `array()` creates `array<null>`. Now `array(1) + array()` can correctly create `array(1)` instead of `array("1")`. ### How was this patch tested? Tested manually Closes apache#27521 from HyukjinKwon/SPARK-29462. Lead-authored-by: HyukjinKwon <gurwls223@apache.org> Co-authored-by: Aman Omer <amanomer1996@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 0045be7) Signed-off-by: HyukjinKwon <gurwls223@apache.org>
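
The effect of typing an empty array as `array<null>` can be modelled with a tiny type-widening function. This is a sketch under simplifying assumptions, not Spark's type coercion code: the `DataType` hierarchy below is a hypothetical stand-in with only the types needed for the example.

```scala
object EmptyArrayCoercionSketch {
  // Hypothetical, minimal type model (Spark's real DataType hierarchy is much larger).
  sealed trait DataType
  case object NullType extends DataType
  case object IntegerType extends DataType
  case object StringType extends DataType
  final case class ArrayType(elementType: DataType) extends DataType

  // Finds a common type; NullType widens to whatever the other side is.
  def widen(a: DataType, b: DataType): Option[DataType] = (a, b) match {
    case (x, y) if x == y => Some(x)
    case (NullType, other) => Some(other)
    case (other, NullType) => Some(other)
    case (ArrayType(x), ArrayType(y)) => widen(x, y).map(ArrayType(_))
    case _ => None
  }
}
```

In this model, combining `ArrayType(IntegerType)` with `ArrayType(NullType)` gives an array of integers, which mirrors the `array(1) + array()` behavior described above.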

…UNCACHE TABLE ### What changes were proposed in this pull request? Document updated for `CACHE TABLE` & `UNCACHE TABLE` ### Why are the changes needed? Cache table creates a temp view while caching data using `CACHE TABLE name AS query`. `UNCACHE TABLE` does not remove this temp view. These things were not mentioned in the existing doc for `CACHE TABLE` & `UNCACHE TABLE`. ### Does this PR introduce any user-facing change? Document updated for `CACHE TABLE` & `UNCACHE TABLE` command. ### How was this patch tested? Manually Closes apache#27090 from iRakson/SPARK-27545. Lead-authored-by: root1 <raksonrakesh@gmail.com> Co-authored-by: iRakson <raksonrakesh@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit b20754d) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…neHiveTablePartitions ### What changes were proposed in this pull request? Add class document for PruneFileSourcePartitions and PruneHiveTablePartitions. ### Why are the changes needed? To describe these two classes. ### Does this PR introduce any user-facing change? no ### How was this patch tested? no Closes apache#27535 from fuwhu/SPARK-15616-FOLLOW-UP. Authored-by: fuwhu <bestwwg@163.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit f1d0dce) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request? Exclude hive-service-rpc from build. ### Why are the changes needed? hive-service-rpc 2.3.6 and spark sql's thrift server module have duplicate classes. Leaving hive-service-rpc 2.3.6 in the class path means that spark can pick up classes defined in hive instead of its thrift server module, which can cause hard to debug runtime errors due to class loading order and compilation errors for applications depend on spark. If you compare hive-service-rpc 2.3.6's jar (https://search.maven.org/remotecontent?filepath=org/apache/hive/hive-service-rpc/2.3.6/hive-service-rpc-2.3.6.jar) and spark thrift server's jar (e.g. https://repository.apache.org/content/groups/snapshots/org/apache/spark/spark-hive-thriftserver_2.12/3.0.0-SNAPSHOT/spark-hive-thriftserver_2.12-3.0.0-20200207.021914-364.jar), you will see that all of classes provided by hive-service-rpc-2.3.6.jar are covered by spark thrift server's jar. https://issues.apache.org/jira/browse/SPARK-30783 has output of jar tf for both jars. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Existing tests. Closes apache#27533 from yhuai/SPARK-30783. Authored-by: Yin Huai <yhuai@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit ea626b6) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…sql.defaultUrlStreamHandlerFactory.enabled' documentation ### What changes were proposed in this pull request? This PR adds some more information and context to `spark.sql.defaultUrlStreamHandlerFactory.enabled`. ### Why are the changes needed? It is a bit difficult to understand the documentation of `spark.sql.defaultUrlStreamHandlerFactory.enabled`. ### Does this PR introduce any user-facing change? Nope, internal doc only fix. ### How was this patch tested? Nope. I only tested linter. Closes apache#27541 from HyukjinKwon/SPARK-29462-followup. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 99bd59f) Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…ions ### What changes were proposed in this pull request? This is a small follow-up for apache#27400. This PR makes an empty `LocalTableScanExec` return an `RDD` without partitions. ### Why are the changes needed? It is a bit unexpected that the RDD contains partitions if there is not work to do. It also can save a bit of work when this is used in a more complex plan. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Added test to `SparkPlanSuite`. Closes apache#27530 from hvanhovell/SPARK-30780. Authored-by: herman <herman@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit b25359c) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

…Python type hints ### What changes were proposed in this pull request? This PR targets to document the Pandas UDF redesign with type hints introduced at SPARK-28264. Mostly self-describing; however, there are few things to note for reviewers. 1. This PR replace the existing documentation of pandas UDFs to the newer redesign to promote the Python type hints. I added some words that Spark 3.0 still keeps the compatibility though. 2. This PR proposes to name non-pandas UDFs as "Pandas Function API" 3. SCALAR_ITER become two separate sections to reduce confusion: - `Iterator[pd.Series]` -> `Iterator[pd.Series]` - `Iterator[Tuple[pd.Series, ...]]` -> `Iterator[pd.Series]` 4. I removed some examples that look overkill to me. 5. I also removed some information in the doc, that seems duplicating or too much. ### Why are the changes needed? To document new redesign in pandas UDF. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing tests should cover. Closes apache#27466 from HyukjinKwon/SPARK-30722. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit aa6a605) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

…is not static" ### What changes were proposed in this pull request? This reverts commit 8ce7962. There are variable name conflicts with apache@8aebc80#diff-39298b470865a4cbc67398a4ea11e767. This can be cleanly ported back to branch-3.0. ### Why are the changes needed? The performance investigation was insufficient, and it is not clear whether the change is really beneficial. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Jenkins tests. Closes apache#27514 from HyukjinKwon/revert-cache-PR. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: Xiao Li <gatorsmile@gmail.com>

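… `like` function ### What changes were proposed in this pull request? In the PR, I propose to revert the commit 8aebc80. ### Why are the changes needed? See the concerns apache#27355 (comment) ### Does this PR introduce any user-facing change? No ### How was this patch tested? By existing test suites. Closes apache#27531 from MaxGekk/revert-like-3-args. Authored-by: Maxim Gekk <max.gekk@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com>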

…at escapes like Scala's StringContext.s()

### What changes were proposed in this pull request?

This PR proposes to make the `code` string interpolator treat escapes the same way as Scala's builtin `StringContext.s()` string interpolator. This will remove the need for an ugly workaround in `Like` expression's codegen.

### Why are the changes needed?

The `code()` string interpolator in Spark SQL's code generator should treat escapes like Scala's builtin `StringContext.s()` interpolator, i.e. it should treat escapes in the code parts, and should not treat escapes in the input arguments.

For example,

```scala
val arg = "This is an argument."
val str = s"This is string part 1. $arg This is string part 2."
val code = code"This is string part 1. $arg This is string part 2."
assert(code.toString == str)
```

We should expect the `code()` interpolator to produce the same result as the `StringContext.s()` interpolator, where only escapes in the string parts should be treated, while the args should be kept verbatim.
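
The intended behavior can be sketched without Spark at all. The helper below is hypothetical (it is not the `code` interpolator's implementation); it only shows escapes being processed in the literal parts while the arguments are appended verbatim, using the standard library's `StringContext.processEscapes`.

```scala
object EscapeSketch {
  // Hypothetical helper: `parts` are the raw literal pieces, `args` the interpolated values.
  def interpolate(parts: Seq[String], args: Seq[Any]): String = {
    require(parts.length == args.length + 1, "need exactly one more part than args")
    val sb = new StringBuilder()
    sb.append(StringContext.processEscapes(parts.head))
    args.zip(parts.tail).foreach { case (arg, part) =>
      sb.append(arg.toString)                       // args are kept verbatim
      sb.append(StringContext.processEscapes(part)) // escapes are treated in parts only
    }
    sb.toString
  }
}
```

Here a raw part containing a backslash followed by `t` becomes a tab, whereas an argument containing a backslash followed by `n` keeps both characters.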

But in the current implementation, due to the eager folding of code parts and literal input args, the escape treatment is incorrectly done on both code parts and literal args.

That causes a problem when an arg contains escape sequences and wants to preserve that in the final produced code string. For example, in `Like` expression's codegen, there's an ugly workaround for this bug:

```scala
// We need double escape to avoid org.codehaus.commons.compiler.CompileException.
// '\\' will cause exception 'Single quote must be backslash-escaped in character literal'.
// '\"' will cause exception 'Line break in literal not allowed'.
val newEscapeChar = if (escapeChar == '\"' || escapeChar == '\\') {
  s"""\\\\\\$escapeChar"""
} else {
  escapeChar
}
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added a new unit test case in `CodeBlockSuite`.

Closes apache#27544 from rednaxelafx/fix-code-string-interpolator.

Authored-by: Kris Mok <kris.mok@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

… tuning guide be consistent with that in SQLConf ### What changes were proposed in this pull request? This pr is a follow up of apache#26200. In this PR, I modify the description of spark.sql.files.* in sql-performance-tuning.md to keep consistent with that in SQLConf. ### Why are the changes needed? To keep consistent with the description in SQLConf. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existed UT. Closes apache#27545 from turboFei/SPARK-29542-follow-up. Authored-by: turbofei <fwang12@ebay.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 8b18397) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

… legacy date/timestamp formatters ### What changes were proposed in this pull request? In the PR, I propose to add legacy date/timestamp formatters based on `SimpleDateFormat` and `FastDateFormat`: - `LegacyFastTimestampFormatter` - uses `FastDateFormat` and supports parsing/formatting in microsecond precision. The code was borrowed from Spark 2.4, see apache#26507 & apache#26582 - `LegacySimpleTimestampFormatter` uses `SimpleDateFormat`, and support the `lenient` mode. When the `lenient` parameter is set to `false`, the parser become much stronger in checking its input. ### Why are the changes needed? Spark 2.4.x uses the following parsers for parsing/formatting date/timestamp strings: - `DateTimeFormat` in CSV/JSON datasource - `SimpleDateFormat` - is used in JDBC datasource, in partitions parsing. - `SimpleDateFormat` in strong mode (`lenient = false`), see https://github.com/apache/spark/blob/branch-2.4/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala#L124. It is used by the `date_format`, `from_unixtime`, `unix_timestamp` and `to_unix_timestamp` functions. The PR aims to make Spark 3.0 compatible with Spark 2.4.x in all those cases when `spark.sql.legacy.timeParser.enabled` is set to `true`. ### Does this PR introduce any user-facing change? This shouldn't change behavior with default settings. If `spark.sql.legacy.timeParser.enabled` is set to `true`, users should observe behavior of Spark 2.4. ### How was this patch tested? - Modified tests in `DateExpressionsSuite` to check the legacy parser - `SimpleDateFormat`. - Added `CSVLegacyTimeParserSuite` and `JsonLegacyTimeParserSuite` to run `CSVSuite` and `JsonSuite` with the legacy parser - `FastDateFormat`. Closes apache#27524 from MaxGekk/timestamp-formatter-legacy-fallback. Authored-by: Maxim Gekk <max.gekk@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit c198620) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
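
The effect of the `lenient` flag can be seen with plain `java.text.SimpleDateFormat`, independent of Spark. The sketch below is illustrative only: `LenientSketch` and `parses` are hypothetical names, and the `yyyy-MM-dd` pattern is an assumption chosen for the example.

```scala
import java.text.SimpleDateFormat
import java.util.Locale
import scala.util.Try

object LenientSketch {
  // Returns true if the input parses as a yyyy-MM-dd date under the given leniency.
  def parses(input: String, lenient: Boolean): Boolean = {
    val format = new SimpleDateFormat("yyyy-MM-dd", Locale.US)
    format.setLenient(lenient)
    Try(format.parse(input)).isSuccess
  }
}
```

In lenient mode an out-of-range date such as `2020-02-30` is accepted and rolled over into March, while with `lenient = false` the same input is rejected with a `ParseException`.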

…n EXPLAIN FORMATTED

### What changes were proposed in this pull request?

Currently `EXPLAIN FORMATTED` only reports the input attributes of HashAggregate/ObjectHashAggregate/SortAggregate, while `EXPLAIN EXTENDED` provides more information such as Keys and Functions. This PR enhances `EXPLAIN FORMATTED` to match the original explain behavior.

### Why are the changes needed?

The newly added `EXPLAIN FORMATTED` provides less information than the original `EXPLAIN EXTENDED`.

### Does this PR introduce any user-facing change?

Yes, taking HashAggregate explain result as example.

**SQL**

```
EXPLAIN FORMATTED
  SELECT
    COUNT(val) + SUM(key) as TOTAL,
    COUNT(key) FILTER (WHERE val > 1)
  FROM explain_temp1;
```

**EXPLAIN EXTENDED**

```
== Physical Plan ==
*(2) HashAggregate(keys=[], functions=[count(val#6), sum(cast(key#5 as bigint)), count(key#5)], output=[TOTAL#62L, count(key) FILTER (WHERE (val > 1))#71L])
+- Exchange SinglePartition, true, [id=#89]
   +- HashAggregate(keys=[], functions=[partial_count(val#6), partial_sum(cast(key#5 as bigint)), partial_count(key#5) FILTER (WHERE (val#6 > 1))], output=[count#75L, sum#76L, count#77L])
      +- *(1) ColumnarToRow
         +- FileScan parquet default.explain_temp1[key#5,val#6] Batched: true, DataFilters: [], Format: Parquet, Location: InMemoryFileIndex[file:/Users/XXX/spark-dev/spark/spark-warehouse/explain_temp1], PartitionFilters: [], PushedFilters: [], ReadSchema: struct<key:int,val:int>
```

**EXPLAIN FORMATTED - BEFORE**

```
== Physical Plan ==
* HashAggregate (5)
+- Exchange (4)
   +- HashAggregate (3)
      +- * ColumnarToRow (2)
         +- Scan parquet default.explain_temp1 (1)

...
...
(5) HashAggregate [codegen id : 2]
Input: [count#91L, sum#92L, count#93L]
...
...
```

**EXPLAIN FORMATTED - AFTER**

```
== Physical Plan ==
* HashAggregate (5)
+- Exchange (4)
   +- HashAggregate (3)
      +- * ColumnarToRow (2)
         +- Scan parquet default.explain_temp1 (1)

...
...
(5) HashAggregate [codegen id : 2]
Input: [count#91L, sum#92L, count#93L]
Keys: []
Functions: [count(val#6), sum(cast(key#5 as bigint)), count(key#5)]
Results: [(count(val#6)#84L + sum(cast(key#5 as bigint))#85L) AS TOTAL#78L, count(key#5)#86L AS count(key) FILTER (WHERE (val > 1))#87L]
Output: [TOTAL#78L, count(key) FILTER (WHERE (val > 1))#87L]
...
...
```

### How was this patch tested?

Three tests added in explain.sql for HashAggregate/ObjectHashAggregate/SortAggregate.

Closes apache#27368 from Eric5553/ExplainFormattedAgg.

Authored-by: Eric Wu <492960551@qq.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit 5919bd3)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…ime API ### What changes were proposed in this pull request? In the PR, I propose to rewrite the `millisToDays` and `daysToMillis` of `DateTimeUtils` using Java 8 time API. I removed `getOffsetFromLocalMillis` from `DateTimeUtils` because it is a private method and is no longer used in Spark SQL. ### Why are the changes needed? The new implementation is based on the Proleptic Gregorian calendar, which is already used by other date-time functions. These changes make `millisToDays` and `daysToMillis` consistent with the rest of the Spark SQL API related to date & time operations. ### Does this PR introduce any user-facing change? Yes, this might affect behavior for old dates before the year 1582. ### How was this patch tested? By existing test suites `DateTimeUtilsSuite`, `DateFunctionsSuite`, `DateExpressionsSuite`, `SQLQuerySuite` and `HiveResultSuite`. Closes apache#27494 from MaxGekk/millis-2-days-java8-api. Authored-by: Maxim Gekk <max.gekk@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit aa0d136) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
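
A conversion of this kind can be written with `java.time` alone. The following is a minimal sketch under the assumption that the zone is passed in explicitly; it is not the actual `DateTimeUtils` code, and `DaysSketch` is a hypothetical name.

```scala
import java.time.{Instant, LocalDate, ZoneId, ZoneOffset}

object DaysSketch {
  // Milliseconds since the epoch -> days since the epoch, as seen in the given zone.
  def millisToDays(millis: Long, zoneId: ZoneId): Int = {
    val localDate = Instant.ofEpochMilli(millis).atZone(zoneId).toLocalDate
    Math.toIntExact(localDate.toEpochDay)
  }

  // Days since the epoch -> milliseconds at the start of that day in the given zone.
  def daysToMillis(days: Int, zoneId: ZoneId): Long =
    LocalDate.ofEpochDay(days.toLong).atStartOfDay(zoneId).toInstant.toEpochMilli
}
```

Because `java.time` uses the ISO (Proleptic Gregorian) calendar system, conversions written this way agree with other `java.time`-based date functions.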

…ntries when setting up ACLs in truncate table ### What changes were proposed in this pull request? This is a follow-up to the PR apache#26956. In apache#26956, the patch proposed to preserve path permission when truncating table. When setting up original ACLs, we need to set user/group/other permission as ACL entries too, otherwise if the path doesn't have default user/group/other ACL entries, the ACL API will fail with the error `Invalid ACL: the user, group and other entries are required.`. In short this change makes sure: 1. Permissions for user/group/other are always kept into ACLs to work with ACL API. 2. Other custom ACLs are still kept after TRUNCATE TABLE (apache#26956 did this). ### Why are the changes needed? Without this fix, `TRUNCATE TABLE` will get an error when setting up ACLs if there are no default user/group/other ACL entries. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Update unit test. Manual test on dev Spark cluster. Set ACLs for a table path without default user/group/other ACL entries: ``` hdfs dfs -setfacl --set 'user:liangchi:rwx,user::rwx,group::r--,other::r--' /user/hive/warehouse/test.db/test_truncate_table hdfs dfs -getfacl /user/hive/warehouse/test.db/test_truncate_table # file: /user/hive/warehouse/test.db/test_truncate_table # owner: liangchi # group: supergroup user::rwx user:liangchi:rwx group::r-- mask::rwx other::r-- ``` Then run `sql("truncate table test.test_truncate_table")`; it truncates the table normally and preserves the ACLs. Closes apache#27548 from viirya/fix-truncate-table-permission. Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com> Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 5b76367) Signed-off-by: Dongjoon Hyun <dhyun@apple.com>
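
The idea of always including the base user/group/other entries can be shown with a dependency-free model. This sketch does not use the Hadoop ACL API: `AclSketch` and its methods are hypothetical, and ACL entries are represented as plain strings in the `setfacl` notation used above.

```scala
object AclSketch {
  // Turns a 9-character permission string such as "rwxr--r--" into the three base entries.
  def baseEntries(permission: String): Seq[String] = {
    require(permission.length == 9, "expected a 9-character rwx string")
    Seq("user", "group", "other").zip(permission.grouped(3).toSeq).map {
      case (scope, bits) => s"$scope::$bits"
    }
  }

  // Base entries always come first, followed by any custom (named) entries.
  def withBaseEntries(permission: String, customEntries: Seq[String]): Seq[String] =
    baseEntries(permission) ++ customEntries
}
```

For the path in the manual test, `withBaseEntries("rwxr--r--", Seq("user:liangchi:rwx"))` produces `user::rwx`, `group::r--`, `other::r--` plus the custom `user:liangchi:rwx` entry.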

### What changes were proposed in this pull request?

`spark.sql("select map()")` returns {}.

After these changes it will return map<null,null>

### Why are the changes needed?

After changes introduced due to apache#27521, it is important to maintain consistency while using map().

### Does this PR introduce any user-facing change?

Yes. Now map() will give map<null,null> instead of {}.

### How was this patch tested?

UT added. Migration guide updated as well

Closes apache#27542 from iRakson/SPARK-30790.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit 926e3a1)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…mage ### What changes were proposed in this pull request? This PR aims to replace JDK to JRE in K8S integration test docker images. ### Why are the changes needed? This will save some resources and make it sure that we only need JRE at runtime and testing. - https://lists.apache.org/thread.html/3145150b711d7806a86bcd3ab43e18bcd0e4892ab5f11600689ba087%40%3Cdev.spark.apache.org%3E ### Does this PR introduce any user-facing change? No. This is a dev-only test environment. ### How was this patch tested? Pass the Jenkins K8s Integration Test. - apache#27469 (comment) Closes apache#27469 from dongjoon-hyun/SPARK-30743. Authored-by: Dongjoon Hyun <dhyun@apple.com> Signed-off-by: Dongjoon Hyun <dhyun@apple.com> (cherry picked from commit 9d907bc) Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…ns with CoalesceShufflePartitions in comment ### What changes were proposed in this pull request? Replace legacy `ReduceNumShufflePartitions` with `CoalesceShufflePartitions` in comment. ### Why are the changes needed? Rule `ReduceNumShufflePartitions` has been renamed to `CoalesceShufflePartitions`, so we should update the related comment as well. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? N/A. Closes apache#27865 from Ngone51/spark_31037_followup. Authored-by: yi.wu <yi.wu@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 34be83e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

### Why are the changes needed?

Based on [JIRA 30962](https://issues.apache.org/jira/browse/SPARK-30962), we want to add all the supported `ALTER TABLE` syntax for V1 tables.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

Before: the documentation looks like [Alter Table](apache#25590)

After:

<img width="850" alt="Screen Shot 2020-03-03 at 2 02 23 PM" src="https://user-images.githubusercontent.com/7550280/75824837-168c7e00-5d59-11ea-9751-d1dab0f5a892.png">
<img width="977" alt="Screen Shot 2020-03-03 at 2 02 41 PM" src="https://user-images.githubusercontent.com/7550280/75824859-21dfa980-5d59-11ea-8b49-3adf6eb55fc6.png">
<img width="1028" alt="Screen Shot 2020-03-03 at 2 02 59 PM" src="https://user-images.githubusercontent.com/7550280/75824884-2e640200-5d59-11ea-81ef-d77d0a8efee2.png">
<img width="864" alt="Screen Shot 2020-03-03 at 2 03 14 PM" src="https://user-images.githubusercontent.com/7550280/75824910-39b72d80-5d59-11ea-84d0-bffa2499f086.png">
<img width="823" alt="Screen Shot 2020-03-03 at 2 03 28 PM" src="https://user-images.githubusercontent.com/7550280/75824937-45a2ef80-5d59-11ea-932c-314924856834.png">
<img width="811" alt="Screen Shot 2020-03-03 at 2 03 42 PM" src="https://user-images.githubusercontent.com/7550280/75824965-4cc9fd80-5d59-11ea-815b-8c1ebad310b1.png">
<img width="827" alt="Screen Shot 2020-03-03 at 2 03 53 PM" src="https://user-images.githubusercontent.com/7550280/75824978-518eb180-5d59-11ea-8a55-2fa26376b9c1.png">
<img width="783" alt="Screen Shot 2020-03-03 at 2 04 03 PM" src="https://user-images.githubusercontent.com/7550280/75825001-5bb0b000-5d59-11ea-8dd9-dcfbfa1b4330.png">

Notes: the following syntaxes are not supported by V1 tables.

- `ALTER TABLE .. RENAME COLUMN`
- `ALTER TABLE ... DROP (COLUMN | COLUMNS)`
- `ALTER TABLE ... (ALTER | CHANGE) COLUMN? alterColumnAction` only supports changing comments, not other actions: `datatype, position, (SET | DROP) NOT NULL`
- `ALTER TABLE .. CHANGE COLUMN?`
- `ALTER TABLE .... REPLACE COLUMNS`
- `ALTER TABLE ... RECOVER PARTITIONS`

Closes apache#27779 from kevinyu98/spark-30962-alterT. Authored-by: Qianyang Yu <qyu@us.ibm.com> Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> (cherry picked from commit 0f54dc7) Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR aims to bring the bug fixes from the latest netty-all.

### Why are the changes needed?

- 4.1.47.Final: https://github.com/netty/netty/milestone/222?closed=1 (15 patches or issues)
- 4.1.46.Final: https://github.com/netty/netty/milestone/221?closed=1 (80 patches or issues)
- 4.1.45.Final: https://github.com/netty/netty/milestone/220?closed=1 (23 patches or issues)
- 4.1.44.Final: https://github.com/netty/netty/milestone/218?closed=1 (113 patches or issues)
- 4.1.43.Final: https://github.com/netty/netty/milestone/217?closed=1 (63 patches or issues)

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the existing tests.

Closes apache#27869 from dongjoon-hyun/SPARK-31095. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 93def95) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

There are two problems when splitting skewed partitions:

1. It's possible that we can't split the skewed partition, in which case we shouldn't create a skew join.
2. When splitting, it's possible that we create a partition for a very small amount of data.

This PR fixes them:

1. Don't create `PartialReducerPartitionSpec` if we can't split.
2. Merge small partitions into the previous partition.

### Why are the changes needed?

Make skew join split skewed partitions more evenly.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Updated test.

Closes apache#27833 from cloud-fan/aqe. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: gatorsmile <gatorsmile@gmail.com> (cherry picked from commit d5f5720) Signed-off-by: gatorsmile <gatorsmile@gmail.com>
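The two fixes in this commit message can be illustrated with a small sketch. This is not the code from the PR: it is written in Python rather than Scala, and `split_skewed`, `plan_skew_split` and the `small_fraction` threshold are hypothetical names and values chosen only to show the idea. It also simplifies by merging only a small trailing chunk.

```python
def split_skewed(map_sizes, target_size, small_fraction=0.2):
    """Group consecutive map-output sizes into chunks of roughly target_size."""
    chunks, current = [], []
    for size in map_sizes:
        # Start a new chunk when adding this output would exceed the target.
        if current and sum(current) + size > target_size:
            chunks.append(current)
            current = []
        current.append(size)
    if current:
        # Fix 2: fold a very small trailing chunk into the previous one.
        if chunks and sum(current) < small_fraction * target_size:
            chunks[-1].extend(current)
        else:
            chunks.append(current)
    return chunks


def plan_skew_split(map_sizes, target_size):
    """Return the chunks, or None when the partition cannot be split."""
    chunks = split_skewed(map_sizes, target_size)
    # Fix 1: a single chunk means nothing was split, so no skew join is planned.
    return chunks if len(chunks) > 1 else None


print(plan_skew_split([50, 50, 50, 50, 5], 100))  # [[50, 50], [50, 50, 5]]
print(plan_skew_split([60, 5], 100))              # None
```

In the first call the trailing 5-unit chunk is merged into its neighbour instead of becoming a tiny partition of its own; in the second call nothing can be split, so no plan is produced.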

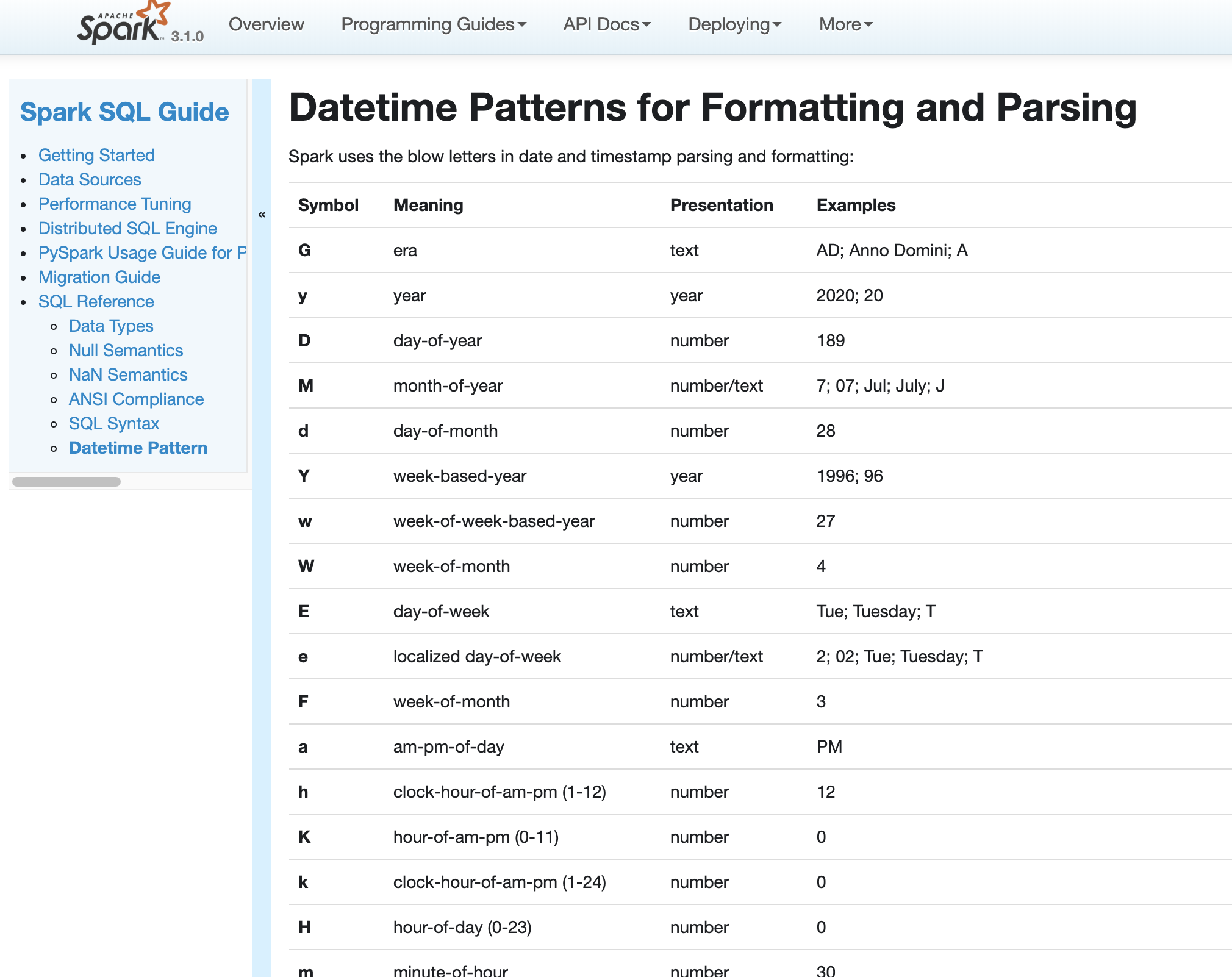

…Datetime

### What changes were proposed in this pull request?

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian). Since the Proleptic Gregorian calendar is the de facto calendar worldwide, as well as the one chosen in the ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages that are based on ISO chronology). The switch was completed in SPARK-26651.

After the switch, however, some patterns are not compatible between Java 8 and Java 7, so Spark needs its own definition of the patterns rather than depending on the Java API. In this PR, we achieve this by writing the document and shadowing the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030).

### Why are the changes needed?

For backward compatibility.

### Does this PR introduce any user-facing change?

No. After we define our own datetime parsing and formatting patterns, the behavior is the same as in old Spark versions.

### How was this patch tested?

Existing and newly added UT. Locally document test:

Closes apache#27830 from xuanyuanking/SPARK-31030. Authored-by: Yuanjian Li <xyliyuanjian@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 3493162) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fix the error caused by interval output in ExtractBenchmark.

### Why are the changes needed?

Fix a bug in the test:

```scala
[info] Running case: cast to interval
[error] Exception in thread "main" org.apache.spark.sql.AnalysisException: Cannot use interval type in the table schema.;;
[error] OverwriteByExpression RelationV2[] noop-table, true, true
[error] +- Project [(subtractdates(cast(cast(id#0L as timestamp) as date), -719162) + subtracttimestamps(cast(id#0L as timestamp), -30610249419876544)) AS ((CAST(CAST(id AS TIMESTAMP) AS DATE) - DATE '0001-01-01') + (CAST(id AS TIMESTAMP) - TIMESTAMP '1000-01-01 01:02:03.123456'))#2]
[error]    +- Range (1262304000, 1272304000, step=1, splits=Some(1))
[error]
[error] at org.apache.spark.sql.catalyst.util.TypeUtils$.failWithIntervalType(TypeUtils.scala:106)
[error] at org.apache.spark.sql.catalyst.analysis.CheckAnalysis.$anonfun$checkAnalysis$25(CheckAnalysis.scala:389)
[error] at org.a
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Re-run benchmark.

Closes apache#27867 from yaooqinn/SPARK-31111. Authored-by: Kent Yao <yaooqinn@hotmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 2b46662) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…estamp via local date-time

In the PR, I propose to change conversion of java.sql.Timestamp/Date values to/from internal values of Catalyst's TimestampType/DateType before cutover day `1582-10-15` of Gregorian calendar. I propose to construct local date-time from microseconds/days since the epoch. Take each date-time component `year`, `month`, `day`, `hour`, `minute`, `second` and `second fraction`, and construct java.sql.Timestamp/Date using the extracted components.

This will rebase underlying time/date offset in the way that collected java.sql.Timestamp/Date values will have the same local time-date component as the original values in Gregorian calendar.

Here is the example which demonstrates the issue:

```scala
scala> sql("select date '1100-10-10'").collect()
res1: Array[org.apache.spark.sql.Row] = Array([1100-10-03])
```

Yes, after the changes:

```scala
scala> sql("select date '1100-10-10'").collect()
res0: Array[org.apache.spark.sql.Row] = Array([1100-10-10])
```
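The 7-day shift in the two outputs above can be reproduced outside Spark. The following is only a sketch in Python, not the Scala/Java code of this PR: it assumes the shift comes from reading a Proleptic Gregorian day count with a hybrid Julian + Gregorian calendar, and it uses the standard Julian Day Number formulas. The names `hybrid_label` and `rebase_days` are hypothetical.

```python
from datetime import date

EPOCH_ORDINAL = date(1970, 1, 1).toordinal()
JDN_OFFSET = 1721425  # Julian Day Number = proleptic Gregorian ordinal + offset
CUTOVER_DAYS = date(1582, 10, 15).toordinal() - EPOCH_ORDINAL


def julian_fields(days):
    """Year, month, day in the Julian calendar for a day count since the epoch."""
    jdn = days + EPOCH_ORDINAL + JDN_OFFSET
    e = 4 * (jdn + 1401) + 3
    h = 5 * ((e % 1461) // 4) + 2
    day = (h % 153) // 5 + 1
    month = (h // 153 + 2) % 12 + 1
    year = e // 1461 - 4716 + (14 - month) // 12
    return year, month, day


def julian_to_days(year, month, day):
    """Days since the epoch for a date given in the Julian calendar."""
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    jdn = day + (153 * m + 2) // 5 + 365 * y + y // 4 - 32083
    return jdn - JDN_OFFSET - EPOCH_ORDINAL


def hybrid_label(days):
    """How a hybrid calendar (Julian before 1582-10-15) displays a day count."""
    if days >= CUTOVER_DAYS:
        y, m, d = date.fromordinal(days + EPOCH_ORDINAL).timetuple()[:3]
    else:
        y, m, d = julian_fields(days)
    return f"{y:04d}-{m:02d}-{d:02d}"


def rebase_days(days):
    """Keep the same year/month/day when moving to the hybrid calendar."""
    if days >= CUTOVER_DAYS:
        return days
    g = date.fromordinal(days + EPOCH_ORDINAL)  # Proleptic Gregorian components
    return julian_to_days(g.year, g.month, g.day)


days = date(1100, 10, 10).toordinal() - EPOCH_ORDINAL
print(hybrid_label(days))               # 1100-10-03 (before the change)
print(hybrid_label(rebase_days(days)))  # 1100-10-10 (after the change)
```

Rebasing moves the underlying offset by 7 days for this date, which is what keeps the displayed local date equal to the original one.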

By running `DateTimeUtilsSuite`, `DateFunctionsSuite` and `DateExpressionsSuite`.

Closes apache#27807 from MaxGekk/rebase-timestamp-before-1582.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit 3d3e366)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`DateTimeUtilsSuite.daysToMicros and microsToDays` takes 30 seconds, which is too long for a UT. This PR changes the test to check random data, to reduce testing time. Now this test takes 1 second.

### Why are the changes needed?

Make the test faster.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes apache#27873 from cloud-fan/test. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 5be0d04) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…efault ### What changes were proposed in this pull request? This PR reverts apache#26051 and apache#26066 ### Why are the changes needed? There is no standard requiring that `size(null)` must return null, and returning -1 looks reasonable as well. This is kind of a cosmetic change and we should avoid it if it breaks existing queries. This is similar to reverting TRIM function parameter order change. ### Does this PR introduce any user-facing change? Yes, change the behavior of `size(null)` back to be the same as 2.4. ### How was this patch tested? N/A Closes apache#27834 from cloud-fan/revert. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 8efb710) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request? A few improvements to the sql ref SELECT doc: 1. correct the syntax of SELECT query 2. correct the default of null sort order 3. correct the GROUP BY syntax 4. several minor fixes ### Why are the changes needed? refine document ### Does this PR introduce any user-facing change? N/A ### How was this patch tested? N/A Closes apache#27866 from cloud-fan/doc. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 0f0ccda) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request? This PR (SPARK-31126) aims to upgrade Kafka library to bring a client-side bug fix like KAFKA-8933 ### Why are the changes needed? The following is the full release note. - https://downloads.apache.org/kafka/2.4.1/RELEASE_NOTES.html ### Does this PR introduce any user-facing change? No ### How was this patch tested? Pass the Jenkins with the existing test. Closes apache#27881 from dongjoon-hyun/SPARK-KAFKA-2.4.1. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 614323d) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…essionState initialization parts

### What changes were proposed in this pull request?

As a common usage and according to the Spark docs, users often just copy their `hive-site.xml` to Spark directly from Hive projects. Sometimes the config file is not that clean for Spark and may cause side effects. For example, `hive.session.history.enabled` creates a log for Hive jobs that is useless for Spark and is not deleted on JVM exit.

This PR:

1. Disables `hive.session.history.enabled` explicitly to stop creating the `hive_job_log` file, e.g.

   ```
   Hive history file=/var/folders/01/h81cs4sn3dq2dd_k4j6fhrmc0000gn/T//kentyao/hive_job_log_79c63b29-95a4-4935-a9eb-2d89844dfe4f_493861201.txt
   ```

2. Sets `hive.execution.engine` to `spark` explicitly, in case the config is `tez` and causes unnecessary problems like this:

   ```
   Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/tez/dag/api/SessionNotRunning at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:529)
   ```

### Why are the changes needed?

Reduce the overhead of internal complexity and the Hive-related cognitive load on users running Spark.

### Does this PR introduce any user-facing change?

Yes, the `hive_job_log` file will not be created even if enabled, and Spark will not try to initialize Tez-related classes.

### How was this patch tested?

Added UT and verified manually.

Closes apache#27827 from yaooqinn/SPARK-31066. Authored-by: Kent Yao <yaooqinn@hotmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 18f2730) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

…error message

### What changes were proposed in this pull request?

Add an example for typed Scala UDF to the error message.

### Why are the changes needed?

Help users learn how to migrate to typed Scala UDF.

### Does this PR introduce any user-facing change?

No, it's a new error message in Spark 3.0.

### How was this patch tested?

Pass Jenkins.

Closes apache#27884 from Ngone51/spark_31010_followup. Authored-by: yi.wu <yi.wu@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

AQE has a perf regression when using the default settings: if we coalesce the shuffle partitions into one or a few partitions, we may leave many CPU cores idle and the perf is worse than with AQE off (which leverages all CPU cores).

Technically, this is not a bad thing. If there are many queries running at the same time, it's better to coalesce shuffle partitions into fewer partitions. However, the default settings of AQE should avoid perf regressions as much as possible.

This PR changes the default value of minPartitionNum when coalescing shuffle partitions to be `SparkContext.defaultParallelism`, so that AQE can leverage all the CPU cores.

### Why are the changes needed?

Avoid AQE perf regression.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes apache#27879 from cloud-fan/aqe. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 77c49cb) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
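The effect of a minimum partition number on coalescing can be shown with a toy model. This is a simplified Python sketch, not Spark's actual coalescing rule: `coalesce`, `advisory_size` and the core count of 8 are hypothetical, and the real implementation may compute its target size differently.

```python
import math


def coalesce(partition_sizes, advisory_size, min_partition_num):
    """Greedily merge adjacent shuffle partitions up to an effective target size."""
    total = sum(partition_sizes)
    # Cap the target so that roughly min_partition_num partitions remain.
    target = min(advisory_size, max(1, math.ceil(total / min_partition_num)))
    groups, current = [], 0
    for size in partition_sizes:
        # Close the current group when the next partition would overflow it.
        if current > 0 and current + size > target:
            groups.append(current)
            current = 0
        current += size
    if current > 0:
        groups.append(current)
    return groups


sizes = [1] * 200  # 200 tiny shuffle partitions

# With a minimum of 1, everything collapses into one partition: one busy core.
print(len(coalesce(sizes, advisory_size=1000, min_partition_num=1)))  # 1

# With the minimum set to an assumed default parallelism of 8, all 8 cores get work.
print(len(coalesce(sizes, advisory_size=1000, min_partition_num=8)))  # 8
```

The trade-off described above is visible here: fewer, larger partitions suit a busy cluster, while a minimum tied to the available parallelism avoids idle cores for a single query.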

### What changes were proposed in this pull request?

This PR (SPARK-31130) aims to pin the `Commons IO` version to `2.4` in the SBT build, like the Maven build.

### Why are the changes needed?

[HADOOP-15261](https://issues.apache.org/jira/browse/HADOOP-15261) upgraded `commons-io` from 2.4 to 2.5 at Apache Hadoop 3.1. In `Maven`, Apache Spark always uses `Commons IO 2.4` based on `pom.xml`.

```
$ git grep commons-io.version
pom.xml: <commons-io.version>2.4</commons-io.version>
pom.xml: <version>${commons-io.version}</version>
```

However, `SBT` chooses `2.5`.

**branch-3.0**

```
$ build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1
[info] | | +-commons-io:commons-io:2.5
```

**branch-2.4**

```
$ build/sbt -Phadoop-3.1 "core/dependencyTree" | grep commons-io:commons-io | head -n1
[info] | | +-commons-io:commons-io:2.5
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with `[test-hadoop3.2]` (the default PR Builder is `SBT`) and manually do the following locally.

```
build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1
```

Closes apache#27886 from dongjoon-hyun/SPARK-31130. Authored-by: Dongjoon Hyun <dongjoon@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 972e23d) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…eParser.enabled

### What changes were proposed in this pull request?

`spark.sql.legacy.timeParser.enabled` should be removed from SQLConf and the migration guide; `spark.sql.legacy.timeParsePolicy` is the right one.

### Why are the changes needed?

Fix doc.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Pass the Jenkins.

Closes apache#27889 from yaooqinn/SPARK-31131. Authored-by: Kent Yao <yaooqinn@hotmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 7b4b29e) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to recover `IntervalBenchmark` and `DateTimeBenchmark`, which broke after intervals were banned as output.

### Why are the changes needed?

This PR recovers the benchmark suite.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually, re-run the benchmark.

Closes apache#27885 from yaooqinn/SPARK-31111-2. Authored-by: Kent Yao <yaooqinn@hotmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit fbc9dc7) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…f the operands" This reverts commit 47d6e80. There is no standard requiring that `div` must return the type of the operand, and always returning long type looks fine. This is kind of a cosmetic change and we should avoid it if it breaks existing queries. This is similar to reverting TRIM function parameter order change. Yes, change the behavior of `div` back to be the same as 2.4. N/A Closes apache#27835 from cloud-fan/revert2. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit b27b3c9) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request? Since we reverted the original change in apache#27540, this PR is to remove the corresponding migration guide made in the commit apache#24948 ### Why are the changes needed? N/A ### Does this PR introduce any user-facing change? N/A ### How was this patch tested? N/A Closes apache#27896 from gatorsmile/SPARK-28093Followup. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 1c8526d) Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

There is a minor issue in apache#26201. On the streaming statistics page, the following error appears in the console after clicking the timeline graph:

```
streaming-page.js:211 Uncaught TypeError: Cannot read property 'top' of undefined
    at SVGCircleElement.<anonymous> (streaming-page.js:211)
    at SVGCircleElement.__onclick (d3.min.js:1)
```

This PR is to fix it.

### Why are the changes needed?

Fix the JavaScript execution error.

### Does this PR introduce any user-facing change?

No, the error shows up in the console.

### How was this patch tested?

Manual test.

Closes apache#27883 from gengliangwang/fixSelector. Authored-by: Gengliang Wang <gengliang.wang@databricks.com> Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com> (cherry picked from commit 0f46325) Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

…tead of empty timelines and histograms

### What changes were proposed in this pull request?

`StreamingQueryStatisticsPage` shows a message "No visualization information available because there is no batches" instead of showing empty timelines and histograms for empty streaming queries.

[Before this change applied]

[After this change applied]

### Why are the changes needed?

Empty charts are ugly and a little bit confusing. It's better to clearly say "No visualization information available". Also, this change fixes a JS error shown in the capture above. This error occurs because `drawTimeline` in `streaming-page.js` is called even though `formattedDate` will be `undefined` for empty streaming queries.

### Does this PR introduce any user-facing change?

Yes. Screen captures are shown above.

### How was this patch tested?

Manually tested by creating an empty streaming query as follows.

```
val df = spark.readStream.format("socket").options(Map("host"->"<non-existing hostname>", "port"->"...")).load
df.writeStream.format("console").start
```

This streaming query will fail because of the `non-existing hostname` and has no batches.

Closes apache#27755 from sarutak/fix-for-empty-batches. Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com> Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com> (cherry picked from commit 6809815) Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

…QueryExecutionListener

### What changes were proposed in this pull request?

This PR manually reverts the changes in apache#25292 and then wraps `java.lang.Error` in `QueryExecutionException` so that it can be passed to `QueryExecutionListener.onFailure`, which only accepts `Exception`.

The bug fix PR for 2.4 is apache#27904. It needs a separate PR because the touched code has changed a lot.

### Why are the changes needed?

Avoid API changes and fix a bug.

### Does this PR introduce any user-facing change?

Yes. It reverts an API change made in 3.0, so the QueryExecutionListener APIs will be the same as in 2.4.

### How was this patch tested?

The newly added test.

Closes apache#27907 from zsxwing/SPARK-31144. Authored-by: Shixiong Zhu <zsxwing@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 1ddf44d) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Before: (three screenshots)

After: (three screenshots)

### Why are the changes needed?

To render the code block properly in the documentation.

### Does this PR introduce any user-facing change?

Yes, code rendering in documentation.

### How was this patch tested?

Manually built the doc via `SKIP_API=1 jekyll build`.

Closes apache#27899 from HyukjinKwon/minor-docss. Authored-by: HyukjinKwon <gurwls223@apache.org> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit 9628aca) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request? The current migration guide of SQL is too long for most readers to find the needed info. This PR is to group the items in the migration guide of Spark SQL based on the corresponding components. Note. This PR does not change the contents of the migration guides. Attached figure is the screenshot after the change.  ### Why are the changes needed? The current migration guide of SQL is too long for most readers to find the needed info. ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A Closes apache#27909 from gatorsmile/migrationGuideReorg. Authored-by: gatorsmile <gatorsmile@gmail.com> Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org> (cherry picked from commit 4d4c00c) Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

Backport of apache#27910 / 0ce5519.

---

This PR cleans up several failures -- most of them silent -- in `dev/lint-python`. I don't understand how we haven't been bitten by these yet. Perhaps we've been lucky?

Fixes include:

* Fix how we compare versions. All the version checks currently in `master` silently fail with:

  ```
  File "<string>", line 2
    print(LooseVersion("""2.3.1""") >= LooseVersion("""2.4.0"""))
    ^
  IndentationError: unexpected indent
  ```

  Another problem is that `distutils.version` is undocumented and unsupported.
* Fix some basic bugs. e.g. We have an incorrect reference to `$PYDOCSTYLEBUILD`, which doesn't exist, which was causing the doc style test to silently fail with:

  ```
  ./dev/lint-python: line 193: --version: command not found
  ```

* Stop suppressing error output! It's hiding problems and serves no purpose here.

`lint-python` is part of our CI build and is currently doing any combination of the following: silently failing; incorrectly skipping tests; incorrectly downloading libraries when a suitable library is already available.

Closes apache#27917 from nchammas/SPARK-31153-lint-python-branch-3.0. Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org>
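For illustration, here is one way to compare versions without `distutils.version`, plus a reproduction of the same kind of `IndentationError`. This is a sketch under assumptions, not the fix applied in `dev/lint-python`: `version_tuple` and `at_least` are hypothetical helpers that only handle plain dotted numeric versions, and the guess that the original failure came from an indented snippet is mine.

```python
import textwrap


def version_tuple(version, width=3):
    """Parse a plain dotted version such as '2.4.0' into a tuple of ints."""
    parts = [int(p) for p in version.split(".")]
    # Pad short versions so that '2.4' compares equal to '2.4.0'.
    return tuple(parts + [0] * (width - len(parts)))


def at_least(found, minimum):
    """True when the found version is greater than or equal to the minimum."""
    return version_tuple(found) >= version_tuple(minimum)


print(at_least("2.3.1", "2.4.0"))   # False
print(at_least("2.10.0", "2.4.0"))  # True; a plain string comparison gets this wrong

# An indented snippet fails to compile with the same message as quoted above.
snippet = """
    print("ok")
"""
try:
    compile(snippet, "<string>", "exec")
except IndentationError as err:
    print("IndentationError:", err.msg)  # IndentationError: unexpected indent

# Dedenting the snippet first makes it compile.
compile(textwrap.dedent(snippet), "<string>", "exec")
```

Letting the error surface, rather than suppressing stderr, is what makes a failure like this visible in CI.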

### What changes were proposed in this pull request?

Upgrade the `docker-client` version.

### Why are the changes needed?

The `docker-client` version that Spark uses is very old. Snippet from the project page:

```
Spotify no longer uses recent versions of this project internally. The version of docker-client we're using is whatever helios has in its pom.xml. => 8.14.1
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```
build/mvn install -DskipTests
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.DB2IntegrationSuite test
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.MsSqlServerIntegrationSuite test
build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.PostgresIntegrationSuite test
```

Closes apache#27892 from gaborgsomogyi/docker-client. Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com> Signed-off-by: Dongjoon Hyun <dongjoon@apache.org> (cherry picked from commit b0d2956) Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

It's not needed at all as now we replace "y" with "u" if there is no "G". So the era is either explicitly specified (e.g. "yyyy G") or can be inferred from the year (e.g. "uuuu").

### Why are the changes needed?

By default we use "uuuu" as the year pattern, which indicates the era already. If we set a default era, it can conflict and fail the parsing.

### Does this PR introduce any user-facing change?

Yes, now Spark can parse date/timestamp with negative year via the "yyyy" pattern, which will be converted to "uuuu" under the hood.

### How was this patch tested?

New tests.

Closes apache#27707 from cloud-fan/bug. Authored-by: Wenchen Fan <wenchen@databricks.com> Signed-off-by: HyukjinKwon <gurwls223@apache.org> (cherry picked from commit 50a2967) Signed-off-by: HyukjinKwon <gurwls223@apache.org>
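The "replace `y` with `u` if there is no `G`" rule can be sketched as a small string transformation. This is an illustrative Python version, not Spark's implementation: `convert_pattern` is a hypothetical name, and the handling of single-quoted literal text is an assumption about how such a rewrite would avoid touching literals.

```python
def convert_pattern(pattern):
    """Replace 'y' with 'u' outside quoted literals when no era letter 'G' is present."""
    segments = pattern.split("'")
    # Even-indexed segments hold pattern letters; odd-indexed ones are quoted literals.
    letters = "".join(segments[0::2])
    if "G" in letters:
        # The era is given explicitly, so 'y' (year-of-era) keeps its meaning.
        return pattern
    converted = [
        seg.replace("y", "u") if i % 2 == 0 else seg
        for i, seg in enumerate(segments)
    ]
    return "'".join(converted)


print(convert_pattern("yyyy-MM-dd"))   # uuuu-MM-dd
print(convert_pattern("yyyy G"))       # yyyy G
print(convert_pattern("yyyy 'year'"))  # uuuu 'year'
```

Because "u" is a signed year, the converted pattern carries the era implicitly, which is why a separately configured default era would be redundant.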

### What changes were proposed in this pull request? Move the code related to days rebasing from/to Julian calendar from `HiveInspectors` to new class `DaysWritable`. ### Why are the changes needed? To improve maintainability of the `HiveInspectors` trait which is already pretty complex. ### Does this PR introduce any user-facing change? No ### How was this patch tested? By `HiveOrcHadoopFsRelationSuite`. Closes apache#27890 from MaxGekk/replace-DateWritable-by-DaysWritable. Authored-by: Maxim Gekk <max.gekk@gmail.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit 57854c7) Signed-off-by: Wenchen Fan <wenchen@databricks.com>
