
dev: simplify #18


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,2 +1,3 @@ | ||

| .venv | ||

| ml-25m* | ||

| dev/ml-25m* |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,11 @@ | ||

| FROM python:3.10 | ||

| RUN apt-get update && apt-get install -y \ | ||

| python3-dev libpq-dev wget unzip \ | ||

| python3-setuptools gcc bc | ||

| RUN pip install --no-cache-dir poetry==1.1.13 | ||

| COPY . /app | ||

| WORKDIR /app | ||

| # For now while we are in heavy development we install the latest with Poetry | ||

| # and execute directly with Poetry. Later, we'll move to the released Pip package. | ||

| RUN poetry install -E preql -E mysql -E pgsql -E snowflake | ||

| ENTRYPOINT ["poetry", "run", "python3", "-m", "data_diff"] | ||


Contributor

Author

This avoids having to type out all the arguments

Collaborator

So this would work and

Contributor

Author

They'd both work. I've just been bitten many times by quoting issues with the string syntax, so I'm in this habit now. It's what they recommend too.

Collaborator

Did you test this so that it works? How are you providing it with the arguments like DB1_URI etc?

Contributor

Author

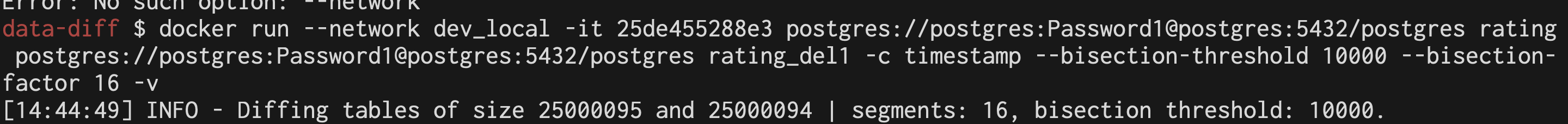
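As a rough illustration of the exec-form point in this thread, the Python sketch below models how Docker builds a container's argv. It encodes Docker's documented rules for `ENTRYPOINT`: with the exec (JSON list) form, arguments given to `docker run <image> ...` are appended to the list; with the shell (string) form, the command runs under `/bin/sh -c` and those arguments do not reach it. `container_argv` is a hypothetical helper, and `DB1_URI` etc. are the placeholders from the README usage line.

```python
from typing import List, Union

def container_argv(entrypoint: Union[List[str], str], run_args: List[str]) -> List[str]:
    """Model the initial argv of a container (hypothetical helper, not Docker code)."""
    if isinstance(entrypoint, list):
        # Exec form: `docker run IMAGE ARG...` appends each ARG unchanged, no shell involved.
        return entrypoint + run_args
    # Shell form: the string runs via /bin/sh -c and run-time arguments do not
    # reach the command, so this model omits them.
    return ["/bin/sh", "-c", entrypoint]

# The exec-form ENTRYPOINT from this Dockerfile.
ENTRYPOINT = ["poetry", "run", "python3", "-m", "data_diff"]

argv = container_argv(ENTRYPOINT, ["DB1_URI", "TABLE1_NAME", "DB2_URI", "TABLE2_NAME"])
print(argv)
```

With the exec-form `ENTRYPOINT`, the diff arguments can be written directly after the image name in `docker run`, with no extra shell quoting layer.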


| Original file line number | Diff line number | Diff line change | ||||

|---|---|---|---|---|---|---|

| @@ -1,27 +1,74 @@ | ||||||

| # Data Diff | ||||||


| A cross-database, efficient diff between mostly-similar database tables. | ||||||

| A cross-database, efficient diff using checksums between mostly-similar database | ||||||

| tables. | ||||||


| Use cases: | ||||||

| - Validate that a table was copied properly | ||||||

| - Be alerted before your customer finds out, or your report is wrong | ||||||

| - Validate that your replication mechnism is working correctly | ||||||

Contributor

Spelling error (mechnism)

| - Find changes between two versions of the same table | ||||||


| - Quickly validate that a table was copied correctly | ||||||

| It uses a bisection algorithm to efficiently check if e.g. a table is the same | ||||||

| between MySQL and Postgres, or Postgres and Snowflake, or MySQL and RDS! | ||||||


| - Find changes between two versions of the same table | ||||||

| ```python | ||||||

| $ data-diff postgres:/// Original postgres:/// Original_1diff -v --bisection-factor=4 | ||||||

| [16:55:19] INFO - Diffing tables of size 25000095 and 25000095 | segments: 4, bisection threshold: 1048576. | ||||||

| [16:55:36] INFO - Diffing segment 0/4 of size 8333364 and 8333364 | ||||||

| [16:55:45] INFO - . Diffing segment 0/4 of size 2777787 and 2777787 | ||||||

| [16:55:52] INFO - . . Diffing segment 0/4 of size 925928 and 925928 | ||||||

| [16:55:54] INFO - . . . Diff found 2 different rows. | ||||||

| + (20000, 942013020) | ||||||

| - (20000, 942013021) | ||||||

| [16:55:54] INFO - . . Diffing segment 1/4 of size 925929 and 925929 | ||||||

| [16:55:55] INFO - . . Diffing segment 2/4 of size 925929 and 925929 | ||||||

| [16:55:55] INFO - . . Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:55:56] INFO - . Diffing segment 1/4 of size 2777788 and 2777788 | ||||||

| [16:55:58] INFO - . Diffing segment 2/4 of size 2777788 and 2777788 | ||||||

| [16:55:59] INFO - . Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:56:00] INFO - Diffing segment 1/4 of size 8333365 and 8333365 | ||||||

| [16:56:06] INFO - Diffing segment 2/4 of size 8333365 and 8333365 | ||||||

| [16:56:11] INFO - Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:56:11] INFO - Duration: 53.51 seconds. | ||||||

| ``` | ||||||
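The bisection approach described above can be sketched in plain Python. This is a simplified in-memory illustration, not data-diff's implementation: each "table" is a dict from an integer primary key to a value, a segment is a half-open key range, `checksum` is a hypothetical stand-in for a per-segment checksum query, and segments spanning at most `threshold` keys are compared row by row. The `-`/`+` convention (row only in the first table / only in the second) belongs to this sketch.

```python
import hashlib
from typing import Dict, Iterator, Tuple

Table = Dict[int, int]  # primary key -> value, standing in for a real table
Row = Tuple[int, int]

def row_hash(key: int, value: int) -> int:
    """Stable 64-bit hash of one row."""
    digest = hashlib.md5(f"{key}:{value}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def checksum(table: Table, lo: int, hi: int) -> Tuple[int, int]:
    """Row count plus an order-independent hash sum for keys in [lo, hi)."""
    rows = [(k, v) for k, v in table.items() if lo <= k < hi]
    return len(rows), sum(row_hash(k, v) for k, v in rows) % (1 << 64)

def diff_tables(a: Table, b: Table, lo: int, hi: int,
                factor: int = 4, threshold: int = 1000) -> Iterator[Tuple[str, Row]]:
    """Yield ('-', row) for rows only in `a` and ('+', row) for rows only in `b`."""
    if factor < 2:
        raise ValueError("a factor below 2 would never shrink the segments")
    if checksum(a, lo, hi) == checksum(b, lo, hi):
        return  # matching checksums: treat the whole segment as identical
    if hi - lo <= threshold:
        # Small enough: fetch both sides and compare row by row.
        rows_a = {(k, v) for k, v in a.items() if lo <= k < hi}
        rows_b = {(k, v) for k, v in b.items() if lo <= k < hi}
        for row in sorted(rows_a - rows_b):
            yield ("-", row)
        for row in sorted(rows_b - rows_a):
            yield ("+", row)
        return
    step = -(-(hi - lo) // factor)  # ceiling division into `factor` segments
    for start in range(lo, hi, step):
        yield from diff_tables(a, b, start, min(start + step, hi), factor, threshold)

table1 = {k: k * 10 for k in range(10_000)}
table2 = dict(table1)
table2[2000] = -1  # a single changed row

result = list(diff_tables(table1, table2, 0, 10_000, factor=4, threshold=100))
print(result)  # [('-', (2000, 20000)), ('+', (2000, -1))]
```

Only segments whose checksums disagree are split further, so the amount of row-level comparison stays proportional to the number of differing segments rather than to the table size.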


| We currently support the following databases: | ||||||


| - PostgreSQL | ||||||


| - MySQL | ||||||


| - Oracle | ||||||


| - Snowflake | ||||||


| - BigQuery | ||||||


| - Redshift | ||||||


| We plan to add more, including NoSQL, and even APIs like Shopify! | ||||||


| # How to install | ||||||


| Requires Python 3.7+ with pip. | ||||||


| ```pip install data-diff``` | ||||||


| or when you need extras like mysql and postgres | ||||||


| ```pip install "data-diff[mysql,pgsql]"``` | ||||||


| # How to use | ||||||


| Usage: `data-diff DB1_URI TABLE1_NAME DB2_URI TABLE2_NAME [OPTIONS]` | ||||||


| Options: | ||||||


| - `--help` - Show help message and exit. | ||||||

| - `-k` or `--key_column` - Name of the primary key column | ||||||

| - `-c` or `--columns` - List of names of extra columns to compare | ||||||

| - `-l` or `--limit` - Maximum number of differences to find (limits maximum bandwidth and runtime) | ||||||

| - `-s` or `--stats` - Print stats instead of a detailed diff | ||||||

| - `-d` or `--debug` - Print debug info | ||||||

| - `-v` or `--verbose` - Print extra info | ||||||

| - `--bisection-factor` - Segments per iteration. When set to 2, it performs binary search. | ||||||

| - `--bisection-threshold` - Minimal bisection threshold. i.e. maximum size of pages to diff locally. | ||||||
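To get a feel for how `--bisection-factor` and `--bisection-threshold` interact, the sketch below counts how many rounds of splitting are needed before the largest segment is at or below the threshold. It assumes every round splits a segment into `factor` near-equal parts (rounding up), which is a simplification; the segment sizes in the verbose log differ somewhat. `rounds_until_local` is a hypothetical name.

```python
def rounds_until_local(rows: int, factor: int, threshold: int) -> int:
    """Number of splitting rounds until the largest segment has <= threshold rows."""
    if factor < 2:
        raise ValueError("bisection factor must be at least 2")
    rounds = 0
    size = rows
    while size > threshold:
        size = -(-size // factor)  # ceiling division: largest segment after a split
        rounds += 1
    return rounds

print(rounds_until_local(25_000_095, 4, 1_048_576))  # 3
print(rounds_until_local(25_000_095, 2, 1_048_576))  # 5
```

For the 25000095-row table in the log, a factor of 4 gets under the 1048576 threshold in 3 rounds versus 5 for plain binary search (factor 2). Fewer rounds means fewer sequential round trips, at the cost of more checksum queries per round.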


| # How does it work? | ||||||


@@ -63,57 +110,71 @@ We ran it with a very low bisection factor, and with the verbose flag, to demons | |||||


| Note: It's usually much faster to use high bisection factors, especially when there are very few changes, like in this example. | ||||||


| ```python | ||||||

| $ data_diff postgres:/// Original postgres:/// Original_1diff -v --bisection-factor=4 | ||||||

| [16:55:19] INFO - Diffing tables of size 25000095 and 25000095 | segments: 4, bisection threshold: 1048576. | ||||||

| [16:55:36] INFO - Diffing segment 0/4 of size 8333364 and 8333364 | ||||||

| [16:55:45] INFO - . Diffing segment 0/4 of size 2777787 and 2777787 | ||||||

| [16:55:52] INFO - . . Diffing segment 0/4 of size 925928 and 925928 | ||||||

| [16:55:54] INFO - . . . Diff found 2 different rows. | ||||||

| + (20000, 942013020) | ||||||

| - (20000, 942013021) | ||||||

| [16:55:54] INFO - . . Diffing segment 1/4 of size 925929 and 925929 | ||||||

| [16:55:55] INFO - . . Diffing segment 2/4 of size 925929 and 925929 | ||||||

| [16:55:55] INFO - . . Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:55:56] INFO - . Diffing segment 1/4 of size 2777788 and 2777788 | ||||||

| [16:55:58] INFO - . Diffing segment 2/4 of size 2777788 and 2777788 | ||||||

| [16:55:59] INFO - . Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:56:00] INFO - Diffing segment 1/4 of size 8333365 and 8333365 | ||||||

| [16:56:06] INFO - Diffing segment 2/4 of size 8333365 and 8333365 | ||||||

| [16:56:11] INFO - Diffing segment 3/4 of size 1 and 1 | ||||||

| [16:56:11] INFO - Duration: 53.51 seconds. | ||||||

| ## Tips for performance | ||||||


| It's highly recommended that all involved columns are indexed. | ||||||


| ## Development Setup | ||||||


Contributor

Author

It's too easy to miss it otherwise. Let's just have one big README.

Collaborator

Are you sure that we only want to have doc-based instructions for a setup? Check DAT-3098

Contributor

Author

I would love a script. The reasons for a doc-based approach for now are: (1) these steps currently take too long to run as one script, (2) it's too hard to test, because it takes too long to test, and (3) the existing script didn't work (because of (2)). We are not mature enough for a script, unfortunately.

| The development setup centers around using `docker-compose` to boot up various | ||||||

| databases, and then inserting data into them. | ||||||


| For Mac for performance of Docker, we suggest enabling in the UI: | ||||||


| * Use new Virtualization Framework | ||||||

| * Enable VirtioFS accelerated directory sharing | ||||||


| **1. Install Data Diff** | ||||||


| When developing/debugging, it's recommended to install dependencies and run it | ||||||

| directly with `poetry` rather than go through the package. | ||||||


| ``` | ||||||

| brew install mysql postgresql # MacOS dependencies for C bindings | ||||||

| poetry install | ||||||

| ``` | ||||||


| **2. Download CSV of Testing Data** | ||||||


| # How to install | ||||||

| ```shell-session | ||||||

| wget https://files.grouplens.org/datasets/movielens/ml-25m.zip | ||||||

| unzip ml-25m.zip -d dev/ | ||||||

| ``` | ||||||
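Before seeding, it can help to confirm the download landed where the seed scripts expect it. A minimal standard-library sketch; it assumes the CSV has exactly one header row (the updated `prepare_db.pql` likewise skips the first row with `FIRSTROW = 2`), and `count_data_rows` is a hypothetical helper. For the full dataset, the count should match the table size in the verbose log (25000095).

```python
import csv
from pathlib import Path

def count_data_rows(path: Path) -> int:
    """Count CSV records in `path`, excluding one header row."""
    with path.open(newline="") as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header line
        return sum(1 for _ in reader)

ratings = Path("dev/ml-25m/ratings.csv")  # path read by the updated prepare_db.pql
if ratings.exists():
    print(f"{ratings}: {count_data_rows(ratings)} data rows")
else:
    print(f"{ratings} not found; run the wget/unzip step from the repository root")
```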


| Requires Python 3.7+ with pip. | ||||||

| **3. Start Databases** | ||||||


| ```pip install data-diff``` | ||||||

| ```shell-session | ||||||

| docker-compose up -d mysql postgres | ||||||

| ``` | ||||||
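`docker-compose up -d` returns once the containers are started, not once the databases accept connections, so seeding immediately can fail. The hypothetical helper below polls a TCP port until it accepts a connection; ports 5432 and 3306 match the compose file and the seeding URIs. An open port does not guarantee the database has finished initializing, so treat this as a first check only.

```python
import socket
import time

def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # The connection is closed immediately; we only care that it succeeded.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, call `wait_for_port("127.0.0.1", 5432)` before the Postgres seeding command and `wait_for_port("127.0.0.1", 3306)` before the MySQL one.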


| or when you need extras like mysql and postgres | ||||||

| **4. Run Unit Tests** | ||||||


| ```pip install "data-diff[mysql,pgsql]"``` | ||||||

| ```shell-session | ||||||

| poetry run python3 -m unittest | ||||||

| ``` | ||||||


| # How to use | ||||||

| **5. Seed the Database(s)** | ||||||


| Usage: `data_diff DB1_URI TABLE1_NAME DB2_URI TABLE2_NAME [OPTIONS]` | ||||||

| If you're just testing, we recommend just setting up one database (e.g. | ||||||

| Postgres) to avoid incurring the long setup time repeatedly. | ||||||


| Options: | ||||||

| ```shell-session | ||||||

| preql -f dev/prepare_db.pql postgres://postgres:Password1@127.0.0.1:5432/postgres | ||||||

| preql -f dev/prepare_db.pql mysql://mysql:Password1@127.0.0.1:3306/mysql | ||||||

| preql -f dev/prepare_db.psq snowflake://<uri> | ||||||

| preql -f dev/prepare_db.psq mssql://<uri> | ||||||

| preql -f dev/prepare_db_bigquery.pql bigquery:///<project> # Bigquery has its own | ||||||

Collaborator

Suggested change

| ``` | ||||||
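The Postgres and MySQL URIs above follow the usual `scheme://user:password@host:port/database` layout, and Python's `urllib.parse` can pull them apart, which is a cheap way to check a URI before a long seeding run. This is a standalone illustration, not part of data-diff or preql, and `describe_uri` is a hypothetical name. Note that a form such as `host:db` puts the database name where the port belongs, so the port lookup fails.

```python
from urllib.parse import urlsplit

def describe_uri(uri: str) -> dict:
    """Split a database URI into its parts; raises ValueError if the port is not numeric."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,  # .port raises ValueError for e.g. "host:db"
        "database": parts.path.lstrip("/"),
    }

print(describe_uri("postgres://postgres:Password1@127.0.0.1:5432/postgres"))
```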


| - `--help` - Show help message and exit. | ||||||

| - `-k` or `--key_column` - Name of the primary key column | ||||||

| - `-c` or `--columns` - List of names of extra columns to compare | ||||||

| - `-l` or `--limit` - Maximum number of differences to find (limits maximum bandwidth and runtime) | ||||||

| - `-s` or `--stats` - Print stats instead of a detailed diff | ||||||

| - `-d` or `--debug` - Print debug info | ||||||

| - `-v` or `--verbose` - Print extra info | ||||||

| - `--bisection-factor` - Segments per iteration. When set to 2, it performs binary search. | ||||||

| - `--bisection-threshold` - Minimal bisection threshold. i.e. maximum size of pages to diff locally. | ||||||

| **6. Run data-diff against seeded database** | ||||||


| ## Tips for performance | ||||||

| ```bash | ||||||

| poetry run python3 -m data_diff postgres://user:password@host:db Rating mysql://user:password@host:db Rating_del1 -c timestamp --stats | ||||||


| It's highly recommended that all involved columns are indexed. | ||||||

| Diff-Total: 250156 changed rows out of 25000095 | ||||||

| Diff-Percent: 1.0006% | ||||||

| Diff-Split: +250156 -0 | ||||||

| ``` | ||||||
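The `--stats` summary above is simple arithmetic over the diff counts. The sketch below recomputes it from the numbers shown; the function name, the assumption that Diff-Total is the sum of the `+` and `-` counts (true for this output), and the four-decimal rounding are all chosen to reproduce this example, not taken from data-diff's source.

```python
def stats_summary(added: int, removed: int, total_rows: int) -> str:
    """Format a --stats-style summary (assumed formula: changed = added + removed)."""
    changed = added + removed
    percent = 100 * changed / total_rows
    return "\n".join([
        f"Diff-Total: {changed} changed rows out of {total_rows}",
        f"Diff-Percent: {percent:.4f}%",
        f"Diff-Split: +{added} -{removed}",
    ])

print(stats_summary(250156, 0, 25000095))
```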


| # License | ||||||


This file was deleted.

| Original file line number | Diff line number | Diff line change |

|---|---|---|


@@ -24,7 +24,7 @@ if (db_type == "snowflake") { | |

| print "Uploading ratings CSV" | ||


| run_sql("RM @~/ratings.csv.gz") | ||

| run_sql("PUT file://ml-25m/ratings.csv @~") | ||

| run_sql("PUT file://dev/ml-25m/ratings.csv @~") | ||


Contributor

Author

The commands in the README straight up didn't work, because the paths assumed you were

| print "Loading ratings CSV" | ||


@@ -86,7 +86,7 @@ if (db_type == "snowflake") { | |

| run_sql("create table tmp_rating(userid int, movieid int, rating float, timestamp int)") | ||

| table tmp_rating {...} | ||

| print "Loading ratings CSV" | ||

| run_sql("BULK INSERT tmp_rating from 'ml-25m/ratings.csv' with (fieldterminator = ',', rowterminator = '0x0a', FIRSTROW = 2);") | ||

| run_sql("BULK INSERT tmp_rating from 'dev/ml-25m/ratings.csv' with (fieldterminator = ',', rowterminator = '0x0a', FIRSTROW = 2);") | ||

| print "Populating actual table" | ||

| rating += tmp_rating | ||

| commit() | ||


@@ -99,7 +99,7 @@ if (db_type == "snowflake") { | |

| rating: float | ||

| timestamp: int | ||

| } | ||

| import_csv(rating, 'ml-25m/ratings.csv', true) | ||

| import_csv(rating, 'dev/ml-25m/ratings.csv', true) | ||

| rating.add_index("id", true) | ||

| rating.add_index("timestamp") | ||

| run_sql("CREATE INDEX index_rating_id_timestamp ON rating (id, timestamp)") | ||


This file was deleted.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,44 +1,12 @@ | ||

| version: "3.8" | ||


| services: | ||

| data-diff: | ||

| container_name: data-diff | ||

| build: | ||

| context: ../ | ||

| dockerfile: ./dev/Dockerfile | ||

| args: | ||

| - DB1_URI | ||

| - TABLE1_NAME | ||

| - DB2_URI | ||

| - TABLE2_NAME | ||

| - OPTIONS | ||

| ports: | ||

| - '9992:9992' | ||

| expose: | ||

| - '9992' | ||

| tty: true | ||

| networks: | ||

| - local | ||


| prepdb: | ||

| container_name: prepdb | ||

| build: | ||

| context: ../ | ||

| dockerfile: ./dev/Dockerfile | ||

| command: ["bash", "./dev/prepdb.sh"] | ||

| volumes: | ||

| - prepdb-data:/app:delegated | ||

| ports: | ||

| - '9991:9991' | ||

| expose: | ||

| - '9991' | ||

| tty: true | ||

| networks: | ||

| - local | ||


| postgres: | ||

| container_name: postgresql | ||

| image: postgres:14.1-alpine | ||

| # work_mem: less tmp files | ||

| # maintenance_work_mem: improve table-level op perf | ||

| # max_wal_size: allow more time before merging to heap | ||

| command: > | ||

| -c work_mem=1GB | ||

| -c maintenance_work_mem=1GB | ||


@@ -51,25 +19,22 @@ services: | |

| expose: | ||

| - '5432' | ||

| env_file: | ||

| - dev.env | ||

| - dev/dev.env | ||


Contributor

Author

You should run

| tty: true | ||

| networks: | ||

| - local | ||


| mysql: | ||

| container_name: mysql | ||

| image: mysql:oracle | ||

| # fsync less aggressively for insertion perf for test setup | ||


Collaborator

It's unclear where this comment is pointing to.

Contributor

Author

Basically all the configuration you put in is this, but I will refer to it 👍🏻

| command: > | ||

| --default-authentication-plugin=mysql_native_password | ||

| --innodb-buffer-pool-size=8G | ||

| --innodb_io_capacity=2000 | ||

| --innodb_log_file_size=1G | ||


Contributor

Author

Unless you have a huge computer, these settings will make things way worse. MySQL OOM'ed on my machine (errors I got this morning) because the buffer pool size is bigger than the memory I allow Docker. We have to err towards robustness over performance here. These settings are too idiosyncratic.

| --binlog-cache-size=16M | ||

| --key_buffer_size=0 | ||

| --max_connections=10 | ||

| --innodb_flush_log_at_trx_commit=2 | ||

| --innodb_flush_log_at_timeout=10 | ||

| --innodb_flush_method=O_DSYNC | ||

| --innodb_log_compressed_pages=OFF | ||

| --sync_binlog=0 | ||

| restart: always | ||


@@ -81,16 +46,15 @@ services: | |

| expose: | ||

| - '3306' | ||

| env_file: | ||

| - dev.env | ||

| - dev/dev.env | ||

| tty: true | ||

| networks: | ||

| - local | ||


| volumes: | ||

| postgresql-data: | ||

| mysql-data: | ||

| prepdb-data: | ||


| networks: | ||

| local: | ||

| driver: bridge | ||

| driver: bridge | ||


Probably can remove extras since they should be done by default in the dev env