Closed

Labels

bug: Something isn't working

Description

Describe the bug

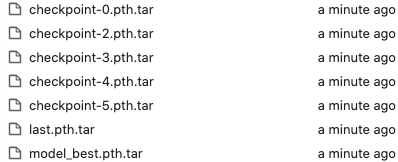

When the max_history parameter in CheckpointSaver.py is set to 1, a checkpoint is still saved for each epoch.

To Reproduce

Steps to reproduce the behavior:

```python
import torch
import timm
from timm.utils import CheckpointSaver

model = timm.models.xception()
optimizer = torch.optim.Adam(model.parameters())
saver = CheckpointSaver(model, optimizer, max_history=1)

saver.save_checkpoint(0, metric=0.5)
saver.save_checkpoint(1, metric=0.5)
saver.save_checkpoint(2, metric=0.6)
saver.save_checkpoint(3, metric=0.7)
saver.save_checkpoint(4, metric=0.8)
saver.save_checkpoint(5, metric=0.7)
```

Expected behavior

The checkpoint of each epoch will be saved.
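For comparison, here is a minimal sketch of how a max_history cap is often understood: keep only the best N checkpoints by metric and drop the rest. This is not timm's implementation. The class name TopKRetention is hypothetical, and it only tracks (epoch, metric) pairs in memory instead of writing files. It assumes a higher metric is better unless decreasing=True.

```python
from typing import List, Tuple


class TopKRetention:
    """Hypothetical in-memory stand-in for a checkpoint retention policy."""

    def __init__(self, max_history: int = 1, decreasing: bool = False) -> None:
        if max_history < 1:
            raise ValueError("max_history must be >= 1")
        self.max_history = max_history
        self.decreasing = decreasing  # True if a lower metric is better
        self.kept: List[Tuple[int, float]] = []  # (epoch, metric), best first

    def save_checkpoint(self, epoch: int, metric: float) -> List[Tuple[int, float]]:
        """Record a checkpoint and return the entries that would be deleted."""
        self.kept.append((epoch, metric))
        # Sort best-first; the sort is stable, so earlier epochs win ties.
        self.kept = sorted(
            self.kept, key=lambda entry: entry[1], reverse=not self.decreasing
        )
        dropped = self.kept[self.max_history:]
        self.kept = self.kept[: self.max_history]
        return dropped


# Same epoch/metric sequence as in the reproduction steps.
policy = TopKRetention(max_history=1)
for epoch, metric in enumerate([0.5, 0.5, 0.6, 0.7, 0.8, 0.7]):
    policy.save_checkpoint(epoch, metric)

print(policy.kept)  # [(4, 0.8)]
```

Under this assumed policy, only the epoch 4 entry remains after the six calls, which can be compared against the number of checkpoint files actually left on disk.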

Desktop (please complete the following information):

- OS: Ubuntu 18.04

- This repository version [e.g. pip 0.3.1 or commit ref]

- PyTorch version w/ CUDA/cuDNN [e.g. from conda list, 1.7.0 py3.8_cuda11.0.221_cudnn8.0.3_0]

Additional context

Add any other context about the problem here.
