
feat(BA-942): Add accelerator quantum size field to GQL scaling group #3940

Co-authored-by: octodog <mu001@lablup.com>

…or-config-field' into feat/scaling-group-add-accelerator-config-field

Co-authored-by: octodog <mu001@lablup.com>

HyeockJinKim left a comment:

Is this PR done? @fregataa

yomybaby left a comment:

Users cannot use the `scaling_group` and `scaling_groups` queries. I got the following error when I tried them with a user account:

```json
{
  "data": {
    "scaling_group": null
  },
  "errors": [
    {
      "message": "Forbidden operation. (superadmin privilege required)",
      "locations": [{ "line": 2, "column": 3 }],
      "path": ["scaling_group"]
    }
  ]
}
```

yomybaby left a comment:

LGTM

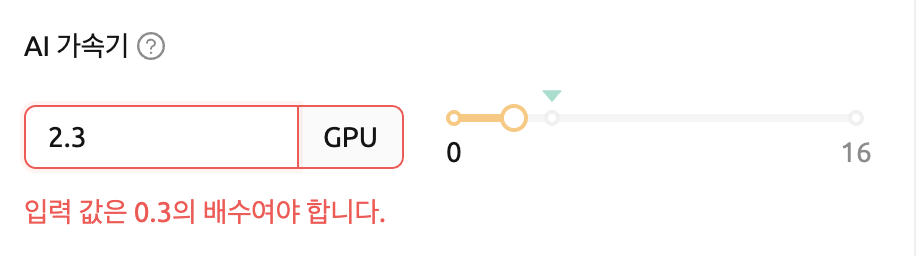

…oup setting (#3383)

Resolves #3324 (FR-639)

> [!WARNING]
> This PR requires lablup/backend.ai#3940.

# Add support for accelerator quantum size in resource allocation

This PR extends the resource allocation form to support accelerator quantum sizes, allowing more precise control over accelerator allocation based on scaling group settings.

Rule for the accelerator UI step size:
- For the shares type with a single-node cluster:
  - If `accelerator_quantum_size` exists, use `accelerator_quantum_size`.
  - If `accelerator_quantum_size` does not exist, use `0.1`.
- Otherwise, use `1`.

Key changes:
- Added the `accelerator_quantum_size` field to the `ScalingGroup` type in the GraphQL schema
- Added validation to ensure accelerator values are multiples of the quantum size
- Implemented step size adjustment based on the accelerator type and quantum size
- Added new translation strings for quantum size validation messages
- Added support for the `custom-accelerator-quantum-size` feature flag for version 25.5.0+
- Added a new GraphQL query, `accessible_scaling_groups`, to fetch scaling groups with quantum size information

### How to test using local backend environment

#### Manager
- Check out the branch of lablup/backend.ai#3940.
- Update the `VERSION` file to 25.5.0 (to pass the version compatibility check during testing).
- Run `./backend.ai mgr etcd put "config/plugins/accelerator/cuda/quantum_size" 0.3`
- Run `./backend.ai mgr etcd put "config/plugins/accelerator/mock/allocation_mode" fractional`
- Restart the manager and the agent.

#### WebUI
- You can now select `fgpu` in the session launcher.
- The slider and input for the accelerator should only allow multiples of 0.3.
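The step-size rule and the multiple-of-quantum validation described in the commit message above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the WebUI's actual implementation: the helper names `accelerator_step` and `is_multiple_of_quantum` and their boolean parameters are hypothetical. `Decimal` is used because a float check such as `0.9 % 0.3` can yield a tiny non-zero remainder instead of exactly zero.

```python
from decimal import Decimal
from typing import Optional, Union

Number = Union[int, float, str, Decimal]


def accelerator_step(
    quantum_size: Optional[Number],
    is_shares_type: bool,
    is_single_cluster: bool,
) -> Decimal:
    """Hypothetical helper: pick the UI step size per the rule above."""
    if is_shares_type and is_single_cluster:
        if quantum_size is not None:
            # Use the scaling group's accelerator_quantum_size when it is set.
            return Decimal(str(quantum_size))
        # Shares-type accelerators without a quantum size fall back to 0.1.
        return Decimal("0.1")
    # Every other case steps by whole units.
    return Decimal("1")


def is_multiple_of_quantum(value: Number, quantum_size: Number) -> bool:
    """Hypothetical helper: check that value is an exact multiple of quantum_size."""
    # Going through str() turns 0.3 into Decimal("0.3") instead of its binary approximation.
    v = Decimal(str(value))
    q = Decimal(str(quantum_size))
    if q <= 0:
        raise ValueError("quantum_size must be positive")
    return v % q == 0


if __name__ == "__main__":
    step = accelerator_step("0.3", is_shares_type=True, is_single_cluster=True)
    print(step)  # 0.3
    for amount in ("0.6", "0.9", "1.0"):
        print(amount, is_multiple_of_quantum(amount, step))
```

With the quantum size of 0.3 used in the testing steps above, 0.6 and 0.9 pass the check while 1.0 does not.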

resolves #3936 (BA-942)

How to test

Run this command to inject the quantum size into etcd before testing the API:

`backend.ai mgr etcd put "config/plugins/accelerator/cuda/quantum_size" 1.0`

New GQL query
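After injecting the quantum size, the new field can be read back over GraphQL. The sketch below only builds a standard GraphQL JSON request body and parses a response; it sends nothing, and the endpoint and authentication are out of scope. Only the `scaling_group` query and the `accelerator_quantum_size` field come from this PR; the `name` argument, the `$name` variable, and both helper functions are assumptions for illustration. Error handling follows the generic GraphQL response shape, so the superadmin-only error reported in the review above surfaces as an exception.

```python
import json
from typing import Any, Optional

# Assumption: `scaling_group` takes a `name` argument. Only the
# `accelerator_quantum_size` field name is confirmed by this PR.
SCALING_GROUP_QUERY = """
query ($name: String) {
  scaling_group(name: $name) {
    name
    accelerator_quantum_size
  }
}
"""


def build_request_body(group_name: str) -> str:
    """Hypothetical helper: serialize a GraphQL-over-HTTP JSON request body."""
    return json.dumps({"query": SCALING_GROUP_QUERY, "variables": {"name": group_name}})


def extract_quantum_size(response_text: str) -> Optional[float]:
    """Hypothetical helper: return accelerator_quantum_size or raise on GraphQL errors."""
    payload: dict[str, Any] = json.loads(response_text)
    errors = payload.get("errors") or []
    if errors:
        # Field-level failures are listed in "errors" while the field itself is null in "data".
        messages = "; ".join(err.get("message", "unknown error") for err in errors)
        raise RuntimeError(messages)
    group = (payload.get("data") or {}).get("scaling_group")
    if group is None:
        return None
    return group.get("accelerator_quantum_size")
```

Raising on any entry in `errors` keeps a permission failure from being mistaken for a scaling group that simply has no quantum size configured.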

📚 Documentation preview 📚: https://sorna--3940.org.readthedocs.build/en/3940/

📚 Documentation preview 📚: https://sorna-ko--3940.org.readthedocs.build/ko/3940/