This repository was archived by the owner on Jun 3, 2025. It is now read-only.

fix QAT->Quant conversion of repeated Gemm layers with no activation QDQ #698

This PR fixes a bug where repeated QAT Gemm nodes whose outputs are not quantized by a QDQ block may be picked up by the wrong QAT->Quant conversion step. This happens when the converter mistakes the input QDQ block of the second Gemm node for the output QDQ block of the previous one.
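To make the ambiguity concrete, here is a minimal, self-contained sketch in plain Python. It does not use the converter's real code or the ONNX API: the `Node` class, the helper functions, and all tensor and node names are hypothetical, and the weight QDQ blocks are left out for brevity. It only shows that, in a chain of two Gemm nodes, the QDQ block found "after" the first Gemm is the very same block found "before" the second one, so a matcher that looks only at what follows a Gemm cannot tell the two cases apart.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical stand-in for a graph node (not the ONNX NodeProto API)."""
    name: str
    op_type: str
    inputs: tuple
    outputs: tuple


def consumers(graph, tensor):
    """All nodes that read the given tensor."""
    return [n for n in graph if tensor in n.inputs]


def producer(graph, tensor):
    """The node that writes the given tensor, or None for graph inputs/initializers."""
    return next((n for n in graph if tensor in n.outputs), None)


def qdq_after(graph, node):
    """Return the (Q, DQ) pair that directly consumes node's output, or None."""
    for q in consumers(graph, node.outputs[0]):
        if q.op_type == "QuantizeLinear":
            for dq in consumers(graph, q.outputs[0]):
                if dq.op_type == "DequantizeLinear":
                    return q, dq
    return None


def qdq_before(graph, node):
    """Return the (Q, DQ) pair that feeds node's first input, or None."""
    dq = producer(graph, node.inputs[0])
    if dq is None or dq.op_type != "DequantizeLinear":
        return None
    q = producer(graph, dq.inputs[0])
    if q is None or q.op_type != "QuantizeLinear":
        return None
    return q, dq


# Toy QAT graph: QDQ -> Gemm -> QDQ -> Gemm, with no QDQ after the last Gemm.
graph = [
    Node("q_in", "QuantizeLinear", ("x",), ("x_q",)),
    Node("dq_in", "DequantizeLinear", ("x_q",), ("x_dq",)),
    Node("gemm1", "Gemm", ("x_dq", "w1_dq", "b1"), ("h",)),
    Node("q_mid", "QuantizeLinear", ("h",), ("h_q",)),
    Node("dq_mid", "DequantizeLinear", ("h_q",), ("h_dq",)),
    Node("gemm2", "Gemm", ("h_dq", "w2_dq", "b2"), ("y",)),
]
gemm1, gemm2 = graph[2], graph[5]

# The block after gemm1 and the block before gemm2 are the same two nodes.
same_block = qdq_after(graph, gemm1) == qdq_before(graph, gemm2)
# gemm2 itself has nothing quantizing its output.
gemm2_has_output_qdq = qdq_after(graph, gemm2) is not None

print("QDQ after gemm1 is QDQ before gemm2:", same_block)
print("gemm2 has an output QDQ:", gemm2_has_output_qdq)
```

If a conversion step that expects an output QDQ block claims `q_mid`/`dq_mid` for `gemm1`, then `gemm2` is left without an input QDQ block, which is consistent with the incorrect conversion described in this PR. How the actual fix disambiguates the two cases is not shown here.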

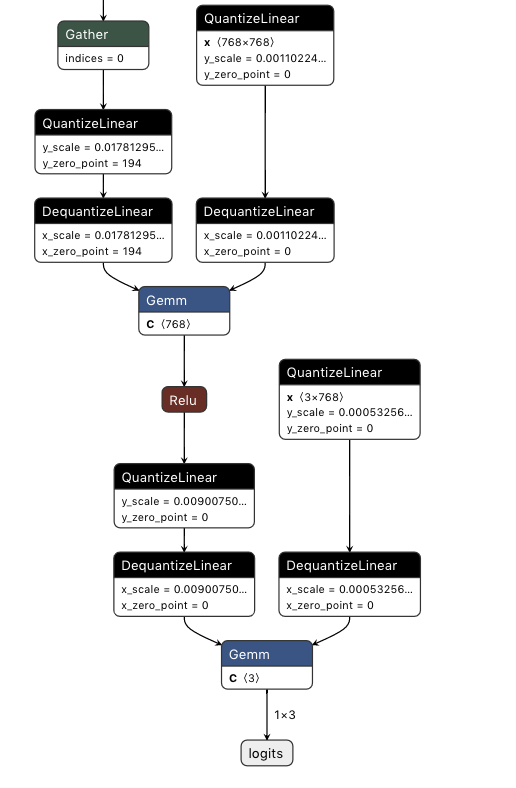

Base QAT graph:

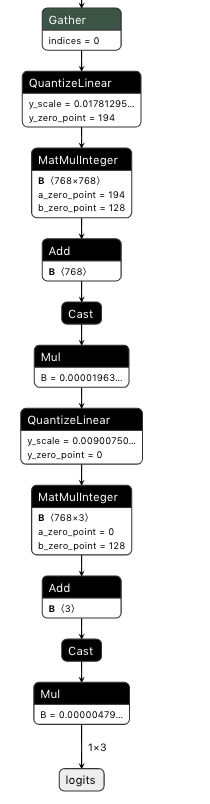

Incorrect Conversion (the first Gemm is converted to the wrong op type; the second Gemm is not converted and its weights are deleted):

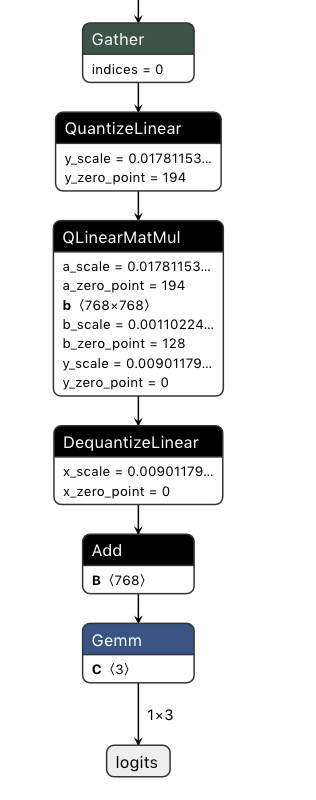

Corrected Conversion (two repeated MatMulInteger blocks, each followed by a bias add):