
Add HTTP recorder for evals; introduce --http-run flag #1312


This is a ridiculously helpful change for a project our org is currently working on, and I sincerely hope that it gets merged!

This will prevent us from maintaining a fork. 👍🏻

This commit introduces several enhancements to the evaluation script, including:

1. Improved error handling for HTTP requests in the `HttpRecorder` class. The script now retries failed HTTP requests up to a maximum number of times and throws an exception if all attempts fail.
2. The addition of a new command-line option `--http-run-url` to specify the URL for sending evaluation results in HTTP mode. The existing `--url` option has been replaced with this new option for clarity.
3. Updated help text for the command-line options `--local-run` and `--http-run` to provide more detailed information about their function.

…to specify the number of events to send in each HTTP request when running evaluations in HTTP mode. The default value is 1, which means events are sent individually.

jwang47

left a comment


One scenario we might also want to handle is if there's an uncaught error that happens during the eval run. We might want to send an event indicating that the eval failed in that situation.

This commit makes several changes to the `HttpRecorder` class:

- Renames the `http_batch_size` parameter to `batch_size` for consistency.
- Updates the `HttpRecorder` initializer to accept `batch_size` as an argument.
- Updates the `_flush_events_internal` method to use the new `batch_size` attribute.
- Removes the retry logic from the `_send_event` method. It now attempts to send each batch of events once, and raises an exception if the request fails.
- Updates the `run` method in `oaieval.py` to pass `http_batch_size` as an argument when initializing an `HttpRecorder` instance.

These changes simplify the event recording logic and make the code more straightforward to understand. They also give the user more control over the batch size when recording events.
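As a rough illustration of the batching behaviour this commit describes, the sketch below slices a list of events into groups of `batch_size` and hands each group to a sender exactly once, with no retry. The names `iter_batches`, `flush_events`, and `send_batch` are hypothetical and are not taken from `record.py`; the actual `_flush_events_internal` may be structured differently.

```python
from typing import Callable, Dict, Iterator, List

Event = Dict[str, object]


def iter_batches(events: List[Event], batch_size: int) -> Iterator[List[Event]]:
    """Yield consecutive slices holding at most batch_size events each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(events), batch_size):
        yield events[start:start + batch_size]


def flush_events(
    events: List[Event],
    batch_size: int,
    send_batch: Callable[[List[Event]], None],
) -> int:
    """Send every batch once and return the number of batches sent.

    send_batch is a hypothetical callable (for example, one that issues a
    single HTTP POST). Any exception it raises propagates to the caller,
    mirroring the "send once, raise on failure" behaviour described above.
    """
    sent = 0
    for batch in iter_batches(events, batch_size):
        send_batch(batch)
        sent += 1
    return sent
```

With a batch size of 1 (the stated default) every event goes out in its own request; larger values trade per-event latency for fewer requests.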


Hey @jwang47,

Regarding the handling of errors during the evaluation run, we can represent a failed eval event with a structure similar to a successful event. For example, an event for a failed evaluation could look like this:

    {
        "run_id": "2307080128125Q6U7IFP",
        "event_id": 4,
        "sample_id": "abstract-causal-reasoning-text.dev.484",
        "type": "eval_failed",
        "data": {
            "error": {
                "type": "service_unavailable",
                "code": 503,
                "message": "Service Unavailable",
                "details": "The upstream service was overloaded."
            }
        },
        "created_by": "",
        "created_at": "2023-07-08 01:28:13.704853+00:00"
    }

Considering that a successful one looks like this:

    {
        "run_id": "2307080128125Q6U7IFP",
        "event_id": 3,
        "sample_id": "abstract-causal-reasoning-text.dev.484",
        "type": "match",
        "data": {
            "correct": true,
            "expected": "ANSWER: off",
            "picked": "ANSWER: off",
            "sampled": "undetermined."
        },
        "created_by": "",
        "created_at": "2023-07-08 01:28:13.704853+00:00"
    }

In this structure, the `type` field is set to `"eval_failed"` to indicate that this is an error event. Within the `data` field, we have an `error` object that contains detailed information about the error, such as its type, code, message, and additional details. This gives us enough information about any error that occurs to make troubleshooting easier.

Can this be added in another PR? Error handling at this level can get rather complex, and I would love to tackle it with the focused attention it needs.
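A minimal sketch of how such an event could be assembled in Python, assuming the field layout proposed in this comment. `make_failed_event` is a hypothetical helper and is not part of the evals codebase; the `eval_failed` type is only the proposal made here, deferred to a later PR.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def make_failed_event(
    run_id: str,
    event_id: int,
    sample_id: str,
    error_type: str,
    code: int,
    message: str,
    details: str,
    created_at: Optional[datetime] = None,
) -> Dict[str, Any]:
    """Build a failure event shaped like the regular events shown above.

    Hypothetical helper: only the "type" value and the contents of "data"
    differ from a successful event such as "match".
    """
    if created_at is None:
        created_at = datetime.now(timezone.utc)
    return {
        "run_id": run_id,
        "event_id": event_id,
        "sample_id": sample_id,
        "type": "eval_failed",
        "data": {
            "error": {
                "type": error_type,
                "code": code,
                "message": message,
                "details": details,
            }
        },
        "created_by": "",
        # str() on a timezone-aware datetime yields the
        # "YYYY-MM-DD HH:MM:SS.ffffff+00:00" form used in the examples.
        "created_at": str(created_at),
    }
```

Keeping the top-level keys identical to those of a successful event means a collector can route on `type` alone without a separate schema for failures.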

Sounds good, let's add in a later PR.

… in `record.py`:

1. Added local fallback support: When an event fails to be sent due to an HTTP error, it is now automatically saved locally using the `LocalRecorder` class. The local fallback path is passed as an argument to the `HttpRecorder`'s constructor.
2. Implemented a threshold for failed requests: If more than 5% of events fail to be sent, a `RuntimeError` is raised. This prevents excessive failed requests from potentially overwhelming the system.
3. Improved logging: Added a success log message when events are successfully sent. Also, the warning message and increment of failed requests now only occur if the request does not succeed.
4. Updated the `record_final_report` method to use the `LocalRecorder` as a fallback when sending the final report fails.

This commit improves the resilience of the `HttpRecorder` and provides more informative logging for debugging and monitoring purposes.
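The fallback and threshold behaviour described in this commit could look roughly like the sketch below. It is an illustration under stated assumptions, not the code in `record.py`: `FallbackSender`, `send`, and `save_locally` are hypothetical names (the commit itself uses `LocalRecorder` for the local copy), and the failure rate is assumed to be failed events divided by events attempted so far.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]


class FallbackSender:
    """Hypothetical helper: try HTTP first, keep failed events locally."""

    def __init__(
        self,
        send: Callable[[List[Event]], None],
        save_locally: Callable[[List[Event]], None],
        max_failure_rate: float = 0.05,  # the 5% threshold from the commit message
    ) -> None:
        self.send = send
        self.save_locally = save_locally
        self.max_failure_rate = max_failure_rate
        self.total = 0   # events attempted so far
        self.failed = 0  # events that could not be sent

    def record(self, batch: List[Event]) -> None:
        self.total += len(batch)
        try:
            self.send(batch)
        except OSError:
            # Assumption: transport errors surface as OSError subclasses,
            # as they do for urllib and requests.
            self.save_locally(batch)
            self.failed += len(batch)
            if self.total and self.failed / self.total > self.max_failure_rate:
                raise RuntimeError(
                    f"{self.failed} of {self.total} events failed to send"
                )
```

Saving the batch before checking the threshold means no event is lost even on the call that finally raises.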

…g/evals into feature/http-recorder

- Add CLI argument to set acceptable failure rate for HTTP requests.
- Update to track and act upon failed request rates exceeding the specified threshold.
- Refactor error messages for clarity and provide more specific information about failures.
- Enhance error handling in the event of exceeded failure thresholds, suggesting a fallback to .

jwang47

left a comment


Looks good, thanks for the contribution!

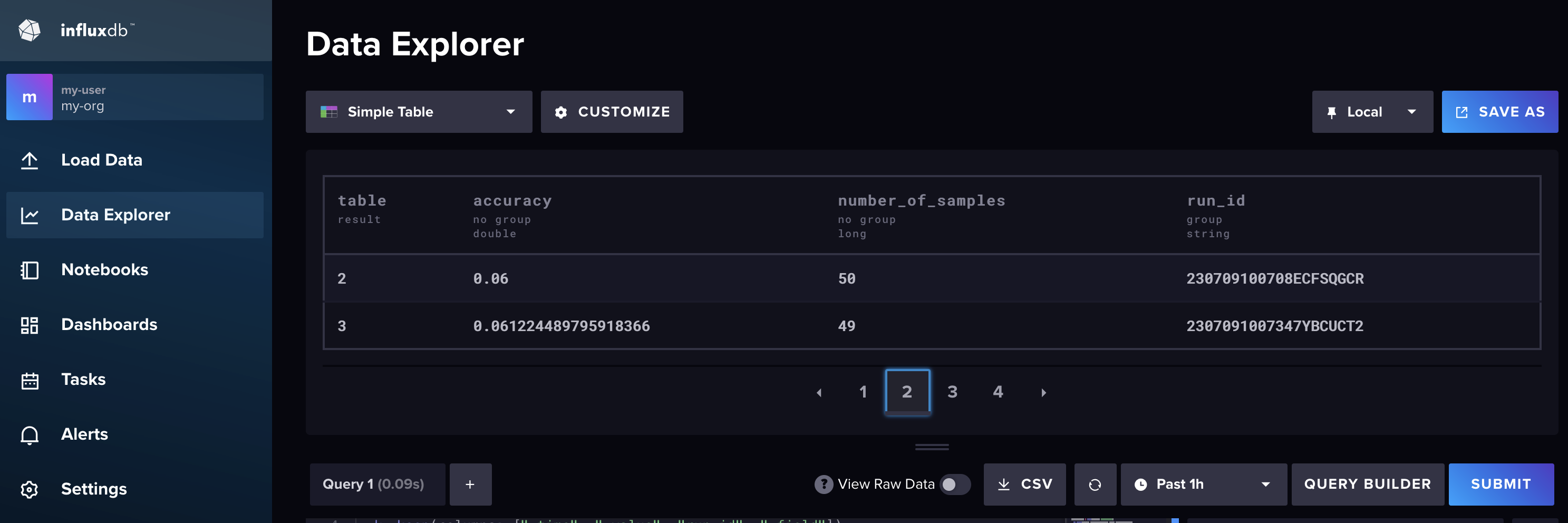

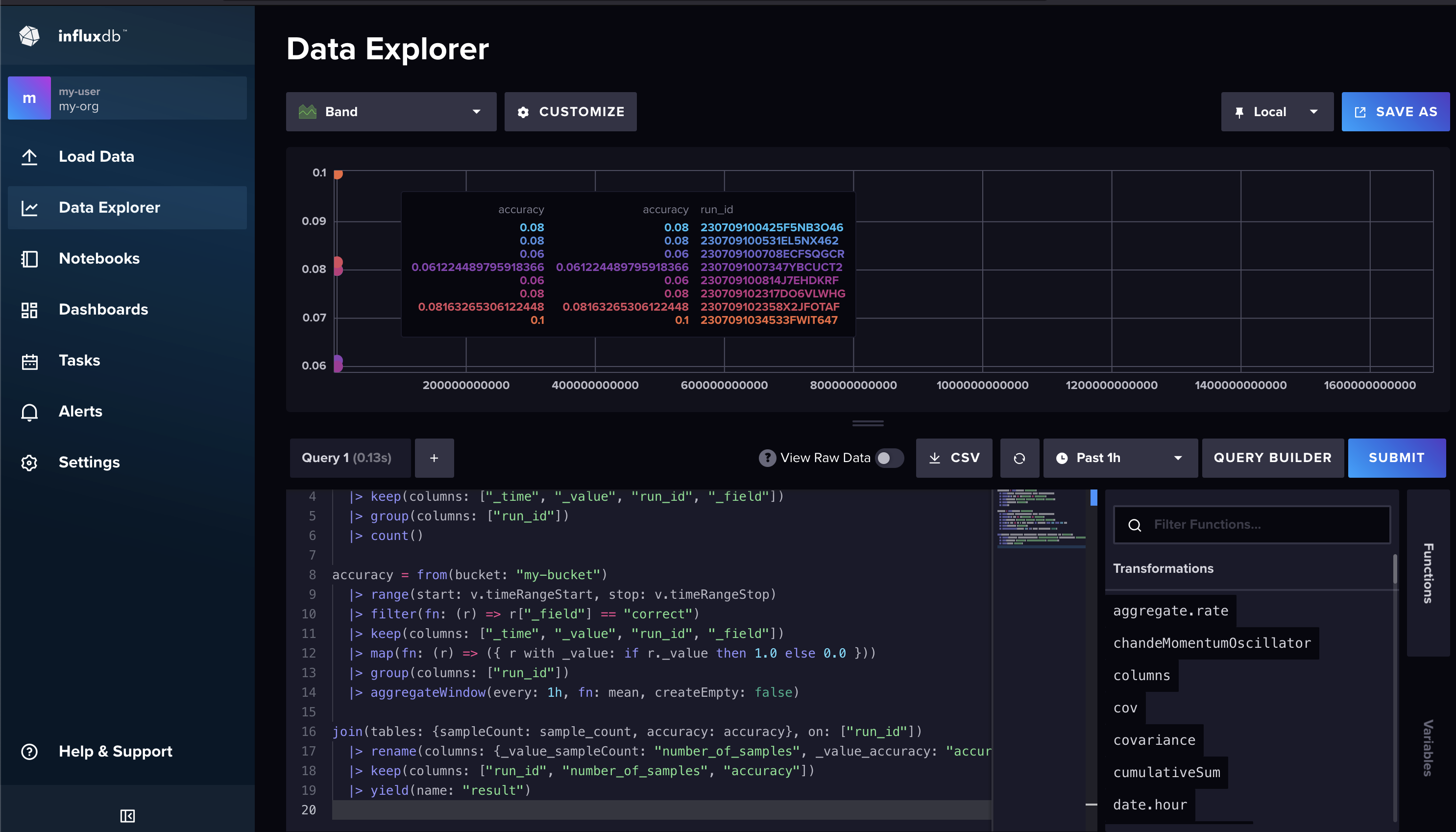

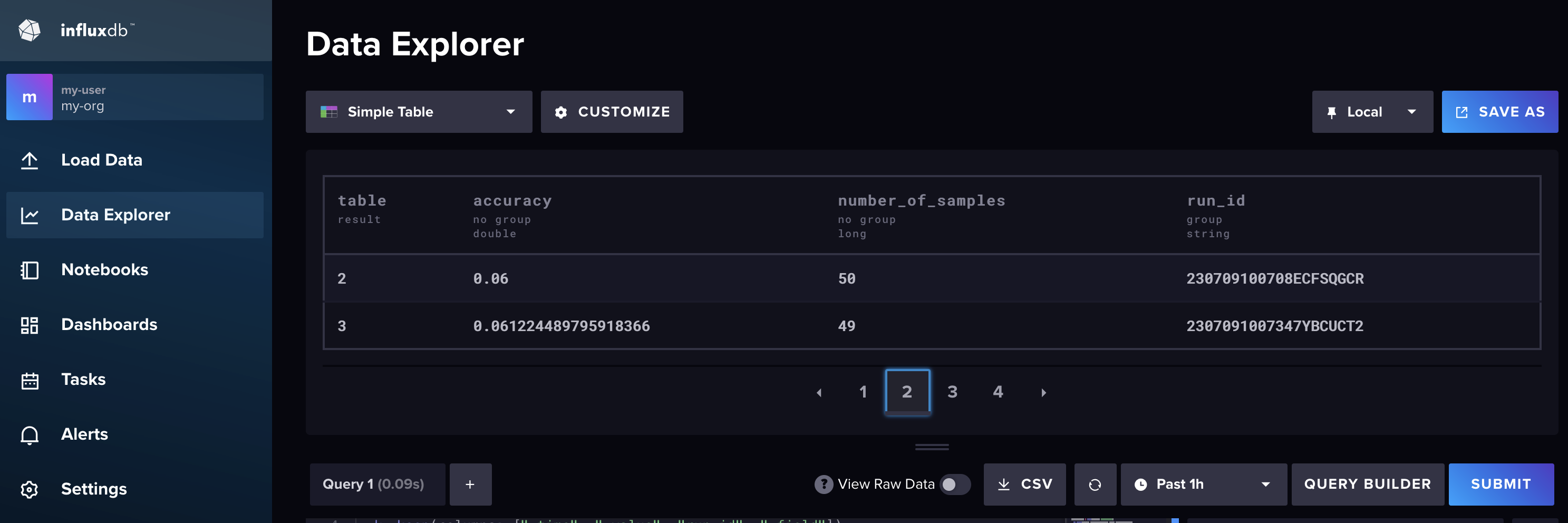

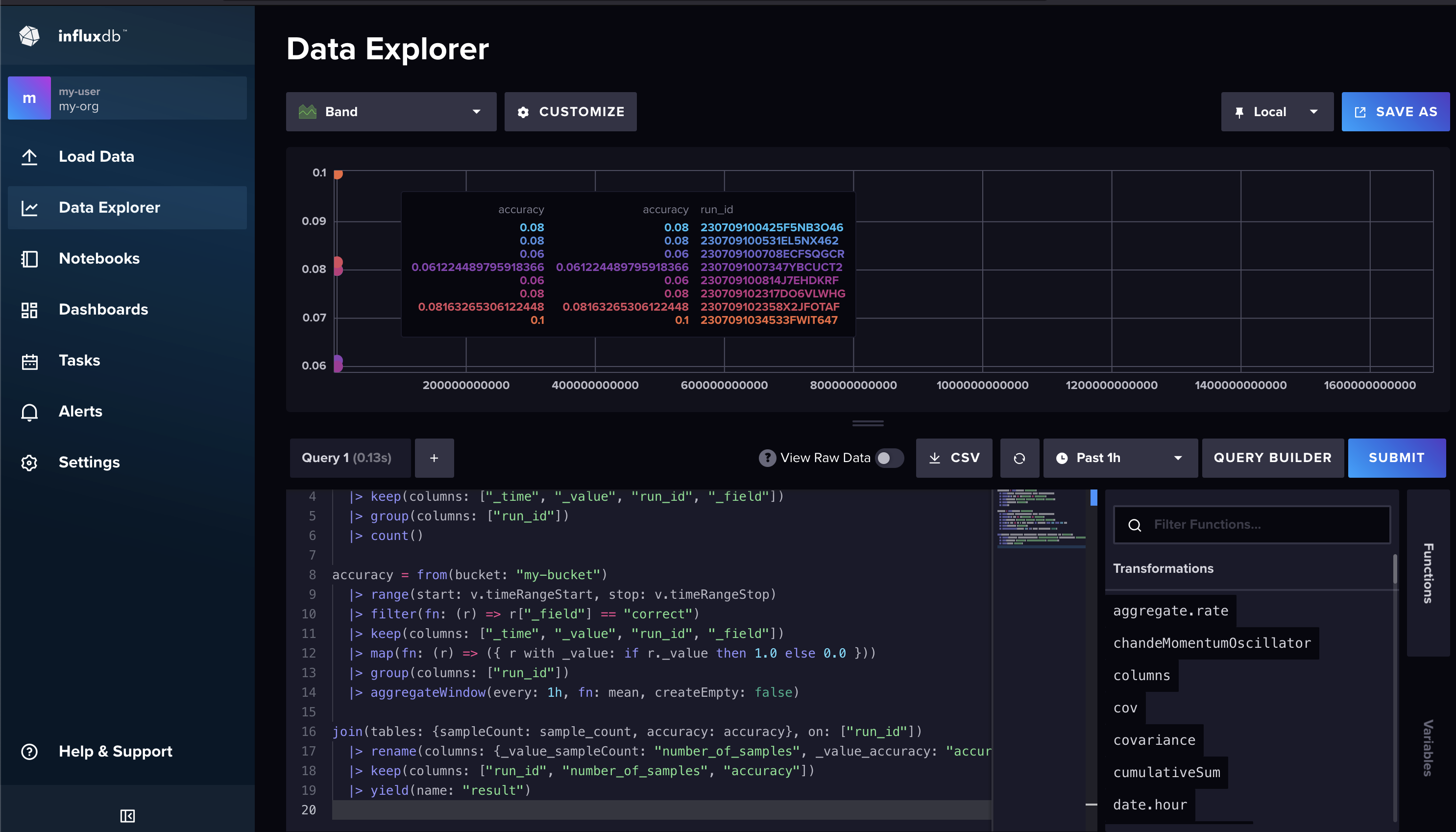

### Change name

Introducing HTTP Recorder to OAIEvals Framework

### Change description

This pull request introduces the `HttpRecorder` class to the evals framework, extending the current Eval mechanism.

#### HTTP Recorder

A new class `HttpRecorder` has been added to the `evals.record` module. This new recorder sends evaluation events directly to a specified HTTP endpoint using POST requests. The URL for this endpoint can be specified using the `--http-run-url` command-line argument when running the evaluations.

In addition to the local and dry run modes, we now have an HTTP run mode that can be triggered using the `--http-run` flag.

#### Motivation

This change was largely motivated by the development of the [OAIEvals Collector](https://github.com/nstankov-bg/oaievals-collector). As the creator of this Go application designed specifically for collecting and storing raw evaluation metrics, I saw the need for an HTTP endpoint in our evaluation mechanism. The OAIEvals Collector provides an HTTP handler designed for evals, thus making it an ideal recipient for the data recorded by the new `HttpRecorder`.

Allowing for more types of exporters, such as the new `HttpRecorder`, will likely increase the adoption of testing. The flexibility and ease of use of the HTTP Recorder allow more developers to integrate testing into their workflows. This could ultimately lead to higher quality code, faster debugging, and an overall more efficient development process.

In addition, the integration of the `HttpRecorder` and visualization tools like Grafana dramatically lowers the barrier to entry for developers seeking to visualize their evaluations. By providing a streamlined process for collecting and visualizing metrics, I aim to make data-driven development more accessible, leading to more informed decision-making and improved model quality.

#### Demo:

**Elasticsearch x Kibana:**

| Elasticsearch Test |
| :---: |
| *Basic Visualization via Kibana* |

**InfluxDB:**

| InfluxDB Test 1 | InfluxDB Test 2 |
| :---: | :---: |

**Grafana:**

| Grafana Test |
| :---: |
| *Basic Visualization via InfluxDB & TimeScaleDB* |

<img width="1499" alt="Screenshot 2023-07-11 at 10 03 17 PM" src="https://github.com/nstankov-bg/oaievals-collector/assets/27363885/cd119b1a-939c-4f2d-b141-d26e83784cbc">

**Kafka:**

| Kafka Test |
| :---: |
| *Basic Visualization via Kafka UI* |

<img width="1505" alt="Screenshot 2023-07-12 at 4 35 03 PM" src="https://github.com/nstankov-bg/oaievals-collector/assets/27363885/e8075f06-b628-4773-99d9-a032e28f2472">

#### Testing

The new HTTP Recorder feature was tested with the following commands:

- `python3 oaieval.py gpt-3.5-turbo abstract-causal-reasoning-text.dev.v0 --max_samples=50 --dry-run`
- `python3 oaieval.py gpt-3.5-turbo abstract-causal-reasoning-text.dev.v0 --max_samples=50`
- `python3 oaieval.py gpt-3.5-turbo abstract-causal-reasoning-text.dev.v0 --max_samples=20 --http-run --http-run-url=http://localhost:8081/events --http-batch-size=10`
- `python3 oaieval.py gpt-3.5-turbo abstract-causal-reasoning-text.dev.v0 --max_samples=20 --http-run --http-run-url=http://localhost:8081/events`

Each command produced the expected results, demonstrating the feature's proper functionality.



### Criteria for a good eval ✅

The introduced changes enhance the project's functionality and do not break any existing features. The changes pass all the existing tests and follow the project's contribution guidelines.

### Eval structure 🏗️

`evals/cli/oaieval.py` for the new HTTP run mode and `evals.record` for the new `HttpRecorder`.

### Final checklist 👀

### Eval JSON data

Due to the nature of this PR, there are no new eval samples.