
[Merged by Bors] - Optimise tree hash caching for block production #2106


will fix up the tests on Monday


Ready.

```rust
    validator_graffiti: Option<Graffiti>,
) -> Result<BeaconBlockAndState<T::EthSpec>, BlockProductionError> {
    let state = self
        .state_at_slot(slot - 1, StateSkipConfig::WithStateRoots)
```


Are you aware that the slot - 1 has been removed and we will no longer be able to produce blocks from slots earlier than the head block?


Yeah, I did that intentionally. I'll message you.


Good catch! I've restored the slot - 1 with a warning, as we discussed.

I think this will be particularly relevant on the first slot of an epoch when there might be two seemingly legitimate proposers because of propagation delay of the last block of the previous epoch.

paulhauner left a comment



Nice, it's good to be doing less hashing!

I'm happy to merge this into unstable, regardless of the nit.

```rust
        "message" => "this block is more likely to be orphaned",
        "slot" => slot,
    );
    self.state_at_slot(slot - 1, StateSkipConfig::WithStateRoots)
```


It does feel like this and L1783-1874 should be de-duped, but I won't block on it.
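One way to act on the de-duplication nit is to make the pre-state decision in a single function that both code paths share. The Rust sketch below is hypothetical: `PreStateSource`, `select_pre_state`, `ORPHAN_WARNING` and the `slot > head_slot` condition are assumptions made for illustration, not Lighthouse APIs. Only the warning text and the `slot - 1` fallback come from the diff in this thread.

```rust
/// Hypothetical enum: where block production would take its pre-state from.
#[derive(Debug, PartialEq)]
enum PreStateSource {
    /// Normal path: clone the head state, with its tree hash cache, from the snapshot cache.
    SnapshotCache,
    /// Fallback path: load the state at the given slot (`slot - 1`), as `state_at_slot` does.
    StateAtSlot(u64),
}

/// Warning text taken from the diff; the constant name is hypothetical.
const ORPHAN_WARNING: &str = "this block is more likely to be orphaned";

/// Chooses the pre-state source and an optional warning in one place.
/// Assumption: the fallback applies whenever `slot` is not after `head_slot`.
fn select_pre_state(slot: u64, head_slot: u64) -> (PreStateSource, Option<&'static str>) {
    if slot > head_slot {
        (PreStateSource::SnapshotCache, None)
    } else {
        // `saturating_sub` avoids underflow at slot 0 (genesis).
        (PreStateSource::StateAtSlot(slot.saturating_sub(1)), Some(ORPHAN_WARNING))
    }
}

fn main() {
    // Producing on top of the head: fast path, no warning.
    assert_eq!(select_pre_state(101, 100), (PreStateSource::SnapshotCache, None));

    // Producing at a slot the head already occupies: fall back to `slot - 1` and warn.
    let (source, warning) = select_pre_state(100, 100);
    assert_eq!(source, PreStateSource::StateAtSlot(99));
    if let Some(msg) = warning {
        println!("WARN: {msg}, slot: 100");
    }
}
```

Keeping the decision pure (no I/O, no logging inside) makes it trivial to unit-test, while the caller stays responsible for emitting the warning and loading the state.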


bors r+

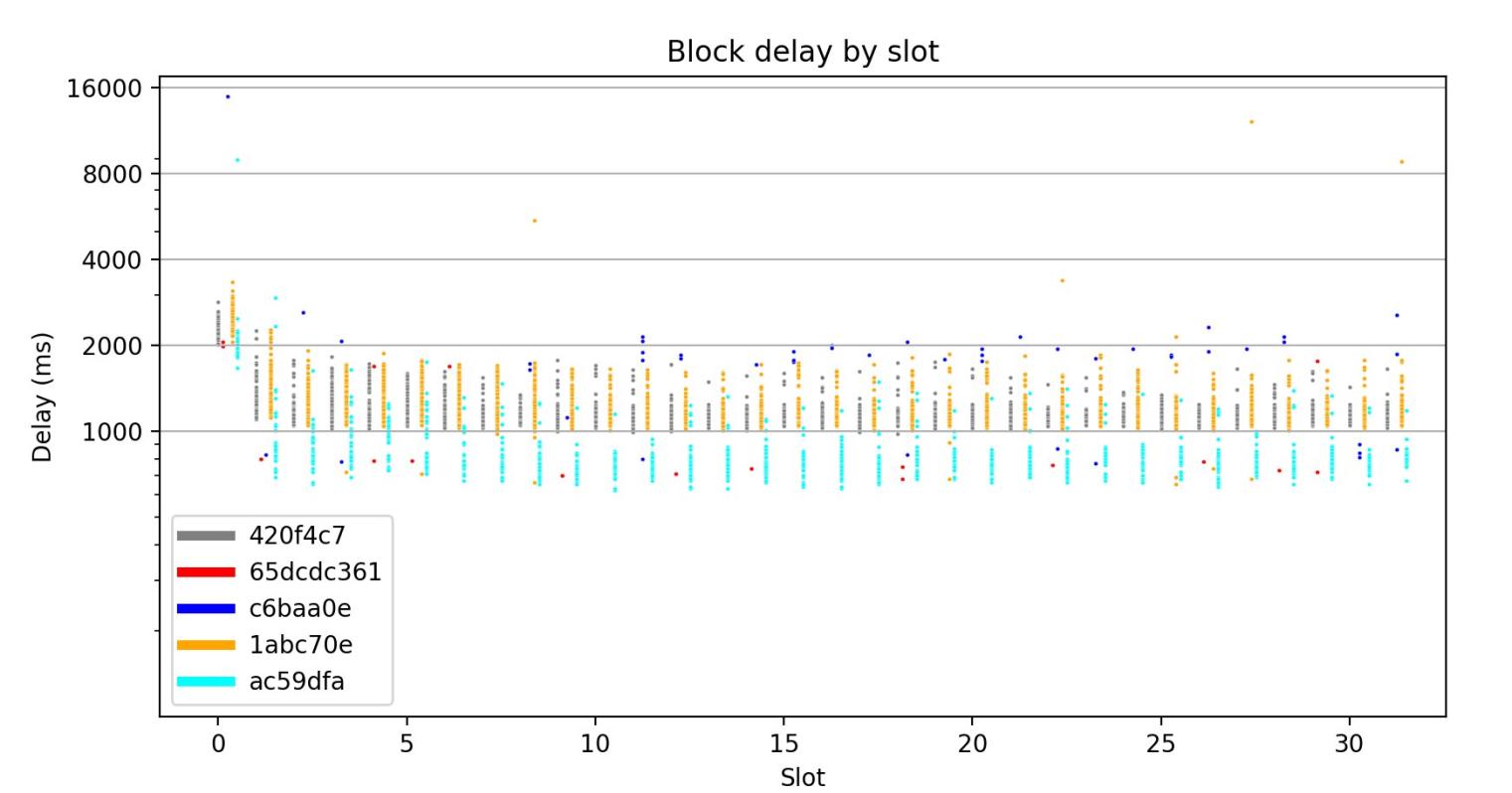

## Proposed Changes `@potuz` on the Eth R&D Discord observed that Lighthouse blocks on Pyrmont were always arriving at other nodes after at least 1 second. Part of this could be due to processing and slow propagation, but metrics also revealed that the Lighthouse nodes were usually taking 400-600ms to even just produce a block before broadcasting it. I tracked the slowness down to the lack of a pre-built tree hash cache (THC) on the states being used for block production. This was due to using the head state for block production, which lacks a THC in order to keep fork choice fast (cloning a THC takes at least 30ms for 100k validators). This PR modifies block production to clone a state from the snapshot cache rather than the head, which speeds things up by 200-400ms by avoiding the tree hash cache rebuild. In practice this seems to have cut block production time down to 300ms or less. Ideally we could _remove_ the snapshot from the cache (and save the 30ms), but it is required for when we re-process the block after signing it with the validator client. ## Alternatives I experimented with 2 alternatives to this approach, before deciding on it: * Alternative 1: ensure the `head` has a tree hash cache. This is too slow, as it imposes a +30ms hit on fork choice, which currently takes ~5ms (with occasional spikes). * Alternative 2: use `Arc<BeaconSnapshot>` in the snapshot cache and share snapshots between the cache and the `head`. This made fork choice blazing fast (1ms), and block production the same as in this PR, but had a negative impact on block processing which I don't think is worth it. It ended up being necessary to clone the full state from the snapshot cache during block production, imposing the +30ms penalty there _as well_ as in block production. In contract, the approach in this PR should only impact block production, and it improves it! 
Yay for pareto improvements 🎉 ## Additional Info This commit (ac59dfa) is currently running on all the Lighthouse Pyrmont nodes, and I've added a dashboard to the Pyrmont grafana instance with the metrics. In future work we should optimise the attestation packing, which consumes around 30-60ms and is now a substantial contributor to the total.

|

Pull request successfully merged into unstable. Build succeeded.


This branch can potentially be deleted :)


done 😇

Proposed Changes

@potuz on the Eth R&D Discord observed that Lighthouse blocks on Pyrmont were always arriving at other nodes after at least 1 second. Part of this could be due to processing and slow propagation, but metrics also revealed that the Lighthouse nodes were usually taking 400-600ms just to produce a block before broadcasting it.

I tracked the slowness down to the lack of a pre-built tree hash cache (THC) on the states being used for block production. This was due to using the head state for block production, which lacks a THC in order to keep fork choice fast (cloning a THC takes at least 30ms for 100k validators).

This PR modifies block production to clone a state from the snapshot cache rather than the head, which speeds things up by 200-400ms by avoiding the tree hash cache rebuild. In practice this seems to have cut block production time down to 300ms or less. Ideally we could remove the snapshot from the cache (and save the 30ms), but it is still needed when we re-process the block after signing it with the validator client.
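Why a cache-less head state is slow to produce from can be shown with a toy model (not Lighthouse code). `ToyState`, `toy_hash` and `tree_hash_root` are hypothetical names; std's `DefaultHasher` stands in for SHA-256, and leaves are folded linearly rather than into a real Merkle tree. The point is the one made in the PR description: a state cloned together with its cache only re-hashes what changed, while a state without a cache, like the head state, must hash every leaf.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Toy hash standing in for SHA-256 tree hashing (illustration only).
fn toy_hash<T: Hash>(value: T) -> u64 {
    let mut h = DefaultHasher::new();
    value.hash(&mut h);
    h.finish()
}

/// Hypothetical, heavily simplified state: one "validator balance" per leaf.
#[derive(Clone)]
struct ToyState {
    balances: Vec<u64>,
    /// Cached (balance, leaf_hash) pairs. `None` models a state with no tree hash
    /// cache, like the head state that is kept cache-free so fork choice stays fast.
    cache: Option<Vec<(u64, u64)>>,
}

impl ToyState {
    /// Returns (root, number of leaf hashes computed), refreshing the cache.
    fn tree_hash_root(&mut self) -> (u64, usize) {
        let mut leaf_hashes = 0;
        let old = self.cache.take().unwrap_or_default();
        let mut new_cache = Vec::with_capacity(self.balances.len());
        for (i, &bal) in self.balances.iter().enumerate() {
            match old.get(i) {
                // Cache hit: leaf unchanged, reuse its hash.
                Some(&(cached_bal, leaf)) if cached_bal == bal => new_cache.push((bal, leaf)),
                // Cache miss, or no cache at all: hash the leaf.
                _ => {
                    leaf_hashes += 1;
                    new_cache.push((bal, toy_hash((i, bal))));
                }
            }
        }
        // Fold the leaves into one root (a linear fold, not a real Merkle tree).
        let root = new_cache.iter().fold(0u64, |acc, &(_, leaf)| toy_hash((acc, leaf)));
        self.cache = Some(new_cache);
        (root, leaf_hashes)
    }
}

fn main() {
    let balances: Vec<u64> = (0..100_000).collect();

    // Snapshot-cache state: same data, cache warmed once.
    let mut snapshot_state = ToyState { balances: balances.clone(), cache: None };
    snapshot_state.tree_hash_root();
    // Head state: no cache.
    let head_state = ToyState { balances, cache: None };

    // "Block production": clone a state, change one balance, compute the root.
    let mut from_head = head_state.clone();
    from_head.balances[7] += 1;
    let (root_a, work_a) = from_head.tree_hash_root();

    let mut from_snapshot = snapshot_state.clone();
    from_snapshot.balances[7] += 1;
    let (root_b, work_b) = from_snapshot.tree_hash_root();

    assert_eq!(root_a, root_b); // same root either way
    println!("leaf hashes without cache: {work_a}, with cache: {work_b}");
}
```

Running `main` reports 100000 leaf hashes for the cache-less clone and 1 for the cached clone, with identical roots. Cloning the cache itself is not free (the PR cites at least 30ms for 100k validators), which is why the head state does not carry one.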

Alternatives

I experimented with two alternatives before settling on this approach:

* Alternative 1: ensure the `head` has a tree hash cache. This is too slow, as it imposes a +30ms hit on fork choice, which currently takes ~5ms (with occasional spikes).
* Alternative 2: use `Arc<BeaconSnapshot>` in the snapshot cache and share snapshots between the cache and the `head`. This made fork choice blazing fast (1ms) and block production the same as in this PR, but had a negative impact on block processing, which I don't think is worth it. It ended up being necessary to clone the full state from the snapshot cache during block processing, imposing the +30ms penalty there as well as in block production.

In contrast, the approach in this PR should only impact block production, and it improves it! Yay for Pareto improvements 🎉
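The cost of Alternative 2 can be sketched with `std::sync::Arc::try_unwrap`: when the snapshot cache and the `head` both hold the same `Arc`, code that needs an owned, mutable state cannot move it out and has to deep-clone it instead. `ToySnapshot` and `take_owned` are hypothetical names, and tying the penalty to this exact pattern is an assumption for illustration, not a description of Lighthouse's code.

```rust
use std::sync::Arc;

/// Hypothetical stand-in for `BeaconSnapshot`: a block root plus a large state.
#[derive(Clone)]
struct ToySnapshot {
    block_root: u64,
    state: Vec<u64>, // imagine ~100k validators plus a tree hash cache here
}

/// Returns an owned snapshot and whether a deep clone was needed.
/// A uniquely owned `Arc` is unwrapped for free; a shared one must be cloned.
fn take_owned(snapshot: Arc<ToySnapshot>) -> (ToySnapshot, bool) {
    match Arc::try_unwrap(snapshot) {
        Ok(owned) => (owned, false),
        Err(shared) => ((*shared).clone(), true),
    }
}

fn main() {
    let cached = Arc::new(ToySnapshot { block_root: 1, state: vec![0; 100_000] });

    // Alternative 2: the `head` shares the snapshot with the cache.
    let head = Arc::clone(&cached);

    // Taking an owned state while `head` still holds a reference forces a full clone.
    let (_, cloned) = take_owned(cached);
    assert!(cloned);

    // The other reference was dropped inside `take_owned`, so `head` is now
    // unique and can be unwrapped without copying.
    let (owned, cloned) = take_owned(head);
    assert!(!cloned);
    assert_eq!(owned.block_root, 1);
    assert_eq!(owned.state.len(), 100_000);
}
```

Sharing makes the handoff to fork choice cheap, but every consumer that must mutate the state pays for a full copy while another reference is alive.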

Additional Info

This commit (ac59dfa) is currently running on all the Lighthouse Pyrmont nodes, and I've added a dashboard with the metrics to the Pyrmont Grafana instance.

In future work we should optimise the attestation packing, which consumes around 30-60ms and is now a substantial contributor to the total.