Releases: ludwig-ai/ludwig

v0.7.1

What's Changed

- Fixed confidence_penalty by @tgaddair in #3158

- Fixed set explanations by @tgaddair in #3163

- Bump to hummingbird 0.4.8 by @tgaddair in #3165

- Unpin pyarrow by @tgaddair in #3171

- Make Horovod an optional dependency when using Ray by @tgaddair in #3172

- Cherry-pick sample ratio changes by @tgaddair in #3176

- Fix TorchVision channel preprocessing (#3173) by @geoffreyangus in #3178

- Bump Ludwig to v0.7.1 (#3179) by @tgaddair in #3180

Full Changelog: v0.7...v0.7.1

v0.7

Key Highlights

- Pretrained Vision Models: we’ve added 20 additional TorchVision pretrained models as image encoders, including: AlexNet, EfficientNet, MobileNet v3, and GoogLeNet.

- Image Augmentation: Ludwig v0.7 also introduces image augmentation, artificially increasing the size of the training dataset by applying a randomized set of transformations to each batch of images during training.

- 50x Faster Fine-Tuning via Automatic Mixed Precision (AMP) Training, Cached Encoder Embeddings, Approximate Training Set evaluation, and automatic batch sizing by default to maximize throughput.

- New Distributed Training Strategies: Distributed Data Parallel (DDP) and Fully Sharded Data Parallel (FSDP)

- Ray 2.0, 2.1, 2.2 and 2.3 support

- A new Ludwig profiler for benchmarking various CPU/GPU performance metrics, as well as comparing different Ludwig model runs.

- Revamped Ludwig datasets API with an even larger number of datasets out of the box.

- API annotations within Ludwig for contributors and Python users

- Schemification of the entire Ludwig Config object for better validation and checks upfront.
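As a rough sketch of how the fine-tuning speedups above might be combined, here is a config written as a Python dict (Ludwig's Python API accepts dict configs as well as YAML). Apart from batch_size: auto, the key names (use_mixed_precision, trainable, cache_encoder_embeddings) and the bert encoder type are our assumptions about the v0.7 schema, not something stated in these notes. The feature names are hypothetical, so verify everything against the configuration reference for your version.

```python
# Hypothetical v0.7-style fine-tuning config as a Python dict.
# Key names other than batch_size are assumptions; check your version's docs.
config = {
    "input_features": [
        {
            "name": "review_text",  # hypothetical text column
            "type": "text",
            # A frozen pretrained encoder produces the same embeddings every
            # epoch, which is what makes caching them possible.
            "encoder": {"type": "bert", "trainable": False},
            "preprocessing": {"cache_encoder_embeddings": True},
        }
    ],
    "output_features": [{"name": "sentiment", "type": "category"}],
    "trainer": {
        "use_mixed_precision": True,  # automatic mixed precision (AMP)
        "batch_size": "auto",  # automatic batch sizing
    },
}

# With Ludwig installed, the dict would be passed to the Python API, e.g.:
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config)
```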

What's Changed

- Fix ray nightly import by @jppgks in #2196

- Restructured split config and added datetime splitting by @tgaddair in #2132

- enh: Implements InferenceModule as a pipelined module with separate preprocessor, predictor, and postprocessor modules by @brightsparc in #2105

- Explicitly pass data credentials when reading binary files from a RayBackend by @jeffreyftang in #2198

- MlflowCallback: do not end run on_trainer_train_teardown by @jppgks in #2201

- Fail hyperopt with full import error when Ray not installed by @tgaddair in #2203

- Make convert_predictions() backend-aware by @hungcs in #2200

- feat: MVP for explanations using Integrated Gradients from captum by @jppgks in #2205

- [Torchscript] Adds GPU-enabled input types for Vector and Timeseries by @geoffreyangus in #2197

- feat: Added model type GBM (LightGBM tree learner), as an alternative to ECD by @jppgks in #2027

- [Torchscript] Parallelized Text/Sequence Preprocessing by @geoffreyangus in #2206

- feat: Adding feature type shared parameter capability for hyperopt by @arnavgarg1 in #2133

- Bump up version to 0.6.dev. by @justinxzhao in #2209

- Define FloatOrAuto and IntegerOrAuto schema fields, and use them. by @justinxzhao in #2219

- Define a dataclass for parameter metadata. by @justinxzhao in #2218

- Add explicit handling for zero-length image byte buffers to avoid cryptic errors by @jeffreyftang in #2210

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2231

- Create dataset util to form repeatable train/vali/test split by @amholler in #2159

- Bug fix: Use safe rename which works across filesystems when writing checkpoints by @dantreiman in #2225

- Add parameter metadata to the trainer schema. by @justinxzhao in #2224

- Add an explicit call to merge_wtih_defaults() when loading a config from a model directory. by @justinxzhao in #2226

- Fixes flaky test test_datetime_split[dask] by @dantreiman in #2232

- Fixes prediction saving for models with Set output by @geoffreyangus in #2211

- Make ExpectedImpact JSON serializable by @hungcs in #2233

- standardised quotation marks, added missing word by @Marvjowa in #2236

- Add boolean postprocessing to dataset type inference for automl by @magdyksaleh in #2193

- Update get_repeatable_train_val_test_split to handle non-stratified split w/ no existing split by @amholler in #2237

- Update R2 score to handle single sample computation by @arnavgarg1 in #2235

- Input/Output Feature Schema Refactor by @connor-mccorm in #2147

- Fix nan in entmax loss and flaky sparsemax/entmax loss tests by @dantreiman in #2238

- Fix preprocessing dataset split API backwards compatibility upgrade bug. by @justinxzhao in #2239

- Removing duplicates in constants from recent PRs by @arnavgarg1 in #2240

- Add attention scores of the vit encoder as an additional return value by @Dennis-Rall in #2192

- Unnest Audio Feature Preprocessing Config by @connor-mccorm in #2242

- Fixed handling of invalid number values to treat as missing values by @tgaddair in #2247

- Support saving numpy predictions to remote FS by @hungcs in #2245

- Use global constant for description.json by @hungcs in #2246

- Removed import warnings when LightGBM and Ray not requested by @tgaddair in #2249

- Adds ability to read images from numpy files and numpy arrays by @geoffreyangus in #2212

- Hyperopt steps per epoch not being computed correctly by @arnavgarg1 in #2175

- Fixed splitting when providing pre-split inputs by @tgaddair in #2248

- Added Backwards Compatibility for Audio Feature Preprocessing by @connor-mccorm in #2254

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2256

- Fix: Don't skip saving the model if the save path already exists. by @justinxzhao in #2264

- Load best weights outside of finally block, since load may throw an exception by @dantreiman in #2268

- Reduce number of distributed tests. by @justinxzhao in #2270

- [WIP] Adds inference_utils.py by @geoffreyangus in #2213

- Run github checks for pushes and merges to *-stable. by @justinxzhao in #2266

- Add ludwig logo and version to CLI help text. by @justinxzhao in #2258

- Add hyperopt_statistics.json constant by @hungcs in #2276

- fix: Make BaseTrainerConfig an abstract class by @ksbrar in #2273

- [Torchscript] Adds --device argument to export_torchscript CLI command by @geoffreyangus in #2275

- Use pytest tmpdir fixture wherever temporary directories are used in tests. by @justinxzhao in #2274

- adding configs used in benchmarking by @abidwael in #2263

- Fixes #2279 by @noahlh in #2284

- adding hardware usage and software packages tracker by @abidwael in #2195

- benchmarking utils by @abidwael in #2260

- dataclasses for summarizing benchmarking results by @abidwael in #2261

- Benchmarking core by @abidwael in #2262

- Fixed default eval_batch_size when setting batch_size=auto by @tgaddair in #2286

- Remove obsolete postprocess_inference_graph function. by @justinxzhao in #2267

- [Torchscript] Adds BERT tokenizer + partial HF tokenizer support by @geoffreyangus in #2272

- Support passing ground_truth as df for visualizations by @hungcs in #2281

- catching urllib3 exception by @abidwael in #2294

- Run pytest workflow on release branches. by @justinxzhao in #2291

- Save checkpoint if train_steps is smaller than batcher's steps_per_epoch by @dantreiman in #2298

- Fix typo in amazon review datasets: s/review_tile/review_title by @dantreiman in #2300

- Refactor non-distributed automl utils into a separate directory. by @justinxzhao in #2296

- Don't skip normalization in TabNet during inference on a single row. by @dantreiman in #2299

- Fix error in postproc_predictions calculation in model.evaluate() by @arnavgarg1 in #2304

- Test for parameter updates in Ludwig components by @jimthompson5802 in #2194

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in https://github.com/ludwig-ai/lu...

v0.7.beta

What's Changed

- Fix ray nightly import by @jppgks in #2196

- Restructured split config and added datetime splitting by @tgaddair in #2132

- enh: Implements InferenceModule as a pipelined module with separate preprocessor, predictor, and postprocessor modules by @brightsparc in #2105

- Explicitly pass data credentials when reading binary files from a RayBackend by @jeffreyftang in #2198

- MlflowCallback: do not end run on_trainer_train_teardown by @jppgks in #2201

- Fail hyperopt with full import error when Ray not installed by @tgaddair in #2203

- Make convert_predictions() backend-aware by @hungcs in #2200

- feat: MVP for explanations using Integrated Gradients from captum by @jppgks in #2205

- [Torchscript] Adds GPU-enabled input types for Vector and Timeseries by @geoffreyangus in #2197

- feat: Added model type GBM (LightGBM tree learner), as an alternative to ECD by @jppgks in #2027

- [Torchscript] Parallelized Text/Sequence Preprocessing by @geoffreyangus in #2206

- feat: Adding feature type shared parameter capability for hyperopt by @arnavgarg1 in #2133

- Bump up version to 0.6.dev. by @justinxzhao in #2209

- Define FloatOrAuto and IntegerOrAuto schema fields, and use them. by @justinxzhao in #2219

- Define a dataclass for parameter metadata. by @justinxzhao in #2218

- Add explicit handling for zero-length image byte buffers to avoid cryptic errors by @jeffreyftang in #2210

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2231

- Create dataset util to form repeatable train/vali/test split by @amholler in #2159

- Bug fix: Use safe rename which works across filesystems when writing checkpoints by @dantreiman in #2225

- Add parameter metadata to the trainer schema. by @justinxzhao in #2224

- Add an explicit call to merge_wtih_defaults() when loading a config from a model directory. by @justinxzhao in #2226

- Fixes flaky test test_datetime_split[dask] by @dantreiman in #2232

- Fixes prediction saving for models with Set output by @geoffreyangus in #2211

- Make ExpectedImpact JSON serializable by @hungcs in #2233

- standardised quotation marks, added missing word by @Marvjowa in #2236

- Add boolean postprocessing to dataset type inference for automl by @magdyksaleh in #2193

- Update get_repeatable_train_val_test_split to handle non-stratified split w/ no existing split by @amholler in #2237

- Update R2 score to handle single sample computation by @arnavgarg1 in #2235

- Input/Output Feature Schema Refactor by @connor-mccorm in #2147

- Fix nan in entmax loss and flaky sparsemax/entmax loss tests by @dantreiman in #2238

- Fix preprocessing dataset split API backwards compatibility upgrade bug. by @justinxzhao in #2239

- Removing duplicates in constants from recent PRs by @arnavgarg1 in #2240

- Add attention scores of the vit encoder as an additional return value by @Dennis-Rall in #2192

- Unnest Audio Feature Preprocessing Config by @connor-mccorm in #2242

- Fixed handling of invalid number values to treat as missing values by @tgaddair in #2247

- Support saving numpy predictions to remote FS by @hungcs in #2245

- Use global constant for description.json by @hungcs in #2246

- Removed import warnings when LightGBM and Ray not requested by @tgaddair in #2249

- Adds ability to read images from numpy files and numpy arrays by @geoffreyangus in #2212

- Hyperopt steps per epoch not being computed correctly by @arnavgarg1 in #2175

- Fixed splitting when providing pre-split inputs by @tgaddair in #2248

- Added Backwards Compatibility for Audio Feature Preprocessing by @connor-mccorm in #2254

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2256

- Fix: Don't skip saving the model if the save path already exists. by @justinxzhao in #2264

- Load best weights outside of finally block, since load may throw an exception by @dantreiman in #2268

- Reduce number of distributed tests. by @justinxzhao in #2270

- [WIP] Adds inference_utils.py by @geoffreyangus in #2213

- Run github checks for pushes and merges to *-stable. by @justinxzhao in #2266

- Add ludwig logo and version to CLI help text. by @justinxzhao in #2258

- Add hyperopt_statistics.json constant by @hungcs in #2276

- fix: Make BaseTrainerConfig an abstract class by @ksbrar in #2273

- [Torchscript] Adds --device argument to export_torchscript CLI command by @geoffreyangus in #2275

- Use pytest tmpdir fixture wherever temporary directories are used in tests. by @justinxzhao in #2274

- adding configs used in benchmarking by @abidwael in #2263

- Fixes #2279 by @noahlh in #2284

- adding hardware usage and software packages tracker by @abidwael in #2195

- benchmarking utils by @abidwael in #2260

- dataclasses for summarizing benchmarking results by @abidwael in #2261

- Benchmarking core by @abidwael in #2262

- Fixed default eval_batch_size when setting batch_size=auto by @tgaddair in #2286

- Remove obsolete postprocess_inference_graph function. by @justinxzhao in #2267

- [Torchscript] Adds BERT tokenizer + partial HF tokenizer support by @geoffreyangus in #2272

- Support passing ground_truth as df for visualizations by @hungcs in #2281

- catching urllib3 exception by @abidwael in #2294

- Run pytest workflow on release branches. by @justinxzhao in #2291

- Save checkpoint if train_steps is smaller than batcher's steps_per_epoch by @dantreiman in #2298

- Fix typo in amazon review datasets: s/review_tile/review_title by @dantreiman in #2300

- Refactor non-distributed automl utils into a separate directory. by @justinxzhao in #2296

- Don't skip normalization in TabNet during inference on a single row. by @dantreiman in #2299

- Fix error in postproc_predictions calculation in model.evaluate() by @arnavgarg1 in #2304

- Test for parameter updates in Ludwig components by @jimthompson5802 in #2194

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2311

- Use warnings to suppress repeated logs for failed image reads by @arnavgarg1 in #2312

- Use ray dataset and drop type casting in binary_feature prediction post processing for speedup by @magdyksaleh in #2293

- Add size_bytes to DatasetInfo and DataSource by @jeffreyftang in #2306

- Fixes TensorDtype TypeError in Ray nightly by @geoffreyangus in #2320

- Add configuration section for global feature parameters by @arnavgarg1 in #2208

- Ensures unit tests are deleting artifacts during teardown by @geoffreyangus in #2310

- Fixes unit test that had empty Dask partitions after splitting by @geoffreyangus in #2313

- Serve json numpy encoding by @jeffkinnison in #2316

- fix: Mlflow config being injected in hyperopt config by @hungcs in #2321

- Update tests that use preprocessing to match new defaults config structure by @arn...

v0.6.4

What's Changed

- Field fix: by @connor-mccorm in #2714

- AUTO: by @tgaddair in #2719

- Bump Ludwig to 0.6.4 by @arnavgarg1 in #2720

Full Changelog: v0.6.3...v0.6.4

v0.6.3

What's Changed

- Cherry-pick remote file syncing with hyperopt by @tgaddair in #2644

- AUTO: by @tgaddair in #2646

- Cherry-pick bb8bef0 by @tgaddair in #2651

- Cherry-pick: Ensure no ghost ray instances are running in tests (#2607) by @arnavgarg1 in #2654

- AUTO: by @tgaddair in #2660

- AUTO: by @tgaddair in #2677

- Update version to v0.6.3 by @justinxzhao in #2682

Full Changelog: v0.6.2...v0.6.3

v0.6.2

What's Changed

- AUTO: by @tgaddair in #2594

- AUTO: by @tgaddair in #2602

- 0.6.2: cherry-pick Explanation API by @jppgks in #2604

- AUTO: by @tgaddair in #2609

- AUTO: by @tgaddair in #2608

- AUTO: by @tgaddair in #2613

- AUTO: by @tgaddair in #2618

- AUTO: by @tgaddair in #2634

- Cherrypick: feat: adds max_batch_size to auto batch size functionality by @justinxzhao in #2632

- AUTO: by @tgaddair in #2636

- Update version to 0.6.2 by @justinxzhao in #2624

Full Changelog: v0.6.1...v0.6.2

v0.6.1

What's Changed

- Cherry pick hyperopt plots by @arnavgarg1 in #2567

- AUTO: by @tgaddair in #2571

- AUTO: by @tgaddair in #2572

- fix: Limit frequency array to top_n_classes in F1 viz (#2565) by @hungcs in #2575

- AUTO: by @tgaddair in #2581

- AUTO: by @tgaddair in #2583

- AUTO: by @tgaddair in #2590

- Cherrypick: Comprehensive Configs by @justinxzhao in #2580

- AUTO: by @tgaddair in #2591

- Update version to 6.1 by @justinxzhao in #2582

- Readme fixes by @justinxzhao in #2592

Full Changelog: v0.6...v0.6.1

v0.6 - Gradient Boosted Models, Schema Validation, and Pipelined TorchScript

Overview

Ludwig 0.6 introduces several exciting features focused on modeling, deployment, and testing that make it more flexible, reliable, and easy to use in production.

- Gradient boosted models: Historically, Ludwig has been built around a single, flexible neural network architecture called ECD (for Encoder-Combiner-Decoder). With the release of 0.6 we are adding support for a different model architecture: gradient-boosted tree models (GBMs).

- Richer configuration schema and validation: We formalized the schema of Ludwig configurations and now validate it before initialization, which can help you avoid mistakes like typos and syntax errors.

- Probability calibration for binary and multi-class classification: With deep neural networks, the probabilities given by models often don't match the true likelihood of the data. Ludwig now supports temperature scaling calibration (On Calibration of Modern Neural Networks), which brings class probabilities closer to their true likelihoods in the validation set.

- Pipelined TorchScript: We improved the TorchScript model export functionality, making it easier than ever to train and deploy models for high performance inference.

- Model parameter update unit tests: Code that updates the parameters of deep neural networks can be complex enough that it is hard for developers to verify that every model parameter is actually updated. To address this difficulty and improve the robustness of our models, we implemented a reusable utility that checks that parameters are updated during one cycle of a forward pass, backward pass, and optimizer step.
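The idea behind these tests can be illustrated without PyTorch. The following is a toy, standard-library analogue, not Ludwig's actual test utility: a hypothetical two-parameter linear model with hand-derived gradients goes through one forward pass, backward pass, and SGD step, and any parameter whose value did not change is reported.

```python
def forward(params, x):
    """Toy linear model: prediction = w * x + b."""
    return params["w"] * x + params["b"]


def backward(params, x, y):
    """Hand-derived gradients of the squared error (prediction - y) ** 2."""
    error = forward(params, x) - y
    return {"w": 2 * error * x, "b": 2 * error}


def sgd_step(params, grads, lr=0.1):
    """One plain SGD update; returns new parameters and leaves the input untouched."""
    return {name: value - lr * grads[name] for name, value in params.items()}


def parameters_not_updated(params, x, y):
    """Run one forward/backward/optimizer cycle; return names of unchanged parameters."""
    updated = sgd_step(params, backward(params, x, y))
    return [name for name in params if updated[name] == params[name]]
```

With x = 0.0 the gradient for w is zero, so parameters_not_updated({"w": 0.5, "b": 0.0}, x=0.0, y=3.0) returns ["w"]: exactly the kind of silently frozen parameter such a test is meant to catch.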

Additional improvements include a new global configuration section, time-based dataset splitting and more flexible hyperparameter optimization configurations. Read more about each specific feature below.

If you are learning about Ludwig for the first time, or if these new features are relevant and exciting to your research or application, we'd love to hear from you. Join our Ludwig Slack Community here.

Gradient Boosted Models (@jppgks)

Historically, Ludwig has been built around a single, flexible neural network architecture called ECD (for Encoder-Combiner-Decoder). With the release of 0.6 we are adding support for a different model architecture: gradient-boosted tree models (GBMs).

This is motivated by the fact that tree models still outperform neural networks on some tabular datasets, and the fact that tree models are generally less compute-intensive, making them a better choice for some applications. In Ludwig, users can now experiment with both neural and tree-based architectures within the same framework, taking advantage of all the additional functionality and conveniences that Ludwig offers, such as preprocessing, hyperparameter optimization, integration with different backends (local, ray, horovod), and interoperability with different data sources (pandas, dask, modin).

How to use it

Install the tree extra package with pip install ludwig[tree]. After the installation, you can use the new gbm model type in the configuration. Ludwig will default to using the ECD architecture, which can be overridden as follows to use GBM:
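A minimal sketch of that override, written as a Python dict (the same structure can be written in YAML). It assumes the switch is a top-level model_type key set to gbm, with ecd as the default. The feature names and the train.csv path are hypothetical, and the inputs are kept to number, category, and binary types with a single output target.

```python
# Minimal GBM config as a Python dict; feature names are hypothetical.
# Requires the tree extra: pip install ludwig[tree]
config = {
    "model_type": "gbm",  # overrides the default "ecd" architecture
    "input_features": [
        {"name": "age", "type": "number"},
        {"name": "workclass", "type": "category"},
        {"name": "has_capital_gain", "type": "binary"},
    ],
    "output_features": [{"name": "income_over_50k", "type": "binary"}],
}

# With Ludwig installed, the config would be used like any other, e.g.:
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config)
#   model.train(dataset="train.csv")  # hypothetical dataset path
```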

In some initial benchmarking we found that GBMs are particularly performant on smaller tabular datasets and can sometimes handle class imbalance better than neural networks. Stay tuned for a more in-depth blog post on the topic. Like the ECD neural networks, GBMs can be sensitive to hyperparameter values, and hyperparameter tuning is important to get a well-performing model.

Under the hood, Ludwig uses LightGBM for training gradient-boosted tree models, and the LightGBM trainer parameters can be configured in the trainer section of the configuration. For serving, the LightGBM model is converted to a PyTorch graph using Hummingbird for efficient evaluation and inference.

Limitations

Ludwig's initial support for GBM is limited to tabular data (binary, categorical and numeric features) with a single output feature target.

Calibrating probabilities for category and binary output features (@dantreiman)

Suppose your model outputs a class probability of 90%. Is there a 90% chance that the model prediction is correct? Do the probabilities given by your model match the true likelihood of the data? With deep neural networks, they often don't.

Drawing on the methods described in On Calibration of Modern Neural Networks (Chuan Guo, Geoff Pleiss, Yu Sun, Kilian Q. Weinberger), Ludwig now supports temperature scaling for binary and category output features. Temperature scaling brings a model's output probabilities closer to the true likelihood while preserving the same accuracy and top k predictions.

How to use Calibration

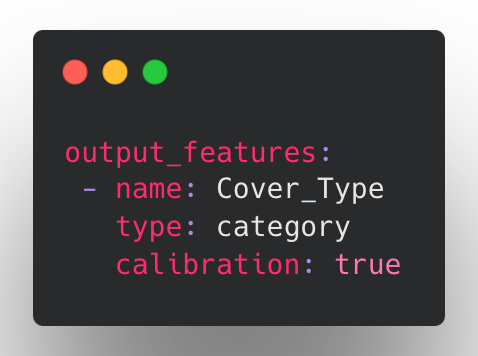

To enable calibration, add calibration: true to any binary or category output feature configuration:
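For example, a category output feature with calibration turned on could be written like this as a Python dict (YAML follows the same structure). Only the calibration key comes from the text; the feature names are hypothetical, loosely modeled on the forest cover dataset.

```python
# Category output feature with temperature-scaling calibration enabled.
# Feature names are hypothetical.
output_feature = {
    "name": "cover_type",
    "type": "category",
    "calibration": True,
}

config = {
    "input_features": [{"name": "elevation", "type": "number"}],
    "output_features": [output_feature],
}
```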

With calibration enabled, Ludwig will find a scale factor (temperature) which will bring the class probabilities closer to their true likelihoods in the validation set. The calibration scale factor is determined in a short phase after training is complete. If no validation split is provided, the training set is used instead.
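The temperature search can be sketched in a few lines of standard-library Python. Under temperature scaling, each logit vector z is divided by a single scalar T before the softmax, p_i = exp(z_i / T) / sum_j exp(z_j / T), and T is chosen to minimize the negative log-likelihood on held-out data. Because dividing by a positive T never reorders the logits, the top predictions (and therefore accuracy) are unchanged. This sketch uses a plain grid search and divides by T, so T > 1 softens over-confident outputs (equivalent to multiplying the logits by a scale factor below 1); Ludwig's own implementation and optimizer may differ.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mean_nll(all_logits, labels, temperature):
    """Average negative log-likelihood of the true labels at one temperature."""
    total = 0.0
    for logits, label in zip(all_logits, labels):
        total -= math.log(softmax(logits, temperature)[label])
    return total / len(labels)


def fit_temperature(all_logits, labels, candidates=None):
    """Grid-search the temperature that minimizes validation NLL."""
    if candidates is None:
        candidates = [0.05 * k for k in range(1, 101)]  # 0.05 to 5.0
    return min(candidates, key=lambda t: mean_nll(all_logits, labels, t))
```

With validation logits [[4, 0], [4, 0], [0, 4], [4, 0]] and labels [0, 0, 1, 1] (three confident correct predictions and one confident mistake), the NLL-optimal temperature is 4 / ln 3, about 3.64, so the fitted T softens the probabilities while every argmax prediction stays the same.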

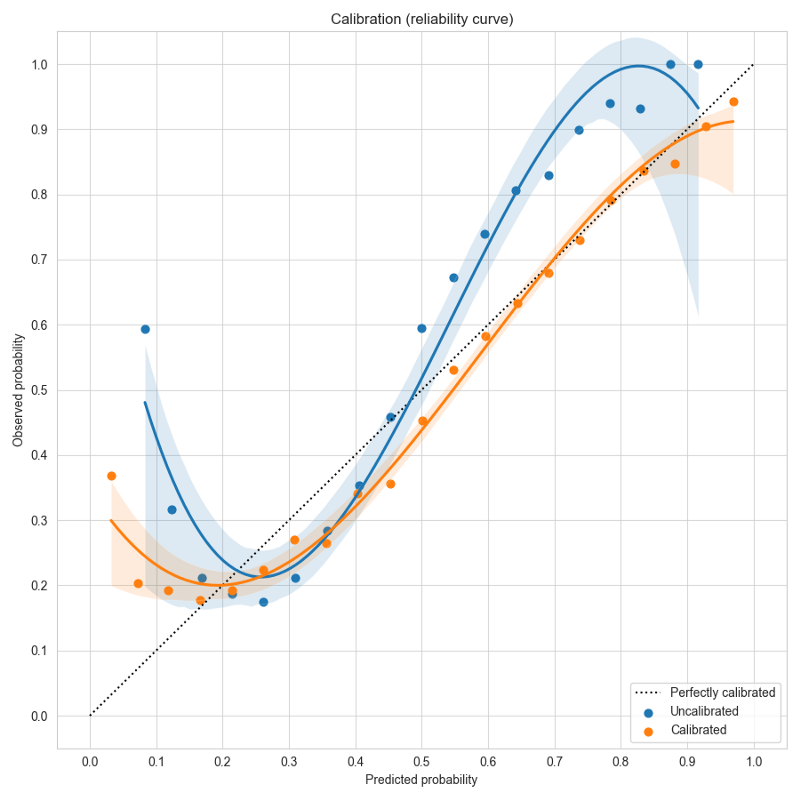

To visualize the effects of calibration in Ludwig, you can use Calibration Plots, which bin the data based on model probability and plot the model probability (X) versus observed (Y) for each bin (see code examples).

In a perfectly calibrated model, the observed probability equals the predicted probability, and all predictions will land on the dotted line y=x. In this example using the forest cover dataset, the uncalibrated model in blue gives over-confident predictions near the left and right edges close to probability values of 0 or 1. Temperature scaling learns a scale factor of 0.51 which improves the calibration curve in orange, moving it closer to y=x.
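The binning behind a calibration plot is simple to sketch. The function below is a minimal illustration, not Ludwig's visualization code: it assumes binary outcomes (0 or 1) and equal-width probability bins, and returns one (mean predicted probability, observed frequency) point per non-empty bin. For a category feature one would apply it per class, one-vs-rest.

```python
def calibration_curve(probs, outcomes, n_bins=10):
    """Return (mean predicted probability, observed frequency) for each non-empty bin.

    probs are predicted probabilities of the positive class in [0, 1];
    outcomes are the matching 0/1 labels. Bins are equal-width.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        index = min(int(p * n_bins), n_bins - 1)  # put p == 1.0 in the last bin
        bins[index].append((p, y))
    points = []
    for members in bins:
        if members:
            mean_predicted = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            points.append((mean_predicted, observed))
    return points
```

For a perfectly calibrated model every point lies on y = x; an over-confident model shows points below the line at high predicted probabilities and above it at low ones.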

Limitations

Calibration is currently limited to models with binary and category output features.

Richer configuration schema and validation (@connor-mccorm @ksbrar @justinxzhao)

Ludwig configurations are flexible by design, as they internally map to Python function signatures. This allows for expressive configurations with many parameters for users to play with, but we found that users could too easily make mistakes in their configs, such as typos, incorrect value types, or other syntactic inconsistencies, that were hard to catch.

We have now formalized the Ludwig config with a strongly typed schema, serving as a centralized source of truth for parameter documentation and config validation. Ludwig validation now explicitly restricts each parameter's values to valid ones, decreasing the chance of syntactical and logical errors and signaling immediately to the user where the issues lie, before processing data or starting training. Schemas also provide many future benefits including autocompletion.
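To illustrate what validating up front buys, here is a toy validator in plain Python. It is not Ludwig's schema machinery, and the parameter names in the toy schema are illustrative rather than Ludwig's actual trainer schema. Each parameter maps to an expected type and, optionally, a set of allowed values, and every problem is collected and reported before any data is processed.

```python
# Toy schema: parameter -> (expected type, allowed values or None).
# Parameter names are illustrative, not Ludwig's actual trainer schema.
TOY_TRAINER_SCHEMA = {
    "epochs": (int, None),
    "learning_rate": (float, None),
    "optimizer": (str, {"adam", "adamw", "sgd"}),
}


def validate(section, schema):
    """Collect every problem in a config section before any data is processed."""
    errors = []
    for key, value in section.items():
        if key not in schema:
            errors.append(f"unknown parameter {key!r}")
            continue
        expected_type, allowed = schema[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            errors.append(
                f"{key!r} should be {expected_type.__name__}, got {type(value).__name__}"
            )
        elif allowed is not None and value not in allowed:
            errors.append(f"{key!r} must be one of {sorted(allowed)}, got {value!r}")
    return errors
```

A config with a misspelled key, a string learning rate, and a misspelled optimizer yields all three errors at once, instead of failing partway through training.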

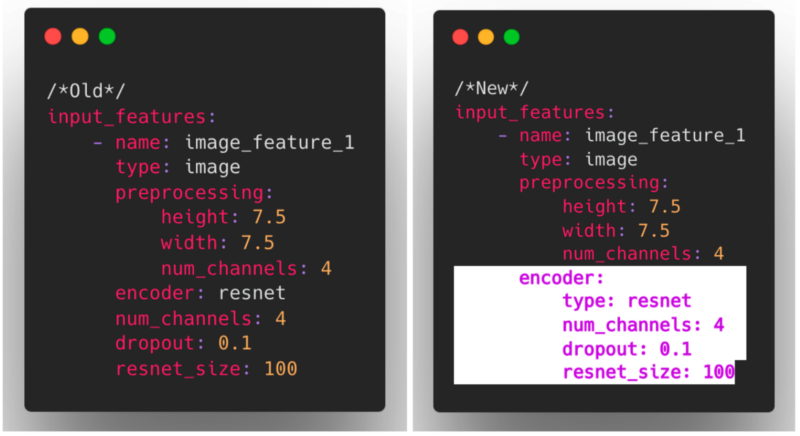

Nested encoder and decoder parameters (@connor-mccorm)

We have also restructured the way that encoders and decoders are configured to now use a nested structure, consistent with other modules in Ludwig such as combiners and loss.

As these changes impact what constitutes a valid Ludwig config, we also introduced a mechanism for ensuring backward compatibility that invisibly and automatically upgrades older configs to the current config structure.
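To make the nesting change concrete, here is a hypothetical sketch of this kind of upgrade applied to a single input feature. It assumes that in the older flat layout the encoder was named by a string and its hyperparameters sat directly on the feature, and the upgrade folds them into an encoder dict. The ENCODER_KEYS set and the upgrade_input_feature helper are illustrative, not Ludwig's actual backward-compatibility code, which covers many more keys and feature types.

```python
# Hypothetical set of keys that move under "encoder" in the nested layout.
ENCODER_KEYS = {"dropout", "embedding_size", "num_layers"}


def upgrade_input_feature(feature):
    """Convert a flat (older-style) input feature dict to the nested layout."""
    if isinstance(feature.get("encoder"), dict):
        return dict(feature)  # already nested: return an unchanged copy
    upgraded = {}
    encoder = {}
    for key, value in feature.items():
        if key == "encoder":
            encoder["type"] = value  # the old string value becomes encoder.type
        elif key in ENCODER_KEYS:
            encoder[key] = value
        else:
            upgraded[key] = value
    if encoder:
        upgraded["encoder"] = encoder
    return upgraded
```

Because an already-nested feature is returned unchanged, the upgrade can safely run on every config that is loaded, old or new.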

We hope that, with the new Ludwig schema and the improved encoder/decoder nesting structure, you find using Ludwig a much more robust and user-friendly experience!

New Defaults Ludwig Section (@arnavgarg1)

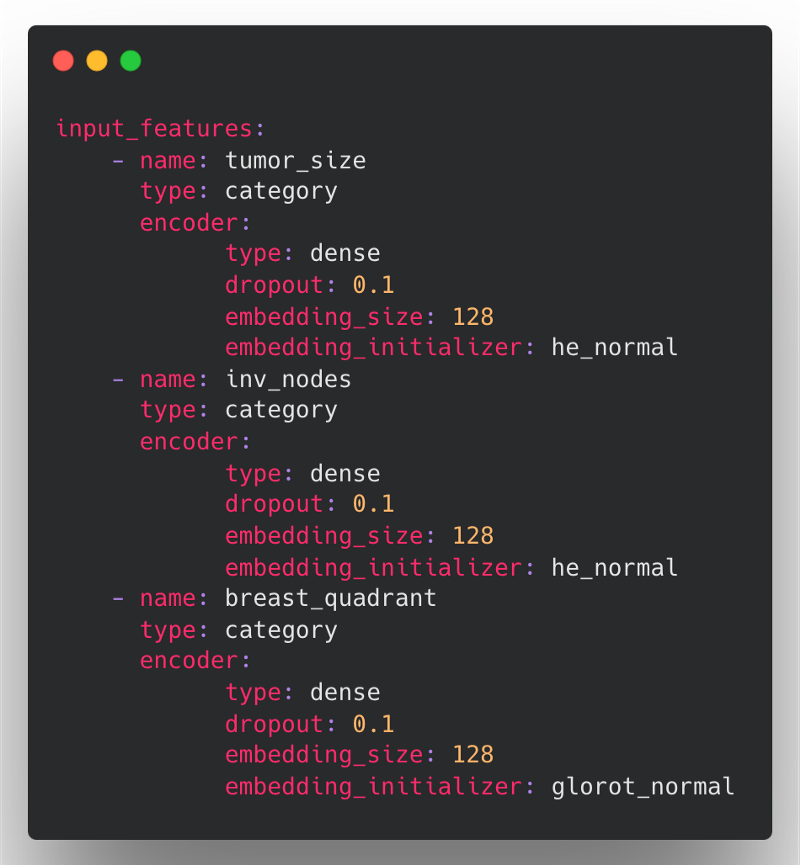

In Ludwig 0.5, users could specify global preprocessing parameters on a per-feature-type basis through the preprocessing section in Ludwig configs. This is useful if users know they always want to apply certain transformations to their data for every feature of the same type. However, there was no equivalent mechanism for global encoder, decoder or loss related parameters.

For example, say we have a mammography dataset to predict breast cancer that contains many categorical features. In Ludwig 0.5, we might define our input features with encoder parameters in the following way:
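A sketch of what this looks like, written as a Python list of feature dicts in the pre-0.6 flat layout. The feature names and encoder values are hypothetical; what matters is that the same three settings (encoder type, dropout, and embedding_size) are spelled out on every categorical feature.

```python
# Hypothetical pre-0.6 (flat) input features for a mammography dataset.
# The same encoder settings are repeated on every categorical feature.
input_features = [
    {"name": "birads", "type": "category", "encoder": "dense", "dropout": 0.2, "embedding_size": 64},
    {"name": "shape", "type": "category", "encoder": "dense", "dropout": 0.2, "embedding_size": 64},
    {"name": "margin", "type": "category", "encoder": "dense", "dropout": 0.2, "embedding_size": 64},
    {"name": "density", "type": "category", "encoder": "dense", "dropout": 0.2, "embedding_size": 64},
]
```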

Here, the problem is that we have to redefine the same encoder parameters (type, dropout, and embedding_size) for each of the input features if we want to override the default value across all categorical features.

In Ludwig 0.6, we are introducing a new defaults section within the Ludwig config to define feature-type defaults for preprocessing, encoders, decoders, and loss. Default preprocessing and encoder configurations will be applied to all input_features of that feature type, while decoder ...
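A sketch of the same mammography setup using a defaults section, expressed as a Python dict. The defaults, then feature type, then encoder nesting follows the description above; the feature names, values, and the apply_defaults helper are hypothetical, and the helper only approximates how such defaults could be resolved (for encoders only, with per-feature settings taking precedence).

```python
import copy

config = {
    "input_features": [
        {"name": "birads", "type": "category"},
        {"name": "shape", "type": "category"},
        {"name": "margin", "type": "category"},
        {"name": "age", "type": "number"},
    ],
    "output_features": [{"name": "severity", "type": "binary"}],
    # Encoder settings stated once for every category feature (values are illustrative).
    "defaults": {
        "category": {"encoder": {"type": "dense", "dropout": 0.2, "embedding_size": 64}},
    },
}


def apply_defaults(config):
    """Hypothetical helper: copy type-level encoder defaults onto each input feature.

    Settings written on the feature itself take precedence over the defaults,
    and the input config is left unmodified.
    """
    resolved = copy.deepcopy(config)
    for feature in resolved["input_features"]:
        type_defaults = resolved.get("defaults", {}).get(feature["type"], {})
        if "encoder" in type_defaults:
            feature["encoder"] = {**type_defaults["encoder"], **feature.get("encoder", {})}
    return resolved
```

After resolution, every category feature carries the shared encoder settings while the number feature is untouched, and a feature that sets its own dropout keeps that value.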

v0.6rc1

What's Changed

- [release-0.6] Cherry-pick bugfixes from upstream by @tgaddair in #2471

- [release-0.6] Cherry-pick upstream commits by @tgaddair in #2473

- [release-0.6] Cherry-pick upstream by @tgaddair in #2476

- Cherry-pick backwards-compatibility fixes by @jeffreyftang in #2487

- [cherry-pick] Fixed usage of checkpoints for AutoML in Ray 2.0 (#2485) by @tgaddair in #2491

- fix: Automatically assign title to OneOfOptionsField (#2480) by @ksbrar in #2492

- [cherry-pick] Fixed stratified splitting with Dask (#1883) by @jppgks in #2494

- AUTO: by @tgaddair in #2505

- AUTO: Enable hyperopt to be launched from a ray client by @tgaddair in #2504

- [cherry-pick] Pin transformers < 4.22 until issues resolved (#2495) by @tgaddair in #2510

- [cherry-pick] Fix flaky ray nightly image test (#2493) by @tgaddair in #2511

- AUTO: by @tgaddair in #2513

- Add in-memory dataset size calculation to dataset statistics and hyperopt (#2509) by @arnavgarg1 in #2518

- AUTO: by @tgaddair in #2521

- AUTO: by @tgaddair in #2528

- AUTO: by @tgaddair in #2534

- Cherrypick: Cleanup: move to per-module loggers instead of the global logging object by @justinxzhao in #2539

- Update version to 0.6rc1. by @justinxzhao in #2529

Full Changelog: v0.6.beta...v0.6rc1

v0.6.beta

What's Changed

- Fix ray nightly import by @jppgks in #2196

- Restructured split config and added datetime splitting by @tgaddair in #2132

- enh: Implements InferenceModule as a pipelined module with separate preprocessor, predictor, and postprocessor modules by @brightsparc in #2105

- Explicitly pass data credentials when reading binary files from a RayBackend by @jeffreyftang in #2198

- MlflowCallback: do not end run on_trainer_train_teardown by @jppgks in #2201

- Fail hyperopt with full import error when Ray not installed by @tgaddair in #2203

- Make convert_predictions() backend-aware by @hungcs in #2200

- feat: MVP for explanations using Integrated Gradients from captum by @jppgks in #2205

- [Torchscript] Adds GPU-enabled input types for Vector and Timeseries by @geoffreyangus in #2197

- feat: Added model type GBM (LightGBM tree learner), as an alternative to ECD by @jppgks in #2027

- [Torchscript] Parallelized Text/Sequence Preprocessing by @geoffreyangus in #2206

- feat: Adding feature type shared parameter capability for hyperopt by @arnavgarg1 in #2133

- Bump up version to 0.6.dev. by @justinxzhao in #2209

- Define FloatOrAuto and IntegerOrAuto schema fields, and use them. by @justinxzhao in #2219

- Define a dataclass for parameter metadata. by @justinxzhao in #2218

- Add explicit handling for zero-length image byte buffers to avoid cryptic errors by @jeffreyftang in #2210

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2231

- Create dataset util to form repeatable train/vali/test split by @amholler in #2159

- Bug fix: Use safe rename which works across filesystems when writing checkpoints by @dantreiman in #2225

- Add parameter metadata to the trainer schema. by @justinxzhao in #2224

- Add an explicit call to merge_wtih_defaults() when loading a config from a model directory. by @justinxzhao in #2226

- Fixes flaky test test_datetime_split[dask] by @dantreiman in #2232

- Fixes prediction saving for models with Set output by @geoffreyangus in #2211

- Make ExpectedImpact JSON serializable by @hungcs in #2233

- standardised quotation marks, added missing word by @Marvjowa in #2236

- Add boolean postprocessing to dataset type inference for automl by @magdyksaleh in #2193

- Update get_repeatable_train_val_test_split to handle non-stratified split w/ no existing split by @amholler in #2237

- Update R2 score to handle single sample computation by @arnavgarg1 in #2235

- Input/Output Feature Schema Refactor by @connor-mccorm in #2147

- Fix nan in entmax loss and flaky sparsemax/entmax loss tests by @dantreiman in #2238

- Fix preprocessing dataset split API backwards compatibility upgrade bug. by @justinxzhao in #2239

- Removing duplicates in constants from recent PRs by @arnavgarg1 in #2240

- Add attention scores of the vit encoder as an additional return value by @Dennis-Rall in #2192

- Unnest Audio Feature Preprocessing Config by @connor-mccorm in #2242

- Fixed handling of invalid number values to treat as missing values by @tgaddair in #2247

- Support saving numpy predictions to remote FS by @hungcs in #2245

- Use global constant for description.json by @hungcs in #2246

- Removed import warnings when LightGBM and Ray not requested by @tgaddair in #2249

- Adds ability to read images from numpy files and numpy arrays by @geoffreyangus in #2212

- Hyperopt steps per epoch not being computed correctly by @arnavgarg1 in #2175

- Fixed splitting when providing pre-split inputs by @tgaddair in #2248

- Added Backwards Compatibility for Audio Feature Preprocessing by @connor-mccorm in #2254

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2256

- Fix: Don't skip saving the model if the save path already exists. by @justinxzhao in #2264

- Load best weights outside of finally block, since load may throw an exception by @dantreiman in #2268

- Reduce number of distributed tests. by @justinxzhao in #2270

- [WIP] Adds inference_utils.py by @geoffreyangus in #2213

- Run github checks for pushes and merges to *-stable. by @justinxzhao in #2266

- Add ludwig logo and version to CLI help text. by @justinxzhao in #2258

- Add hyperopt_statistics.json constant by @hungcs in #2276

- fix: Make BaseTrainerConfig an abstract class by @ksbrar in #2273

- [Torchscript] Adds --device argument to export_torchscript CLI command by @geoffreyangus in #2275

- Use pytest tmpdir fixture wherever temporary directories are used in tests. by @justinxzhao in #2274

- adding configs used in benchmarking by @abidwael in #2263

- Fixes #2279 by @noahlh in #2284

- adding hardware usage and software packages tracker by @abidwael in #2195

- benchmarking utils by @abidwael in #2260

- dataclasses for summarizing benchmarking results by @abidwael in #2261

- Benchmarking core by @abidwael in #2262

- Fixed default eval_batch_size when setting batch_size=auto by @tgaddair in #2286

- Remove obsolete postprocess_inference_graph function. by @justinxzhao in #2267

- [Torchscript] Adds BERT tokenizer + partial HF tokenizer support by @geoffreyangus in #2272

- Support passing ground_truth as df for visualizations by @hungcs in #2281

- catching urllib3 exception by @abidwael in #2294

- Run pytest workflow on release branches. by @justinxzhao in #2291

- Save checkpoint if train_steps is smaller than batcher's steps_per_epoch by @dantreiman in #2298

- Fix typo in amazon review datasets: s/review_tile/review_title by @dantreiman in #2300

- Refactor non-distributed automl utils into a separate directory. by @justinxzhao in #2296

- Don't skip normalization in TabNet during inference on a single row. by @dantreiman in #2299

- Fix error in postproc_predictions calculation in model.evaluate() by @arnavgarg1 in #2304

- Test for parameter updates in Ludwig components by @jimthompson5802 in #2194

- [pre-commit.ci] pre-commit suggestions by @pre-commit-ci in #2311

- Use warnings to suppress repeated logs for failed image reads by @arnavgarg1 in #2312

- Use ray dataset and drop type casting in binary_feature prediction post processing for speedup by @magdyksaleh in #2293

- Add size_bytes to DatasetInfo and DataSource by @jeffreyftang in #2306

- Fixes TensorDtype TypeError in Ray nightly by @geoffreyangus in #2320

- Add configuration section for global feature parameters by @arnavgarg1 in #2208

- Ensures unit tests are deleting artifacts during teardown by @geoffreyangus in #2310

- Fixes unit test that had empty Dask partitions after splitting by @geoffreyangus in #2313

- Serve json numpy encoding by @jeffkinnison in #2316

- fix: Mlflow config being injected in hyperopt config by @hungcs in #2321

- Update tests that use preprocessing to match new defaults config structure by @arn...