Datastreaming

The datastreaming system is being built as part of in-kind work for ESS. It will be the system that ESS uses to take data and write it to file - basically their equivalent of the ICP. The system may also replace the ICP at ISIS in the future.

In general the system works by passing both neutron and SE (sample environment) data into Kafka and having clients that either view the data live (like Mantid) or write it to file. Additional information can be found here and here.

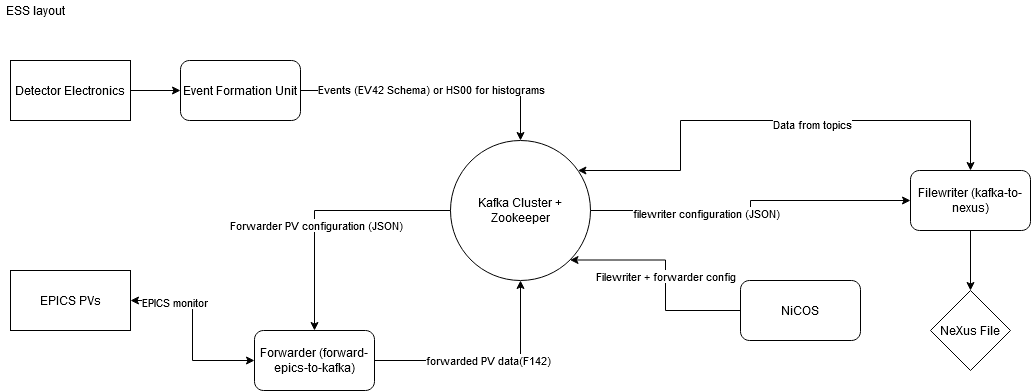

The proposed datastreaming layout looks something like this, not including the Mantid steps or anything before event data is collected:

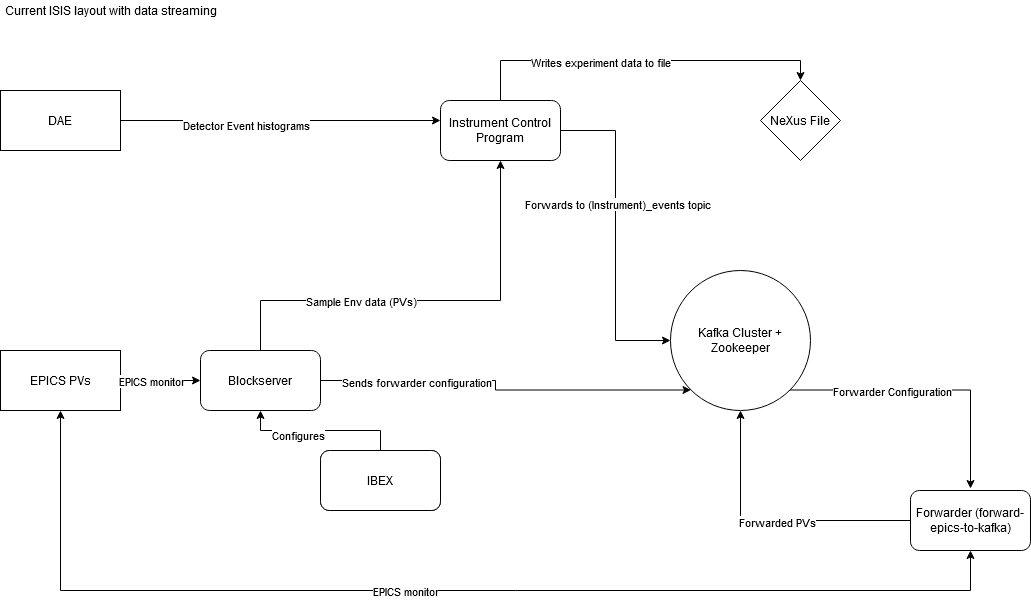

Part of our in-kind contribution to datastreaming is to test the system in production at ISIS. Currently it is being tested in the following way, with explanations of each component below:

There are two Kafka clusters: production (livedata.isis.cclrc.ac.uk:9092) and development (tenten.isis.cclrc.ac.uk:9092 or sakura.isis.cclrc.ac.uk:9092 or hinata.isis.cclrc.ac.uk:9092). The development cluster is set up to auto-create topics, so when new developer machines are brought up all the required topics are created automatically. The production cluster does not auto-create topics. This means that when a new real instrument comes online, the corresponding topics must be created on the production cluster, which is done as part of the install script. Credentials for both clusters can be found in the keeper shared folder.
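As a rough illustration of the difference between the two clusters, the sketch below builds the comma-separated broker string that Kafka clients conventionally take as `bootstrap.servers`, and works out which topics would need creating by hand. It assumes the three development hosts are brokers of the same cluster. The helper names `bootstrap_servers` and `topics_to_create` and the topic names in the usage example are hypothetical and are not taken from the install script.

```python
# Broker addresses as listed above. Treating the three development hosts
# as one bootstrap list is an assumption of this sketch.
KAFKA_CLUSTERS = {
    "production": ["livedata.isis.cclrc.ac.uk:9092"],
    "development": [
        "tenten.isis.cclrc.ac.uk:9092",
        "sakura.isis.cclrc.ac.uk:9092",
        "hinata.isis.cclrc.ac.uk:9092",
    ],
}

# Only the development cluster is set up to auto-create topics.
AUTO_CREATES_TOPICS = {"production": False, "development": True}


def bootstrap_servers(cluster):
    """Return the comma-separated broker list for the named cluster."""
    return ",".join(KAFKA_CLUSTERS[cluster])


def topics_to_create(cluster, required, existing):
    """Return the topics that must be created explicitly before use.

    On an auto-creating cluster nothing needs creating by hand; otherwise
    every required topic that does not already exist is returned, sorted.
    """
    if AUTO_CREATES_TOPICS[cluster]:
        return []
    return sorted(set(required) - set(existing))


if __name__ == "__main__":
    # Hypothetical topic names for an instrument called "INST".
    required = ["INST_events", "INST_runInfo"]
    print(bootstrap_servers("development"))
    print(topics_to_create("production", required, existing=["INST_events"]))
```

On the production cluster the second call reports the topics that the install script would still have to create. On the development cluster it always returns an empty list.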

A Grafana dashboard for the production cluster can be found at madara.isis.cclrc.ac.uk:3000. This shows the topic data rate and other useful information. Admin credentials can also be found in the sharepoint.

Deployment uses Ansible playbooks. The playbooks and instructions for using them can be found here.

The ICP on any instrument that is running in full event mode and with a DAE3 is streaming neutron events into Kafka.

See Forwarding Sample Environment

See File writing

Currently system tests are being run to confirm that the start/stop run and event data messages are being sent into Kafka and that a NeXus file is being written with these events. The Kafka cluster and filewriter are run in Docker containers for these tests, so the tests must be run on a Windows 10 machine. To run them you will need to install Docker for Windows and add yourself to the `docker-users` group.

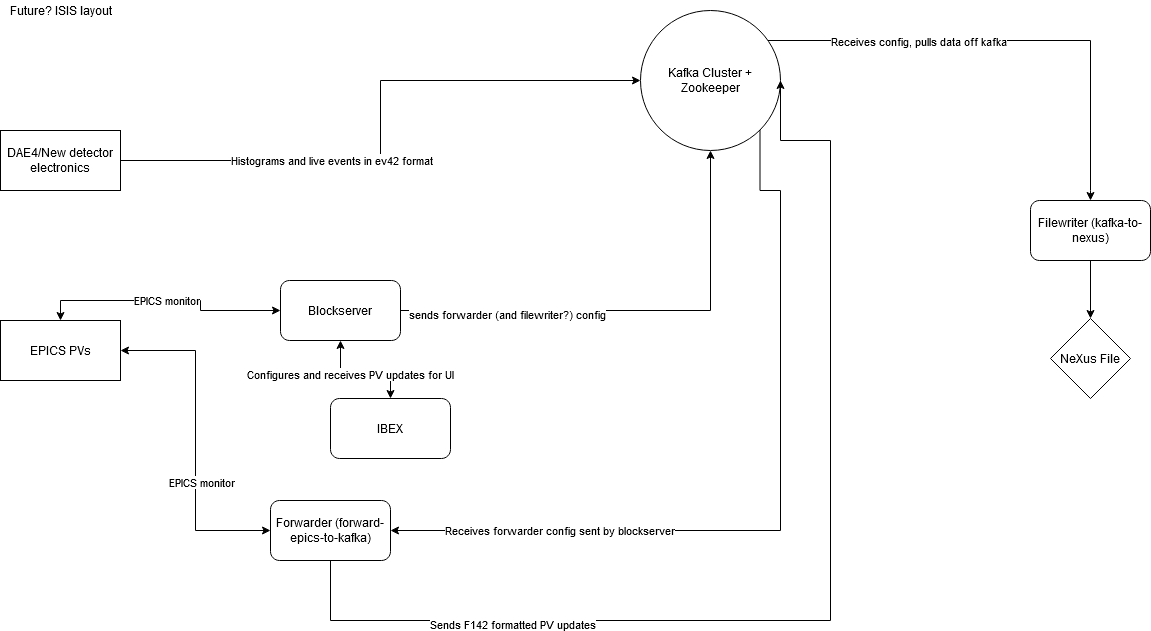

After the in-kind work finishes and during the handover, there are some proposed changes that affect the layout and integration of data streaming at ISIS. This diagram is subject to change, but shows a brief overview of what the future system might look like: